Daehee Lee

Multi-agent Coordination via Flow Matching

Nov 07, 2025

Abstract: This work presents MAC-Flow, a simple yet expressive framework for multi-agent coordination. We argue that effective coordination has two requirements: (i) a rich representation of the diverse joint behaviors present in offline data and (ii) the ability to act efficiently in real time. However, prior approaches often sacrifice one for the other: denoising diffusion-based methods capture complex coordination but are computationally slow, whereas Gaussian policy-based methods are fast but brittle in handling multi-agent interactions. MAC-Flow addresses this trade-off by first learning a flow-based representation of joint behaviors and then distilling it into decentralized one-step policies that preserve coordination while enabling fast execution. Across four benchmarks comprising 12 environments and 34 datasets, MAC-Flow alleviates the trade-off between performance and computational cost, achieving about 14.5× faster inference than diffusion-based offline multi-agent reinforcement learning (MARL) methods while maintaining good performance. At the same time, its inference speed is similar to that of prior Gaussian policy-based offline MARL methods.
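To make the flow-then-distill idea concrete, here is a minimal NumPy sketch under heavy assumptions: an analytic straight-path velocity field stands in for MAC-Flow's learned network, the joint action is a 2-D vector with hypothetical parameters MU and SIGMA, observations and the decentralized per-agent factorization are omitted, and an affine least-squares fit stands in for the actual distillation objective. It only illustrates why a distilled student needs one evaluation where the teacher needs several Euler steps.

```python
import numpy as np

# Hypothetical toy teacher (not MAC-Flow's learned model): noise x0 ~ N(0, I) is paired
# with target x1 = MU + SIGMA * x0, and the flow follows the straight path
# x_t = (1 - t) * x0 + t * x1, whose velocity is x1 - x0.
MU = np.array([1.0, -2.0])     # hypothetical mean of the joint action
SIGMA = np.array([0.5, 0.2])   # hypothetical per-dimension scale


def teacher_velocity(x, t):
    """Velocity of the straight path, written as a function of the current point x_t."""
    scale = 1.0 - t + t * SIGMA          # x_t = x0 * scale + t * MU
    x0 = (x - t * MU) / scale            # recover the starting noise
    return MU + (SIGMA - 1.0) * x0       # equals x1 - x0


def sample_teacher(x0, num_steps=10):
    """Multi-step Euler integration of the flow from t = 0 to t = 1."""
    x = x0.copy()
    dt = 1.0 / num_steps
    for k in range(num_steps):
        x = x + dt * teacher_velocity(x, k * dt)
    return x


def distill_one_step(noise, teacher_actions):
    """Fit a per-dimension affine one-step student a * x0 + b by least squares."""
    coeffs = []
    for d in range(noise.shape[1]):
        design = np.stack([noise[:, d], np.ones(len(noise))], axis=1)
        sol, *_ = np.linalg.lstsq(design, teacher_actions[:, d], rcond=None)
        coeffs.append(sol)
    return np.array(coeffs)  # shape (dims, 2); columns are (a, b)


rng = np.random.default_rng(0)
noise = rng.standard_normal((256, 2))
teacher_actions = sample_teacher(noise)                    # 10 velocity calls per sample
student = distill_one_step(noise, teacher_actions)
one_step_actions = noise * student[:, 0] + student[:, 1]   # 1 evaluation per sample
```

The student reproduces the teacher's samples here only because this toy teacher is an affine map of its noise; a flow over multimodal joint behaviors would need a far more expressive student.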

Incremental Learning of Retrievable Skills For Efficient Continual Task Adaptation

Oct 30, 2024

Abstract: Continual Imitation Learning (CiL) involves extracting and accumulating task knowledge from demonstrations across multiple stages and tasks to obtain a multi-task policy. With recent advances in foundation models, there has been growing interest in adapter-based CiL approaches, in which parameter-efficient adapters are established for newly demonstrated tasks. While these approaches isolate parameters for specific tasks and tend to mitigate catastrophic forgetting, they limit knowledge sharing among different demonstrations. We introduce IsCiL, an adapter-based CiL framework that addresses this limitation by incrementally learning shareable skills from different demonstrations, thus enabling sample-efficient task adaptation using those skills, particularly in non-stationary CiL environments. In IsCiL, demonstrations are mapped into a state embedding space, where appropriate skills can be retrieved for input states through a prototype-based memory. These retrievable skills are incrementally learned on their corresponding adapters. Our CiL experiments with complex tasks in Franka-Kitchen and Meta-World demonstrate the robust performance of IsCiL in both task adaptation and sample efficiency. We also show a simple extension of IsCiL to task unlearning scenarios.
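A prototype-based skill memory of the kind described above can be pictured as a nearest-neighbor lookup. This is an illustrative assumption rather than IsCiL's implementation: the class name, the adapter labels, and the use of chunk means as prototypes (in place of whatever clustering the paper uses) are hypothetical, and state embeddings are supplied directly as small vectors.

```python
import numpy as np


class PrototypeSkillMemory:
    """Hypothetical prototype memory mapping state embeddings to adapter ids."""

    def __init__(self):
        self.prototypes = []   # one vector per stored prototype
        self.adapter_ids = []  # adapter that each prototype points to

    def add_skill(self, state_embeddings, adapter_id, num_prototypes=1):
        # Incremental: new prototypes are appended; existing ones are left untouched.
        # Each prototype is the mean of a contiguous chunk of the demonstration's
        # embeddings (a stand-in for a real clustering step).
        data = np.asarray(state_embeddings, dtype=float)
        for chunk in np.array_split(data, num_prototypes):
            self.prototypes.append(chunk.mean(axis=0))
            self.adapter_ids.append(adapter_id)

    def retrieve(self, state_embedding):
        # Return the adapter whose prototype is nearest (Euclidean) to the query.
        protos = np.stack(self.prototypes)
        query = np.asarray(state_embedding, dtype=float)
        dists = np.linalg.norm(protos - query, axis=1)
        return self.adapter_ids[int(np.argmin(dists))]


# Usage with made-up 2-D embeddings and hypothetical adapter names.
memory = PrototypeSkillMemory()
memory.add_skill([[0.0, 0.0], [0.2, 0.1]], "adapter-open-drawer")
memory.add_skill([[5.0, 5.0], [5.1, 4.9]], "adapter-turn-on-light")
print(memory.retrieve([0.1, 0.0]))
```

Keeping skills behind a lookup like this is what lets a new demonstration reuse an existing adapter when its states land near a stored prototype, instead of always allocating a fresh one.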

Cross-Detection and Dual-Side Monitoring Schemes for FPGA-Based High-Accuracy and High-Precision Time-to-Digital Converters

Oct 12, 2024

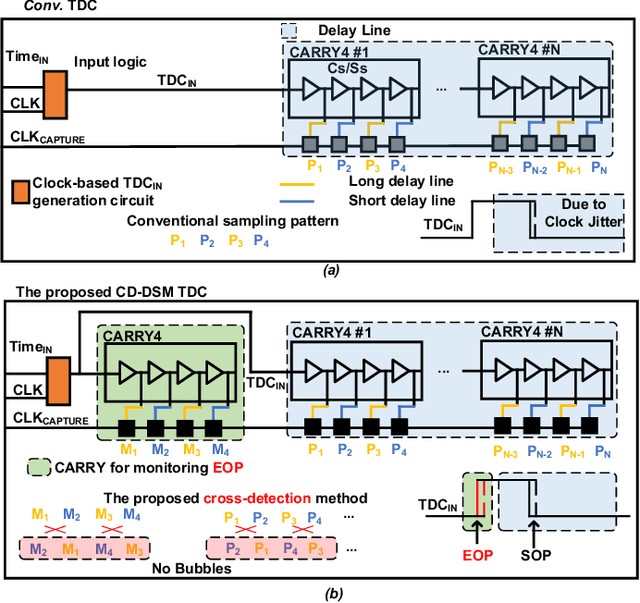

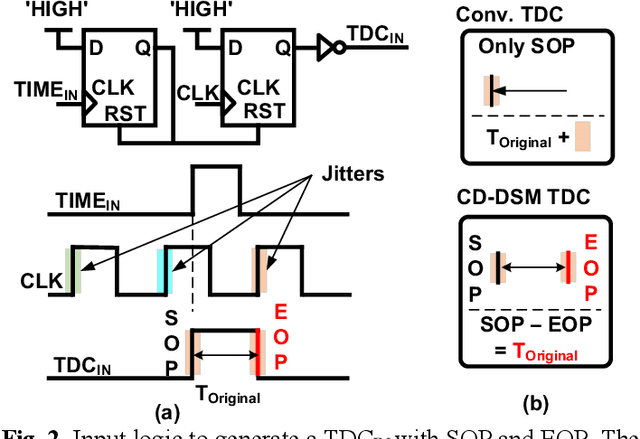

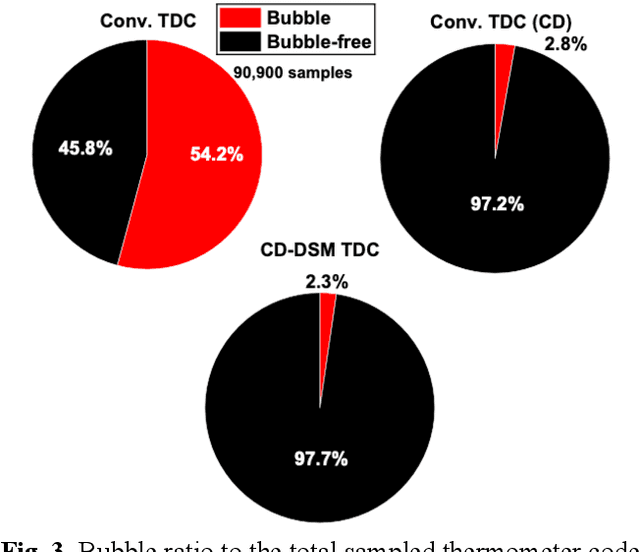

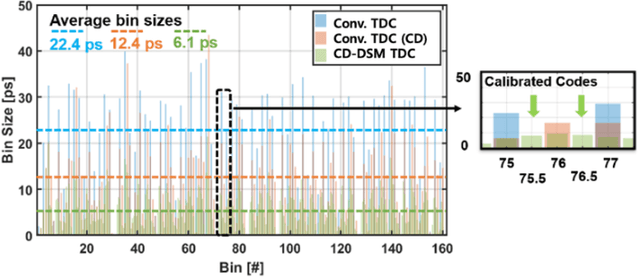

Abstract: This study presents a novel field-programmable gate array (FPGA)-based time-to-digital converter (TDC) design for applications requiring high timing resolution, built on two new techniques. First, a cross-detection (CD) method alters the conventional sampling pattern to minimize bubbles, which cause inaccuracy in a TDC's timing measurement from thermometer codes, thereby halving the average bin size. The second technique employs dual-side monitoring (DSM) of thermometer codes, covering both the end of propagation (EOP) and the start of propagation (SOP). Unlike conventional TDCs, which focus solely on SOP thermometer codes, this technique uses EOP to calibrate SOP, simultaneously enhancing time resolution and the TDC's stability against changes in temperature and location. The proposed DSM scheme requires only one additional CARRY4 primitive to capture the EOP thermometer code, making it resource-efficient. The CD-DSM TDC has been implemented on a Xilinx Virtex-7 FPGA (28-nm process), with an average bin size of 6.1 ps and a root mean square (RMS) of 3.8 ps. Compared to conventional TDCs, the CD-DSM TDC offers superior linearity. Using the CD-DSM TDCs, ultra-high coincidence timing resolution (CTR) was successfully measured between two Cerenkov radiator integrated microchannel plate photomultiplier tubes (CRI-MCP-PMTs) for sub-100 ps timing measurements. A comparison with cutting-edge TDCs further highlights the superior performance of the CD-DSM TDCs.
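As background for the bubble problem, the sketch below decodes a sampled thermometer code in two generic ways: locating the first 1-to-0 transition, and counting ones, a common bubble-tolerant alternative. It does not model the paper's cross-detection sampling pattern or its EOP-based calibration; the example codes are made up, and treating every bin as exactly 6.1 ps (the reported average) is a simplifying assumption.

```python
def first_transition(code):
    """Position of the first 0, i.e. the length of the leading run of ones."""
    for i, bit in enumerate(code):
        if bit == 0:
            return i
    return len(code)


def ones_count(code):
    """Bubble-tolerant estimate: the total number of ones in the code."""
    return sum(code)


def fine_time_ps(code, bin_ps):
    """Fine time from a thermometer code, assuming every bin is bin_ps wide."""
    return ones_count(code) * bin_ps


# Made-up 8-tap samples: 1 means the start signal has passed that delay element.
clean = [1, 1, 1, 1, 0, 0, 0, 0]   # edge after tap 4, no bubbles
bubbly = [1, 1, 0, 1, 1, 0, 0, 0]  # edge after tap 5, with a bubble at index 2

print(first_transition(clean), ones_count(clean))
print(first_transition(bubbly), ones_count(bubbly))
print(fine_time_ps(clean, 6.1))
```

If the edge in `bubbly` really reached tap 5, the transition search underestimates it by three bins and the ones count by one bin; errors of this kind are what bubble-suppression schemes aim to remove.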

One-shot Imitation in a Non-Stationary Environment via Multi-Modal Skill

Feb 13, 2024

Abstract: One-shot imitation aims to learn a new task from a single demonstration, yet applying it to complex tasks with the high domain diversity inherent in a non-stationary environment is challenging. To tackle this problem, we explore the compositionality of complex tasks and present a novel skill-based imitation learning framework that enables one-shot imitation and zero-shot adaptation: from a single demonstration of a complex unseen task, a semantic skill sequence is inferred, and each skill in the sequence is then converted into an action sequence optimized for hidden environmental dynamics that can vary over time. Specifically, we leverage a vision-language model to learn a semantic skill set from offline video datasets, where each skill is represented in the vision-language embedding space, and adapt meta-learning with dynamics inference to enable zero-shot skill adaptation. We evaluate our framework on various one-shot imitation scenarios for extended multi-stage Meta-World tasks, showing its superiority over other baselines in learning complex tasks, generalizing to dynamics changes, and extending to different demonstration conditions and modalities.
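The first step above, inferring a semantic skill sequence from a single demonstration, can be pictured as matching each demonstration segment to its most similar skill in a shared embedding space. In this sketch the skill names, the 3-D embeddings, and the pre-segmented demonstration are hypothetical stand-ins for vision-language embeddings, cosine similarity is an assumed matching rule, and consecutive repeats are merged into one skill. The dynamics-aware conversion of skills into actions is not shown.

```python
import numpy as np


def infer_skill_sequence(segment_embeddings, skill_library):
    """Map each segment to its most similar skill and merge consecutive repeats."""
    names = list(skill_library)
    skills = np.stack([skill_library[n] for n in names]).astype(float)
    skills /= np.linalg.norm(skills, axis=1, keepdims=True)  # unit-length skill vectors
    sequence = []
    for seg in segment_embeddings:
        seg = np.asarray(seg, dtype=float)
        sims = skills @ (seg / np.linalg.norm(seg))           # cosine similarities
        best = names[int(np.argmax(sims))]
        if not sequence or sequence[-1] != best:              # skip consecutive duplicates
            sequence.append(best)
    return sequence


# Hypothetical skill embeddings and demonstration segments.
library = {
    "open-drawer": [1.0, 0.0, 0.0],
    "push-button": [0.0, 1.0, 0.0],
    "close-window": [0.0, 0.0, 1.0],
}
segments = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.1, 0.9, 0.0], [0.0, 0.2, 0.95]]
print(infer_skill_sequence(segments, library))
```

Working in a shared embedding space is what allows an unseen task to be expressed as a new ordering of known skills rather than learned from scratch.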