Curtis Chou

Professional Basketball Player Behavior Synthesis via Planning with Diffusion

Jun 10, 2023

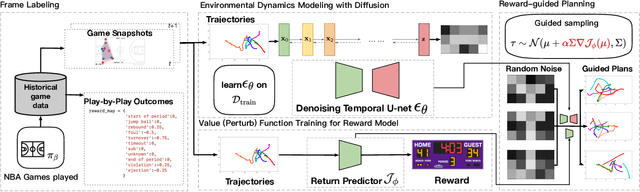

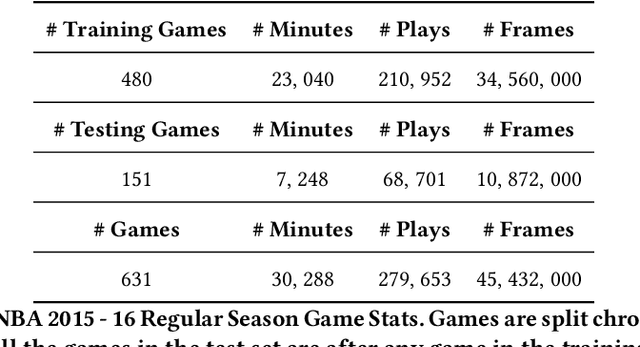

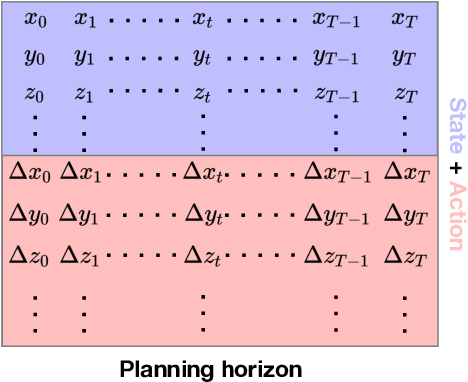

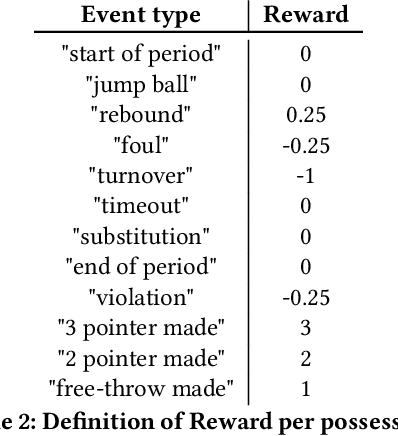

Abstract: Dynamic planning in multi-agent systems has been explored to improve decision-making in various domains. Professional basketball serves as a compelling example of a dynamic spatio-temporal game, encompassing both concealed strategic policies and decision-making. However, processing the diverse on-court signals and navigating the vast space of potential actions and outcomes make it difficult for existing approaches to swiftly identify optimal strategies in response to evolving circumstances. In this study, we first formulate the sequential decision-making process as a conditional trajectory generation process. We further introduce PLAYBEST (PLAYer BEhavior SynThesis), a method for enhancing player decision-making. We extend a state-of-the-art generative model, the diffusion probabilistic model, to learn challenging multi-agent environmental dynamics from historical National Basketball Association (NBA) player motion tracking data. To incorporate data-driven strategies, an auxiliary value function is trained on play-by-play data, with the corresponding rewards acting as plan guidance. To accomplish reward-guided trajectory generation, we condition the diffusion model on the value function through classifier-guided sampling. We validate the effectiveness of PLAYBEST via comprehensive simulation studies on real-world data, contrasting the generated trajectories and play strategies with those employed by professional basketball teams. Our results reveal that the model excels at generating high-quality basketball trajectories that yield efficient plays, surpassing conventional planning techniques in terms of adaptability, flexibility, and overall performance. Moreover, the synthesized play strategies align closely with professional tactics, highlighting the model's capacity to capture the intricate dynamics of basketball games.
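The reward-guided sampling idea described in the abstract can be illustrated with a minimal toy sketch. This is not the PLAYBEST implementation: the denoiser `toy_denoiser_mean`, the quadratic value function behind `value_grad`, and all parameter names are hypothetical stand-ins chosen so the example runs with the Python standard library alone. It only shows the commonly used form of classifier-style guidance, in which the mean of each reverse diffusion step is shifted along the gradient of a value function, scaled by the step variance.

```python
import random

def toy_denoiser_mean(x, t):
    """Hypothetical stand-in for a learned reverse-process mean.
    A real model would be a neural network conditioned on step t;
    here t is unused and each value is simply shrunk toward zero."""
    return [0.9 * xi for xi in x]

def value_grad(x, target):
    """Gradient of a toy value function J(x) = -sum((xi - target)^2).
    In the paper's setting J would be learned from play-by-play rewards."""
    return [-2.0 * (xi - target) for xi in x]

def guided_sample(horizon, steps, guide_scale, target, sigma=0.1, seed=0):
    """Run a toy reverse diffusion with value-gradient guidance.
    guide_scale = 0 recovers unguided sampling."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise over a 1-D "trajectory".
    x = [rng.gauss(0.0, 1.0) for _ in range(horizon)]
    for t in reversed(range(steps)):
        mu = toy_denoiser_mean(x, t)
        grad = value_grad(mu, target)
        # Guidance: shift the mean by scale * variance * grad J(mu).
        mu = [m + guide_scale * sigma ** 2 * g for m, g in zip(mu, grad)]
        # No noise is added on the final step.
        noise = sigma if t > 0 else 0.0
        x = [m + noise * rng.gauss(0.0, 1.0) for m in mu]
    return x

def mean(values):
    return sum(values) / len(values)

unguided = guided_sample(horizon=8, steps=50, guide_scale=0.0, target=1.0)
guided = guided_sample(horizon=8, steps=50, guide_scale=10.0, target=1.0)
print("unguided mean:", round(mean(unguided), 3))
print("guided mean:  ", round(mean(guided), 3))
```

In this toy setup the guided samples settle noticeably closer to the high-value region around `target` than the unguided ones, which is the qualitative effect the value function is meant to have on generated trajectories.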
