Cristina Bustos

Experimenting with Affective Computing Models in Video Interviews with Spanish-speaking Older Adults

Jan 28, 2025

Abstract: Understanding emotional signals in older adults is crucial for designing virtual assistants that support their well-being. However, existing affective computing models often face significant limitations: (1) limited availability of datasets representing older adults, especially in non-English-speaking populations, and (2) poor generalization of models trained on younger or homogeneous demographics. To address these gaps, this study evaluates state-of-the-art affective computing models -- including facial expression recognition, text sentiment analysis, and smile detection -- using videos of older adults interacting with either a person or a virtual avatar. As part of this effort, we introduce a novel dataset featuring Spanish-speaking older adults engaged in human-to-human video interviews. Through three comprehensive analyses, we investigate (1) the alignment between human-annotated labels and automatic model outputs, (2) the relationships between model outputs across different modalities, and (3) individual variations in emotional signals. Using both the Wizard of Oz (WoZ) dataset and our newly collected dataset, we uncover limited agreement between human annotations and model predictions, weak consistency across modalities, and significant variability among individuals. These findings highlight the shortcomings of generalized emotion perception models and emphasize the need to incorporate personal variability and cultural nuances into future systems.
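
One way to quantify the alignment between human-annotated labels and model outputs is a chance-corrected agreement score. The abstract does not say which measure the study uses, so the sketch below assumes Cohen's kappa as an illustration; the function name and the toy label lists are hypothetical and are not data from the paper.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two equal-length label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(labels_a)
    # Fraction of items on which the two sources agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each source's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both sources always emit the same single label
    return (observed - expected) / (1.0 - expected)


# Hypothetical toy labels (not from the study): human annotation vs. model output.
human = ["pos", "neu", "neg", "pos", "neu", "pos"]
model = ["pos", "pos", "neg", "neu", "neu", "pos"]
kappa = cohen_kappa(human, model)
```

A kappa near 0 means agreement is no better than chance, while a value near 1 means near-perfect agreement.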

Analyzing the contribution of different passively collected data to predict Stress and Depression

Oct 20, 2023

Abstract: The possibility of recognizing diverse aspects of human behavior and environmental context from passively captured data motivates its use for mental health assessment. In this paper, we analyze the contribution of different passively collected sensor data types (WiFi, GPS, Social interaction, Phone Log, Physical Activity, Audio, and Academic features) to predict daily self-reported stress and PHQ-9 depression scores. First, we compute 125 mid-level features from the original raw data. These 125 features include groups of features from the different sensor data types. Then, we evaluate the contribution of each feature type by comparing the performance of Neural Network models trained with all features against Neural Network models trained with specific feature groups. Our results show that WiFi features (which encode mobility patterns) and Phone Log features (which encode information correlated with sleep patterns) provide significant information for stress and depression prediction.
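
The comparison described above (a model trained on all features versus models trained on one feature group at a time) can be sketched as follows. This is a minimal illustration under stated assumptions: a nearest-centroid classifier stands in for the paper's Neural Network models, the group names `informative` and `noise` are hypothetical, and the data is synthetic, so the scores say nothing about the paper's results.

```python
def select_columns(rows, columns):
    """Keep only the given column indices from each feature row."""
    return [[row[c] for c in columns] for row in rows]


def centroid_accuracy(train_x, train_y, test_x, test_y):
    """Stand-in model (NOT the paper's network): nearest-centroid accuracy."""
    sums, counts = {}, {}
    for x, y in zip(train_x, train_y):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    centroids = {y: [v / counts[y] for v in s] for y, s in sums.items()}

    def predict(x):
        # Pick the class whose centroid is closest in squared distance.
        return min(
            centroids,
            key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])),
        )

    hits = sum(predict(x) == y for x, y in zip(test_x, test_y))
    return hits / len(test_y)


def compare_feature_groups(groups, train_x, train_y, test_x, test_y):
    """Score a model on all features, then on each feature group alone."""
    all_cols = sorted(c for cols in groups.values() for c in cols)
    scores = {}
    for name, cols in [("all", all_cols)] + list(groups.items()):
        scores[name] = centroid_accuracy(
            select_columns(train_x, cols), train_y,
            select_columns(test_x, cols), test_y,
        )
    return scores


# Synthetic toy data: column 0 separates the classes, column 1 does not.
groups = {"informative": [0], "noise": [1]}
train_x = [[0.1, 0.5], [0.2, 0.4], [0.9, 0.5], [0.8, 0.4]]
train_y = [0, 0, 1, 1]
test_x = [[0.15, 0.45], [0.85, 0.45]]
test_y = [0, 1]
scores = compare_feature_groups(groups, train_x, train_y, test_x, test_y)
```

A group whose single-group score stays close to the all-features score carries most of the predictive signal; a group that scores near chance contributes little on its own.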

On the use of Vision-Language models for Visual Sentiment Analysis: a study on CLIP

Oct 18, 2023

Abstract: This work presents a study on how to exploit the CLIP embedding space to perform Visual Sentiment Analysis. We experiment with two architectures built on top of the CLIP embedding space, which we denote by CLIP-E. We train the CLIP-E models with WEBEmo, the largest publicly available and manually labeled benchmark for Visual Sentiment Analysis, and perform two sets of experiments. First, we test on WEBEmo and compare the CLIP-E architectures with state-of-the-art (SOTA) models and with CLIP Zero-Shot. Second, we perform cross-dataset evaluation, and test the CLIP-E architectures trained with WEBEmo on other Visual Sentiment Analysis benchmarks. Our results show that the CLIP-E approaches outperform SOTA models in WEBEmo fine-grained categorization, and they also generalize better when tested on datasets that have not been seen during training. Interestingly, we observed that for the FI dataset, CLIP Zero-Shot produces better accuracies than SOTA models and CLIP-E trained on WEBEmo. These results motivate several questions that we discuss in this paper, such as how we should design new benchmarks and evaluate Visual Sentiment Analysis, and whether we should keep designing tailored Deep Learning models for Visual Sentiment Analysis or focus our efforts on better using the knowledge encoded in large vision-language models such as CLIP for this task.
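
For readers unfamiliar with the zero-shot baseline mentioned above, the usual idea is to compare an image embedding against one text embedding per class and pick the most similar class. The sketch below shows only that comparison step. The three-dimensional vectors are hypothetical stand-ins for embeddings that would normally come from CLIP's image and text encoders, and the `temperature` value is an assumption rather than a setting reported in the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_predict(image_embedding, text_embeddings, temperature=100.0):
    """Return the best-matching label and a softmax over scaled similarities."""
    sims = {
        label: cosine(image_embedding, emb)
        for label, emb in text_embeddings.items()
    }
    top = max(sims.values())
    # Subtract the maximum before exponentiating for numerical stability.
    exps = {label: math.exp(temperature * (s - top)) for label, s in sims.items()}
    total = sum(exps.values())
    probs = {label: e / total for label, e in exps.items()}
    return max(probs, key=probs.get), probs


# Hypothetical toy embeddings; real ones would be encoder outputs.
text_embeddings = {
    "positive": [1.0, 0.0, 0.0],
    "negative": [0.0, 1.0, 0.0],
}
image_embedding = [0.9, 0.1, 0.2]
label, probs = zero_shot_predict(image_embedding, text_embeddings)
```

No sentiment-specific training is involved in this step, which is why it serves as a reference point for the trained CLIP-E architectures.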

Predicting the impact of urban change in pedestrian and road safety

Feb 03, 2022

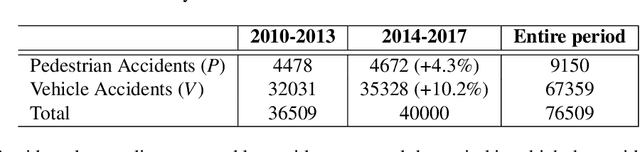

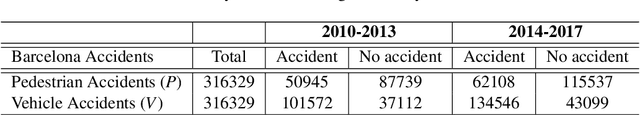

Abstract: Increased interaction between and among pedestrians and vehicles in the crowded urban environments of today gives rise to a negative side-effect: a growth in traffic accidents, with pedestrians being the most vulnerable elements. Recent work has shown that Convolutional Neural Networks are able to accurately predict accident rates exploiting Street View imagery along urban roads. The promising results point to the plausibility of aided design of safe urban landscapes, for both pedestrians and vehicles. In this paper, by considering historical accident data and Street View images, we detail how to automatically predict the impact (increase or decrease) of urban interventions on accident incidence. The results are positive, yielding accuracies ranging from 60% to 80%. We additionally provide an interpretability analysis to unveil which specific categories of urban features impact accident rates positively or negatively. Considering the transportation network substrates (sidewalk and road networks) and their demand, we integrate these results into a complex network framework, to estimate the effective impact of urban change on the safety of pedestrians and vehicles. Results show that public authorities may leverage machine learning tools to prioritize targeted interventions, since our analysis shows that limited improvement is obtained with current tools. Further, our findings have a wider range of application, such as the design of safe urban routes for pedestrians or the field of driver-assistance technologies.

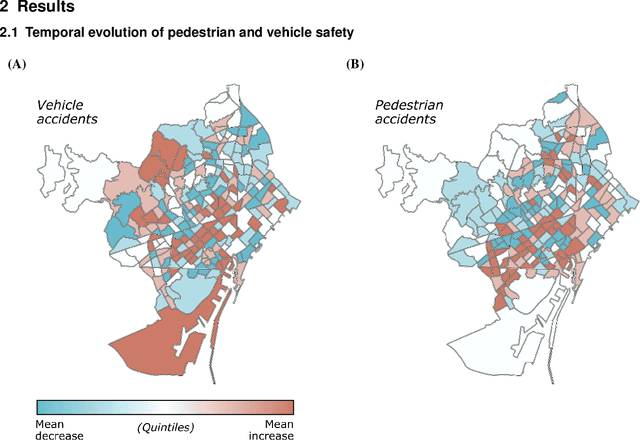

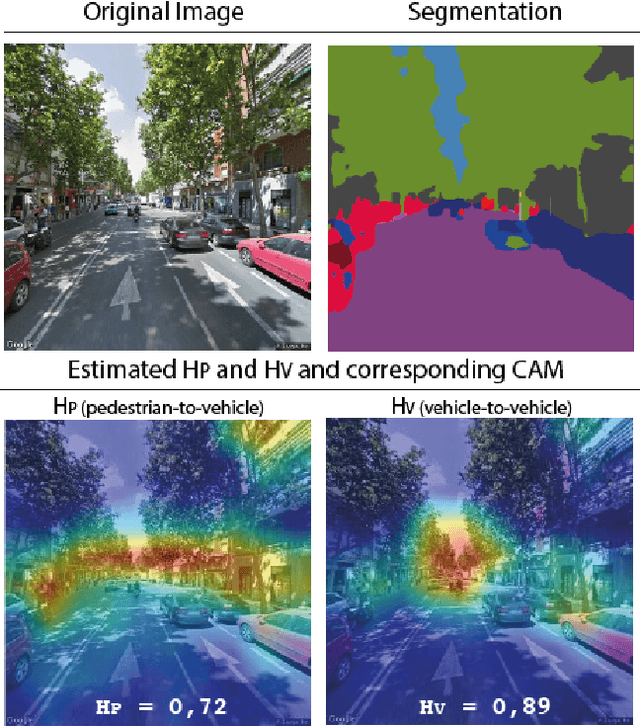

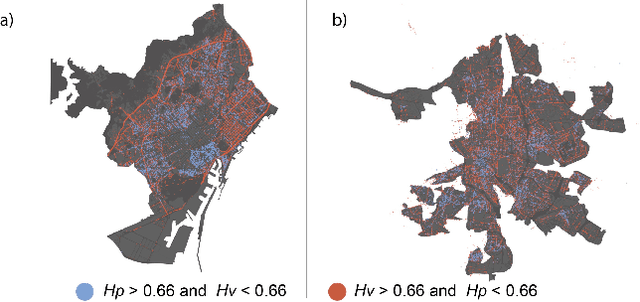

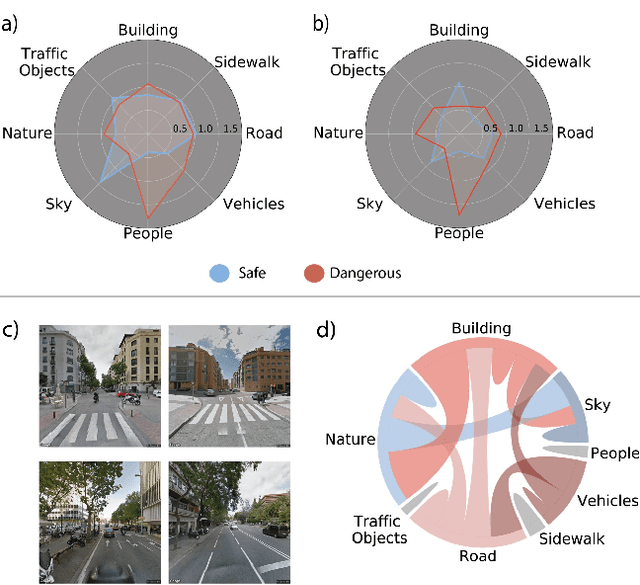

Explainable, automated urban interventions to improve pedestrian and vehicle safety

Nov 08, 2021

Abstract: At the moment, urban mobility research and governmental initiatives are mostly focused on motor-related issues, e.g. the problems of congestion and pollution. And yet, we cannot disregard the most vulnerable elements in the urban landscape: pedestrians, exposed to higher risks than other road users. Indeed, safe, accessible, and sustainable transport systems in cities are a core target of the UN's 2030 Agenda. Thus, there is an opportunity to apply advanced computational tools to the problem of traffic safety, especially with regard to pedestrians, who have often been overlooked in the past. This paper combines public data sources, large-scale street imagery and computer vision techniques to approach pedestrian and vehicle safety with an automated, relatively simple, and universally applicable data-processing scheme. The steps involved in this pipeline include the adaptation and training of a Residual Convolutional Neural Network to determine a hazard index for each given urban scene, as well as an interpretability analysis based on image segmentation and class activation mapping on those same images. Combined, the outcome of this computational approach is a fine-grained map of hazard levels across a city, and a heuristic to identify interventions that might simultaneously improve pedestrian and vehicle safety. The proposed framework should be taken as a complement to the work of urban planners and public authorities.
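
As background for the interpretability step, the sketch below illustrates class activation mapping in its original, simplest form: the map for a class is the weighted sum of the final convolutional feature maps, using that class's weights from the classification layer. The abstract does not state which variant the paper uses, so this is an assumption; the function name, feature maps, and weights are hypothetical toy values.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps (each H x W) for one target class."""
    if len(feature_maps) != len(class_weights):
        raise ValueError("need one weight per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for i in range(height):
            for j in range(width):
                # Each location accumulates its activation times the class weight.
                cam[i][j] += weight * fmap[i][j]
    return cam


# Hypothetical toy input: two 2x2 feature maps and their weights for one class.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [0.5, -1.0]
cam = class_activation_map(feature_maps, class_weights)
```

Locations with large positive values push the prediction toward the class, and negative values push against it. Overlaying such a map on a segmentation of the same image is one way to relate those regions to categories of urban features.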

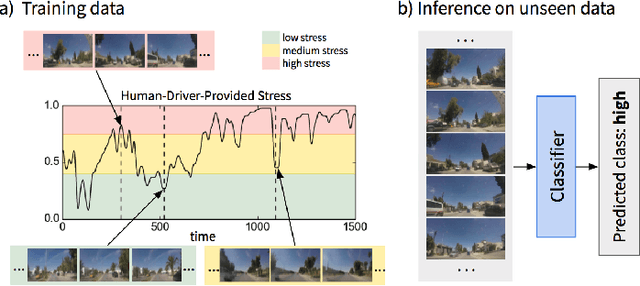

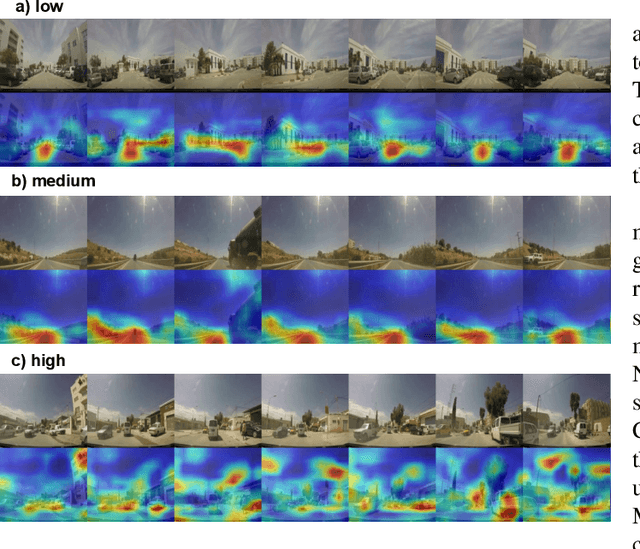

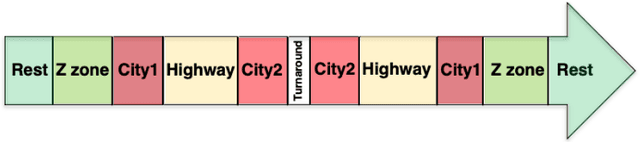

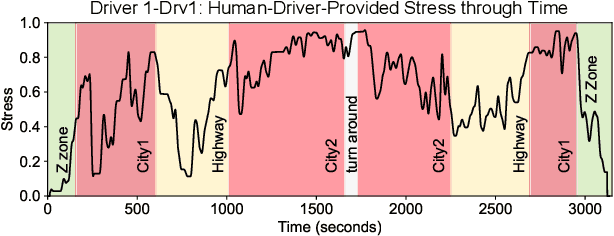

Predicting Driver Self-Reported Stress by Analyzing the Road Scene

Sep 27, 2021

Abstract: Several studies have shown the relevance of biosignals in driver stress recognition. In this work, we examine something important that has been less frequently explored: we develop methods to test whether the visual driving scene can be used to estimate a driver's subjective stress levels. For this purpose, we use the AffectiveROAD video recordings and their corresponding stress labels, a continuous human-driver-provided stress metric. We use the common class discretization for stress, dividing its continuous values into three classes: low, medium, and high. We design and evaluate three computer vision modeling approaches to classify the driver's stress levels: (1) object presence features, where features are computed using automatic scene segmentation; (2) end-to-end image classification; and (3) end-to-end video classification. All three approaches show promising results, suggesting that it is possible to approximate the drivers' subjective stress from the information found in the visual scene. We observe that the video classification, which processes the temporal information integrated with the visual information, obtains the highest accuracy of $0.72$, compared to a random baseline accuracy of $0.33$ when tested on a set of nine drivers.
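
The discretization step can be sketched as below. The abstract does not give the cut points, so this example assumes a stress value in the range 0 to 1 and equal-width thresholds at 1/3 and 2/3; the function name and both thresholds are hypothetical. The last line shows why a uniform random guess over three classes corresponds to the baseline accuracy of about 0.33.

```python
def discretize_stress(value, low_max=1 / 3, medium_max=2 / 3):
    """Map a continuous stress value in [0, 1] to low, medium, or high.

    The thresholds are assumptions for illustration, not the paper's values.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("stress value expected in [0, 1]")
    if value < low_max:
        return "low"
    if value < medium_max:
        return "medium"
    return "high"


classes = [discretize_stress(v) for v in (0.1, 0.5, 0.9)]

# Expected accuracy of guessing uniformly at random among three classes.
random_baseline = 1 / 3
```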
