Raquel Ros

HEXAR: a Hierarchical Explainability Architecture for Robots

Jan 06, 2026

Abstract:As robotic systems become increasingly complex, the need for explainable decision-making becomes critical. Existing explainability approaches in robotics typically either focus on individual modules, which can be difficult to query from the perspective of high-level behaviour, or employ monolithic approaches, which do not exploit the modularity of robotic architectures. We present HEXAR (Hierarchical EXplainability Architecture for Robots), a novel framework that provides a plug-in, hierarchical approach to generate explanations about robotic systems. HEXAR consists of specialised component explainers using diverse explanation techniques (e.g., LLM-based reasoning, causal models, feature importance) tailored to specific robot modules, orchestrated by an explainer selector that chooses the most appropriate one for a given query. We implement and evaluate HEXAR on a TIAGo robot performing assistive tasks in a home environment, comparing it against end-to-end and aggregated baseline approaches across 180 scenario-query variations. We observe that HEXAR significantly outperforms baselines in root cause identification, incorrect information exclusion, and runtime, offering a promising direction for transparent autonomous systems.

Experimenting with Affective Computing Models in Video Interviews with Spanish-speaking Older Adults

Jan 28, 2025

Abstract:Understanding emotional signals in older adults is crucial for designing virtual assistants that support their well-being. However, existing affective computing models often face significant limitations: (1) limited availability of datasets representing older adults, especially in non-English-speaking populations, and (2) poor generalization of models trained on younger or homogeneous demographics. To address these gaps, this study evaluates state-of-the-art affective computing models -- including facial expression recognition, text sentiment analysis, and smile detection -- using videos of older adults interacting with either a person or a virtual avatar. As part of this effort, we introduce a novel dataset featuring Spanish-speaking older adults engaged in human-to-human video interviews. Through three comprehensive analyses, we investigate (1) the alignment between human-annotated labels and automatic model outputs, (2) the relationships between model outputs across different modalities, and (3) individual variations in emotional signals. Using both the Wizard of Oz (WoZ) dataset and our newly collected dataset, we uncover limited agreement between human annotations and model predictions, weak consistency across modalities, and significant variability among individuals. These findings highlight the shortcomings of generalized emotion perception models and emphasize the need to incorporate personal variability and cultural nuances into future systems.

Analysing the behaviour of robot teams through relational sequential pattern mining

Oct 29, 2010

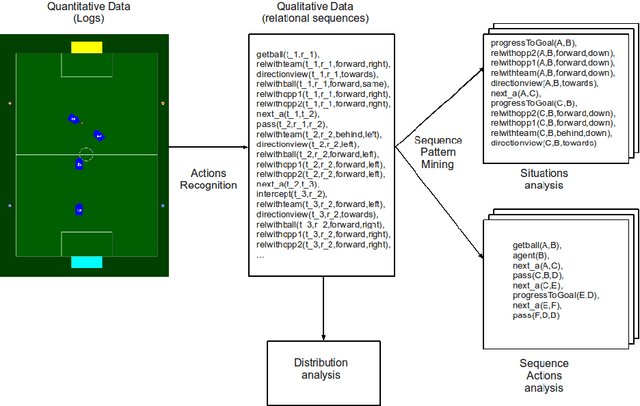

Abstract:This report outlines the use of a relational representation in a Multi-Agent domain to model the behaviour of the whole system. A desired property of such systems is the ability of the team members to work together cooperatively to achieve a common goal. The aim is to define a systematic method to verify effective collaboration among the members of a team and to compare different multi-agent behaviours. Using external observations of a Multi-Agent System to analyse, model, and recognize agent behaviour could be very useful for directing team actions. In particular, this report focuses on the challenge of autonomous unsupervised sequential learning of the team's behaviour from observations. Our approach learns a symbolic, relational representation that translates raw multi-agent, multi-variate observations of a dynamic, complex environment into a set of sequential behaviours characteristic of the team in question, expressed as sequences of first-order logic atoms. We propose to use a relational learning algorithm to mine meaningful frequent patterns among the relational sequences to characterise team behaviours. We compared the performance of two teams in the RoboCup four-legged league environment that take very different approaches to the game: one uses a Case-Based Reasoning approach, the other a purely reactive behaviour.
