Clemens Blumer

Priming Deep Neural Networks with Synthetic Faces for Enhanced Performance

Nov 19, 2018

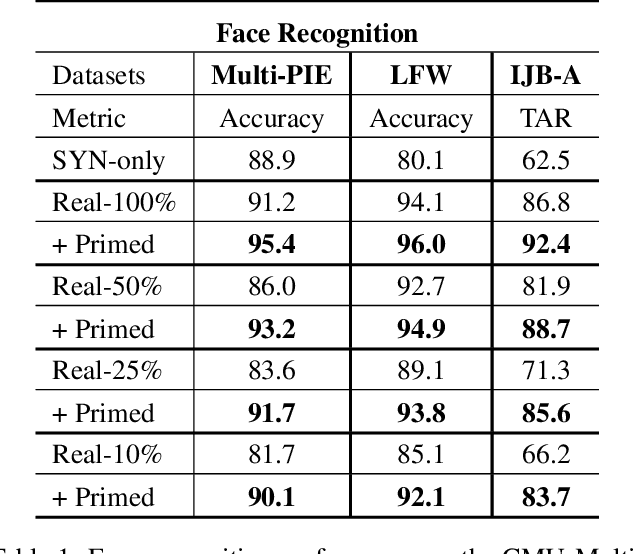

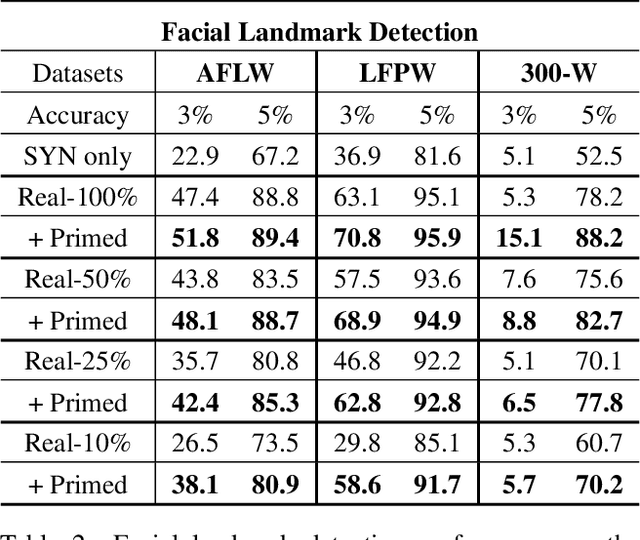

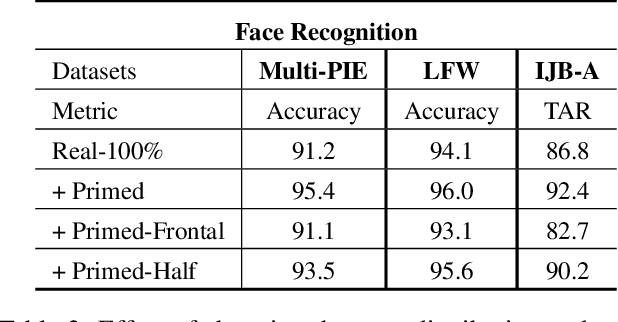

Abstract: Today's most successful facial image analysis systems are based on deep neural networks. However, a major limitation of such deep learning approaches is that their performance depends strongly on the availability of large annotated datasets. In this work, we prime deep neural networks by pre-training them with synthetic face images for specific facial analysis tasks. We demonstrate that this approach enhances both the generalization performance and the dataset efficiency of deep neural networks. Using a 3D morphable face model, we generate arbitrary amounts of annotated data with full control over image characteristics such as facial shape and color, pose, illumination, and background. With a series of experiments, we extensively test the effect of priming deep neural networks with synthetic face examples for two popular facial image analysis tasks: face recognition and facial landmark detection. We observed the following positive effects for both tasks: 1) Priming with synthetic face images improves the generalization performance consistently across all benchmark datasets. 2) The amount of real-world data needed to achieve competitive performance is reduced by 75% for face recognition and by 50% for facial landmark detection. 3) Priming with synthetic faces is consistently superior to data augmentation and transfer learning techniques at enhancing the performance of deep neural networks. Furthermore, our experiments provide evidence that priming with synthetic faces is able to enhance performance because it reduces the negative effects of biases present in real-world training data. The proposed synthetic face image generator and the software used for our experiments have been made publicly available.
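
The priming idea (pre-train on plentiful synthetic data, then fine-tune on scarce real data) can be illustrated with a toy sketch. This is not the paper's setup: a logistic regression stands in for the deep network, random vectors stand in for face images, and all names (`train_logreg`, `make_data`, `w_syn`, `w_real`) as well as the size of the synthetic-to-real shift are assumptions made for illustration.

```python
import numpy as np

def train_logreg(X, y, w=None, lr=0.1, steps=200):
    """Gradient descent on the logistic loss; `w` is an optional starting point."""
    w = np.zeros(X.shape[1]) if w is None else w.copy()
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))      # predicted probabilities
        w -= lr * (X.T @ (p - y)) / len(y)      # average gradient step
    return w

def accuracy(w, X, y):
    return float(np.mean((X @ w > 0) == (y > 0.5)))

def make_data(rng, w_true, n, noise=0.5):
    """Toy labelled data: random features, labels from a noisy linear rule."""
    X = rng.normal(size=(n, w_true.size))
    y = (X @ w_true + noise * rng.normal(size=n) > 0).astype(float)
    return X, y

rng = np.random.default_rng(0)
w_real = rng.normal(size=20)                    # hypothetical "real-world" rule
w_syn = w_real + 0.3 * rng.normal(size=20)      # assumed synthetic-to-real shift

X_syn, y_syn = make_data(rng, w_syn, 5000)      # plentiful synthetic stand-in
X_real, y_real = make_data(rng, w_real, 30)     # scarce real stand-in
X_test, y_test = make_data(rng, w_real, 2000)   # held-out real stand-in

# Priming: pre-train on synthetic data, then fine-tune briefly on real data.
w_primed = train_logreg(X_syn, y_syn)
w_primed = train_logreg(X_real, y_real, w=w_primed, steps=50)

# Baseline: train on the scarce real data only.
w_scratch = train_logreg(X_real, y_real)

acc_primed = accuracy(w_primed, X_test, y_test)
acc_scratch = accuracy(w_scratch, X_test, y_test)
print("primed:", acc_primed, "from scratch:", acc_scratch)
```

The only difference between the two runs is the starting point of the optimization on the real data, which is the essence of priming as described in the abstract.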

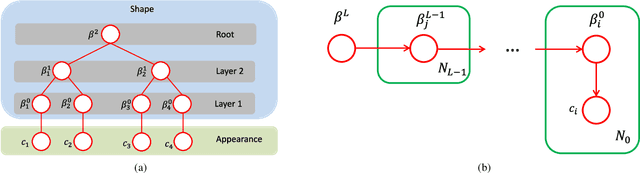

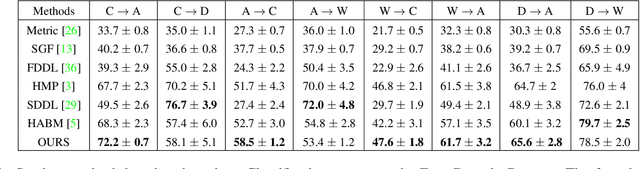

Greedy Structure Learning of Hierarchical Compositional Models

May 29, 2018

Abstract: Learning the representation of an object in terms of individual parts and their spatial and hierarchical relations is a fundamental problem of vision. Existing approaches are limited by the need for segmented training data and prior knowledge of the object's geometry. In this paper, we overcome those limitations by integrating an explicit background model into the structure learning process. We formulate the structure learning as a hierarchical clustering process. Thereby, we iterate in an EM-type procedure between clustering image patches and using the clustered data for learning part models. The hierarchical clustering proceeds in a bottom-up manner, learning the parts of lower layers in the hierarchy first and subsequently composing them into higher-order parts. A final top-down process composes the learned hierarchical parts into a holistic object model. The key novelty of our approach is that we learn a background model that competes with the object model (the foreground) in explaining the training data. In this way, we integrate the foreground-background segmentation task into the structure learning process and therefore overcome the need for segmented training data. As a result, we can infer the number of layers in the hierarchy, the number of parts per layer and the foreground-background segmentation in a joint optimization process. We demonstrate the ability to learn semantically meaningful hierarchical compositional models from a small set of natural images of an object without detailed supervision. We show that the learned object models achieve state-of-the-art results when compared with other generative object models at object classification on a standard transfer learning dataset. The code and data used in this work are publicly available.
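
The alternation between clustering patches and re-learning part models, with a background model competing for each patch, can be sketched for a single layer as follows. This is a strong simplification and not the authors' algorithm: the part models are isotropic Gaussians, the background log-likelihood is a fixed constant rather than a learned model, and the function name `cluster_with_background` and all parameters are hypothetical.

```python
import numpy as np

def cluster_with_background(patches, k, bg_log_lik, sigma=0.5, iters=20, seed=0):
    """Hard-EM clustering in which a background model competes with k part models.

    patches:    (n, d) array of flattened patches
    bg_log_lik: log-likelihood the (fixed, assumed) background assigns to any patch
    Returns the part means and one label per patch (-1 means background).
    """
    rng = np.random.default_rng(seed)
    n, d = patches.shape
    parts = patches[rng.choice(n, size=k, replace=False)].copy()
    labels = np.full(n, -1)
    for _ in range(iters):
        # E-step (hard): log-likelihood of every patch under every part model.
        sq = ((patches[:, None, :] - parts[None, :, :]) ** 2).sum(axis=2)
        log_lik = -0.5 * sq / sigma**2 - d * np.log(sigma * np.sqrt(2 * np.pi))
        best = log_lik.argmax(axis=1)
        # A patch goes to its best part only if that part beats the background.
        labels = np.where(log_lik.max(axis=1) > bg_log_lik, best, -1)
        # M-step: re-estimate each part from the patches currently assigned to it.
        for j in range(k):
            members = patches[labels == j]
            if len(members) > 0:
                parts[j] = members.mean(axis=0)
    return parts, labels

# Toy data: two tight "part" clusters plus uniformly scattered clutter.
rng = np.random.default_rng(1)
d = 4
part_a = rng.normal(0.0, 0.3, size=(60, d))
part_b = rng.normal(5.0, 0.3, size=(60, d))
clutter = rng.uniform(-10.0, 10.0, size=(100, d))
patches = np.vstack([part_a, part_b, clutter])

# Background: uniform density over the cube [-10, 10]^d.
bg_log_lik = -d * np.log(20.0)
parts, labels = cluster_with_background(patches, k=2, bg_log_lik=bg_log_lik)
print("patches explained by background:", int((labels == -1).sum()))
```

Because clutter is explained better by the background than by any part, it is excluded from the part estimates without a segmentation being supplied, which mirrors the role of the background model in the abstract.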

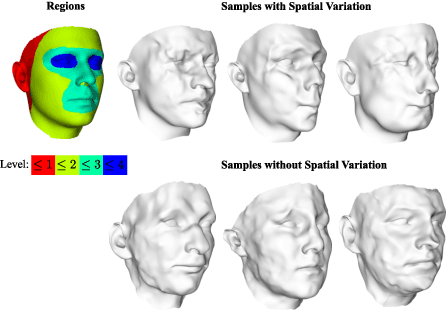

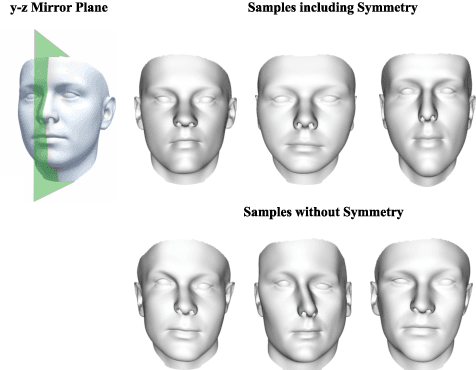

Morphable Face Models - An Open Framework

Sep 26, 2017

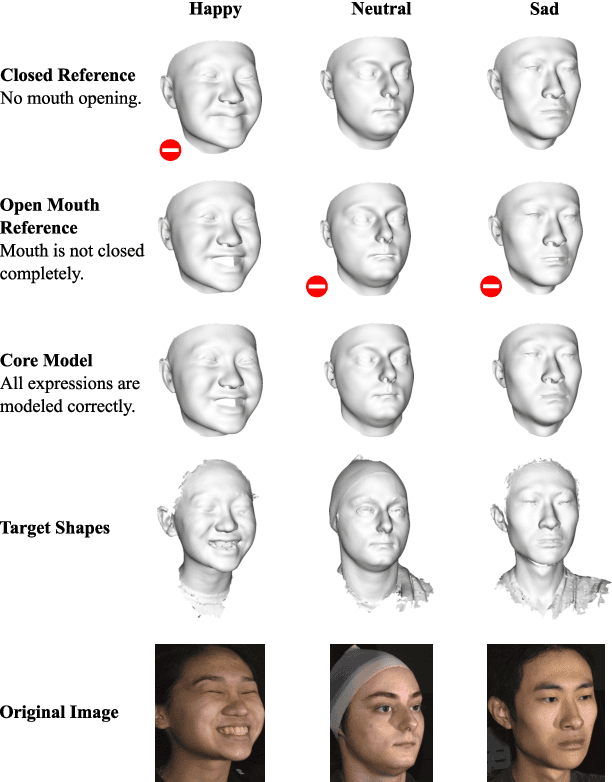

Abstract: In this paper, we present a novel open-source pipeline for face registration based on Gaussian processes as well as an application to face image analysis. Non-rigid registration of faces is significant for many applications in computer vision, such as the construction of 3D Morphable Face Models (3DMMs). Gaussian Process Morphable Models (GPMMs) unify a variety of non-rigid deformation models, with B-splines and PCA models as examples. GPMMs separate problem-specific requirements from the registration algorithm by incorporating domain-specific adaptations as a prior model. The novelties of this paper are the following: (i) We present a strategy and modeling technique for face registration that considers symmetry, multi-scale and spatially-varying details. The registration is applied to neutral faces and facial expressions. (ii) We release an open-source software framework for registration and model-building, demonstrated on the publicly available BU3D-FE database. The released pipeline also contains an implementation of an Analysis-by-Synthesis model adaptation to 2D face images, tested on the Multi-PIE and LFW databases. This enables the community to reproduce, evaluate and compare the individual steps of registration to model-building and 3D/2D model fitting. (iii) Along with the framework release, we publish a new version of the Basel Face Model (BFM-2017) with an improved age distribution and an additional facial expression model.
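
To make the notion of a Gaussian process deformation prior more concrete, here is a minimal sketch that draws one smooth random deformation of a reference point set from a low-rank approximation of a Gaussian kernel. It does not use the released framework: the random point cloud standing in for a face mesh, the kernel parameters, the rank, and the function names are all assumptions chosen for illustration.

```python
import numpy as np

def gaussian_kernel(points, scale, length):
    """Scalar Gaussian kernel matrix: scale^2 * exp(-||x - y||^2 / length^2)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    return scale**2 * np.exp(-d2 / length**2)

def low_rank_basis(K, rank):
    """Leading eigenpairs of the kernel matrix, largest eigenvalue first."""
    vals, vecs = np.linalg.eigh(K)              # eigh returns ascending order
    idx = np.argsort(vals)[::-1][:rank]
    return np.clip(vals[idx], 0.0, None), vecs[:, idx]

def sample_deformation(vals, vecs, rng):
    """One random deformation field; the x, y, z components are independent."""
    alpha = rng.normal(size=(len(vals), 3))     # standard normal coefficients
    return vecs @ (np.sqrt(vals)[:, None] * alpha)

rng = np.random.default_rng(0)
reference = rng.uniform(-1.0, 1.0, size=(200, 3))   # stand-in for mesh vertices

K = gaussian_kernel(reference, scale=0.1, length=0.5)
vals, vecs = low_rank_basis(K, rank=20)
deformed = reference + sample_deformation(vals, vecs, rng)
print("mean displacement:", float(np.linalg.norm(deformed - reference, axis=1).mean()))
```

Swapping the kernel changes the prior without touching the sampling code; a kernel estimated from example deformations would give a PCA-style statistical model in the same parametric form. That separation of prior from algorithm is the property the abstract attributes to GPMMs.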
