Chuangui Rao

Improved Label Design for Timing Synchronization in OFDM Systems against Multi-path Uncertainty

Jul 19, 2023

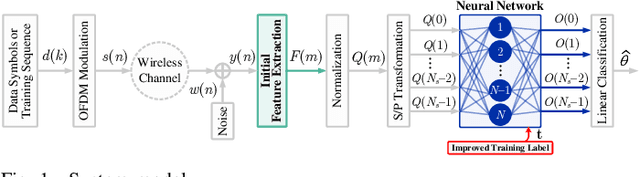

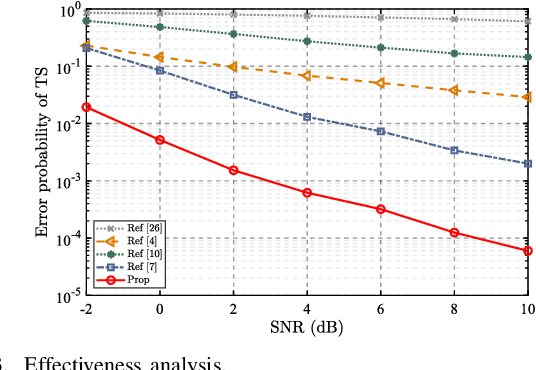

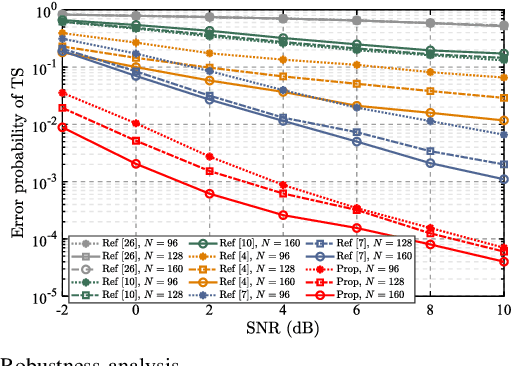

Abstract: Timing synchronization (TS) is vital for orthogonal frequency division multiplexing (OFDM) systems, as it places the start of the discrete Fourier transform (DFT) window within the inter-symbol-interference (ISI)-free region. However, the multi-path uncertainty in wireless communication scenarios degrades TS correctness. To alleviate this degradation, we propose a learning-based TS method enhanced by an improved training-label design. In the proposed method, the classic cross-correlator extracts the initial TS feature to benefit the subsequent machine learning, and the network architecture unfolds one classic cross-correlation process. Against the multi-path uncertainty, a novel training label is designed that represents the ISI-free region and especially highlights its approximate midpoint. Label values are set smaller the closer they lie to the boundary of the ISI-free region, so that the maximum network output is expected to fall in the ISI-free region with high probability. Then, to guarantee labeling correctness, we exploit the a priori information of the line-of-sight (LOS) path to form a LOS-aided labeling. Numerical results confirm that the proposed training label effectively enhances the correctness of the proposed TS learner against the multi-path uncertainty.
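The label idea above can be made concrete with a small sketch. It assumes a common OFDM timing model (symbol start `symbol_start`, CP length `cp_len`, maximum path delay `max_delay`, all in samples) and a linearly decaying profile. The abstract states only that label values shrink toward the ISI-free boundaries and peak near the midpoint, so the triangular shape and the function names are hypothetical, not the paper's published label.

```python
def isi_free_region(symbol_start, cp_len, max_delay):
    """DFT-window start indices that avoid ISI for one OFDM symbol.

    Assumed model: the CP occupies [symbol_start, symbol_start + cp_len), the
    useful part begins at symbol_start + cp_len, and the last multipath
    component arrives max_delay samples after the first one. A window start t
    is ISI-free when symbol_start + max_delay <= t <= symbol_start + cp_len.
    """
    if max_delay > cp_len:
        return range(0)  # delay spread exceeds the CP: no ISI-free start exists
    return range(symbol_start + max_delay, symbol_start + cp_len + 1)


def midpoint_label(length, symbol_start, cp_len, max_delay):
    """Hypothetical label: peaks at the (approximate) midpoint of the ISI-free
    region, shrinks linearly toward its boundaries, and is 0.0 outside it."""
    label = [0.0] * length
    region = isi_free_region(symbol_start, cp_len, max_delay)
    if len(region) == 0:
        return label
    mid = (region.start + region.stop - 1) / 2
    scale = (region.stop - region.start - 1) / 2 + 1  # keeps boundary values > 0
    for t in region:
        if 0 <= t < length:
            label[t] = 1.0 - abs(t - mid) / scale
    return label


# Demo: symbol starts at index 4, CP of 8 samples, delay spread of 2 samples.
label = midpoint_label(20, symbol_start=4, cp_len=8, max_delay=2)
best = max(range(len(label)), key=label.__getitem__)  # 9, the region midpoint
```

Here the ISI-free region is indices 6 to 12, and the label rises from 0.25 at either boundary to 1.0 at index 9, so a network trained to maximize its output at the label peak is pushed away from the region edges.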

Metric Learning-Based Timing Synchronization by Using Lightweight Neural Network

Jul 01, 2023

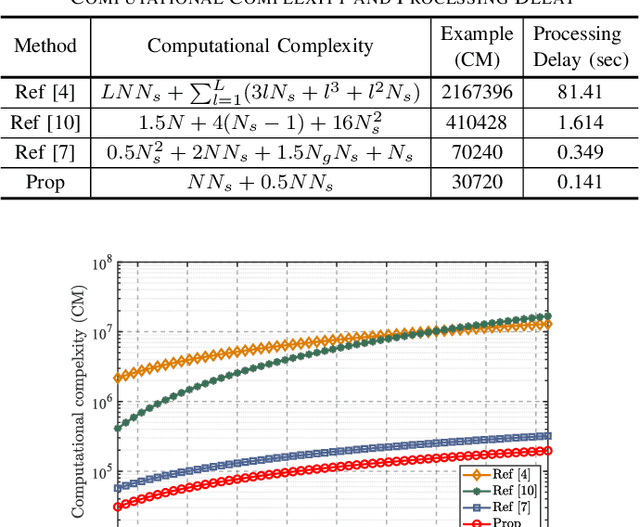

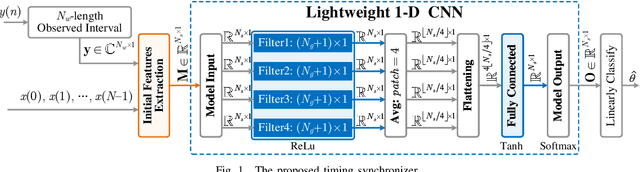

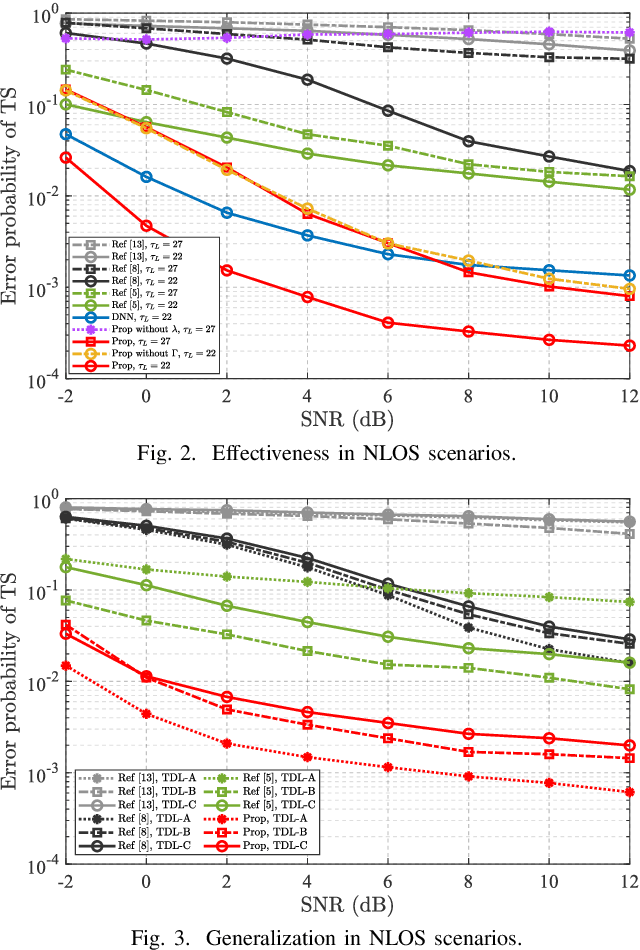

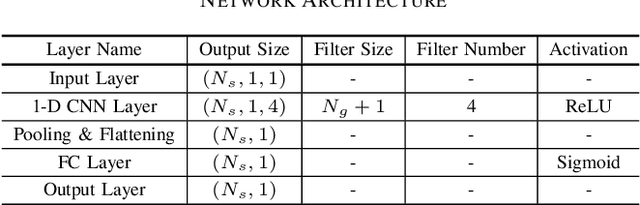

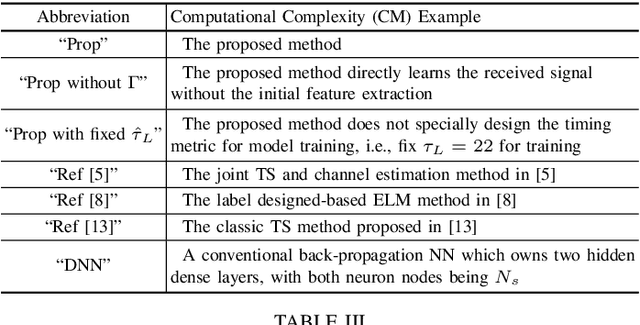

Abstract: Timing synchronization (TS) is one of the key tasks in orthogonal frequency division multiplexing (OFDM) systems. However, multi-path uncertainty corrupts TS correctness, causing OFDM systems to suffer from severe inter-symbol interference (ISI). To tackle this issue, we propose a timing-metric learning-based TS method assisted by a lightweight one-dimensional convolutional neural network (1-D CNN). Specifically, the receptive field of the 1-D CNN is designed to extract the metric features from the classic synchronizer. Then, to combat the multi-path uncertainty, we employ the varying delays and gains of the multi-path channel (the characteristics of multi-path uncertainty) to design the timing-metric objective, and thus form the training labels. This differs from existing timing-metric objectives, which are defined with respect to the timing synchronization point. Because the metric information is completely preserved, our method substantially increases the completeness of the training data against the multi-path uncertainty. By this means, TS correctness is improved against the multi-path uncertainty. Numerical results demonstrate the effectiveness and generalization of the proposed TS method against the multi-path uncertainty.
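One way to read "employing the varying delays and gains of multi-path to design the timing-metric objective" is a target that spreads unit mass over the path arrivals in proportion to path power, rather than a single one-hot spike at the TS point. The sketch below contrasts the two. It is an illustrative assumption, not the paper's published objective, and `point_objective` and `multipath_objective` are hypothetical names.

```python
def point_objective(length, ts_point):
    """Conventional objective: a single 1.0 at the timing synchronization point."""
    target = [0.0] * length
    target[ts_point] = 1.0
    return target


def multipath_objective(length, ts_point, delays, gains):
    """Hypothetical objective: normalized path powers |g_l|^2 at ts_point + delays[l]."""
    target = [0.0] * length
    powers = [abs(g) ** 2 for g in gains]
    total = sum(powers)
    for delay, power in zip(delays, powers):
        t = ts_point + delay
        if 0 <= t < length:
            target[t] += power / total  # target sums to 1 when all paths fit in the window
    return target


# Demo: three paths at delays 0, 2 and 5 samples with complex gains.
target = multipath_objective(16, ts_point=3, delays=[0, 2, 5], gains=[2.0, 1.0, 1j])
# target[3] == 4/6, target[5] == target[8] == 1/6, all other entries 0.0
```

Unlike the one-hot target, this one keeps the relative strength and position of every path, which is the kind of metric information the abstract says a point-based objective discards.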

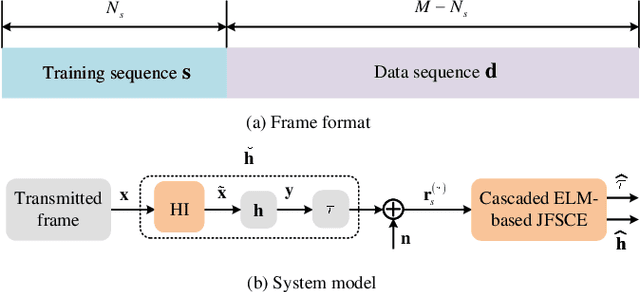

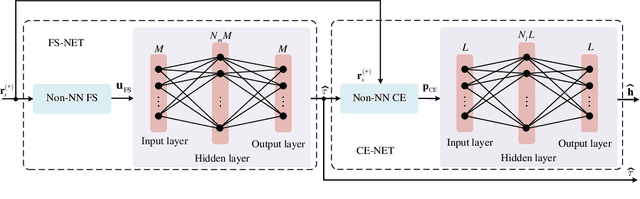

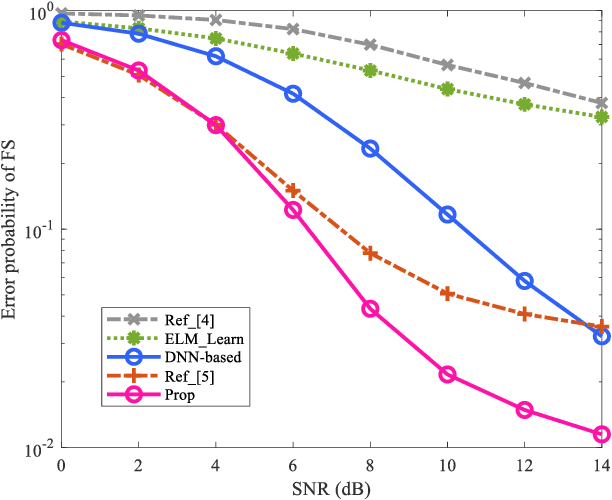

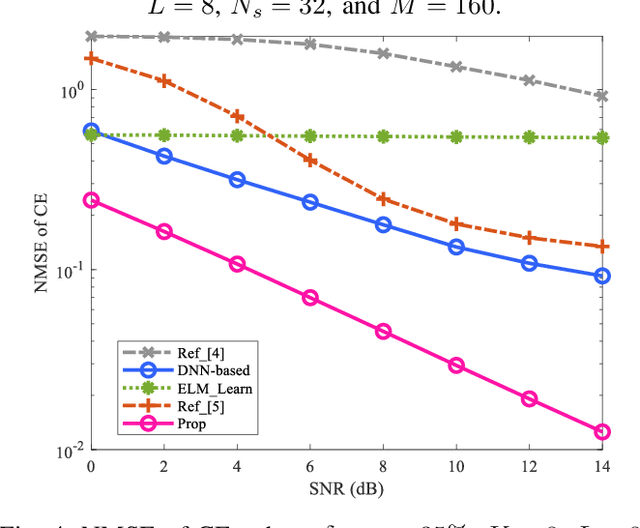

Cascaded ELM-based Joint Frame Synchronization and Channel Estimation over Rician Fading Channel with Hardware Imperfections

Feb 24, 2023

Abstract: Due to the interdependency of frame synchronization (FS) and channel estimation (CE), joint FS and CE (JFSCE) schemes have been proposed to enhance their functionalities and thereby boost the overall performance of wireless communication systems. Although traditional JFSCE schemes alleviate the mutual influence between FS and CE, they are deficient in dealing with hardware imperfections (HI) and the deterministic line-of-sight (LOS) path. To tackle this challenge, we propose a cascaded extreme learning machine (ELM)-based JFSCE to alleviate the influence of HI in the Rician fading channel scenario. Specifically, the conventional JFSCE method is first employed to extract the initial features, thus forming the non-neural network (non-NN) solutions for FS and CE, respectively. Then, the ELM-based networks, named FS-NET and CE-NET, are cascaded to capture the NN solutions of FS and CE. Simulation and analysis results show that, compared with the conventional JFSCE methods, the proposed cascaded ELM-based JFSCE significantly reduces the error probability of FS and the normalized mean square error (NMSE) of CE, even under parameter variations.
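The cascade above is built from extreme learning machines. An ELM is a single-hidden-layer network whose input weights are random and fixed, so training reduces to one regularized least-squares solve for the output weights. What follows is a minimal pure-Python sketch of that building block. The ridge term `reg`, the sigmoid activation, the layer sizes and the toy regression data are assumptions. The abstract does not specify how FS-NET and CE-NET are fed or cascaded, so none of that is modeled here.

```python
import math
import random


def _solve(a, b):
    """Solve the square system a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0.0:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]


class ELM:
    """Single-hidden-layer extreme learning machine (ridge-regularized variant).

    Hidden weights and biases are drawn at random and never trained; only the
    output weights are fitted, in closed form, by regularized least squares.
    """

    def __init__(self, n_inputs, n_hidden, reg=1e-6, seed=0):
        rng = random.Random(seed)
        self.w = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.gauss(0.0, 1.0) for _ in range(n_hidden)]
        self.reg = reg
        self.beta = []  # beta[k] holds the output weights of output k

    def _hidden(self, x):
        out = []
        for w_j, b_j in zip(self.w, self.b):
            z = sum(wi * xi for wi, xi in zip(w_j, x)) + b_j
            z = max(-60.0, min(60.0, z))  # clip to keep math.exp in range
            out.append(1.0 / (1.0 + math.exp(-z)))  # sigmoid activation
        return out

    def fit(self, inputs, targets):
        h = [self._hidden(x) for x in inputs]
        n_h = len(self.b)
        # Normal equations: (H^T H + reg * I) beta_k = H^T t_k for every output k
        hth = [[sum(row[i] * row[j] for row in h) + (self.reg if i == j else 0.0)
                for j in range(n_h)] for i in range(n_h)]
        self.beta = []
        for k in range(len(targets[0])):
            rhs = [sum(row[i] * t[k] for row, t in zip(h, targets)) for i in range(n_h)]
            self.beta.append(_solve(hth, rhs))
        return self

    def predict(self, x):
        hx = self._hidden(x)
        return [sum(bi * hi for bi, hi in zip(beta_k, hx)) for beta_k in self.beta]


# Demo: fit the toy mapping y = x0 + 2 * x1 on a 5 x 5 grid.
points = [(i / 4, j / 4) for i in range(5) for j in range(5)]
targets = [[x0 + 2 * x1] for x0, x1 in points]
elm = ELM(n_inputs=2, n_hidden=20).fit(points, targets)
mse = sum((elm.predict(p)[0] - t[0]) ** 2 for p, t in zip(points, targets)) / len(points)
```

The closed-form fit is the reason ELMs are attractive as cheap refinement stages. A cascade would feed the first network's output, possibly together with the conventional features, into the second network's input vector.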

CNN-based Timing Synchronization for OFDM Systems Assisted by Initial Path Acquisition in Frequency Selective Fading Channel

Dec 06, 2022

Abstract: Multi-path fading seriously affects the accuracy of timing synchronization (TS) in orthogonal frequency division multiplexing (OFDM) systems. To tackle this issue, we propose in this paper a convolutional neural network (CNN)-based TS scheme assisted by initial path acquisition. Specifically, the classic cross-correlation method is first employed to estimate a coarse timing offset and capture an initial path, which shrinks the TS search region. Then, a one-dimensional (1-D) CNN is developed to optimize the TS of OFDM systems. Owing to the narrowed TS search region, the CNN-based TS effectively locates the accurate TS point and inspires us to construct a network that is lightweight in terms of computational complexity and online running time. Simulation results show that, compared with the compressed sensing-based and extreme learning machine-based TS methods, the proposed method effectively improves TS performance with reduced computational complexity and online running time. Besides, the proposed TS method is robust against variations in the parameters of multi-path fading channels.
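The coarse stage described above can be sketched in four steps. First, cross-correlate the received samples with a known preamble. Second, take the metric peak as the coarse timing. Third, walk back to the earliest sufficiently strong peak and treat it as the initial path. Fourth, keep only a small window around that path for the CNN. The threshold rule (`ratio`, `lookback`), the window `half_width`, the Barker-13 preamble and the two-path test channel are assumptions for illustration, and the CNN stage itself is omitted.

```python
def cross_correlation_metric(rx, training):
    """Timing metric M(t) = |sum_k rx[t + k] * conj(training[k])| for each offset t."""
    n = len(training)
    return [abs(sum(rx[t + k] * training[k].conjugate() for k in range(n)))
            for t in range(len(rx) - n + 1)]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def acquire_initial_path(metric, peak, lookback, ratio):
    """Hypothetical rule: the earliest offset in [peak - lookback, peak] whose
    metric reaches ratio * metric[peak] is taken as the initial (first) path."""
    threshold = ratio * metric[peak]
    for t in range(max(0, peak - lookback), peak + 1):
        if metric[t] >= threshold:
            return t
    return peak  # reached only if ratio > 1


def search_region(metric, initial, half_width):
    """Shrunk TS search region around the initial path, clipped to valid offsets."""
    return range(max(0, initial - half_width), min(len(metric), initial + half_width + 1))


# Demo: Barker-13 preamble through a hypothetical two-path channel in which a
# weak first path (gain 0.5, delay 10) precedes a stronger path (gain 1.0, delay 12).
barker13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]
rx = [0.0] * 30
for k, c in enumerate(barker13):
    rx[10 + k] += 0.5 * c
    rx[12 + k] += 1.0 * c
metric = cross_correlation_metric(rx, barker13)
peak = argmax(metric)                                              # 12: strongest path
first = acquire_initial_path(metric, peak, lookback=5, ratio=0.3)  # 10: first path
region = search_region(metric, first, half_width=3)                # range(7, 14)
```

The demo shows why the initial path matters: the correlation peak sits on the stronger, later path, while the first path arrives two samples earlier. Centering the narrowed search window on the first path keeps the true timing inside it.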

Label Design-based ELM Network for Timing Synchronization in OFDM Systems with Nonlinear Distortion

Jul 28, 2021

Abstract: Due to the nonlinear distortion in orthogonal frequency division multiplexing (OFDM) systems, timing synchronization (TS) performance is inevitably degraded at the receiver. To relieve this issue, we propose an extreme learning machine (ELM)-based network with a novel learning label for the TS of OFDM systems, which increases the probability that the symbol timing offset (STO) estimate resides in the inter-symbol interference (ISI)-free region. In particular, by exploiting the prior information of the ISI-free region, two types of learning labels are developed to facilitate the ELM-based TS network. With the designed learning labels, a classic TS scheme is first executed to capture the coarse timing metric (TM), followed by an ELM network that refines the TM. According to experiments and analysis, our scheme effectively improves TS performance and generalizes across different training and testing channel scenarios.
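The abstract measures success by whether the STO estimate lands in the ISI-free region. Below is a minimal sketch of that criterion, together with one plausible region-based label: a rectangular indicator over the ISI-free region. It assumes the STO is measured against the start of the useful part on the first path, and that the maximum channel delay `max_delay` does not exceed the CP length `cp_len`. The paper's two actual label types are not described in the abstract, and every name here is hypothetical.

```python
def in_isi_free_region(estimate, true_start, cp_len, max_delay):
    """True if the timing error lies in [max_delay - cp_len, 0].

    Starting up to (cp_len - max_delay) samples early keeps the DFT window
    inside the CP part that the previous symbol's multipath echoes do not
    reach; starting late pulls in samples of the next symbol.
    """
    error = estimate - true_start
    return max_delay - cp_len <= error <= 0


def isi_free_rate(estimates, true_starts, cp_len, max_delay):
    """Fraction of timing estimates that fall in the ISI-free region."""
    hits = sum(in_isi_free_region(e, t, cp_len, max_delay)
               for e, t in zip(estimates, true_starts))
    return hits / len(estimates)


def box_label(length, true_start, cp_len, max_delay):
    """Hypothetical region label: 1.0 on every ISI-free timing index, 0.0 elsewhere."""
    label = [0.0] * length
    for t in range(true_start + max_delay - cp_len, true_start + 1):
        if 0 <= t < length:
            label[t] = 1.0
    return label


# Demo: CP of 8 samples, delay spread of 2 samples, true start at index 20.
print(isi_free_rate([14, 20, 21, 13], [20] * 4, cp_len=8, max_delay=2))  # 0.5
print(sum(box_label(32, 20, cp_len=8, max_delay=2)))                      # 7.0
```

A region label like this rewards any output peak inside the ISI-free span instead of only one exact index, which matches the abstract's goal of raising the ISI-free hit probability rather than the exact-index hit rate.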

ELM-based Frame Synchronization in Nonlinear Distortion Scenario Using Superimposed Training

Mar 27, 2021

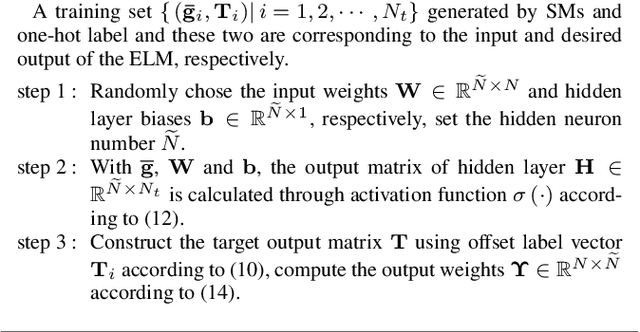

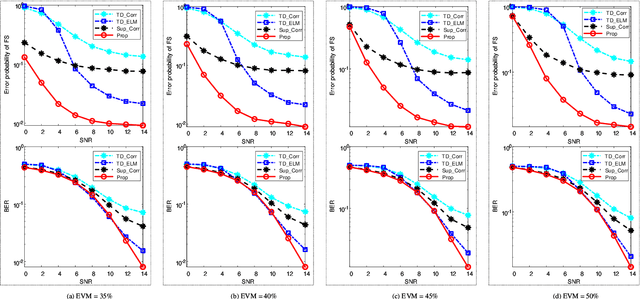

Abstract: The demand for high spectrum efficiency places more stringent requirements on frame synchronization (FS) in wireless communication systems. Meanwhile, a large number of nonlinear devices or blocks inevitably cause nonlinear distortion. To avoid occupying bandwidth resources and to overcome nonlinear distortion, an extreme learning machine (ELM)-based network is introduced into superimposed training-based FS with nonlinear distortion. First, a preprocessing procedure extracts the features of the synchronization metric (SM). Then, based on these rough SM features, an ELM network is constructed to estimate the frame boundary offset. Analysis and experimental results show that, compared with existing methods, the proposed method reduces the error probability of FS and the bit error rate (BER) of symbol detection (SD). In addition, this improvement is robust against parameter variations.
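Superimposed training adds a known training sequence on top of the data, so no separate time slots are spent on a preamble. What follows is a minimal sketch of a correlation-based synchronization metric (SM) that could serve as the rough feature fed to an ELM. It assumes real BPSK symbols, a noiseless single-path channel, and a training power share `rho`. The nonlinear distortion the paper targets is deliberately left out, and all names are hypothetical.

```python
import math
import random


def superimpose(training, data, rho):
    """Transmit sqrt(rho) * training + sqrt(1 - rho) * data (rho = training power share)."""
    a, b = math.sqrt(rho), math.sqrt(1.0 - rho)
    return [a * c + b * d for c, d in zip(training, data)]


def sync_metric(rx, training):
    """SM(t) = |sum_k rx[t + k] * training[k]|^2 for every candidate frame start t."""
    n = len(training)
    return [abs(sum(rx[t + k] * training[k] for k in range(n))) ** 2
            for t in range(len(rx) - n + 1)]


def estimate_frame_start(rx, training):
    """Pick the frame boundary as the SM peak; also return the full SM vector."""
    metric = sync_metric(rx, training)
    return max(range(len(metric)), key=metric.__getitem__), metric


def random_bpsk(rng, n):
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


# Demo: a 512-sample frame starting at offset 37, surrounded by unrelated symbols.
rng = random.Random(1)
training = random_bpsk(rng, 512)
frame = superimpose(training, random_bpsk(rng, 512), rho=0.25)
rx = random_bpsk(rng, 37) + frame + random_bpsk(rng, 100)
est, metric = estimate_frame_start(rx, training)
```

Because the data is uncorrelated with the training sequence, the correlation over a long frame suppresses it, and the SM peaks at the true boundary. In this setup the learned stage would take the SM vector (rather than its bare argmax) as input, which is where distortion-induced errors could be corrected.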
