Chaojin Qing

Amplitude Prediction from Uplink to Downlink CSI against Receiver Distortion in FDD Systems

Aug 31, 2023

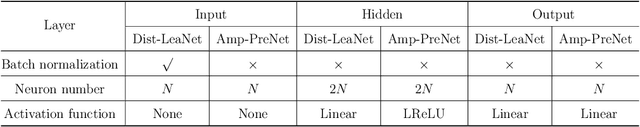

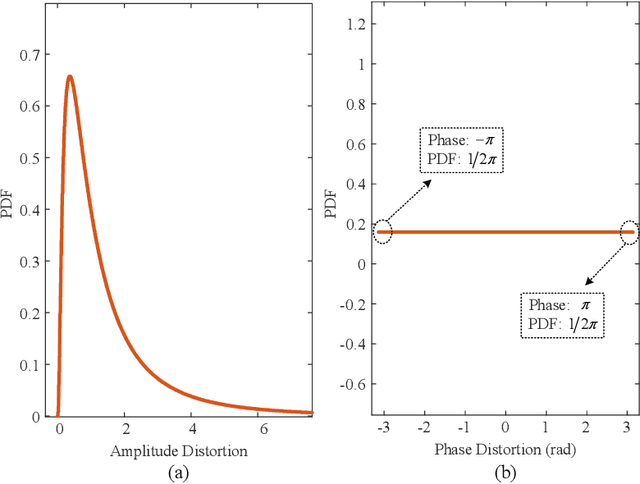

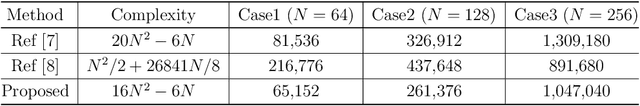

Abstract: In frequency division duplex (FDD) massive multiple-input multiple-output (mMIMO) systems, the reciprocity mismatch caused by receiver distortion seriously degrades the amplitude prediction performance of channel state information (CSI). To tackle this issue, a lightweight neural network-based amplitude prediction method is proposed in this paper from the perspective of distortion suppression and reciprocity calibration. Specifically, with the receiver distortion at the base station (BS), conventional methods are employed to extract the amplitude feature of uplink CSI. Then, learning along the direction of the uplink wireless propagation channel, a dedicated and lightweight distortion-learning network (Dist-LeaNet) is designed to suppress the receiver distortion and calibrate the amplitude reciprocity between the uplink and downlink CSI. Subsequently, a single hidden layer-based amplitude-prediction network (Amp-PreNet) is cascaded to accomplish amplitude prediction of downlink CSI based on the strong amplitude reciprocity. Simulation results show that, considering the receiver distortion in FDD systems, the proposed scheme effectively improves the amplitude prediction accuracy of downlink CSI while reducing the transmission and processing delay.

Improved Label Design for Timing Synchronization in OFDM Systems against Multi-path Uncertainty

Jul 19, 2023

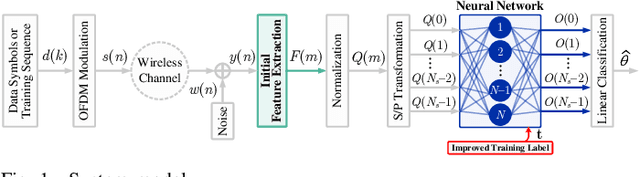

Abstract: Timing synchronization (TS) is vital for orthogonal frequency division multiplexing (OFDM) systems, as it makes the discrete Fourier transform (DFT) window start in the inter-symbol-interference (ISI)-free region. However, the multi-path uncertainty in wireless communication scenarios degrades the TS correctness. To alleviate this degradation, we propose a learning-based TS method enhanced by an improved design of the training label. In the proposed method, the classic cross-correlator extracts the initial TS feature to benefit the subsequent machine learning, and the network architecture unfolds one classic cross-correlation process. Against the multi-path uncertainty, a novel training label is designed by representing the ISI-free region and especially highlighting its approximate midpoint. The closer a sample is to the boundary of the ISI-free region, the smaller its label value is set, so that the maximum network output is expected to fall in the ISI-free region with a high probability. Then, to guarantee the correctness of labeling, we exploit the prior information of the line-of-sight (LOS) path to form a LOS-aided labeling. Numerical results confirm that the proposed training label effectively enhances the correctness of the proposed TS learner against the multi-path uncertainty.
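As a rough illustration of the label design described in this abstract, the sketch below builds a label vector that is zero outside an ISI-free region, peaks at the region's approximate midpoint, and shrinks toward the region boundaries. The triangular shape, the function name `isi_free_label`, and the example indices are assumptions made for illustration; the abstract does not specify the exact label values, and the LOS-aided labeling step is not modeled here.

```python
import numpy as np

def isi_free_label(n_samples, region_start, region_end):
    """Hypothetical label: 0 outside the ISI-free region, largest at its
    approximate midpoint, and decreasing linearly toward both boundaries."""
    label = np.zeros(n_samples)
    mid = (region_start + region_end) // 2            # approximate midpoint
    half_width = max(mid - region_start, region_end - mid) + 1
    idx = np.arange(region_start, region_end + 1)     # indices inside the region
    label[idx] = 1.0 - np.abs(idx - mid) / half_width
    return label

# Assumed example: 16 candidate timing positions, ISI-free region at indices 4..8.
label = isi_free_label(n_samples=16, region_start=4, region_end=8)
best_index = int(np.argmax(label))                    # index the learner is steered toward
```

With a label of this kind, a network trained to reproduce it tends to place its maximum output near the middle of the ISI-free region rather than at an edge, which leaves a margin on both sides when the multi-path delays vary.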

Metric Learning-Based Timing Synchronization by Using Lightweight Neural Network

Jul 01, 2023

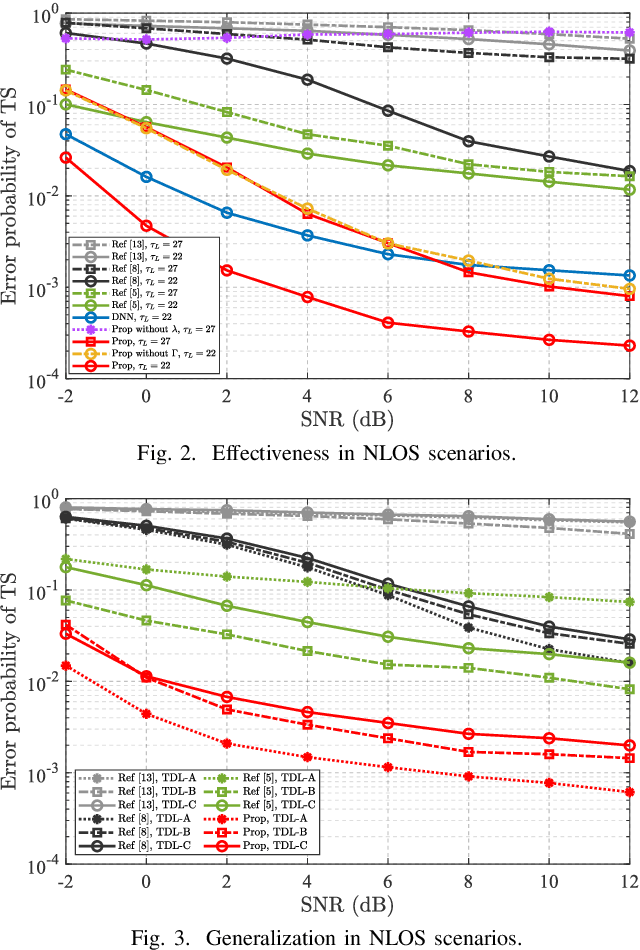

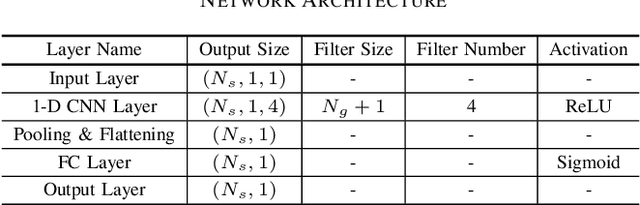

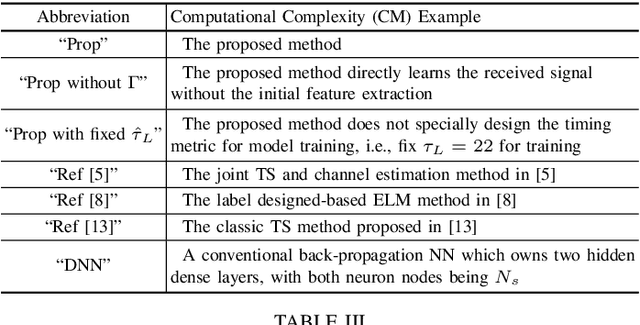

Abstract: Timing synchronization (TS) is one of the key tasks in orthogonal frequency division multiplexing (OFDM) systems. However, multi-path uncertainty corrupts the TS correctness, making OFDM systems suffer from severe inter-symbol-interference (ISI). To tackle this issue, we propose a timing-metric learning-based TS method assisted by a lightweight one-dimensional convolutional neural network (1-D CNN). Specifically, the receptive field of the 1-D CNN is designed to extract the metric features from the classic synchronizer. Then, to combat the multi-path uncertainty, we employ the varying delays and gains of multi-path (the characteristics of multi-path uncertainty) to design the timing-metric objective, and thus form the training labels. This differs from the existing timing-metric objectives, which are designed with respect to the timing synchronization point. Our method substantially increases the completeness of training data against the multi-path uncertainty due to the complete preservation of metric information. By this means, the TS correctness is improved against the multi-path uncertainty. Numerical results demonstrate the effectiveness and generalization of the proposed TS method against the multi-path uncertainty.

ELM-based Timing Synchronization for OFDM Systems by Exploiting Computer-aided Training Strategy

Jun 30, 2023

Abstract: Due to the implementation bottleneck of training data collection in realistic wireless communication systems, supervised learning-based timing synchronization (TS) is challenged by the incompleteness of training data. To tackle this bottleneck, we extend the computer-aided approach, with which the local device can generate the training data instead of generating learning labels from the received samples collected in realistic systems, and then construct an extreme learning machine (ELM)-based TS network in orthogonal frequency division multiplexing (OFDM) systems. Specifically, by leveraging the rough information of channel impulse responses (CIRs), i.e., the root-mean-square (r.m.s.) delay, we propose the loose constraint-based and flexible constraint-based training strategies for the learning-label design against the maximum multi-path delay. The underlying mechanism is to improve the completeness of multi-path delays that may appear in realistic wireless channels and thus increase the statistical efficiency of the designed TS learner. By this means, the proposed ELM-based TS network can alleviate the degradation of generalization performance. Numerical results reveal the robustness and generalization of the proposed scheme against varying parameters.
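For readers unfamiliar with extreme learning machines, the following is a minimal generic sketch of the technique: the hidden-layer weights are drawn at random and kept fixed, and only the output weights are solved in closed form by least squares. It is not the TS network from the paper; the class name `ELM`, the `tanh` activation, the layer sizes, and the locally generated toy data are all assumptions.

```python
import numpy as np

class ELM:
    """Generic single-hidden-layer extreme learning machine (illustrative)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        # Hidden weights and biases are random and never trained.
        self.W = rng.standard_normal((n_in, n_hidden))
        self.b = rng.standard_normal(n_hidden)
        self.beta = None

    def _hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def fit(self, X, T):
        # Output weights: least-squares solution via the Moore-Penrose pseudoinverse.
        H = self._hidden(X)
        self.beta = np.linalg.pinv(H) @ T
        return self

    def predict(self, X):
        return self._hidden(X) @ self.beta

# Toy data generated locally (assumed shapes): 200 feature vectors of length 16,
# with one-hot targets over 4 hypothetical classes.
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 16))
labels = np.argmax(X[:, :4], axis=1)
T = np.eye(4)[labels]

model = ELM(n_in=16, n_hidden=64).fit(X, T)
pred = model.predict(X)
```

Because training reduces to one pseudoinverse, the cost of fitting is small, which is one reason ELMs pair naturally with training data generated on the local device.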

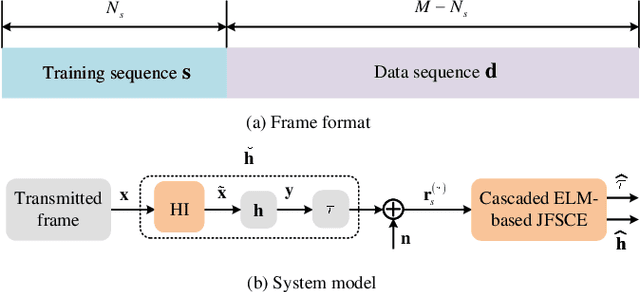

Cascaded ELM-based Joint Frame Synchronization and Channel Estimation over Rician Fading Channel with Hardware Imperfections

Feb 24, 2023

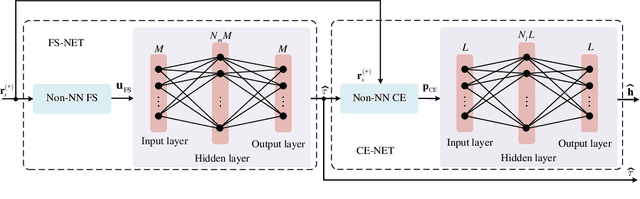

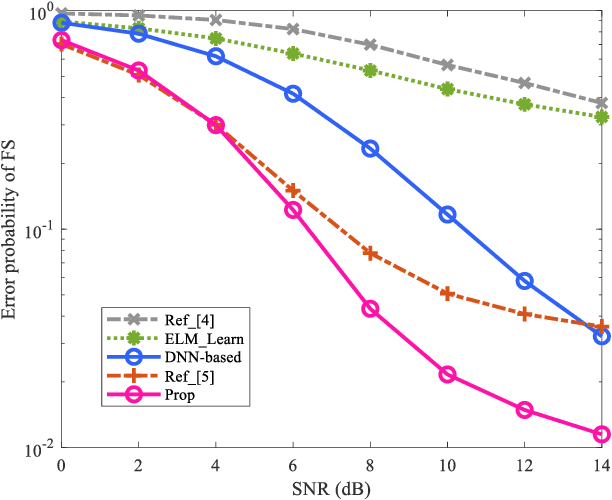

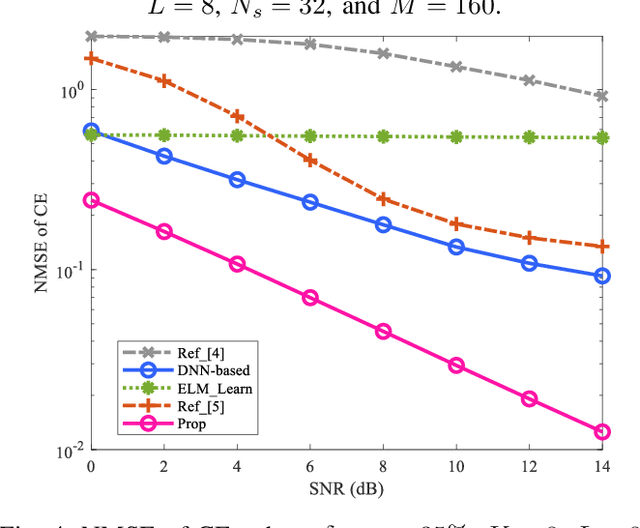

Abstract: Due to the interdependency of frame synchronization (FS) and channel estimation (CE), joint FS and CE (JFSCE) schemes are proposed to enhance their functionalities and therefore boost the overall performance of wireless communication systems. Although traditional JFSCE schemes alleviate the mutual influence between FS and CE, they show deficiencies in dealing with hardware imperfection (HI) and a deterministic line-of-sight (LOS) path. To tackle this challenge, we propose a cascaded ELM-based JFSCE to alleviate the influence of HI in the scenario of the Rician fading channel. Specifically, the conventional JFSCE method is first employed to extract the initial features, and thus forms the non-neural network (NN) solutions for FS and CE, respectively. Then, the ELM-based networks, named FS-NET and CE-NET, are cascaded to capture the NN solutions of FS and CE. Simulation and analysis results show that, compared with the conventional JFSCE methods, the proposed cascaded ELM-based JFSCE significantly reduces the error probability of FS and the normalized mean square error (NMSE) of CE, even against the impacts of parameter variations.

LoS sensing-based superimposed CSI feedback for UAV-Assisted mmWave systems

Feb 21, 2023

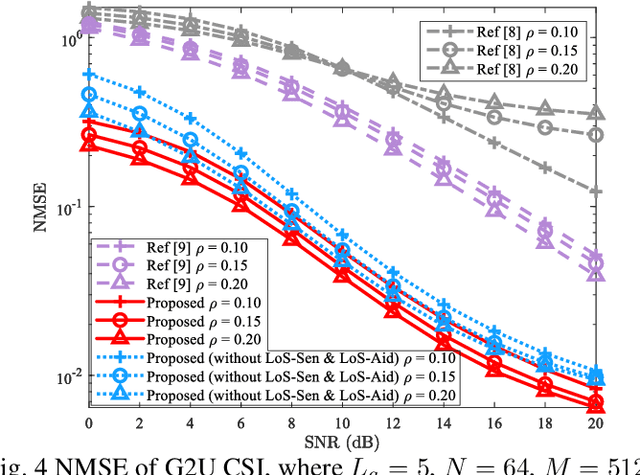

Abstract: In unmanned aerial vehicle (UAV)-assisted millimeter wave (mmWave) systems, channel state information (CSI) feedback is critical for the selection of modulation schemes, resource management, beamforming, etc. However, traditional CSI feedback methods lead to significant feedback overhead and energy consumption at the UAV transmitter, therefore shortening the system operation time. To tackle these issues, inspired by superimposed feedback and integrated sensing and communications (ISAC), a line-of-sight (LoS) sensing-based superimposed CSI feedback scheme is proposed. Specifically, on the UAV transmitter side, the ground-to-UAV (G2U) CSI is superimposed on the UAV-to-ground (U2G) data to feed back to the ground base station (gBS). At the gBS, a dedicated LoS sensing network (LoS-SenNet) is designed to sense the U2G CSI in LoS and NLoS scenarios. With the sensed result of LoS-SenNet, the determined G2U CSI from the initial feature extraction works as the prior information to guide the subsequent operation. Specifically, for the G2U CSI in NLoS, a CSI recovery network (CSI-RecNet) and superimposed interference cancellation are developed to recover the G2U CSI and U2G data. As for the LoS scenario, a dedicated LoS aid network (LoS-AidNet) is embedded before the CSI-RecNet and the block of superimposed interference cancellation to highlight the feature of the G2U CSI. Simulation results demonstrate that, compared with other methods of superimposed CSI feedback, the proposed feedback scheme effectively improves the recovery accuracy of the G2U CSI and U2G data. In addition, the proposed feedback scheme is robust against parameter variations.

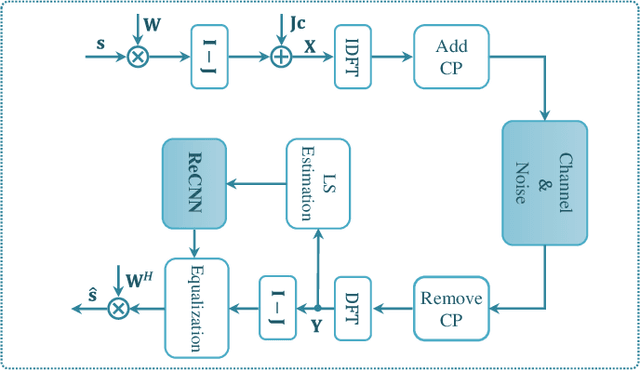

Superimposed Pilot-based Channel Estimation for RIS-Assisted IoT Systems Using Lightweight Networks

Dec 07, 2022

Abstract: Conventional channel estimation (CE) for Internet of Things (IoT) systems encounters challenges such as low spectral efficiency, high energy consumption, and blocked propagation paths. Although superimposed pilot-based CE schemes and the reconfigurable intelligent surface (RIS) could partially tackle these challenges, limited research has been done on a systematic solution. In this paper, a superimposed pilot-based CE with the RIS-assisted mode is proposed, and its performance is further enhanced by neural networks. Specifically, at the user equipment (UE), the pilot for CE is superimposed on the uplink user data to improve the spectral efficiency and reduce the energy consumption of IoT systems, and two lightweight networks at the base station (BS) alleviate the computational complexity and processing delay for the CE and symbol detection (SD). These dedicated networks are developed in a cooperative manner. That is, the conventional methods are employed to perform initial feature extraction, and the developed neural networks (NNs) are oriented to learn along with the extracted features. With the assistance of the extracted initial feature, the amount of training data for network training is reduced. Simulation results show that the computational complexity and processing delay are decreased without sacrificing the accuracy of CE and SD, and the normalized mean square error (NMSE) and bit error rate (BER) performance at the BS are improved against parameter variations.

CNN-based Timing Synchronization for OFDM Systems Assisted by Initial Path Acquisition in Frequency Selective Fading Channel

Dec 06, 2022

Abstract: Multi-path fading seriously affects the accuracy of timing synchronization (TS) in orthogonal frequency division multiplexing (OFDM) systems. To tackle this issue, we propose a convolutional neural network (CNN)-based TS scheme assisted by initial path acquisition in this paper. Specifically, the classic cross-correlation method is first employed to estimate a coarse timing offset and capture an initial path, which shrinks the TS search region. Then, a one-dimensional (1-D) CNN is developed to optimize the TS of OFDM systems. Due to the narrowed search region of TS, the CNN-based TS effectively locates the accurate TS point and inspires us to construct a lightweight network in terms of computational complexity and online running time. Simulation results show that, compared with the compressed sensing-based TS method and the extreme learning machine-based TS method, the proposed method can effectively improve the TS performance with reduced computational complexity and online running time. In addition, the proposed TS method is robust against varying parameters of multi-path fading channels.
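The coarse stage described in this abstract can be sketched as a sliding cross-correlation against a known training sequence, followed by clipping a small window around the correlation peak. This is a simplified single-path illustration, not the paper's scheme: the function names, the random-phase training sequence, the noise level, and the window half-width of 4 samples are assumptions, and the CNN refinement stage is omitted.

```python
import numpy as np

def coarse_timing(rx, preamble):
    """Slide the known preamble over the received samples and return the
    offset with the largest correlation magnitude, plus the full metric."""
    n_offsets = len(rx) - len(preamble) + 1
    metric = np.array([
        np.abs(np.vdot(preamble, rx[d:d + len(preamble)]))  # vdot conjugates its first argument
        for d in range(n_offsets)
    ])
    return int(np.argmax(metric)), metric

def search_region(coarse, half_width, n_offsets):
    """Narrowed candidate window around the coarse estimate (inclusive bounds)."""
    return max(coarse - half_width, 0), min(coarse + half_width, n_offsets - 1)

# Assumed toy setup: unit-modulus random-phase preamble, one path, light noise.
rng = np.random.default_rng(0)
preamble = np.exp(2j * np.pi * rng.random(32))
true_offset = 20
rx = np.zeros(96, dtype=complex)
rx[true_offset:true_offset + 32] = preamble
rx += 0.05 * (rng.standard_normal(96) + 1j * rng.standard_normal(96))

coarse, metric = coarse_timing(rx, preamble)
lo, hi = search_region(coarse, half_width=4, n_offsets=len(metric))
```

A fine synchronizer then only needs to score the offsets between `lo` and `hi` instead of every possible offset, which is what makes a small network sufficient.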

Lightweight 1-D CNN-based Timing Synchronization for OFDM Systems with CIR Uncertainty

Sep 14, 2022

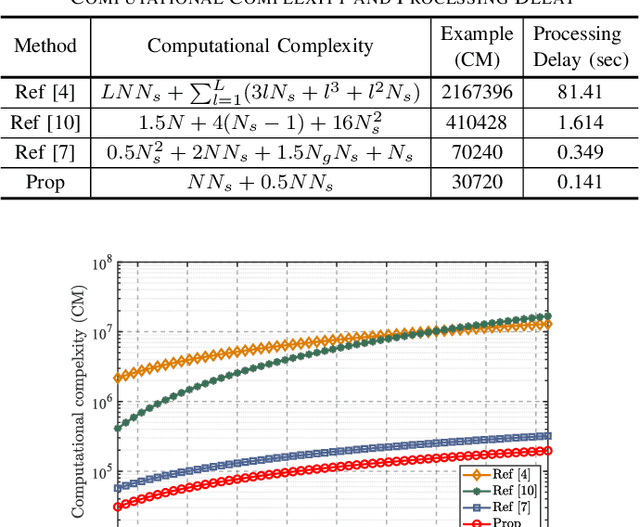

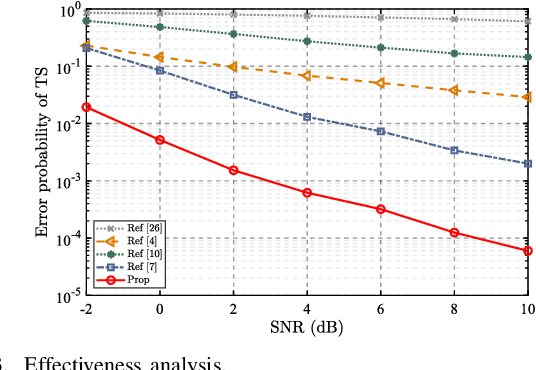

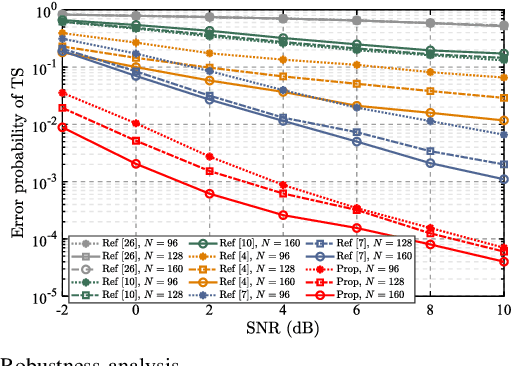

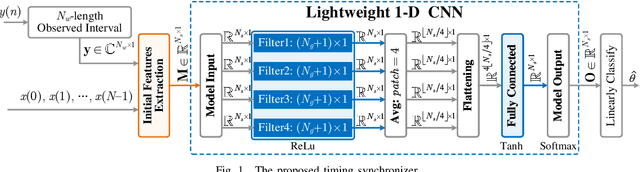

Abstract: In this letter, a lightweight one-dimensional convolutional neural network (1-D CNN)-based timing synchronization (TS) method is proposed to reduce the computational complexity and processing delay while maintaining the timing accuracy in orthogonal frequency division multiplexing (OFDM) systems. Specifically, the TS task is first transformed into a deep learning (DL)-based classification task, and then three iterations of the compressed sensing (CS)-based TS strategy are simplified to form a lightweight network, whose CNN layers are specially designed to highlight the classification features. In addition, to enhance the generalization performance of the proposed method against channel impulse response (CIR) uncertainty, the relaxed restriction for propagation delay is exploited to augment the completeness of training data. Numerical results show that the proposed 1-D CNN-based TS method effectively improves the TS accuracy, reduces the computational complexity and processing delay, and possesses a good generalization performance against the CIR uncertainty. The source code of the proposed method is available at https://github.com/qingchj851/CNNTS.

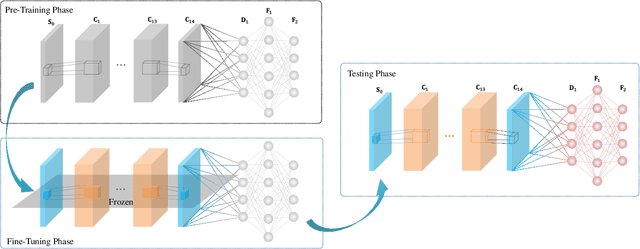

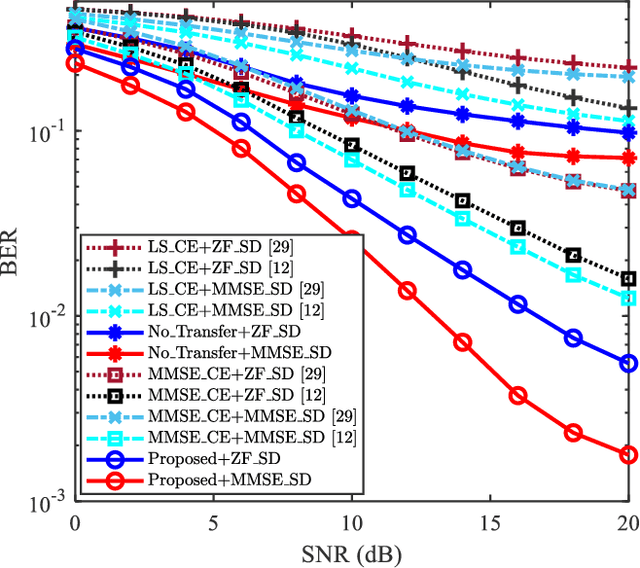

Transfer Learning-based Channel Estimation in Orthogonal Frequency Division Multiplexing Systems Using Data-nulling Superimposed Pilots

May 28, 2022

Abstract: Data-nulling superimposed pilot (DNSP) effectively alleviates the superimposed interference of superimposed training (ST)-based channel estimation (CE) in orthogonal frequency division multiplexing (OFDM) systems, while facing challenges in estimation accuracy and computational complexity. Building on the promising solutions of deep learning (DL) in the physical layer of wireless communication, we fuse the DNSP and DL to tackle these challenges in this paper. Nevertheless, due to the changes of wireless scenarios, the model mismatch of DL leads to the performance degradation of CE, and thus raises the issue of network retraining. To address this issue, a lightweight transfer learning (TL) network is further proposed for the DL-based DNSP scheme, and thus forms a TL-based CE in OFDM systems. Specifically, based on the linear receiver, the least squares estimation is first employed to extract the initial features of CE. With the extracted features, we develop a convolutional neural network (CNN) to fuse the solutions of DL-based CE and the CE of DNSP. Finally, a lightweight TL network is constructed to address the model mismatch. In this way, a novel CE network for the DNSP scheme in OFDM systems is constructed, which improves its estimation accuracy and alleviates the model mismatch. The experimental results show that in all signal-to-noise-ratio (SNR) regions, the proposed method achieves lower normalized mean squared error (NMSE) than the existing DNSP schemes with minimum mean square error (MMSE)-based CE. For example, when the SNR is 0 decibels (dB), the proposed scheme achieves an NMSE similar to that of the MMSE-based CE scheme at 20 dB, thereby significantly improving the estimation accuracy of CE. In addition, relative to the existing schemes, the proposed scheme is robust against the impacts of parameter variations.
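To make the two quantities named in this abstract concrete, the sketch below computes a per-subcarrier least squares (LS) channel estimate from known pilots and evaluates its NMSE at two SNR values. It is a plain pilot-only toy model under assumed parameters (64 subcarriers, QPSK pilots, Rayleigh channel gains); it does not model data-nulling superimposed pilots, the CNN, or the TL network, and the helper names are hypothetical.

```python
import numpy as np

def ls_estimate(y, x):
    """Per-subcarrier LS estimate: received symbol divided by the known pilot."""
    return y / x

def nmse(h_hat, h):
    """Normalized mean squared error between estimated and true channel."""
    return np.sum(np.abs(h_hat - h) ** 2) / np.sum(np.abs(h) ** 2)

# Assumed toy setup.
rng = np.random.default_rng(1)
n_sc = 64
h = (rng.standard_normal(n_sc) + 1j * rng.standard_normal(n_sc)) / np.sqrt(2)
x = np.exp(1j * (np.pi / 4 + np.pi / 2 * rng.integers(0, 4, n_sc)))  # unit-power QPSK pilots
noise = (rng.standard_normal(n_sc) + 1j * rng.standard_normal(n_sc)) / np.sqrt(2)

def simulate(snr_db):
    """NMSE of the LS estimate at a given SNR, reusing one noise realization."""
    sigma = 10 ** (-snr_db / 20)          # noise amplitude for unit signal power
    y = h * x + sigma * noise
    return nmse(ls_estimate(y, x), h)

nmse_0db = simulate(0.0)
nmse_20db = simulate(20.0)
```

In this toy model the LS error scales directly with the noise power, so a 20 dB SNR increase lowers the NMSE by a factor of 100. That fixed coupling is the baseline behavior a learned estimator aims to improve on at low SNR.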
