Christopher Ferrie

Quantum-Inspired Optimization Process for Data Imputation

May 07, 2025

Abstract: Data imputation is a critical step in data pre-processing, particularly for datasets with missing or unreliable values. This study introduces a novel quantum-inspired imputation framework evaluated on the UCI Diabetes dataset, which contains biologically implausible missing values across several clinical features. The method integrates Principal Component Analysis (PCA) with quantum-assisted rotations, optimized through gradient-free classical optimizers (COBYLA, Simulated Annealing, and Differential Evolution), to reconstruct missing values while preserving statistical fidelity. Reconstructed values are constrained within ±2 standard deviations of original feature distributions, avoiding unrealistic clustering around central tendencies. This approach achieves a substantial and statistically significant improvement, including an average reduction of over 85% in Wasserstein distance and Kolmogorov-Smirnov test p-values between 0.18 and 0.22, compared to p-values > 0.99 in classical methods such as Mean, KNN, and MICE. The method also eliminates zero-value artifacts and enhances the realism and variability of imputed data. By combining quantum-inspired transformations with a scalable classical framework, this methodology provides a robust solution for imputation tasks in domains such as healthcare and AI pipelines, where data quality and integrity are crucial.
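The fidelity checks named in this abstract can be illustrated with a small sketch. It is not the authors' pipeline: the function names are hypothetical, the ±2 standard deviation constraint is assumed here to be a simple clip, and the distance and KS statistic are plain empirical versions written with the Python standard library (no p-value is computed).

```python
import bisect
import statistics


def clip_to_two_sigma(values, reference):
    """Clip values to mean +/- 2 sample standard deviations of `reference`.

    Assumption: the constraint is a hard clip; the abstract does not say
    how the bound is enforced.
    """
    mu = statistics.mean(reference)
    sigma = statistics.stdev(reference)
    lo, hi = mu - 2 * sigma, mu + 2 * sigma
    return [min(max(v, lo), hi) for v in values]


def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-size 1-D samples.

    For equal sample sizes this is the mean absolute difference
    between the sorted samples.
    """
    if len(a) != len(b):
        raise ValueError("this sketch only handles equal-size samples")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    sa, sb = sorted(a), sorted(b)
    return max(
        abs(bisect.bisect_right(sa, v) / len(sa) - bisect.bisect_right(sb, v) / len(sb))
        for v in sa + sb
    )
```

A lower Wasserstein distance and a smaller KS statistic both indicate that the imputed values follow the observed distribution more closely.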

Quantum SMOTE with Angular Outliers: Redefining Minority Class Handling

Jan 31, 2025

Abstract: This paper introduces Quantum-SMOTEV2, an advanced variant of the Quantum-SMOTE method, leveraging quantum computing to address class imbalance in machine learning datasets without K-Means clustering. Quantum-SMOTEV2 synthesizes data samples using swap tests and quantum rotation centered around a single data centroid, concentrating on the angular distribution of minority data points and the concept of angular outliers (AOL). Experimental results show significant enhancements in model performance metrics at moderate SMOTE levels (30-36%), which previously required up to 50% with the original method. Quantum-SMOTEV2 maintains essential features of its predecessor (arXiv:2402.17398), such as rotation angle, minority percentage, and splitting factor, allowing for tailored adaptation to specific dataset needs. The method is scalable, utilizing compact swap tests and low-depth quantum circuits to accommodate a large number of features. Evaluation on the public Cell-to-Cell Telecom dataset with Random Forest (RF), K-Nearest Neighbours (KNN) Classifier, and Neural Network (NN) illustrates that integrating Angular Outliers modestly boosts classification metrics like accuracy, F1 Score, AUC-ROC, and AUC-PR across different proportions of synthetic data, highlighting the effectiveness of Quantum-SMOTEV2 in enhancing model performance for edge cases.

A Quantum Approach to Synthetic Minority Oversampling Technique (SMOTE)

Feb 28, 2024

Abstract: The paper proposes the Quantum-SMOTE method, a novel solution that uses quantum computing techniques to solve the prevalent problem of class imbalance in machine learning datasets. Quantum-SMOTE, inspired by the Synthetic Minority Oversampling Technique (SMOTE), generates synthetic data points using quantum processes such as swap tests and quantum rotation. The process departs from the conventional SMOTE algorithm's use of K-Nearest Neighbors (KNN) and Euclidean distances, enabling synthetic instances to be generated from minority class data points without relying on neighbor proximity. The algorithm offers greater control over the synthetic data generation process by introducing hyperparameters such as rotation angle, minority percentage, and splitting factor, which allow for customization to specific dataset requirements. The approach is tested on a public TelecomChurn dataset and evaluated alongside two prominent classification algorithms, Random Forest and Logistic Regression, to determine its impact across varying proportions of synthetic data.
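As a rough illustration of the swap test mentioned above, the sketch below computes the ideal (noise-free) probability of measuring the ancilla in state 0, which for normalized states is 1/2 + 1/2 |⟨a|b⟩|², and inverts it to recover the angle between two real-valued vectors. This is a classical calculation of the textbook swap-test statistics, not the paper's circuit; the function names are hypothetical.

```python
import math


def normalize(v):
    """Scale a real vector to unit length so it can stand in for a quantum state."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize the zero vector")
    return [x / norm for x in v]


def swap_test_p0(a, b):
    """Ideal probability that the swap-test ancilla is measured as 0."""
    a, b = normalize(a), normalize(b)
    overlap = sum(x * y for x, y in zip(a, b))
    return 0.5 + 0.5 * overlap ** 2


def angle_between(a, b):
    """Angle (in radians, within [0, pi/2]) implied by the swap-test probability."""
    fidelity = max(0.0, min(1.0, 2 * swap_test_p0(a, b) - 1))
    return math.acos(math.sqrt(fidelity))
```

Identical states give a probability of 1 and orthogonal states give 1/2, so the statistic measures angular similarity rather than Euclidean distance.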

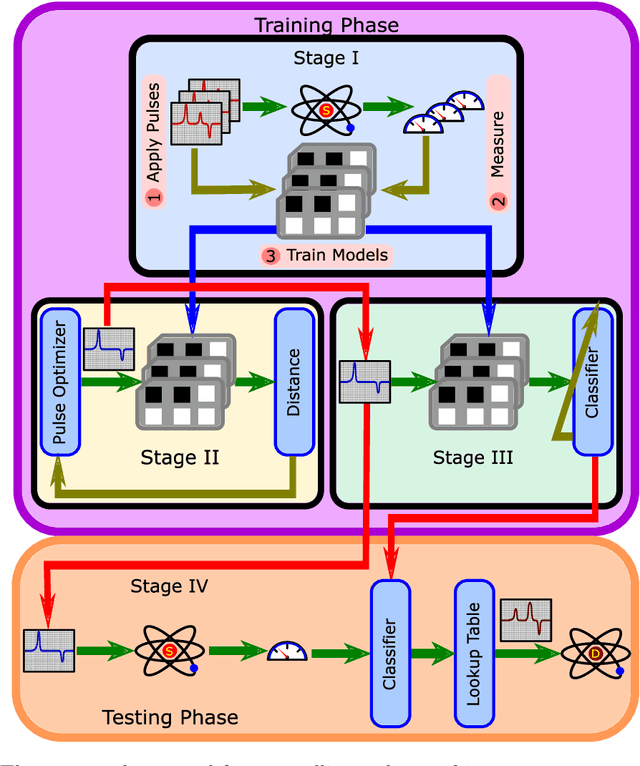

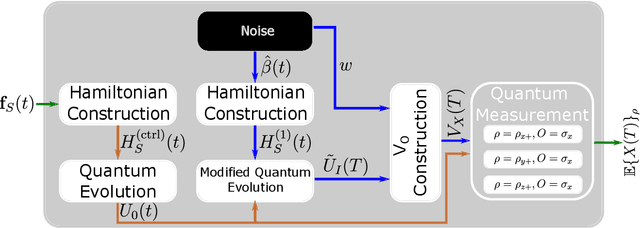

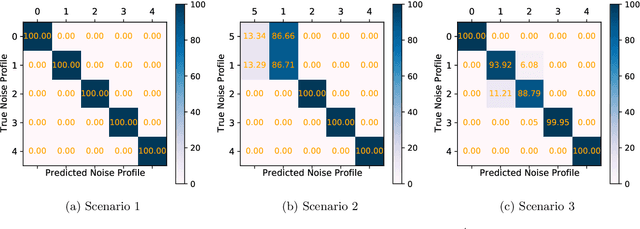

Noise Detection with Spectator Qubits and Quantum Feature Engineering

Mar 24, 2021

Abstract: Designing optimal control pulses that drive a noisy qubit to a target state is a challenging and crucial task for quantum engineering. In a situation where the properties of the quantum noise affecting the system are dynamic, a periodic characterization procedure is essential to ensure the models are updated. As a result, the operation of the qubit is disrupted frequently. In this paper, we propose a protocol that addresses this challenge by making use of a spectator qubit to monitor the noise in real time. We develop a quantum machine-learning-based quantum feature engineering approach for designing the protocol. The complexity of the protocol is front-loaded in a characterization phase, which allows real-time execution during the quantum computations. We present the results of numerical simulations that showcase the favorable performance of the protocol.

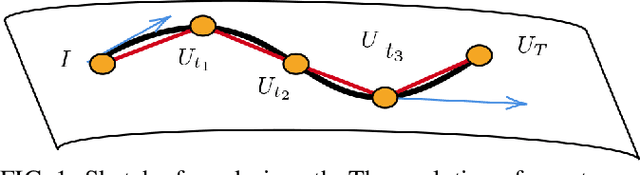

Quantum Geometric Machine Learning for Quantum Circuits and Control

Jul 07, 2020

Abstract: The application of machine learning techniques to solve problems in quantum control together with established geometric methods for solving optimisation problems leads naturally to an exploration of how machine learning approaches can be used to enhance geometric approaches to solving problems in quantum information processing. In this work, we review and extend the application of deep learning to quantum geometric control problems. Specifically, we demonstrate enhancements in time-optimal control in the context of quantum circuit synthesis problems by applying novel deep learning algorithms in order to approximate geodesics (and thus minimal circuits) along Lie group manifolds relevant to low-dimensional multi-qubit systems, such as SU(2), SU(4) and SU(8). We demonstrate the superior performance of greybox models, which combine traditional blackbox algorithms with prior domain knowledge of quantum mechanics, as a means of learning underlying quantum circuit distributions of interest. Our results demonstrate how geometric control techniques can be used to both (a) verify the extent to which geometrically synthesised quantum circuits lie along geodesic, and thus time-optimal, routes and (b) synthesise those circuits. Our results are of interest to researchers in quantum control and quantum information theory seeking to combine machine learning and geometric techniques for time-optimal control problems.

Robust Online Hamiltonian Learning

Sep 18, 2012

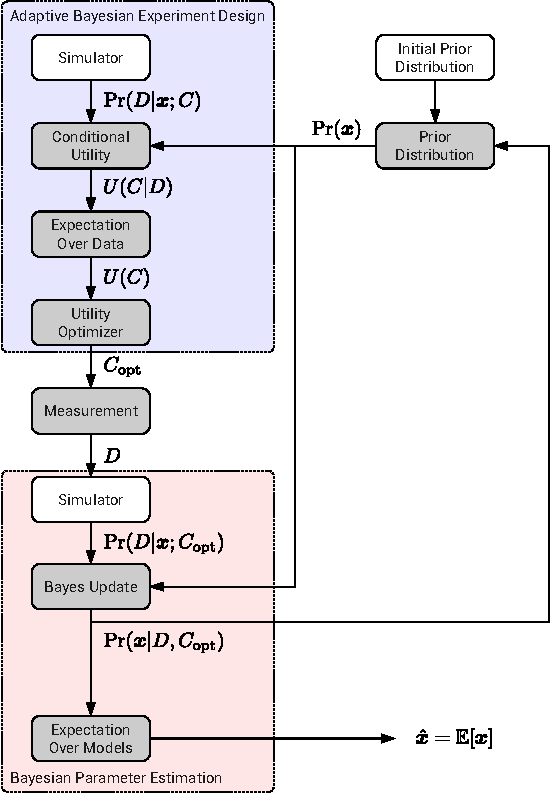

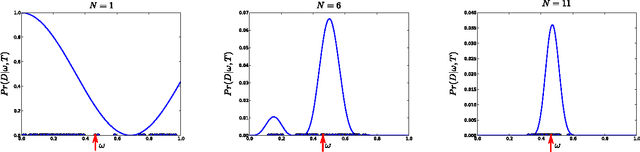

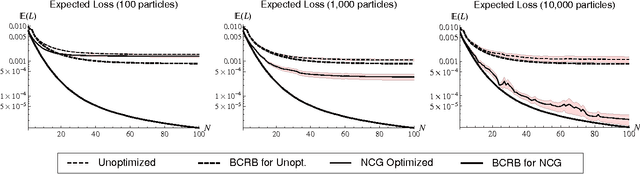

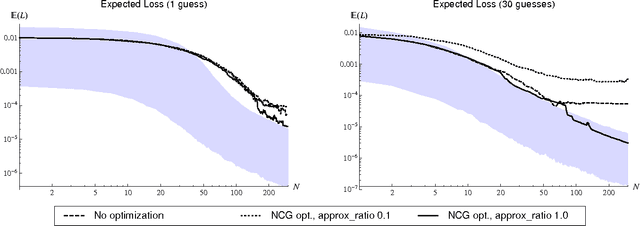

Abstract: In this work we combine two distinct machine learning methodologies, sequential Monte Carlo and Bayesian experimental design, and apply them to the problem of inferring the dynamical parameters of a quantum system. We design the algorithm with practicality in mind by including parameters that control trade-offs between the requirements on computational and experimental resources. The algorithm can be implemented online (during experimental data collection), avoiding the need for storage and post-processing. Most importantly, our algorithm is capable of learning Hamiltonian parameters even when the parameters change from experiment to experiment, and also when additional noise processes are present and unknown. The algorithm also numerically estimates the Cramér-Rao lower bound, certifying its own performance.

* 24 pages, 12 figures; to appear in New Journal of Physics
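A minimal sketch of the sequential Monte Carlo idea in the abstract above, under several assumptions that are not taken from the paper: a single unknown frequency `omega` with the two-outcome likelihood Pr(0 | omega, t) = cos²(omega·t/2), a fixed (non-adaptive) schedule of evolution times in place of Bayesian experimental design, and a simple jittered multinomial resampler. All names are hypothetical.

```python
import math
import random


def likelihood(outcome, omega, t):
    """Pr(outcome | omega, t) for the assumed model Pr(0) = cos^2(omega * t / 2)."""
    p0 = math.cos(omega * t / 2.0) ** 2
    return p0 if outcome == 0 else 1.0 - p0


def smc_estimate(true_omega=0.7, n_particles=2000, n_experiments=100, seed=0):
    """Estimate omega online from simulated single-shot measurements."""
    rng = random.Random(seed)
    # Particles drawn from a uniform prior on [0, 1], all with equal weight.
    particles = [rng.random() for _ in range(n_particles)]
    weights = [1.0 / n_particles] * n_particles

    for k in range(n_experiments):
        t = 1.0 + (k % 20)  # assumed fixed schedule of evolution times
        outcome = 0 if rng.random() < likelihood(0, true_omega, t) else 1

        # Bayes update: reweight each particle by the likelihood of the datum.
        weights = [w * likelihood(outcome, x, t) for w, x in zip(weights, particles)]
        total = sum(weights)
        weights = [w / total for w in weights]

        # Resample when the effective sample size drops below half the particles.
        ess = 1.0 / sum(w * w for w in weights)
        if ess < n_particles / 2:
            mean = sum(w * x for w, x in zip(weights, particles))
            var = sum(w * (x - mean) ** 2 for w, x in zip(weights, particles))
            jitter = 0.1 * math.sqrt(var)  # small spread to keep particles distinct
            drawn = rng.choices(particles, weights=weights, k=n_particles)
            particles = [x + rng.gauss(0.0, jitter) for x in drawn]
            weights = [1.0 / n_particles] * n_particles

    # Posterior mean as the point estimate.
    return sum(w * x for w, x in zip(weights, particles))
```

Because each datum is folded into the weights as it arrives, nothing needs to be stored for post-processing, which is the online property the abstract refers to.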
