Christopher C Holmes

Multi-Facet Clustering Variational Autoencoders

Jun 09, 2021

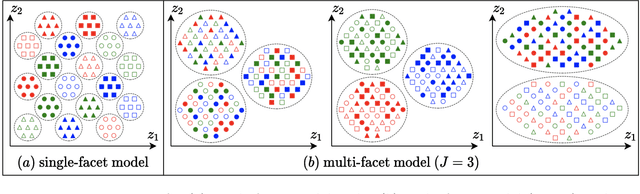

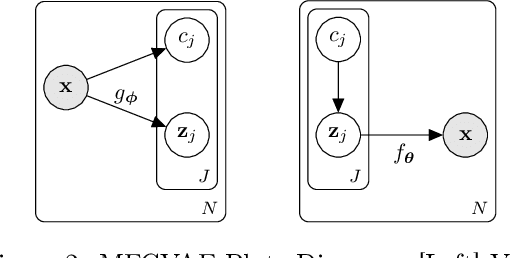

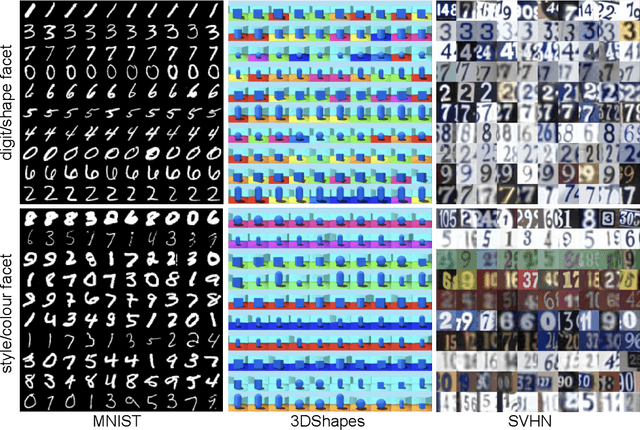

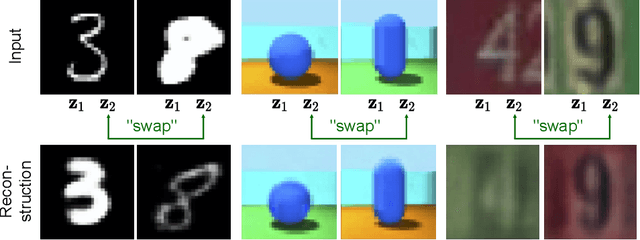

Abstract: Work in deep clustering focuses on finding a single partition of data. However, high-dimensional data, such as images, typically feature multiple interesting characteristics one could cluster over. For example, images of objects against a background could be clustered by the shape of the object and, separately, by the colour of the background. In this paper, we introduce Multi-Facet Clustering Variational Autoencoders (MFCVAE), a novel class of variational autoencoders with a hierarchy of latent variables, each with a Mixture-of-Gaussians prior, that learns multiple clusterings simultaneously and is trained fully unsupervised and end-to-end. MFCVAE uses a progressively-trained ladder architecture, which leads to highly stable performance. We provide novel theoretical results for optimising the ELBO analytically with respect to the categorical variational posterior distribution, which correct earlier influential theoretical work. On image benchmarks, we demonstrate that our approach separates out and clusters over different aspects of the data in a disentangled manner. We also show two further advantages of our model: its latent space is compositional, and it enables controlled generation of samples.
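The idea of optimising the ELBO analytically over the categorical posterior can be illustrated with the standard mean-field argument. This is a minimal sketch, not the paper's theorem or code. It assumes a single facet, a diagonal-Gaussian encoder $q(z|x) = \mathcal{N}(m, \mathrm{diag}(s^2))$ held fixed, and a prior $p(c)\,p(z|c)$ with $p(c) = \pi_c$ and $p(z|c) = \mathcal{N}(\mu_c, \mathrm{diag}(\sigma_c^2))$. Under these assumptions, the ELBO terms that depend on $q(c|x)$ are maximised by $q(c|x) \propto \pi_c \exp\left(\mathbb{E}_{q(z|x)}[\log p(z|c)]\right)$, and the expectation has a closed form for Gaussians. The function names `expected_log_normal` and `optimal_categorical_posterior` are hypothetical.

```python
import math

def expected_log_normal(m, s2, mu, sigma2):
    """E_{z ~ N(m, diag(s2))}[log N(z; mu, diag(sigma2))], summed over dimensions.

    Uses E[(z - mu)^2] = s2 + (m - mu)^2 for each dimension.
    """
    total = 0.0
    for m_d, s2_d, mu_d, sig2_d in zip(m, s2, mu, sigma2):
        total += -0.5 * (math.log(2 * math.pi * sig2_d)
                         + (s2_d + (m_d - mu_d) ** 2) / sig2_d)
    return total

def optimal_categorical_posterior(m, s2, pis, mus, sigma2s):
    """Assumed mean-field optimum: q(c|x) proportional to pi_c * exp(E_q(z|x)[log p(z|c)])."""
    logits = [math.log(pi) + expected_log_normal(m, s2, mu, sig2)
              for pi, mu, sig2 in zip(pis, mus, sigma2s)]
    # Normalise with the log-sum-exp trick for numerical stability.
    top = max(logits)
    weights = [math.exp(l - top) for l in logits]
    norm = sum(weights)
    return [w / norm for w in weights]

# Toy usage: the encoder mean sits near the first of two clusters.
q = optimal_categorical_posterior(
    m=[0.1, -0.2], s2=[0.05, 0.05],
    pis=[0.5, 0.5],
    mus=[[0.0, 0.0], [3.0, 3.0]],
    sigma2s=[[1.0, 1.0], [1.0, 1.0]],
)
# q[0] is approximately 0.9999
```

In a multi-facet model, the posterior may factorise across facets as $\prod_j q(z_j|x)\,q(c_j|x)$. If it does, the same update would apply to each facet separately. That factorisation is an assumption of this sketch.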

Semi-Unsupervised Learning with Deep Generative Models: Clustering and Classifying using Ultra-Sparse Labels

Jan 24, 2019

Abstract: We introduce *semi-unsupervised learning*, an extreme case of semi-supervised learning with ultra-sparse categorisation where some classes have no labels in the training set. That is, in the training data some classes are sparsely labelled and other classes appear only as unlabelled data. Many real-world datasets plausibly have this structure. We demonstrate that effective learning in this regime is only possible when a model is capable of capturing both semi-supervised and unsupervised learning. We develop two deep generative models for classification in this regime that extend previous deep generative models designed for semi-supervised learning. By changing their probabilistic structure to contain a mixture of Gaussians in their continuous latent space, these new models can learn in both unsupervised and semi-unsupervised paradigms. We demonstrate their performance for both semi-unsupervised and unsupervised learning on various standard datasets. We show that our models can learn in a semi-unsupervised manner on Fashion-MNIST. Here we artificially mask out all labels for half of the classes of data and keep $2\%$ of labels for the remaining classes. Our model is able to learn effectively, obtaining a trained classifier with $(77.2\pm1.3)\%$ test set accuracy. We can also train on Fashion-MNIST unsupervised, obtaining $(75.2\pm1.5)\%$ test set accuracy. Additionally, doing the same for MNIST unsupervised, we get $(96.3\pm0.9)\%$ test set accuracy, which is state-of-the-art for fully probabilistic deep generative models.
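The Fashion-MNIST protocol in this abstract masks all labels for half of the classes and keeps $2\%$ of labels for the rest. It can be sketched as a data-preparation step. This is a minimal sketch under assumptions the abstract does not specify: the labelled half of the classes is chosen at random, the $2\%$ is taken per class, and masked labels are marked with a hypothetical sentinel `-1`. The function name `semi_unsupervised_mask` is also hypothetical.

```python
import random
from collections import defaultdict

UNLABELLED = -1  # hypothetical sentinel meaning "label masked out"

def semi_unsupervised_mask(labels, num_classes, keep_fraction=0.02, seed=0):
    """Return (masked_labels, labelled_classes) for a semi-unsupervised split.

    Assumptions: half of the classes (chosen at random) keep a small fraction
    of their labels, taken per class; every other label is set to UNLABELLED.
    """
    rng = random.Random(seed)
    classes = list(range(num_classes))
    rng.shuffle(classes)
    labelled_classes = set(classes[: num_classes // 2])

    # Group example indices by their true class.
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)

    masked = [UNLABELLED] * len(labels)
    for y in labelled_classes:
        idx = by_class[y]
        # Keep at least one label per labelled class when the class is non-empty.
        n_keep = min(len(idx), max(1, round(keep_fraction * len(idx))))
        for i in rng.sample(idx, n_keep):
            masked[i] = y
    return masked, labelled_classes
```

For a balanced toy label list with 1,000 examples in each of 10 classes, this keeps 20 labels in each of 5 labelled classes, 100 labels in total. All examples from the other 5 classes appear only as unlabelled data.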
