Christian Friedrich

A Unified Control Architecture for Macro-Micro Manipulation using a Active Remote Center of Compliance for Manufacturing Applications

Feb 02, 2026

Abstract:Macro-micro manipulators combine a macro manipulator with a large workspace, such as an industrial robot, with a lightweight, high-bandwidth micro manipulator. This enables highly dynamic interaction control while preserving the wide workspace of the robot. Traditionally, position control is assigned to the macro manipulator, while the micro manipulator handles the interaction with the environment, limiting the achievable interaction control bandwidth. To solve this, we propose a novel control architecture that incorporates the macro manipulator into the active interaction control. This leads to an increase in control bandwidth by a factor of 2.1 compared to the state-of-the-art architecture based on the leader-follower approach, and by a factor of 12.5 compared to traditional robot-based force control. Further, we propose surrogate models for more efficient controller design and easy adaptation to hardware changes. We validate our approach by comparing it against the other control schemes in different experiments, such as collision with an object, following a force trajectory, and industrial assembly tasks.

Automated Generation of Precedence Graphs in Digital Value Chains for Automotive Production

Apr 28, 2025

Abstract:This study examines the digital value chain in automotive manufacturing, focusing on the identification, software flashing, customization, and commissioning of electronic control units in vehicle networks. A novel precedence graph design is proposed to optimize this process chain using an automated scheduling algorithm that employs mixed integer linear programming techniques. The results show significant improvements in key metrics. The algorithm reduces the number of production stations equipped with expensive hardware and software to execute digital value chain processes, while increasing capacity utilization through efficient scheduling and reduced idle time. Task parallelization is optimized, resulting in streamlined workflows and increased throughput. Compared to the traditional method, the automated approach has reduced preparation time by 50% and reduced scheduling activities, as it now takes two minutes to create the precedence graph. The flexibility of the algorithm's constraints allows for vehicle-specific configurations while maintaining high responsiveness, eliminating backup stations and facilitating the integration of new topologies. Automated scheduling significantly outperforms manual methods in efficiency, functionality, and adaptability.
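To make the idea of a precedence graph concrete, the following is a minimal sketch that computes earliest start times for a handful of tasks once their predecessors are known. It is not the mixed integer linear programming formulation from the abstract; it only illustrates the precedence structure with a plain topological pass. The task names, durations, and the function `earliest_start_times` are hypothetical, chosen for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def earliest_start_times(durations, predecessors):
    """Return the earliest start time of each task in a precedence graph.

    durations:    dict mapping task -> duration (hypothetical time units)
    predecessors: dict mapping task -> set of tasks that must finish first
    """
    # static_order() yields every task after all of its predecessors.
    order = TopologicalSorter(predecessors).static_order()
    start = {}
    for task in order:
        preds = predecessors.get(task, ())
        # A task can start once its slowest predecessor has finished.
        start[task] = max((start[p] + durations[p] for p in preds), default=0)
    return start


# Hypothetical example: process steps for one electronic control unit.
durations = {"identify": 1, "flash": 5, "customize": 2, "commission": 3}
predecessors = {
    "flash": {"identify"},
    "customize": {"flash"},
    "commission": {"flash"},
}
starts = earliest_start_times(durations, predecessors)
makespan = max(starts[t] + durations[t] for t in durations)
```

In this toy graph, customization and commissioning can run in parallel after flashing. A full scheduler would additionally have to assign tasks to stations under capacity constraints, which is where an optimization formulation comes in.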

QBIT: Quality-Aware Cloud-Based Benchmarking for Robotic Insertion Tasks

Mar 10, 2025

Abstract:Insertion tasks are fundamental yet challenging for robots, particularly in autonomous operations, due to their continuous interaction with the environment. AI-based approaches appear to be up to the challenge, but in production they must not only achieve high success rates but also ensure insertion quality and reliability. To address this, we introduce QBIT, a quality-aware benchmarking framework that incorporates additional metrics such as force energy, force smoothness and completion time to provide a comprehensive assessment. To ensure statistical significance and minimize the sim-to-real gap, we randomize contact parameters in the MuJoCo simulator, account for perceptual uncertainty, and conduct large-scale experiments on a Kubernetes-based infrastructure. Our microservice-oriented architecture ensures extensibility, broad applicability, and improved reproducibility. To facilitate seamless transitions to physical robotic testing, we use ROS2 with containerization to reduce integration barriers. We evaluate QBIT using three insertion approaches: geometric-based, force-based, and learning-based, in both simulated and real-world environments. In simulation, we compare the accuracy of contact simulation using different mesh decomposition techniques. Our results demonstrate the effectiveness of QBIT in comparing different insertion approaches and accelerating the transition from laboratory to real-world applications. Code is available on GitHub.
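As an illustration of what quality metrics of this kind might look like, here is a minimal sketch that computes a force energy, a force smoothness value, and a completion time from a uniformly sampled force signal. The abstract does not give the formulas, so the definitions below (integral of squared force, mean absolute force rate, duration spanned by the samples) are assumptions, and the function names are hypothetical rather than part of QBIT.

```python
from typing import Sequence


def force_energy(forces: Sequence[float], dt: float) -> float:
    """Assumed definition: integral of squared force (rectangle rule)."""
    return sum(f * f for f in forces) * dt


def force_smoothness(forces: Sequence[float], dt: float) -> float:
    """Assumed definition: mean absolute force rate; lower means smoother."""
    if len(forces) < 2:
        return 0.0
    rates = [abs(b - a) / dt for a, b in zip(forces, forces[1:])]
    return sum(rates) / len(rates)


def completion_time(forces: Sequence[float], dt: float) -> float:
    """Duration spanned by the samples, from the first to the last one."""
    return max(len(forces) - 1, 0) * dt


# Hypothetical contact force samples in newtons, sampled every 0.5 s.
samples = [0.0, 2.0, 2.0, 0.0]
energy = force_energy(samples, dt=0.5)
smoothness = force_smoothness(samples, dt=0.5)
duration = completion_time(samples, dt=0.5)
```

Reporting such values alongside the success rate lets two approaches with the same success rate still be distinguished by how gently and how quickly they complete the insertion.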

Evaluation of Artificial Intelligence Methods for Lead Time Prediction in Non-Cycled Areas of Automotive Production

Jan 15, 2025

Abstract:The present study examines the effectiveness of applying Artificial Intelligence methods in an automotive production environment to predict unknown lead times in a non-cycle-controlled production area. Data structures are analyzed to identify contextual features and then preprocessed using one-hot encoding. Method selection focuses on supervised machine learning techniques, for which both regression and classification methods are evaluated. Continuous regression based on target size distribution is not feasible. Analysis of the classification methods shows that Ensemble Learning and Support Vector Machines are the most suitable. Preliminary study results indicate that the gradient boosting algorithms LightGBM, XGBoost, and CatBoost yield the best results. After further testing and extensive hyperparameter optimization, the final method choice is the LightGBM algorithm. Depending on feature availability and prediction interval granularity, relative prediction accuracies of up to 90% can be achieved. Further tests highlight the importance of periodic retraining of AI models to accurately represent complex production processes using the database. The research demonstrates that AI methods can be effectively applied to highly variable production data, adding business value by providing an additional metric for various control tasks while outperforming current non-AI-based systems.
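The preprocessing described here can be sketched in a few lines. The example below one-hot encodes a categorical feature and maps a continuous lead time onto a class index, which is one plausible way to turn lead time prediction into a classification problem with a chosen interval granularity. The feature names, the interval width, and both function names are hypothetical; the study's actual features and class boundaries are not given in the abstract.

```python
def one_hot_encode(rows, column):
    """Replace a categorical column with one 0/1 column per category."""
    categories = sorted({row[column] for row in rows})
    encoded = []
    for row in rows:
        # Copy all other features unchanged.
        new_row = {k: v for k, v in row.items() if k != column}
        for cat in categories:
            new_row[f"{column}={cat}"] = 1 if row[column] == cat else 0
        encoded.append(new_row)
    return encoded


def lead_time_to_class(lead_time_hours, interval_hours):
    """Map a continuous lead time onto the index of its prediction interval."""
    return int(lead_time_hours // interval_hours)


# Hypothetical records from a non-cycled production area.
rows = [
    {"variant": "A", "parts": 3},
    {"variant": "B", "parts": 5},
]
encoded = one_hot_encode(rows, "variant")
label = lead_time_to_class(7.5, interval_hours=4.0)
```

A coarser interval yields fewer classes and usually an easier classification problem, which matches the abstract's remark that accuracy depends on the prediction interval granularity.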

Data-driven tool wear prediction in milling, based on a process-integrated single-sensor approach

Dec 27, 2024

Abstract:Accurate tool wear prediction is essential for maintaining productivity and minimizing costs in machining. However, the complex nature of the tool wear process poses significant challenges to achieving reliable predictions. This study explores data-driven methods, in particular deep learning, for tool wear prediction. Traditional data-driven approaches often focus on a single process, relying on multi-sensor setups and extensive data generation, which limits generalization to new settings. Moreover, multi-sensor integration is often impractical in industrial environments. To address these limitations, this research investigates the transferability of predictive models using minimal training data, validated across two processes. Furthermore, it uses a simple setup with a single acceleration sensor to establish a low-cost data generation approach that facilitates the generalization of models to other processes via transfer learning. The study evaluates several machine learning models, including convolutional neural networks (CNN), long short-term memory networks (LSTM), support vector machines (SVM) and decision trees, trained on different input formats such as feature vectors and short-time Fourier transform (STFT). The performance of the models is evaluated on different amounts of training data, including scenarios with significantly reduced datasets, providing insight into their effectiveness under constrained data conditions. The results demonstrate the potential of specific models and configurations for effective tool wear prediction, contributing to the development of more adaptable and efficient predictive maintenance strategies in machining. Notably, the ConvNeXt model performs exceptionally well, achieving 99.1% accuracy in identifying tool wear using data from only four milling tools operated until they are worn.

Highly dynamic physical interaction for robotics: design and control of an active remote center of compliance

Sep 16, 2024

Abstract:Robot interaction control is often limited to low dynamics or low flexibility, depending on whether an active or passive approach is chosen. In this work, we introduce a hybrid control scheme that combines the advantages of active and passive interaction control. To accomplish this, we propose the design of a novel Active Remote Center of Compliance (ARCC), which is based on a passive and an active element and can be used to directly control the interaction forces. We introduce surrogate models for a dynamic comparison against purely robot-based interaction schemes. In a comparative validation, ARCC drastically improves the interaction dynamics, leading to an increase in the motion bandwidth of up to 31 times. We further introduce our control approach as well as its integration in the robot controller. Finally, we analyze ARCC on different industrial benchmarks such as peg-in-hole, top-hat rail assembly and contour following problems and compare it against the state of the art to highlight its dynamics and flexibility. The proposed system is especially suited to applications that require a low cycle time combined with sensitive manipulation.

PIPE: Process Informed Parameter Estimation, a learning based approach to task generalized system identification

May 11, 2024

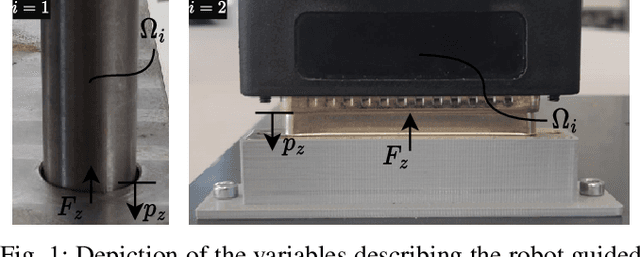

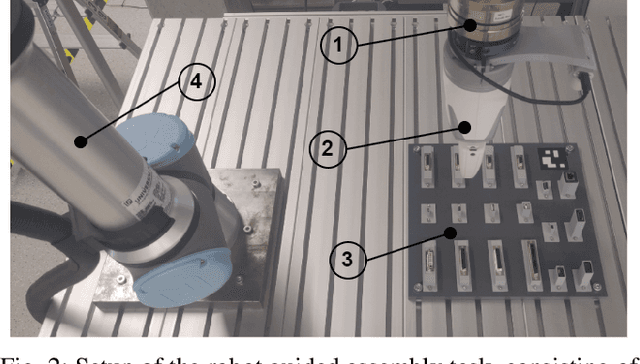

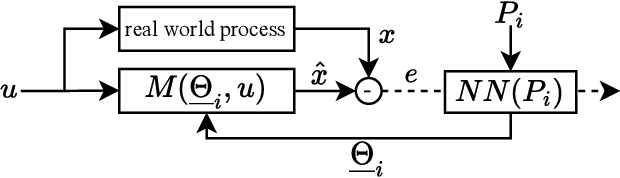

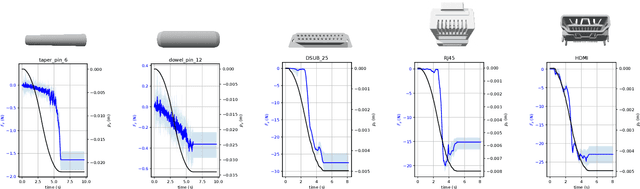

Abstract:We address the problem of robot-guided assembly tasks by using a learning-based approach to identify contact model parameters for known and novel parts. First, a Variational Autoencoder (VAE) is used to extract geometric features of assembly parts. Then, we combine the extracted features with physical knowledge to derive the parameters of a contact model using our newly proposed neural network structure. The measured force from real experiments is used to supervise the predicted forces, thus avoiding the need for ground truth model parameters. Although the network was trained only on a small set of assembly parts, good contact model estimation for unknown objects was achieved. Our main contribution is the network structure that allows us to estimate contact models of assembly tasks depending on the geometry of the part to be joined. Where current system identification processes have to record new data for a new assembly process, our method only requires the 3D model of the assembly part. We evaluate our method by estimating contact models for robot-guided assembly tasks of pin connectors as well as electronic plugs and compare the results with real experiments.

Robot Agnostic Visual Servoing considering kinematic constraints enabled by a decoupled network trajectory planner structure

May 11, 2024

Abstract:We propose a visual servoing method consisting of a detection network and a velocity trajectory planner. First, the detection network estimates the object's position and orientation in the image space. These are then normalized and filtered. The direction and orientation are then the input to the trajectory planner, which considers the kinematic constraints of the robotic system used. This allows safe and stable control, since the kinematic boundary values are taken into account in planning. Also, because direction estimation and velocity planning are separated, the learning part of the method does not directly influence the control value. This also enables the transfer of the method to different robotic systems without retraining, making it robot agnostic. We evaluate our method on different visual servoing tasks with and without clutter on two different robotic systems. Our method achieved mean absolute position errors of <0.5 mm and orientation errors of <1°. Additionally, we transferred the method to a new system which differs in robot and camera, emphasizing the robot-agnostic capability of our method.
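The decoupling described in this abstract can be illustrated with a minimal sketch of a velocity planner that takes a normalized direction command and enforces velocity and acceleration limits per axis. This is not the planner from the paper; the function `plan_velocity`, its parameters, and the simple clamping scheme are assumptions made for illustration.

```python
def plan_velocity(direction, prev_velocity, v_max, a_max, dt):
    """Turn a normalized direction into a velocity command within limits.

    direction:     per-axis command in [-1, 1], e.g. from a detection network
    prev_velocity: per-axis velocity commanded in the previous cycle
    v_max, a_max:  assumed kinematic limits of the robot (same for all axes)
    dt:            control cycle time in seconds
    """
    planned = []
    max_step = a_max * dt  # largest velocity change allowed in one cycle
    for d, v_prev in zip(direction, prev_velocity):
        d = max(-1.0, min(1.0, d))   # keep the learned output in range
        v_target = d * v_max         # velocity limit is respected by design
        step = max(-max_step, min(max_step, v_target - v_prev))
        planned.append(v_prev + step)  # acceleration limit is respected
    return planned


# Hypothetical limits: 0.2 m/s, 1.0 m/s^2, 10 ms control cycle.
command = plan_velocity([1.0, -0.5], [0.0, 0.0], v_max=0.2, a_max=1.0, dt=0.01)
```

Because only `v_max` and `a_max` depend on the robot, swapping the robot means changing these limits while the detection network stays untouched, which is the sense in which such a structure can be robot agnostic.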

Robot Learning of 6 DoF Grasping using Model-based Adaptive Primitives

Mar 23, 2021

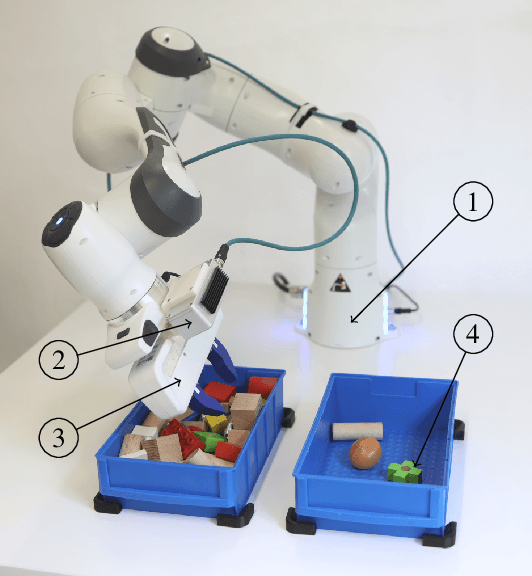

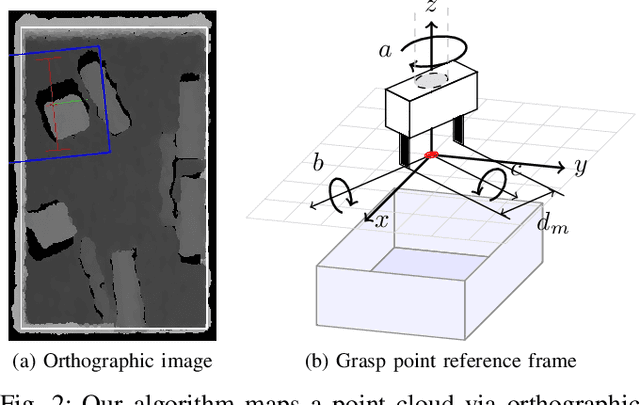

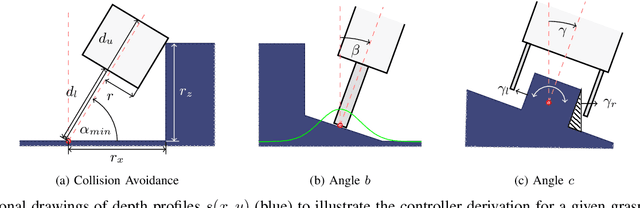

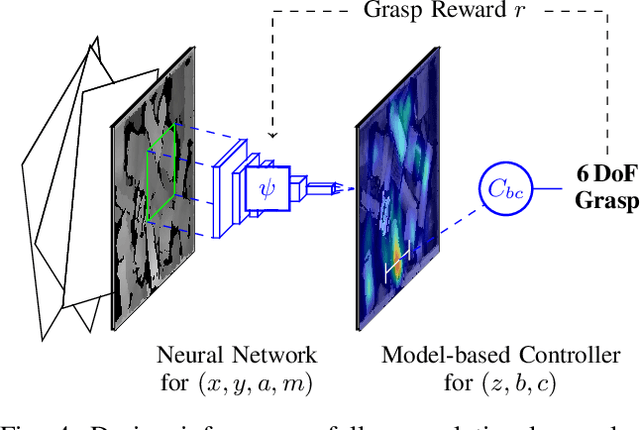

Abstract:Robot learning is often simplified to planar manipulation due to its data consumption. Then, a common approach is to use a fully-convolutional neural network to estimate the reward of grasp primitives. In this work, we extend this approach by parametrizing the two remaining, lateral Degrees of Freedom (DoFs) of the primitives. We apply this principle to the task of 6 DoF bin picking: We introduce a model-based controller to calculate angles that avoid collisions and maximize the grasp quality while keeping the uncertainty small. As the controller is integrated into the training, our hybrid approach is able to learn about and exploit the model-based controller. After real-world training of 27000 grasp attempts, the robot is able to grasp known objects with a success rate of over 92% in dense clutter. Grasp inference takes less than 50 ms. In further real-world experiments, we evaluate grasp rates in a range of scenarios, including the system's ability to generalize to unknown objects. We show that the system is able to avoid collisions, enabling grasps that would not be possible without primitive adaptation.
