Constantin Schempp

QBIT: Quality-Aware Cloud-Based Benchmarking for Robotic Insertion Tasks

Mar 10, 2025

Abstract: Insertion tasks are fundamental yet challenging for robots, particularly in autonomous operations, due to their continuous interaction with the environment. AI-based approaches appear to be up to the challenge, but in production they must achieve not only high success rates but also insertion quality and reliability. To address this, we introduce QBIT, a quality-aware benchmarking framework that incorporates additional metrics such as force energy, force smoothness, and completion time to provide a comprehensive assessment. To ensure statistical significance and minimize the sim-to-real gap, we randomize contact parameters in the MuJoCo simulator, account for perceptual uncertainty, and conduct large-scale experiments on a Kubernetes-based infrastructure. Our microservice-oriented architecture ensures extensibility, broad applicability, and improved reproducibility. To facilitate seamless transitions to physical robotic testing, we use ROS2 with containerization to reduce integration barriers. We evaluate QBIT using three insertion approaches (geometric-based, force-based, and learning-based) in both simulated and real-world environments. In simulation, we compare the accuracy of contact simulation using different mesh decomposition techniques. Our results demonstrate the effectiveness of QBIT in comparing different insertion approaches and accelerating the transition from laboratory to real-world applications. Code is available on GitHub.
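The abstract names force energy, force smoothness, and completion time as quality metrics but does not define them. The following is a minimal sketch of how such metrics might be computed from a logged force trajectory. The function name `insertion_quality_metrics` and all three formulas (time integral of squared force magnitude, mean squared force rate, elapsed time) are assumptions made for illustration and are not taken from QBIT.

```python
import numpy as np


def insertion_quality_metrics(t, forces):
    """Illustrative quality metrics for one insertion attempt.

    t      : (N,) timestamps in seconds, strictly increasing
    forces : (N, 3) measured contact force in newtons

    All definitions below are assumptions, not the QBIT definitions.
    """
    t = np.asarray(t, dtype=float)
    forces = np.asarray(forces, dtype=float)
    magnitude = np.linalg.norm(forces, axis=1)
    dt = np.diff(t)

    # Assumed "force energy": trapezoidal integral of |F|^2 over time.
    squared = magnitude ** 2
    force_energy = float(np.sum(0.5 * (squared[1:] + squared[:-1]) * dt))

    # Assumed smoothness proxy: mean squared rate of change of |F|.
    # Lower values mean a smoother force profile.
    force_rate = np.diff(magnitude) / dt
    force_roughness = float(np.mean(force_rate ** 2))

    # Completion time: duration of the logged attempt.
    completion_time = float(t[-1] - t[0])

    return {
        "force_energy": force_energy,
        "force_roughness": force_roughness,
        "completion_time": completion_time,
    }


if __name__ == "__main__":
    # Constant 2 N force along z for 2 s.
    metrics = insertion_quality_metrics(
        [0.0, 1.0, 2.0],
        [[0.0, 0.0, 2.0]] * 3,
    )
    print(metrics)
```

Under these assumed definitions, two controllers with the same success rate can still be ranked by how much force they apply and how abruptly it changes.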

PIPE: Process Informed Parameter Estimation, a learning based approach to task generalized system identification

May 11, 2024

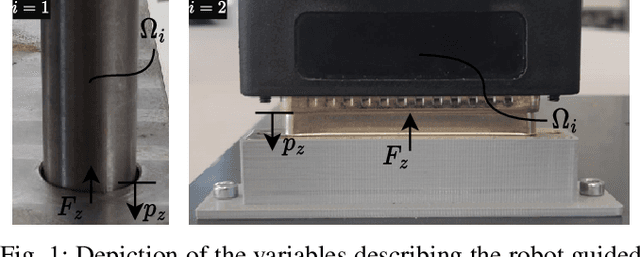

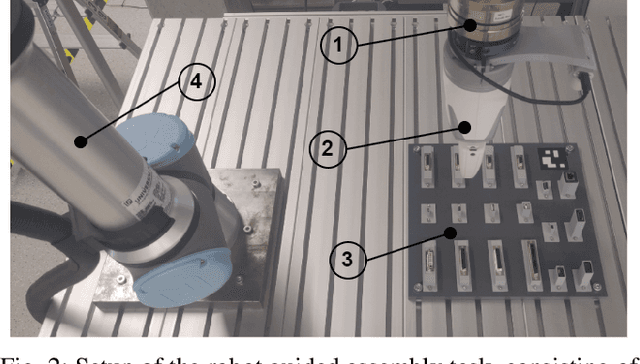

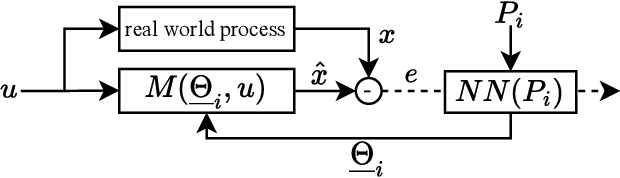

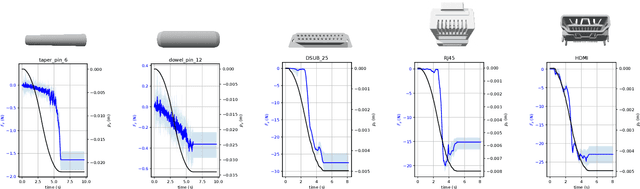

Abstract: We address the problem of robot-guided assembly tasks by using a learning-based approach to identify contact model parameters for known and novel parts. First, a Variational Autoencoder (VAE) is used to extract geometric features of assembly parts. Then, we combine the extracted features with physical knowledge to derive the parameters of a contact model using our newly proposed neural network structure. The measured force from real experiments is used to supervise the predicted forces, thus avoiding the need for ground truth model parameters. Although the network was trained only on a small set of assembly parts, good contact model estimation for unknown objects was achieved. Our main contribution is the network structure that allows us to estimate contact models of assembly tasks depending on the geometry of the part to be joined. Whereas current system identification processes have to record new data for each new assembly process, our method only requires the 3D model of the assembly part. We evaluate our method by estimating contact models for robot-guided assembly tasks of pin connectors as well as electronic plugs and compare the results with real experiments.
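The idea of supervising on measured force rather than on ground truth parameters can be illustrated with a small sketch. It assumes a one-dimensional spring-damper contact model with hypothetical parameters `stiffness` and `damping`; the abstract does not specify the contact model or the loss, so both are stand-ins chosen for illustration, and the VAE and network parts are omitted.

```python
import numpy as np


def contact_force(stiffness, damping, depth, velocity):
    """Assumed spring-damper contact model (not the paper's model).

    Force is zero when there is no penetration (depth <= 0).
    """
    depth = np.asarray(depth, dtype=float)
    velocity = np.asarray(velocity, dtype=float)
    in_contact = depth > 0.0
    return np.where(in_contact, stiffness * depth + damping * velocity, 0.0)


def force_supervision_loss(params, depth, velocity, measured_force):
    """Mean squared error between predicted and measured force.

    The loss never touches "true" parameters: only the force signal
    recorded in the experiment is needed as supervision.
    """
    stiffness, damping = params
    predicted = contact_force(stiffness, damping, depth, velocity)
    measured = np.asarray(measured_force, dtype=float)
    return float(np.mean((predicted - measured) ** 2))


if __name__ == "__main__":
    depth = np.array([0.0, 0.001, 0.002])      # metres
    velocity = np.array([0.01, 0.01, 0.01])    # metres per second
    measured = contact_force(1000.0, 10.0, depth, velocity)
    print(force_supervision_loss((1000.0, 10.0), depth, velocity, measured))
    print(force_supervision_loss((500.0, 10.0), depth, velocity, measured))
```

In a learning setup, `params` would be the output of a network conditioned on part geometry, and this loss would be minimized by gradient descent.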

Robot Agnostic Visual Servoing considering kinematic constraints enabled by a decoupled network trajectory planner structure

May 11, 2024

Abstract: We propose a visual servoing method consisting of a detection network and a velocity trajectory planner. First, the detection network estimates the object's position and orientation in the image space. These estimates are then normalized and filtered. The resulting direction and orientation are the input to the trajectory planner, which considers the kinematic constraints of the robotic system used. This allows safe and stable control, since the kinematic boundary values are taken into account in planning. Also, because direction estimation and velocity planning are separated, the learning part of the method does not directly influence the control value. This also enables the transfer of the method to different robotic systems without retraining, making it robot agnostic. We evaluate our method on different visual servoing tasks with and without clutter on two different robotic systems. Our method achieved mean absolute position errors of <0.5 mm and orientation errors of <1°. Additionally, we transferred the method to a new system which differs in robot and camera, emphasizing the robot-agnostic capability of our method.
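The decoupling described above, where a learned component supplies only a direction and a separate planner enforces kinematic limits, can be sketched as follows. This is a generic velocity- and acceleration-limited step, not the planner from the paper; the function name `plan_velocity_step` and the parameters `v_max`, `a_max`, and `dt` are assumptions for illustration.

```python
import numpy as np


def plan_velocity_step(direction, distance, v_prev, v_max, a_max, dt):
    """One step of an assumed velocity planner with kinematic limits.

    direction : estimated direction to the target (need not be unit length)
    distance  : estimated remaining distance to the target
    v_prev    : velocity commanded in the previous step
    v_max     : maximum allowed speed
    a_max     : maximum allowed acceleration
    dt        : control period in seconds
    """
    direction = np.asarray(direction, dtype=float)
    v_prev = np.asarray(v_prev, dtype=float)
    norm = np.linalg.norm(direction)

    if norm < 1e-9 or distance <= 0.0:
        # No usable direction or already at the target: aim for standstill.
        v_target = np.zeros_like(v_prev)
    else:
        # Cap the speed so the robot can still stop within the remaining
        # distance under a_max, and never exceed v_max.
        speed = min(v_max, float(np.sqrt(2.0 * a_max * distance)))
        v_target = speed * direction / norm

    # Limit the change in velocity to what a_max allows in one period.
    dv = v_target - v_prev
    dv_norm = np.linalg.norm(dv)
    max_dv = a_max * dt
    if dv_norm > max_dv:
        dv = dv * (max_dv / dv_norm)

    return v_prev + dv


if __name__ == "__main__":
    v = np.zeros(3)
    for _ in range(3):
        v = plan_velocity_step([1.0, 0.0, 0.0], 1.0, v, 0.1, 0.5, 0.01)
        print(v)
```

Because the limits live entirely in the planner, moving to a different robot in this sketch only means changing `v_max` and `a_max`; the component that produces `direction` is untouched.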
