Choh Man Teng

The Role of Semantic Parsing in Understanding Procedural Text

Feb 14, 2023

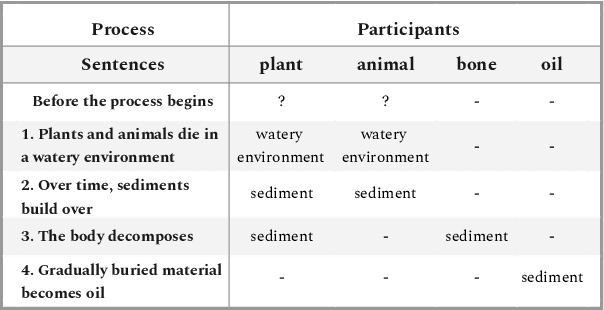

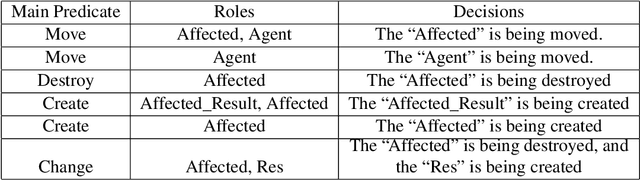

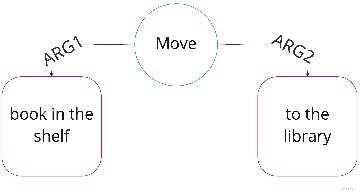

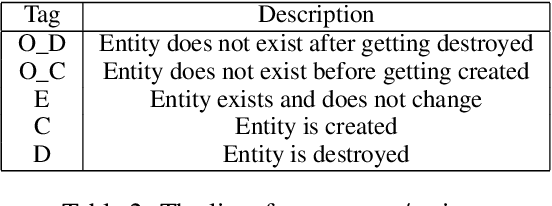

Abstract: In this paper, we investigate whether symbolic semantic representations, extracted from deep semantic parsers, can help reasoning over the states of involved entities in a procedural text. We consider a deep semantic parser (TRIPS) and semantic role labeling as two sources of semantic parsing knowledge. First, we propose PROPOLIS, a symbolic parsing-based procedural reasoning framework. Second, we integrate semantic parsing information into state-of-the-art neural models to conduct procedural reasoning. Our experiments indicate that explicitly incorporating such semantic knowledge improves procedural understanding. This paper presents new metrics for evaluating procedural reasoning tasks that clarify the challenges and identify differences among neural, symbolic, and integrated models.

A Broad-Coverage Deep Semantic Lexicon for Verbs

Jul 06, 2020

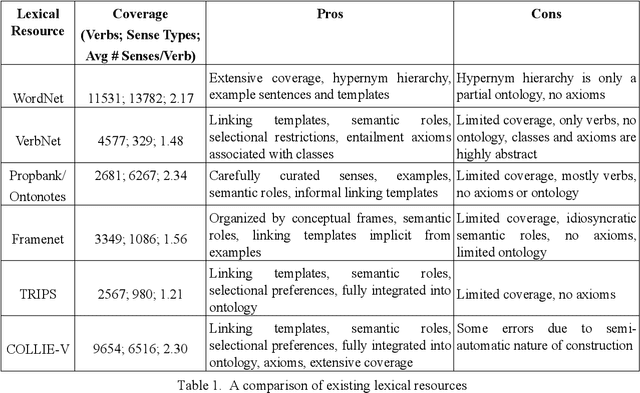

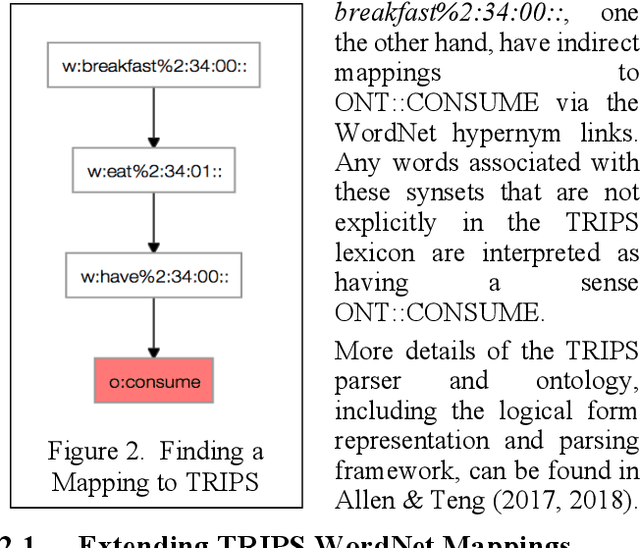

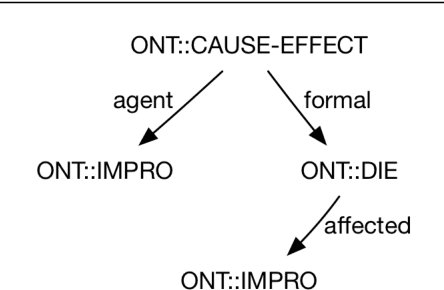

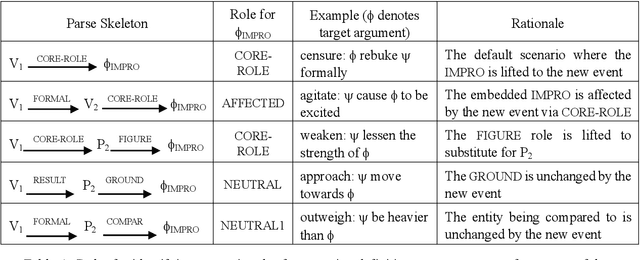

Abstract: Progress on deep language understanding is inhibited by the lack of a broad-coverage lexicon that connects linguistic behavior to ontological concepts and axioms. We have developed COLLIE-V, a deep lexical resource for verbs, with the coverage of WordNet and syntactic and semantic details that meet or exceed existing resources. Bootstrapping from a hand-built lexicon and ontology, new ontological concepts and lexical entries, together with semantic role preferences and entailment axioms, are automatically derived by combining multiple constraints from parsing dictionary definitions and examples. We evaluated the accuracy of the technique along a number of different dimensions and were able to obtain high accuracy in deriving new concepts and lexical entries. COLLIE-V is publicly available.

Possible World Partition Sequences: A Unifying Framework for Uncertain Reasoning

Feb 13, 2013

Abstract: When we work with information from multiple sources, the formalism each employs to handle uncertainty may not be uniform. In order to be able to combine these knowledge bases of different formats, we need to first establish a common basis for characterizing and evaluating the different formalisms, and provide a semantics for the combined mechanism. A common framework can provide an infrastructure for building an integrated system, and is essential if we are to understand its behavior. We present a unifying framework based on an ordered partition of possible worlds called partition sequences, which corresponds to our intuitive notion of biasing towards certain possible scenarios when we are uncertain of the actual situation. We show that some of the existing formalisms, namely, default logic, autoepistemic logic, probabilistic conditioning and thresholding (generalized conditioning), and possibility theory can be incorporated into this general framework.

Sequential Thresholds: Context Sensitive Default Extensions

Feb 06, 2013

Abstract: Default logic encounters some conceptual difficulties in representing common sense reasoning tasks. We argue that we should not try to formulate modular default rules that are presumed to work in all or most circumstances. We need to take into account the importance of the context which is continuously evolving during the reasoning process. Sequential thresholding is a quantitative counterpart of default logic which makes explicit the role context plays in the construction of a non-monotonic extension. We present a semantic characterization of generic non-monotonic reasoning, as well as the instantiations pertaining to default logic and sequential thresholding. This provides a link between the two mechanisms as well as a way to integrate the two that can be beneficial to both.

Choosing Among Interpretations of Probability

Jan 23, 2013

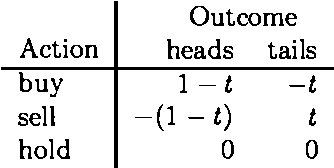

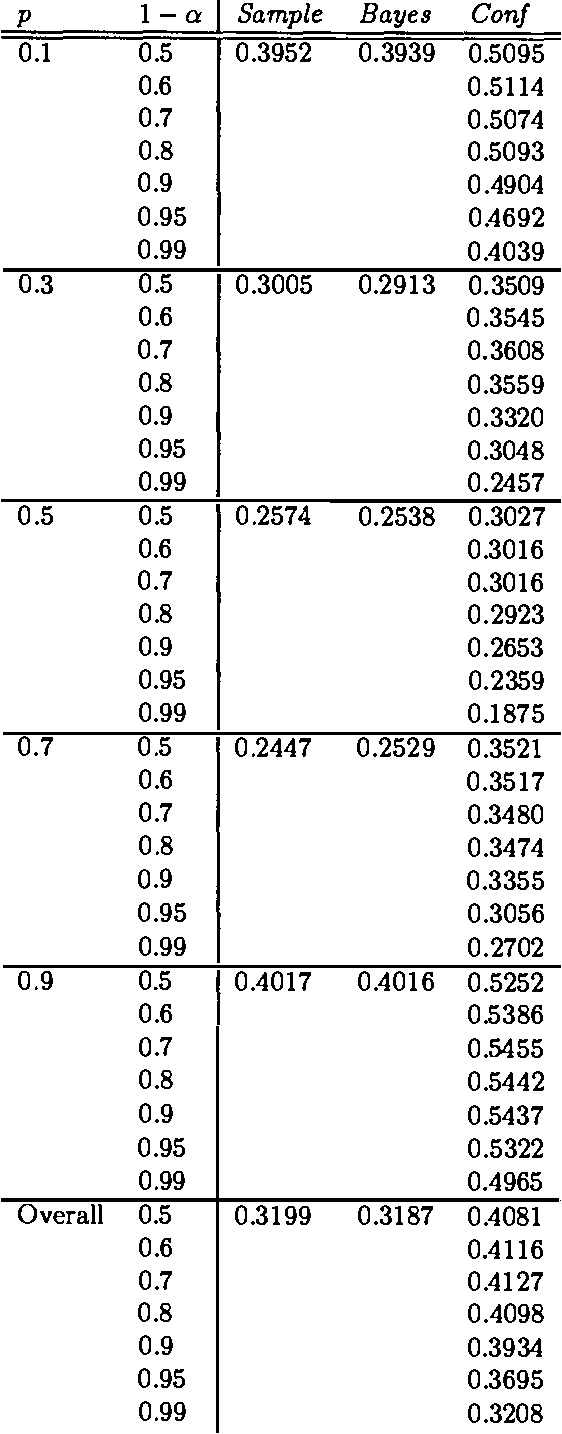

Abstract: There is available an ever-increasing variety of procedures for managing uncertainty. These methods are discussed in the literature of artificial intelligence, as well as in the literature of philosophy of science. Heretofore these methods have been evaluated by intuition, discussion, and the general philosophical method of argument and counterexample. Almost any method of uncertainty management will have the property that in the long run it will deliver numbers approaching the relative frequency of the kinds of events at issue. To find a measure that will provide a meaningful evaluation of these treatments of uncertainty, we must look, not at the long run, but at the short or intermediate run. Our project attempts to develop such a measure in terms of short or intermediate length performance. We represent the effects of practical choices by the outcomes of bets offered to agents characterized by two uncertainty management approaches: the subjective Bayesian approach and the Classical confidence interval approach. Experimental evaluation suggests that the confidence interval approach can outperform the subjective approach in the relatively short run.

Evaluating Defaults

Jul 24, 2002

Abstract: We seek to find normative criteria of adequacy for nonmonotonic logic similar to the criterion of validity for deductive logic. Rather than stipulating that the conclusion of an inference be true in all models in which the premises are true, we require that the conclusion of a nonmonotonic inference be true in "almost all" models of a certain sort in which the premises are true. This "certain sort" specification picks out the models that are relevant to the inference, taking into account factors such as specificity and vagueness, and previous inferences. The frequencies characterizing the relevant models reflect known frequencies in our actual world. The criteria of adequacy for a default inference can be extended by thresholding to criteria of adequacy for an extension. We show that this avoids the implausibilities that might otherwise result from the chaining of default inferences. The model proportions, when construed in terms of frequencies, provide a verifiable grounding of default rules, and can become the basis for generating default rules from statistics.
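
The "almost all relevant models" criterion in this abstract can be illustrated with a toy sketch. It is not the paper's formalization: the finite list of models, the `epsilon` threshold, the helper names `proportion` and `acceptable`, and the bird example are all assumptions made for illustration. A default inference is accepted here when the conclusion holds in at least a fraction `1 - epsilon` of the models that satisfy the premises.

```python
def proportion(models, premise, conclusion):
    """Fraction of premise-satisfying models in which the conclusion holds."""
    relevant = [m for m in models if premise(m)]
    if not relevant:
        raise ValueError("no model satisfies the premise")
    return sum(1 for m in relevant if conclusion(m)) / len(relevant)


def acceptable(models, premise, conclusion, epsilon):
    """Hypothetical adequacy test: conclusion true in 'almost all' relevant models."""
    return proportion(models, premise, conclusion) >= 1 - epsilon


# Assumed toy population: 95 flying birds and 5 penguins that do not fly.
models = (
    [{"bird": True, "penguin": False, "flies": True}] * 95
    + [{"bird": True, "penguin": True, "flies": False}] * 5
)

# "Birds fly" passes at epsilon = 0.1 because 95 of 100 bird models fly.
birds_fly = acceptable(models, lambda m: m["bird"], lambda m: m["flies"], 0.1)

# Restricting to the more specific premise "penguin" changes the relevant models.
penguins_fly = acceptable(
    models, lambda m: m["bird"] and m["penguin"], lambda m: m["flies"], 0.1
)
```

In this sketch the more specific premise selects a different set of relevant models, so the same conclusion that is acceptable for birds in general is rejected for penguins.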
