Chinmay Hegde

Decentralized Deep Learning using Momentum-Accelerated Consensus

Oct 21, 2020

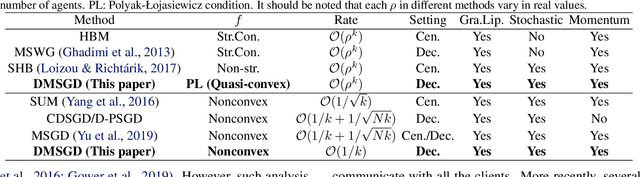

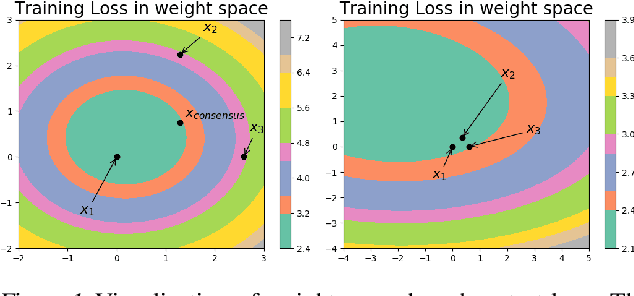

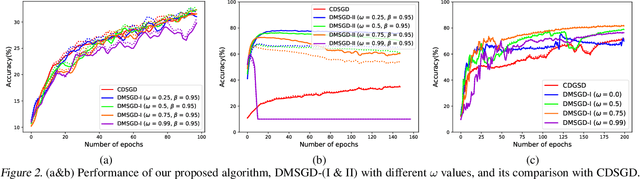

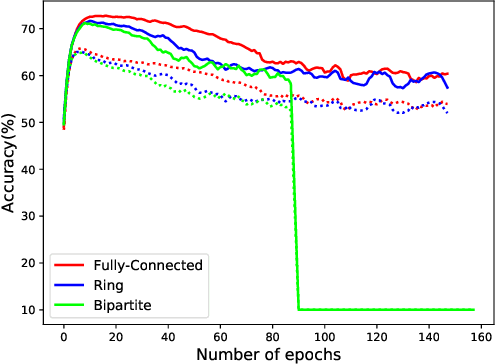

Abstract: We consider the problem of decentralized deep learning where multiple agents collaborate to learn from a distributed dataset. While there exist several decentralized deep learning approaches, the majority consider a central parameter-server topology for aggregating the model parameters from the agents. However, such a topology may be inapplicable in networked systems such as ad-hoc mobile networks, field robotics, and power network systems where direct communication with the central parameter server may be inefficient. In this context, we propose and analyze a novel decentralized deep learning algorithm where the agents interact over a fixed communication topology (without a central server). Our algorithm is based on the heavy-ball acceleration method used in gradient-based optimization. We propose a novel consensus protocol where each agent shares with its neighbors its model parameters as well as gradient-momentum values during the optimization process. We consider both strongly convex and non-convex objective functions and theoretically analyze our algorithm's performance. We present several empirical comparisons with competing decentralized learning methods to demonstrate the efficacy of our approach under different communication topologies.
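To make the idea of sharing both parameters and momentum concrete, here is a minimal NumPy sketch. It is not the paper's algorithm: the update rule, the ring topology, the uniform mixing weights, the toy quadratic objectives, and the names `ring_mixing_matrix` and `decentralized_heavy_ball` are all assumptions chosen for illustration. Each agent `i` holds a local objective `0.5 * ||x - c_i||^2`, averages its neighbors' parameters and momentum through a mixing matrix `W`, and then takes a heavy-ball step.

```python
import numpy as np


def ring_mixing_matrix(n):
    """Doubly stochastic mixing matrix for a ring of n >= 3 agents (assumed topology).

    Each agent weights itself and its two ring neighbors equally.
    """
    W = np.zeros((n, n))
    for i in range(n):
        for j in (i - 1, i, i + 1):
            W[i, j % n] = 1.0 / 3.0
    return W


def decentralized_heavy_ball(C, W, lr=0.05, beta=0.9, steps=500):
    """Hypothetical consensus + heavy-ball update on toy quadratic objectives.

    Row i of C is the target c_i of agent i, whose local objective is
    f_i(x) = 0.5 * ||x - c_i||^2. Rows of X and V are the agents'
    parameters and momentum buffers.
    """
    n, d = C.shape
    X = np.zeros((n, d))  # parameters, one row per agent
    V = np.zeros((n, d))  # momentum, one row per agent
    for _ in range(steps):
        grads = X - C                    # local gradients of f_i at x_i
        V = beta * (W @ V) - lr * grads  # mix neighbors' momentum, add gradient step
        X = W @ X + V                    # mix neighbors' parameters, apply momentum
    return X


rng = np.random.default_rng(0)
C = rng.normal(size=(5, 3))              # five agents, three parameters each
X = decentralized_heavy_ball(C, ring_mixing_matrix(5))
print("network average: ", X.mean(axis=0))
print("global minimizer:", C.mean(axis=0))
```

In this toy setting the minimizer of the summed objective is the mean of the targets, and the network-average iterate should approach it. With a constant step size the individual agents only settle in a neighborhood of that point, which is one reason analyses of such methods typically track both the optimization error and the consensus error.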
