Chia-Ling Tsai

A Multimodal Approach Combining Structural and Cross-domain Textual Guidance for Weakly Supervised OCT Segmentation

Nov 19, 2024

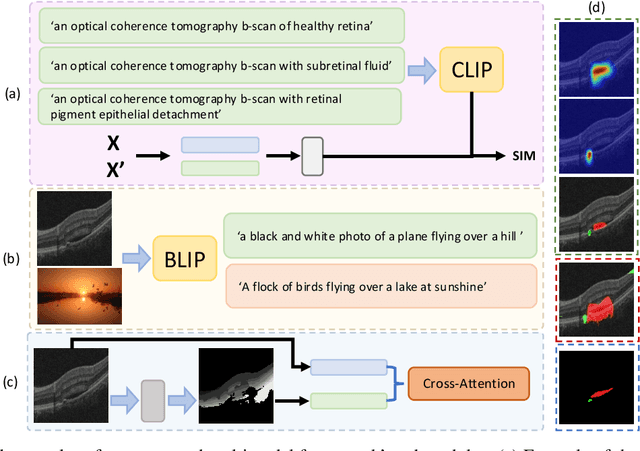

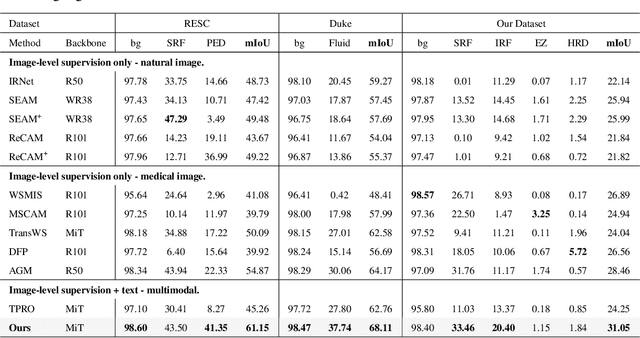

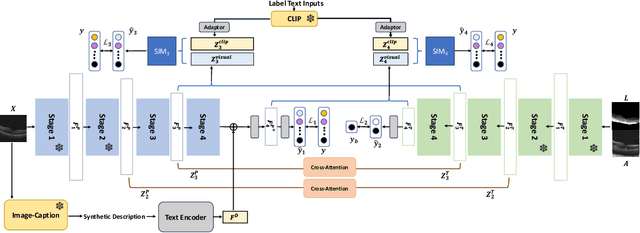

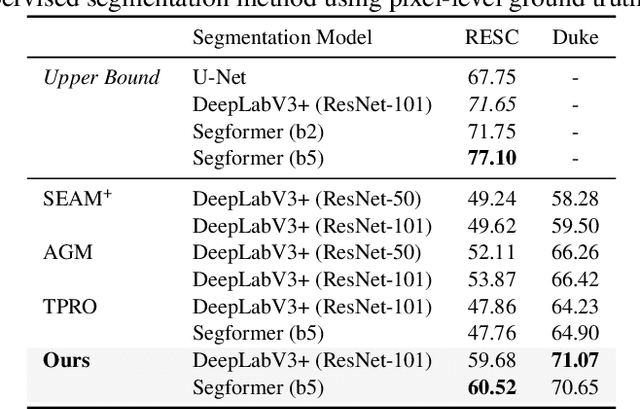

Abstract: Accurate segmentation of Optical Coherence Tomography (OCT) images is crucial for diagnosing and monitoring retinal diseases. However, the labor-intensive nature of pixel-level annotation limits the scalability of supervised learning with large datasets. Weakly Supervised Semantic Segmentation (WSSS) provides a promising alternative by leveraging image-level labels. In this study, we propose a novel WSSS approach that integrates structural guidance with text-driven strategies to generate high-quality pseudo labels, significantly improving segmentation performance. In terms of visual information, our method employs two processing modules that exchange raw image features and structural features from OCT images, guiding the model to identify where lesions are likely to occur. In terms of textual information, we utilize large-scale pretrained models from cross-domain sources to implement label-informed textual guidance and synthetic descriptive integration with two textual processing modules that combine local semantic features with consistent synthetic descriptions. By fusing these visual and textual components within a multimodal framework, our approach enhances lesion localization accuracy. Experimental results on three OCT datasets demonstrate that our method achieves state-of-the-art performance, highlighting its potential to improve diagnostic accuracy and efficiency in medical imaging.

Adversarial Vessel-Unveiling Semi-Supervised Segmentation for Retinopathy of Prematurity Diagnosis

Nov 14, 2024

Abstract: Accurate segmentation of retinal images plays a crucial role in aiding ophthalmologists in diagnosing retinopathy of prematurity (ROP) and assessing its severity. However, because infants' vessels are underdeveloped and thinner, manual annotation of infant fundus images is very complex, which presents challenges for fully supervised learning. To address the scarcity of annotations, we propose a semi-supervised segmentation framework designed to advance ROP studies without the need for extensive manual vessel annotation. Unlike previous methods that rely solely on limited labeled data, our approach leverages teacher-student learning by integrating two powerful components: an uncertainty-weighted vessel-unveiling module and domain-adversarial learning. The vessel-unveiling module helps the model effectively reveal obscured and hard-to-detect vessel structures, while adversarial training aligns feature representations across different domains, ensuring robust and generalizable vessel segmentations. We validate our approach on public datasets (CHASEDB, STARE) and an in-house ROP dataset, demonstrating its superior performance across multiple evaluation metrics. Additionally, we extend the model's utility to a downstream task of ROP multi-stage classification, where vessel masks extracted by our segmentation model improve diagnostic accuracy. The promising results in classification underscore the model's potential for clinical application, particularly in early-stage ROP diagnosis and intervention. Overall, our work offers a scalable solution for leveraging unlabeled data in pediatric ophthalmology, opening new avenues for biomarker discovery and clinical research.
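
The abstract above names teacher-student learning with uncertainty weighting but gives no formulas. As a rough illustration only, the following is a generic mean-teacher-style sketch, not the authors' module: the function names, the entropy-based weight, and the `decay` parameter are all assumptions introduced here.

```python
import math

def ema_update(teacher, student, decay=0.99):
    """Move each teacher weight toward the student weight (exponential moving average)."""
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]

def binary_entropy(p, eps=1e-8):
    """Entropy (in nats) of a per-pixel vessel probability, used as an uncertainty proxy."""
    p = min(max(p, eps), 1.0 - eps)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))

def uncertainty_weighted_consistency(student_probs, teacher_probs):
    """Squared student-teacher disagreement, down-weighted where the teacher is uncertain.

    Hypothetical weighting: w = 1 - H(teacher) / log(2), so w is near 1 for
    confident teacher pixels and near 0 for maximally uncertain ones.
    """
    max_entropy = math.log(2.0)
    total, weight_sum = 0.0, 0.0
    for s, t in zip(student_probs, teacher_probs):
        w = max(0.0, 1.0 - binary_entropy(t) / max_entropy)
        total += w * (s - t) ** 2
        weight_sum += w
    return total / weight_sum if weight_sum > 0 else 0.0
```

In a setup like this, the consistency term would typically be computed on unlabeled images and added to a supervised loss on the labeled ones, with `ema_update` applied to the teacher after each student step.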

Fusion of Diffusion Weighted MRI and Clinical Data for Predicting Functional Outcome after Acute Ischemic Stroke with Deep Contrastive Learning

Feb 16, 2024

Abstract: Stroke is a common disabling neurological condition that affects about one-quarter of the adult population over age 25; more than half of patients still have poor outcomes, such as permanent functional dependence or even death, after the onset of acute stroke. The aim of this study is to investigate the efficacy of diffusion-weighted MRI modalities combined with structured health profile data in predicting functional outcome to facilitate early intervention. A deep fusion learning network is proposed with two-stage training: the first stage focuses on cross-modality representation learning and the second stage on classification. Supervised contrastive learning is exploited to learn discriminative features that separate the two classes of patients from embeddings of individual modalities and from the fused multimodal embedding. The network takes DWI and ADC images and structured health profile data as input. The output is a prediction of whether the patient will need long-term care at 3 months after the onset of stroke. Trained and evaluated with a dataset of 3297 patients, our proposed fusion model achieves 0.87, 0.80 and 80.45% for AUC, F1-score and accuracy, respectively, outperforming existing models that consolidate both imaging and structured data in the medical domain. If the model is trained with comprehensive clinical variables, including NIHSS and comorbidities, the gain in prediction accuracy from images is significant but not substantial. However, diffusion-weighted MRI can replace NIHSS and, combined with other readily available clinical variables, achieve a comparable level of accuracy for better generalization.
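
For readers unfamiliar with supervised contrastive learning, the sketch below shows one common form of the loss, in which same-class embeddings are pulled together and other-class embeddings pushed apart. It is a minimal illustration under assumptions, not the paper's implementation: the function name, the `temperature` value, and the toy embeddings are made up here.

```python
import math

def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Mean supervised contrastive loss over anchors that have a same-class positive."""
    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v)) or 1.0
        return [x / norm for x in v]

    z = [normalize(v) for v in embeddings]
    n = len(z)
    losses = []
    for i in range(n):
        # Temperature-scaled cosine similarity of anchor i to every other sample.
        sims = {j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
                for j in range(n) if j != i}
        positives = [j for j in sims if labels[j] == labels[i]]
        if not positives:
            continue  # an anchor with no same-class partner contributes nothing
        # Numerically stable log-sum-exp over all non-anchor samples.
        m = max(sims.values())
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        losses.append(-sum(sims[j] - log_denom for j in positives) / len(positives))
    return sum(losses) / len(losses) if losses else 0.0

# Toy 2-D embeddings: two tight clusters, labeled consistently and inconsistently.
points = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]
clustered = supervised_contrastive_loss(points, [0, 0, 1, 1])
mixed = supervised_contrastive_loss(points, [0, 1, 0, 1])
```

With these toy points, `clustered` comes out smaller than `mixed`, because the labels agree with the geometric clusters. In a two-stage scheme like the one described, a loss of this kind could be applied to each modality's embedding and to the fused embedding before a classifier is trained.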
