Chi-Han Lin

An Effective Context-Balanced Adaptation Approach for Long-Tailed Speech Recognition

Sep 10, 2024

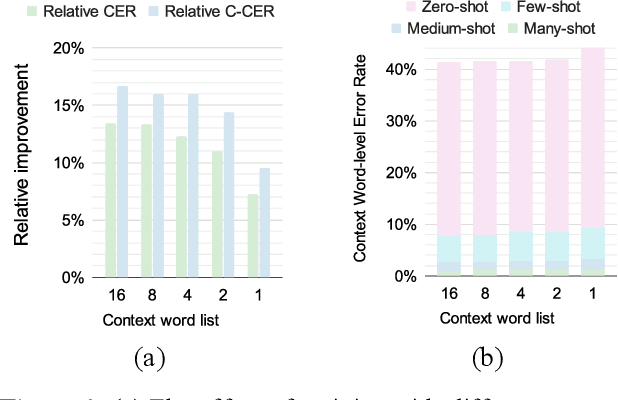

Abstract: End-to-end (E2E) automatic speech recognition (ASR) models have become standard practice for various commercial applications. However, in real-world scenarios, the long-tailed nature of word distribution often leads E2E ASR models to perform well on common words but fall short in recognizing uncommon ones. Recently, the notion of a contextual adapter (CA) was proposed to infuse external knowledge, represented by a context word list, into E2E ASR models. Although CA can improve recognition performance on rare words, two crucial data imbalance problems remain. First, when low-frequency words are used as context words during training, these words rarely occur in the utterance and higher-frequency words are absent from the context list, so CA is prone to overfit by attending to the <no-context> token. Second, the long-tailed distribution within the context list itself still causes the model to perform poorly on low-frequency context words. In light of this, we explore in depth how the frequency distribution of the words in the context list affects model performance, and extend CA with a simple yet effective context-balanced learning objective. A series of experiments conducted on the AISHELL-1 benchmark dataset suggests that using all vocabulary words from the training corpus as the context list and pairing them with our balanced objective yields the best performance, demonstrating a significant reduction in character error rate (CER) by up to 1.21% and a more pronounced 9.44% reduction in the error rate of zero-shot words.

DANCER: Entity Description Augmented Named Entity Corrector for Automatic Speech Recognition

Apr 11, 2024

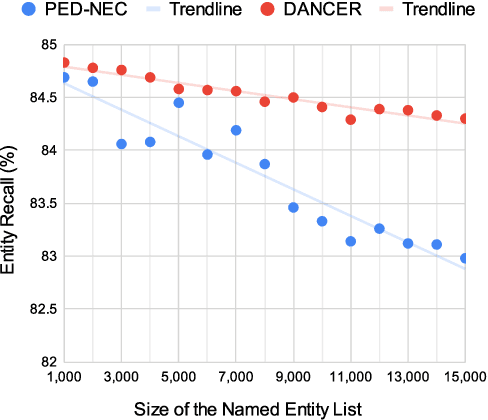

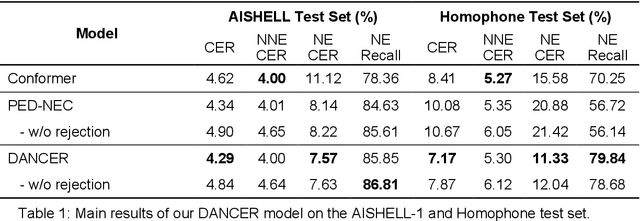

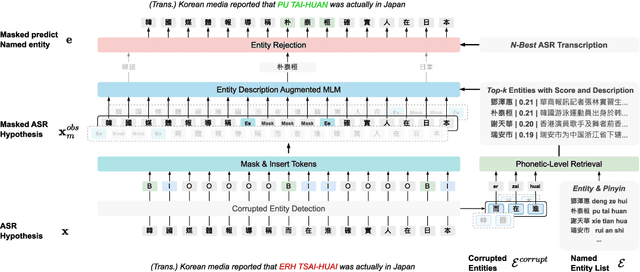

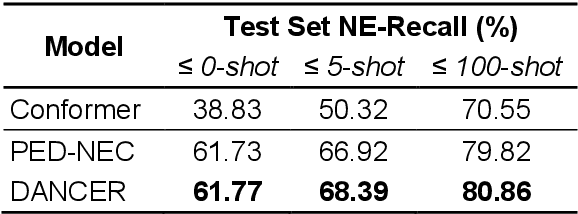

Abstract: End-to-end automatic speech recognition (E2E ASR) systems often suffer from mistranscription of domain-specific phrases, such as named entities, sometimes leading to catastrophic failures in downstream tasks. A family of fast and lightweight named entity correction (NEC) models for ASR has recently been proposed; these models normally build on phonetic-level edit distance algorithms and have shown impressive NEC performance. However, as the named entity (NE) list grows, the problems of phonetic confusion in the NE list are exacerbated; for example, homophone ambiguities increase substantially. In view of this, we propose a novel Description Augmented Named entity CorrEctoR (dubbed DANCER), which leverages entity descriptions to provide additional information that helps mitigate phonetic confusion for NEC on ASR transcription. To this end, an efficient entity description augmented masked language model (EDA-MLM) that incorporates a dense retrieval model is introduced, enabling the MLM to adapt swiftly to domain-specific entities for the NEC task. A series of experiments conducted on the AISHELL-1 and Homophone datasets confirms the effectiveness of our modeling approach. DANCER outperforms a strong baseline, the phonetic edit-distance-based NEC model (PED-NEC), with a relative character error rate (CER) reduction of about 7% on AISHELL-1 for named entities. More notably, when tested on Homophone, which contains named entities of high phonetic confusion, DANCER offers a more pronounced relative CER reduction of 46% over PED-NEC for named entities.
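To make the homophone problem described in the abstract concrete, the following is a minimal sketch of a phonetic edit-distance lookup of the kind such baselines build on. It is not the PED-NEC or DANCER implementation: the function names, the toy lexicon, the toneless syllable units, and the `max_distance` threshold are all assumptions made for illustration.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (here: lists of syllables)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def nearest_entities(span_units, entity_lexicon, max_distance=1):
    """Return every entity whose pronunciation is closest to the span.

    A list is returned because homophones tie at the same distance,
    which is exactly the ambiguity a purely phonetic matcher cannot resolve.
    """
    scored = [(edit_distance(span_units, units), name)
              for name, units in entity_lexicon.items()]
    best = min(dist for dist, _ in scored)
    if best > max_distance:
        return []
    return sorted(name for dist, name in scored if dist == best)


# Hypothetical toy lexicon: two entities share one pronunciation.
LEXICON = {
    "ZhangWei_A": ["zhang", "wei"],
    "ZhangWei_B": ["zhang", "wei"],
    "LiNa": ["li", "na"],
}

candidates = nearest_entities(["zhang", "wei"], LEXICON)
```

Here `candidates` contains both `ZhangWei_A` and `ZhangWei_B`: the phonetic distance alone cannot choose between them, which is the gap that additional information such as entity descriptions is meant to fill.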

An Effective Mixture-Of-Experts Approach For Code-Switching Speech Recognition Leveraging Encoder Disentanglement

Feb 27, 2024

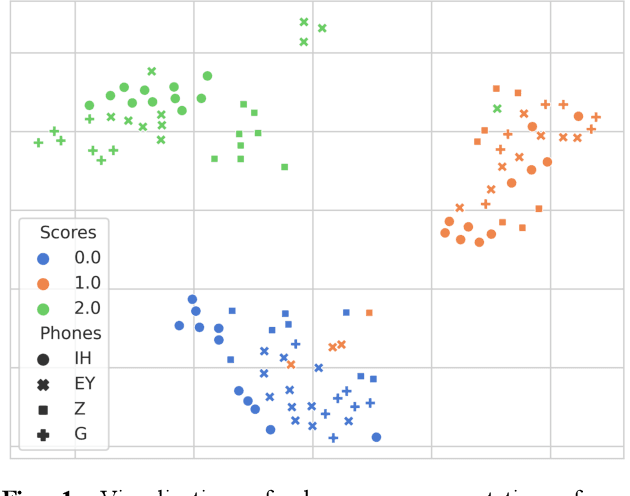

Abstract: With the massive developments of end-to-end (E2E) neural networks, recent years have witnessed unprecedented breakthroughs in automatic speech recognition (ASR). However, the code-switching phenomenon remains a major obstacle that keeps ASR from perfection, as the lack of labeled data and the variations between languages often lead to degradation of ASR performance. In this paper, we focus exclusively on improving the acoustic encoder of E2E ASR to tackle the challenge caused by the code-switching phenomenon. Our main contributions are threefold. First, we introduce a novel disentanglement loss that enables the lower layer of the encoder to capture inter-lingual acoustic information while mitigating linguistic confusion at the higher layer of the encoder. Second, through comprehensive experiments, we verify that our proposed method outperforms prior-art methods that use pretrained dual encoders, while having access only to the code-switching corpus and using half the parameters. Third, the apparent differentiation of the encoders' output features also corroborates the complementarity between the disentanglement loss and the mixture-of-experts (MoE) architecture.

Preserving Phonemic Distinctions for Ordinal Regression: A Novel Loss Function for Automatic Pronunciation Assessment

Oct 04, 2023

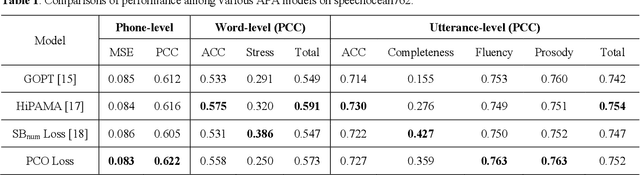

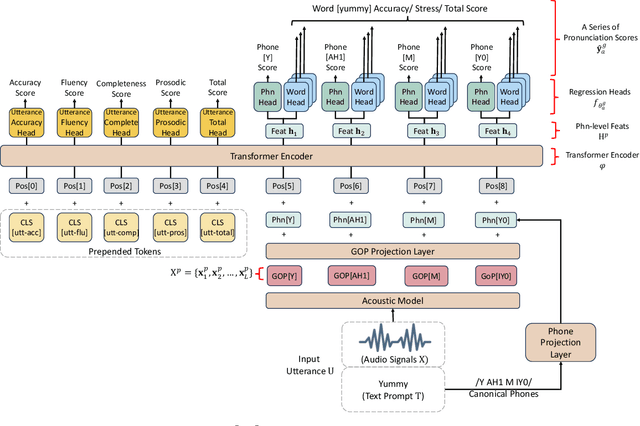

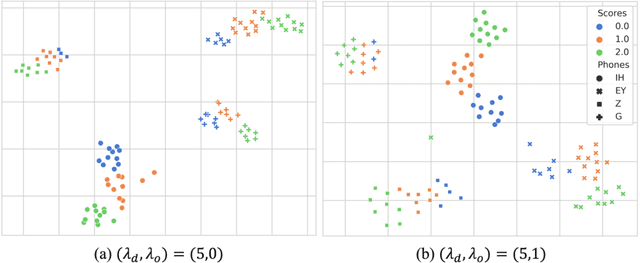

Abstract: Automatic pronunciation assessment (APA) aims to quantify the pronunciation proficiency of a second language (L2) learner. Prevailing approaches to APA normally leverage neural models trained with a regression loss function, such as the mean-squared error (MSE) loss, for proficiency level prediction. Although most regression models can effectively capture the ordinality of proficiency levels in the feature space, they face a primary obstacle: different phoneme categories with the same proficiency level are inevitably forced to be close to each other, retaining less phoneme-discriminative information. On account of this, we devise a phonemic contrast ordinal (PCO) loss for training regression-based APA models, which aims to better preserve phonemic distinctions between phoneme categories while also considering the ordinal relationships of the regression target output. Specifically, we introduce a phoneme-distinct regularizer into the MSE loss, which encourages feature representations of different phoneme categories to be far apart while simultaneously pulling closer the representations belonging to the same phoneme category by means of weighted distances. An extensive set of experiments carried out on the speechocean762 benchmark dataset suggests the feasibility and effectiveness of our model in relation to some existing state-of-the-art models.
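The idea of adding a phoneme-distinct regularizer to the MSE loss can be sketched roughly as follows. The abstract does not give the exact formulation, so this is only an illustrative stand-in rather than the PCO loss itself: the centroid-based spread and tightness terms, the weights `lam_spread` and `lam_tight`, and all function names are assumptions, and the distance weighting mentioned in the abstract is omitted.

```python
import math


def mse(preds, targets):
    """Mean-squared error between predicted and reference proficiency scores."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)


def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    dim = len(vectors[0])
    return [sum(v[k] for v in vectors) / len(vectors) for k in range(dim)]


def phoneme_terms(features, phonemes):
    """Return (tightness, spread) for features grouped by phoneme category.

    tightness: mean distance of each feature to its own category centroid.
    spread:    mean pairwise distance between category centroids.
    """
    groups = {}
    for feat, ph in zip(features, phonemes):
        groups.setdefault(ph, []).append(feat)
    centers = {ph: centroid(vs) for ph, vs in groups.items()}

    tight = sum(math.dist(f, centers[ph])
                for f, ph in zip(features, phonemes)) / len(features)

    keys = sorted(centers)
    pairs = [(a, b) for i, a in enumerate(keys) for b in keys[i + 1:]]
    spread = (sum(math.dist(centers[a], centers[b]) for a, b in pairs) / len(pairs)
              if pairs else 0.0)
    return tight, spread


def regularized_loss(preds, targets, features, phonemes,
                     lam_spread=1.0, lam_tight=1.0):
    """MSE plus an assumed regularizer: reward spread, penalize looseness."""
    tight, spread = phoneme_terms(features, phonemes)
    return mse(preds, targets) + lam_tight * tight - lam_spread * spread


# Same scores and same phoneme labels; only the feature geometry differs.
scores = [1.0, 2.0]
labels = ["a", "b"]
separated = regularized_loss(scores, scores, [[0.0, 0.0], [3.0, 4.0]], labels)
collapsed = regularized_loss(scores, scores, [[0.0, 0.0], [0.0, 0.0]], labels)
```

With identical score predictions, `separated` is lower than `collapsed`, so under this sketch the regularizer favors feature spaces in which different phoneme categories stay apart even when they share a proficiency level.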
