Chi Huang

NeRF-DetS: Enhancing Multi-View 3D Object Detection with Sampling-adaptive Network of Continuous NeRF-based Representation

Apr 22, 2024

Abstract: As a preliminary work, NeRF-Det unifies the tasks of novel view synthesis and 3D perception, demonstrating that perceptual tasks can benefit from novel view synthesis methods like NeRF and significantly improving the performance of indoor multi-view 3D object detection. Two factors contribute to this improvement: using the geometry MLP of NeRF to direct the attention of the detection head to crucial parts, and incorporating a self-supervised loss from novel view rendering. To better leverage the advantages of the continuous representation that neural rendering provides in space, we introduce a novel 3D perception network structure, NeRF-DetS. The key component of NeRF-DetS is the Multi-level Sampling-Adaptive Network, which makes the sampling process adaptive, from coarse to fine. We also propose a superior multi-view information fusion method, Multi-head Weighted Fusion. This fusion approach efficiently addresses the loss of multi-view information that occurs when using the arithmetic mean, while keeping computational costs low. NeRF-DetS outperforms the competitive NeRF-Det on the ScanNetV2 dataset, achieving improvements of +5.02% and +5.92% in mAP@.25 and mAP@.50, respectively.

Does Head Label Help for Long-Tailed Multi-Label Text Classification

Jan 24, 2021

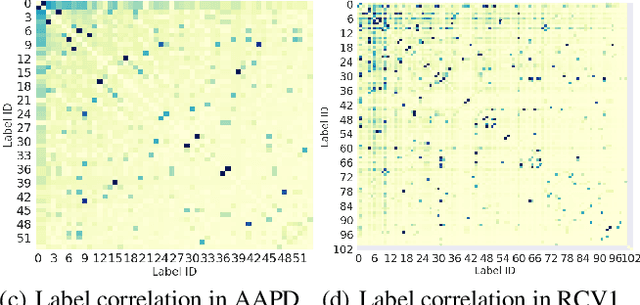

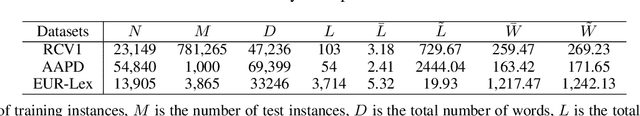

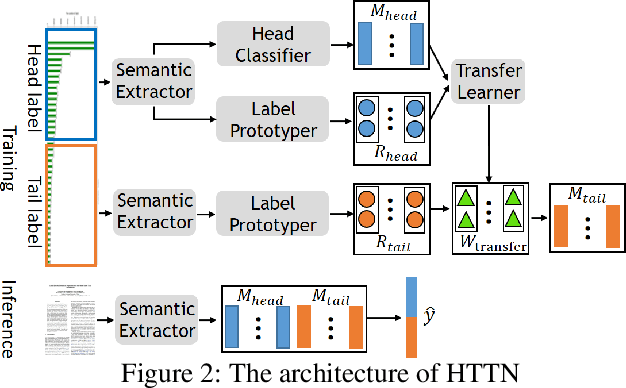

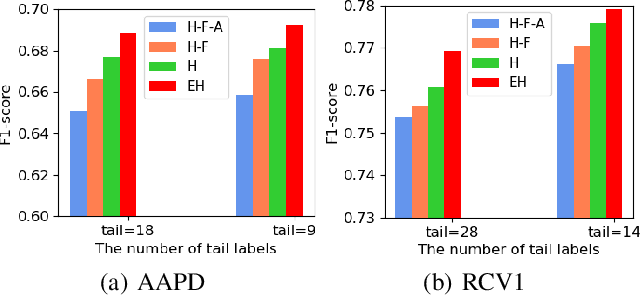

Abstract: Multi-label text classification (MLTC) aims to annotate documents with the most relevant labels from a number of candidate labels. In real applications, the distribution of label frequency often exhibits a long tail, i.e., a few labels are associated with a large number of documents (a.k.a. head labels), while a large fraction of labels are associated with a small number of documents (a.k.a. tail labels). To address the challenge of insufficient training data on tail label classification, we propose a Head-to-Tail Network (HTTN) to transfer the meta-knowledge from the data-rich head labels to data-poor tail labels. The meta-knowledge is the mapping from few-shot network parameters to many-shot network parameters, which aims to promote the generalizability of tail classifiers. Extensive experimental results on three benchmark datasets demonstrate that HTTN consistently outperforms the state-of-the-art methods. The code and hyper-parameter settings are released for reproducibility.
