Chengwei Li

FreeKV: Boosting KV Cache Retrieval for Efficient LLM Inference

May 19, 2025Abstract:Large language models (LLMs) have been widely deployed with rapidly expanding context windows to support increasingly demanding applications. However, long contexts pose significant deployment challenges, primarily due to the KV cache whose size grows proportionally with context length. While KV cache compression methods are proposed to address this issue, KV dropping methods incur considerable accuracy loss, and KV retrieval methods suffer from significant efficiency bottlenecks. We propose FreeKV, an algorithm-system co-optimization framework to enhance KV retrieval efficiency while preserving accuracy. On the algorithm side, FreeKV introduces speculative retrieval to shift the KV selection and recall processes out of the critical path, combined with fine-grained correction to ensure accuracy. On the system side, FreeKV employs hybrid KV layouts across CPU and GPU memory to eliminate fragmented data transfers, and leverages double-buffered streamed recall to further improve efficiency. Experiments demonstrate that FreeKV achieves near-lossless accuracy across various scenarios and models, delivering up to 13$\times$ speedup compared to SOTA KV retrieval methods.

ClusterKV: Manipulating LLM KV Cache in Semantic Space for Recallable Compression

Dec 04, 2024

Abstract:Large Language Models (LLMs) have been widely deployed in a variety of applications, and the context length is rapidly increasing to handle tasks such as long-document QA and complex logical reasoning. However, long context poses significant challenges for inference efficiency, including high memory costs of key-value (KV) cache and increased latency due to extensive memory accesses. Recent works have proposed compressing KV cache to approximate computation, but these methods either evict tokens permanently, never recalling them for later inference, or recall previous tokens at the granularity of pages divided by textual positions. Both approaches degrade the model accuracy and output quality. To achieve efficient and accurate recallable KV cache compression, we introduce ClusterKV, which recalls tokens at the granularity of semantic clusters. We design and implement efficient algorithms and systems for clustering, selection, indexing and caching. Experiment results show that ClusterKV attains negligible accuracy loss across various tasks with 32k context lengths, using only a 1k to 2k KV cache budget, and achieves up to a 2$\times$ speedup in latency and a 2.5$\times$ improvement in decoding throughput. Compared to SoTA recallable KV compression methods, ClusterKV demonstrates higher model accuracy and output quality, while maintaining or exceeding inference efficiency.

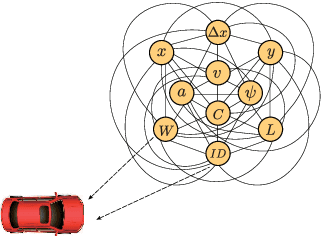

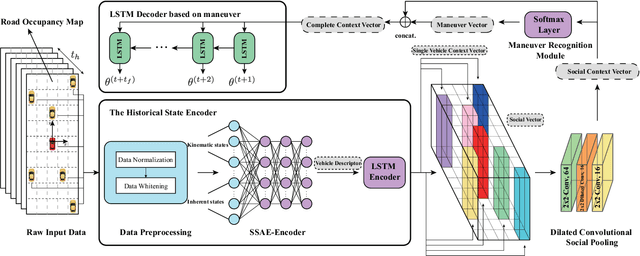

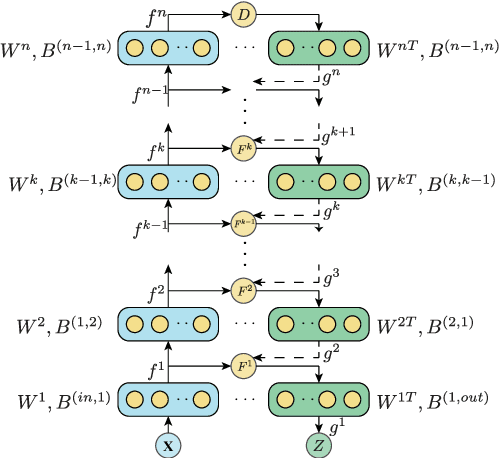

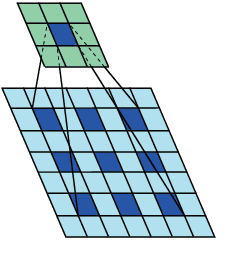

A Multi-Modal States based Vehicle Descriptor and Dilated Convolutional Social Pooling for Vehicle Trajectory Prediction

Mar 07, 2020

Abstract:Precise trajectory prediction of surrounding vehicles is critical for decision-making of autonomous vehicles and learning-based approaches are well recognized for the robustness. However, state-of-the-art learning-based methods ignore 1) the feasibility of the vehicle's multi-modal state information for prediction and 2) the mutual exclusive relationship between the global traffic scene receptive fields and the local position resolution when modeling vehicles' interactions, which may influence prediction accuracy. Therefore, we propose a vehicle-descriptor based LSTM model with the dilated convolutional social pooling (VD+DCS-LSTM) to cope with the above issues. First, each vehicle's multi-modal state information is employed as our model's input and a new vehicle descriptor encoded by stacked sparse auto-encoders is proposed to reflect the deep interactive relationships between various states, achieving the optimal feature extraction and effective use of multi-modal inputs. Secondly, the LSTM encoder is used to encode the historical sequences composed of the vehicle descriptor and a novel dilated convolutional social pooling is proposed to improve modeling vehicles' spatial interactions. Thirdly, the LSTM decoder is used to predict the probability distribution of future trajectories based on maneuvers. The validity of the overall model was verified over the NGSIM US-101 and I-80 datasets and our method outperforms the latest benchmark.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge