Chau Yi Li

On the limits of perceptual quality measures for enhanced underwater images

Jul 12, 2022

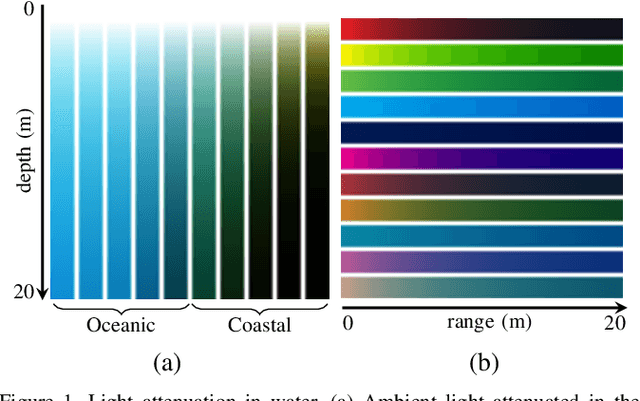

Abstract: The appearance of objects in underwater images is degraded by the selective attenuation of light, which reduces contrast and causes a colour cast. This degradation depends on the water environment and increases with depth and with the distance of the object from the camera. Despite a growing body of work on underwater image enhancement and restoration, the lack of a commonly accepted evaluation measure hinders progress because methods are difficult to compare. In this paper, we review commonly used colour accuracy measures, such as colour reproduction error and CIEDE2000, and no-reference image quality measures, such as UIQM, UCIQE and CCF, which have not yet been systematically validated. We show that none of the no-reference quality measures satisfactorily rates the quality of enhanced underwater images, and we discuss their main shortcomings. Images and results are available at https://puiqe.eecs.qmul.ac.uk.

On the reversibility of adversarial attacks

Jun 01, 2022

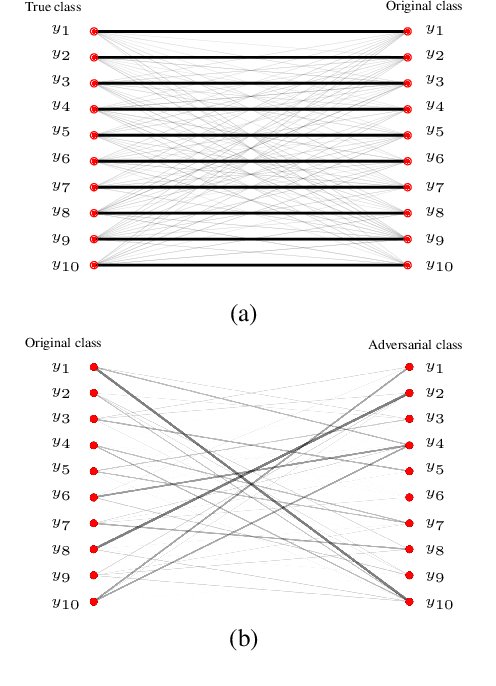

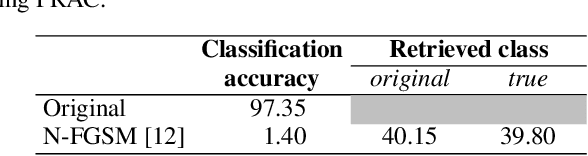

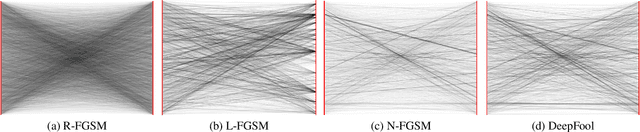

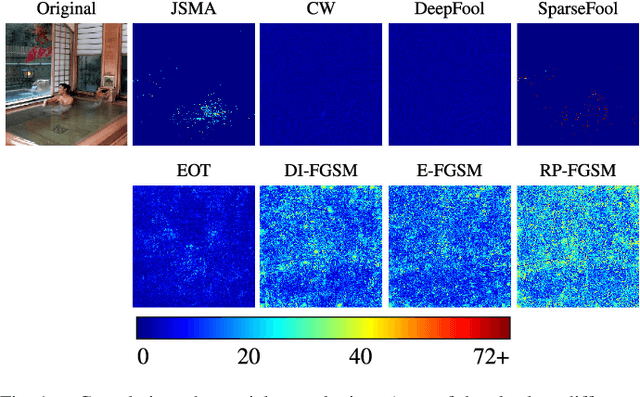

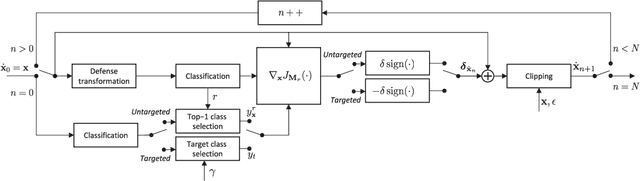

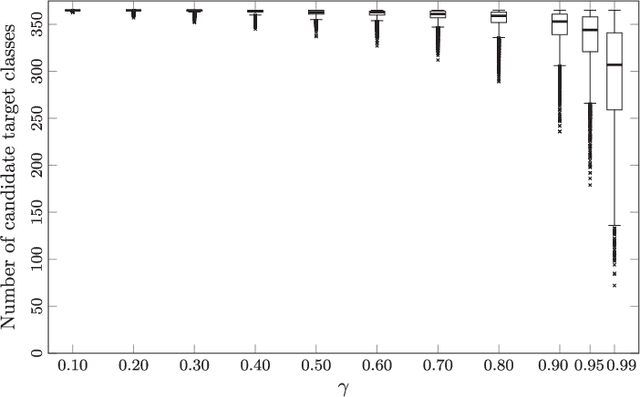

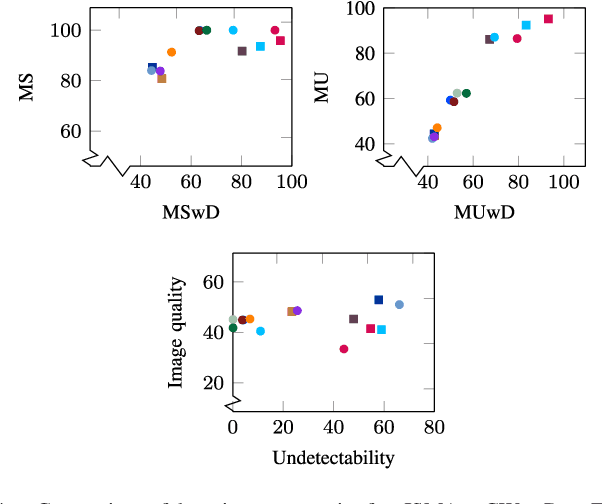

Abstract: Adversarial attacks modify images with perturbations that change the prediction of classifiers. These modified images, known as adversarial examples, expose the vulnerabilities of deep neural network classifiers. In this paper, we investigate the predictability of the mapping between the classes predicted for original images and for their corresponding adversarial examples. This predictability relates to the possibility of retrieving the original predictions and hence reversing the induced misclassification. We refer to this property as the reversibility of an adversarial attack, and quantify reversibility as the accuracy in retrieving the original class or the true class of an adversarial example. We present an approach that reverses the effect of an adversarial attack on a classifier using a prior set of classification results. We analyse the reversibility of state-of-the-art adversarial attacks on benchmark classifiers and discuss the factors that affect the reversibility.
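The idea of reversing an attack from a prior set of classification results can be illustrated with a small sketch. It assumes the simplest possible mapping, a majority vote from each adversarial class to the original class most often observed with it; the function names and the toy labels are hypothetical, and the paper's actual approach may differ.

```python
from collections import Counter, defaultdict


def fit_reverse_mapping(adv_preds, orig_preds):
    """Map each adversarial class to the original class most often paired with it."""
    counts = defaultdict(Counter)
    for adv, orig in zip(adv_preds, orig_preds):
        counts[adv][orig] += 1
    return {adv: c.most_common(1)[0][0] for adv, c in counts.items()}


def reversibility(mapping, adv_preds, orig_preds):
    """Fraction of adversarial predictions that the mapping sends back to the original class."""
    hits = sum(mapping.get(adv) == orig for adv, orig in zip(adv_preds, orig_preds))
    return hits / len(adv_preds)


# Prior set: classes predicted for adversarial examples and for their originals (toy data).
prior_adv = ["dog", "dog", "dog", "car", "car"]
prior_orig = ["cat", "cat", "wolf", "truck", "truck"]
mapping = fit_reverse_mapping(prior_adv, prior_orig)

# Held-out adversarial examples; "bird" never appeared in the prior set, so it cannot be reversed.
test_adv = ["dog", "car", "dog", "bird"]
test_orig = ["cat", "truck", "wolf", "plane"]
score = reversibility(mapping, test_adv, test_orig)
print(mapping, score)
```

In this toy setting the score is the accuracy in retrieving the original class; replacing `test_orig` with ground-truth labels would give the accuracy in retrieving the true class instead.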

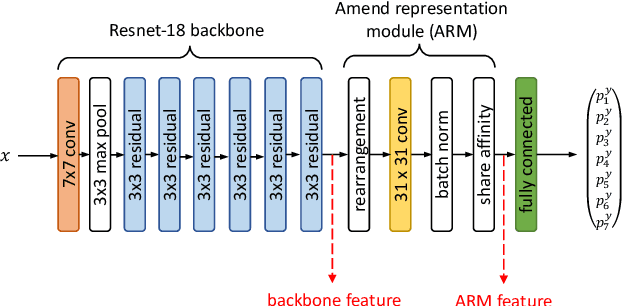

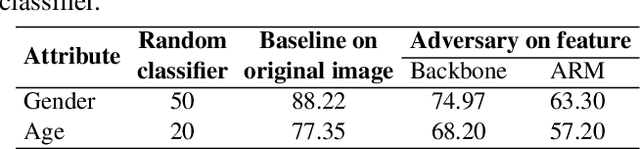

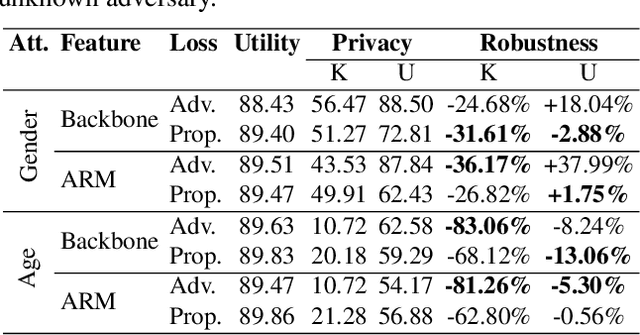

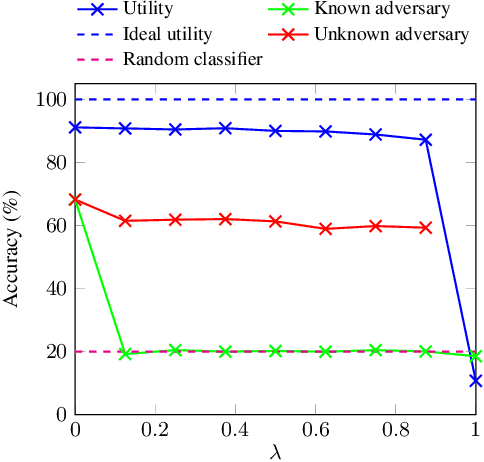

Training privacy-preserving video analytics pipelines by suppressing features that reveal information about private attributes

Mar 05, 2022

Abstract: Deep neural networks are increasingly deployed for scene analytics, for example to evaluate the attention and reaction of people exposed to out-of-home advertisements. However, the features extracted by a deep neural network that was trained to predict a specific, consensual attribute (e.g. emotion) may also encode and thus reveal information about private, protected attributes (e.g. age or gender). In this work, we focus on such leakage of private information at inference time. We consider an adversary who has access to the features extracted by the layers of a deployed neural network and uses these features to predict private attributes. To prevent the success of such an attack, we modify the training of the network using a confusion loss that encourages the extraction of features that make it difficult for the adversary to accurately predict private attributes. We validate this training approach on image-based tasks using a publicly available dataset. Results show that, compared to the original network, the proposed PrivateNet can reduce the leakage of private information of a state-of-the-art emotion recognition classifier by 2.88% for gender and by 13.06% for age group, with a minimal effect on task accuracy.
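The abstract does not define the confusion loss. One common formulation, assumed here purely for illustration, is the cross-entropy between a uniform target and the adversary's predicted distribution over private classes, added to the task loss with a weight. All function names and the `weight` parameter below are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confusion_loss(private_logits):
    """Cross-entropy against a uniform target (assumed form of the confusion loss).

    It is smallest, log(K) for K private classes, when the predicted
    distribution is uniform, i.e. when the features reveal nothing.
    """
    probs = softmax(private_logits)
    return -sum(math.log(p + 1e-12) for p in probs) / len(probs)


def task_loss(task_logits, label):
    """Standard cross-entropy for the consensual task (e.g. emotion)."""
    return -math.log(softmax(task_logits)[label] + 1e-12)


def total_loss(task_logits, label, private_logits, weight=1.0):
    """Task loss plus weighted confusion loss; `weight` is a hypothetical trade-off parameter."""
    return task_loss(task_logits, label) + weight * confusion_loss(private_logits)


# An adversary that cannot tell two private classes apart versus one that is confident.
uninformative = confusion_loss([0.0, 0.0])
leaky = confusion_loss([8.0, -8.0])
print(uninformative, leaky)
```

Minimising this term pushes the features towards the uninformative case, while the task loss keeps them useful for the consensual attribute.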

Underwater image filtering: methods, datasets and evaluation

Dec 22, 2020

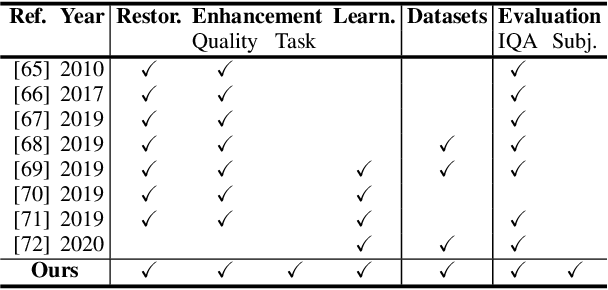

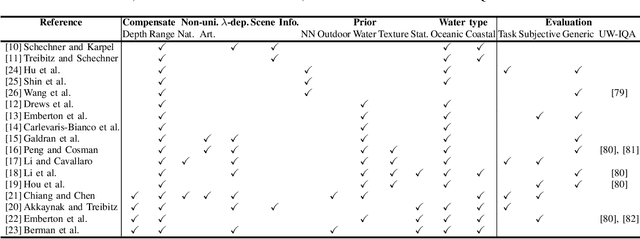

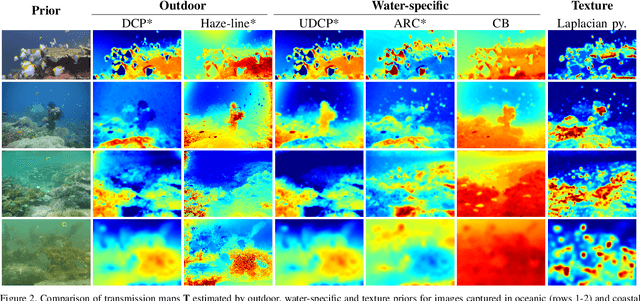

Abstract: Underwater images are degraded by the selective attenuation of light, which distorts colours and reduces contrast. The extent of the degradation depends on the water type, the distance between an object and the camera, and the depth of the object below the water surface. Underwater image filtering aims to restore or to enhance the appearance of objects captured in an underwater image. Restoration methods compensate for the actual degradation, whereas enhancement methods improve either the perceived image quality or the performance of computer vision algorithms. The growing interest in underwater image filtering methods (including learning-based approaches used for both restoration and enhancement) and the associated challenges call for a comprehensive review of the state of the art. In this paper, we review the design principles of filtering methods and revisit the oceanology background that is fundamental to identifying the causes of degradation. We discuss image formation models and the results of restoration methods in various water types. Furthermore, we present task-dependent enhancement methods and categorise datasets for training neural networks and for method evaluation. Finally, we discuss evaluation strategies, including subjective tests and quality assessment measures. We complement this survey with a platform (https://puiqe.eecs.qmul.ac.uk/), which hosts state-of-the-art underwater filtering methods and facilitates comparisons.
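To make the dependence on distance concrete, the sketch below uses a widely used simplified image formation model, in which each colour channel is the scene colour scaled by a transmission term plus a background (veiling) light term. This is an assumption for illustration, not necessarily one of the models covered by the survey; the attenuation coefficients and background colour are made-up values, and the depth-dependent attenuation of ambient light is omitted.

```python
import math

# Assumed per-channel attenuation coefficients (1/m); red is attenuated fastest.
BETA = {"r": 0.40, "g": 0.10, "b": 0.05}
# Assumed background (veiling) light colour, values in [0, 1].
BACKGROUND = {"r": 0.05, "g": 0.45, "b": 0.55}


def degrade(colour, distance_m):
    """Simulate the observed colour of an object at a given distance from the camera."""
    observed = {}
    for c, value in colour.items():
        t = math.exp(-BETA[c] * distance_m)  # transmission of channel c
        observed[c] = value * t + BACKGROUND[c] * (1.0 - t)
    return observed


def restore(observed, distance_m):
    """Invert the model, assuming the coefficients, background and distance are known."""
    restored = {}
    for c, value in observed.items():
        t = math.exp(-BETA[c] * distance_m)
        restored[c] = (value - BACKGROUND[c] * (1.0 - t)) / t
    return restored


scene = {"r": 0.8, "g": 0.5, "b": 0.3}
near = degrade(scene, 1.0)
far = degrade(scene, 10.0)
recovered = restore(far, 10.0)
print(near, far, recovered)
```

With these values the red channel fades towards the background colour as distance grows, which produces the colour cast and loss of contrast. Restoration in this sense inverts the model, which is only possible when its parameters are known or can be estimated.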

Exploiting vulnerabilities of deep neural networks for privacy protection

Jul 19, 2020

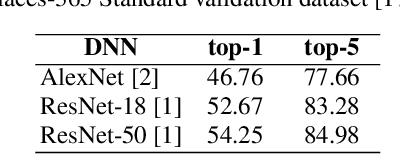

Abstract: Adversarial perturbations can be added to images to protect their content from unwanted inferences. These perturbations may, however, be ineffective against classifiers that were not seen during the generation of the perturbation, or against defenses based on re-quantization, median filtering or JPEG compression. To address these limitations, we present an adversarial attack that is specifically designed to protect visual content against unseen classifiers and known defenses. We craft perturbations using an iterative process that is based on the Fast Gradient Signed Method and that randomly selects a classifier and a defense at each iteration. This randomization prevents an undesirable overfitting to a specific classifier or defense. We validate the proposed attack in both targeted and untargeted settings on the private classes of the Places365-Standard dataset. Using ResNet18, ResNet50, AlexNet and DenseNet161 as classifiers, the performance of the proposed attack exceeds that of eleven state-of-the-art attacks. The implementation is available at https://github.com/smartcameras/RP-FGSM/.
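The randomised iterative process can be sketched on toy data as follows. This is not the released RP-FGSM implementation: the classifiers are small linear models on a four-value "image", the defenses are one-dimensional stand-ins, and the gradient is evaluated at the defended image and passed straight through the defense, which is an assumption made here. All names and parameter values are hypothetical.

```python
import math
import random


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def requantize(x, levels=8):
    """Toy re-quantization defense: snap each value to one of `levels` levels."""
    return [round(v * (levels - 1)) / (levels - 1) for v in x]


def median_filter(x):
    """Toy 1-D median filter with window 3 and edge replication."""
    padded = [x[0]] + list(x) + [x[-1]]
    return [sorted(padded[i:i + 3])[1] for i in range(len(x))]


def loss_gradient(weights, x, label):
    """Gradient of softmax cross-entropy w.r.t. the input of a linear classifier."""
    logits = [sum(w * v for w, v in zip(row, x)) for row in weights]
    errs = [p - (1.0 if k == label else 0.0) for k, p in enumerate(softmax(logits))]
    return [sum(errs[k] * weights[k][i] for k in range(len(weights)))
            for i in range(len(x))]


def randomized_fgsm(x, label, classifiers, defenses, eps=0.1, step=0.02, iters=20, seed=0):
    """Untargeted iterative sign-gradient attack with a random classifier and defense per step."""
    rng = random.Random(seed)
    adv = list(x)
    for _ in range(iters):
        weights = rng.choice(classifiers)   # random classifier for this iteration
        defense = rng.choice(defenses)      # random defense for this iteration
        # Assumption: gradient taken at the defended image, passed straight through.
        grad = loss_gradient(weights, defense(adv), label)
        for i, g in enumerate(grad):
            stepped = adv[i] + step * ((g > 0) - (g < 0))        # sign step that raises the loss
            stepped = min(max(stepped, x[i] - eps), x[i] + eps)  # stay within eps of the original
            adv[i] = min(max(stepped, 0.0), 1.0)                 # stay in the valid range
    return adv


classifiers = [
    [[1.0, -1.0, 0.5, 0.0], [-1.0, 1.0, 0.0, 0.5]],
    [[0.8, -0.5, 0.2, 0.1], [-0.6, 0.9, 0.1, 0.3]],
]
image = [0.9, 0.2, 0.6, 0.4]
adv = randomized_fgsm(image, 0, classifiers, [requantize, median_filter])
print(adv)
```

Because no single classifier or defense drives every step, the perturbation is less likely to overfit to any one of them, which is the motivation stated in the abstract.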
