Charles O'Donnell

Evaluating Collaborative Autonomy in Opposed Environments using Maritime Capture-the-Flag Competitions

Apr 25, 2024

Abstract: The objective of this work is to evaluate multi-agent artificial intelligence methods when deployed on teams of unmanned surface vehicles (USVs) in an adversarial environment. Autonomous agents were evaluated in real-world scenarios using the Aquaticus test bed, a Capture-the-Flag (CTF) style competition involving teams of USV systems. Cooperative teaming algorithms founded on behavior-based optimization and on deep reinforcement learning (RL) were deployed on these USV systems in two-versus-two teams and tested against each other during a competition period in the fall of 2023. Deep reinforcement learning was applied to USV agents via the Pyquaticus test bed, a lightweight gymnasium environment that allows simulated CTF training in a low-level environment. The results of the experiment demonstrate that behavior-based agents using rule-based cooperation outperformed agents trained in deep reinforcement learning paradigms as implemented in these competitions. Further integration of the Pyquaticus gymnasium environment for RL with MOOS-IvP, in terms of configuration and control schema, will allow for more competitive CTF games in future studies. As the development of experimental deep RL methods continues, the authors expect that the competitive gap between behavior-based autonomy and deep RL will be reduced. As such, this report outlines the overall competition, methods, and results, with an emphasis on future work such as reward shaping, sim-to-real methodologies, and extending rule-based cooperation among agents to react to safety and security events in accordance with human experts' intent and rules for executing safety and security processes.

Dynamic and Distributed Optimization for the Allocation of Aerial Swarm Vehicles

Nov 30, 2022

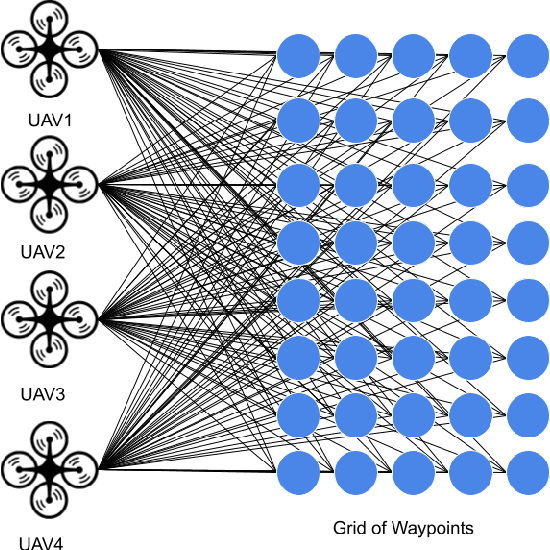

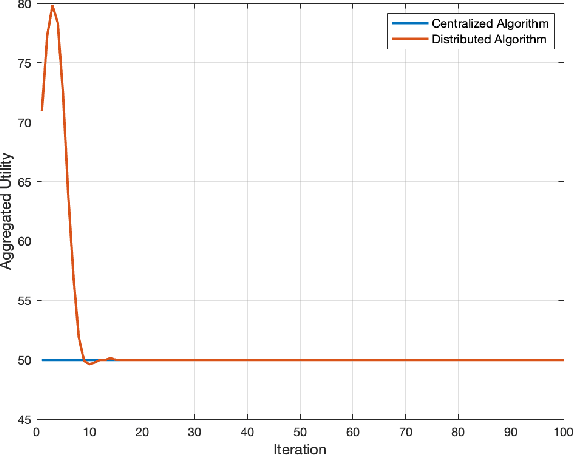

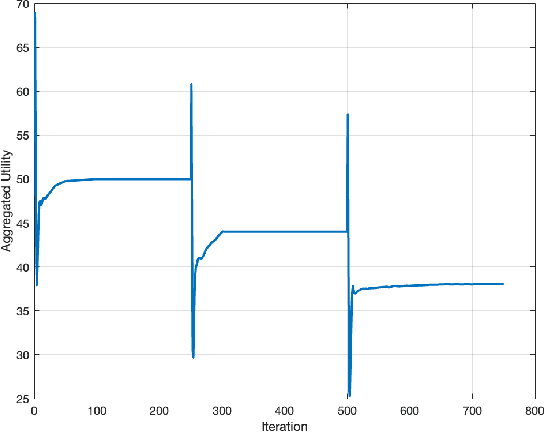

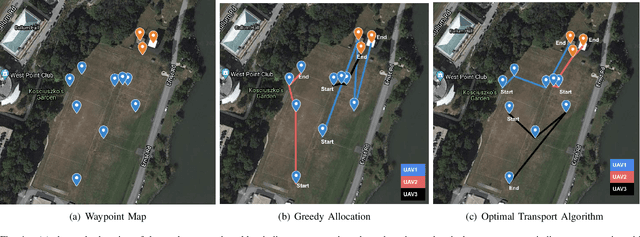

Abstract: Optimal transport (OT) is a framework that can guide the design of efficient resource allocation strategies in a network of multiple sources and targets. This paper applies discrete OT to a swarm of UAVs in a novel way to achieve appropriate task allocation and execution. Drone swarm deployments already operate in multiple domains where sensors are used to gain knowledge of an environment [1]. Use cases such as chemical and radiation detection and thermal and RGB imaging create a specific need for an algorithm that considers parameters on both the UAV side and the waypoint side and allows the matching scheme to be updated as the swarm gains information from the environment. Additionally, the need for a centralized planner can be removed by using a distributed algorithm that can dynamically update based on changes in the swarm network or parameters. To this end, we develop a dynamic and distributed OT algorithm that matches a UAV to the optimal waypoint based on one parameter at the UAV and another parameter at the waypoint. We show the convergence and allocation of the algorithm through a case study and test the algorithm's effectiveness against a greedy assignment algorithm in simulation.
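To illustrate why a globally optimal matching can beat a greedy baseline, the following is a minimal sketch and not the paper's dynamic, distributed algorithm. It assumes equal numbers of UAVs and waypoints, in which case discrete OT with uniform unit masses reduces to a one-to-one assignment problem, and it solves that problem centrally by brute force. The cost matrix and the function names (`greedy_assignment`, `optimal_assignment`, `total_cost`) are hypothetical and chosen only for illustration.

```python
from itertools import permutations


def greedy_assignment(cost):
    """Each UAV, in index order, takes the cheapest waypoint still free."""
    free = set(range(len(cost[0])))
    assignment = []
    for row in cost:
        j = min(free, key=lambda k: row[k])
        free.remove(j)
        assignment.append(j)
    return assignment


def optimal_assignment(cost):
    """Brute-force search over all one-to-one matchings (small swarms only)."""
    n = len(cost)
    best = min(
        permutations(range(n)),
        key=lambda p: sum(cost[i][p[i]] for i in range(n)),
    )
    return list(best)


def total_cost(cost, assignment):
    """Sum of the costs of the chosen UAV-to-waypoint pairs."""
    return sum(cost[i][j] for i, j in enumerate(assignment))


# Hypothetical costs: cost[i][j] is the cost of sending UAV i to waypoint j.
cost = [
    [1.0, 2.0, 6.0],
    [1.5, 10.0, 7.0],
    [5.0, 4.0, 3.0],
]

greedy = greedy_assignment(cost)
optimal = optimal_assignment(cost)
print("greedy :", greedy, total_cost(cost, greedy))
print("optimal:", optimal, total_cost(cost, optimal))
```

In this toy instance the greedy rule lets the first UAV claim the waypoint that the second UAV needs most, so the greedy total is higher than the optimal one. Brute force scales factorially with swarm size; it stands in here only for whatever solver a real deployment would use.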
