Charles E. Leiserson

EvolveGCN: Evolving Graph Convolutional Networks for Dynamic Graphs

Feb 26, 2019

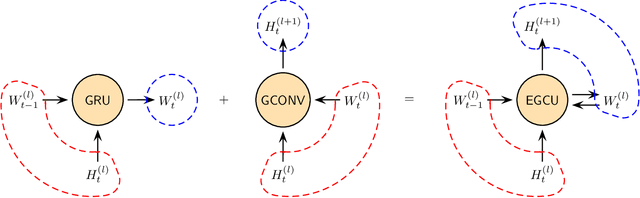

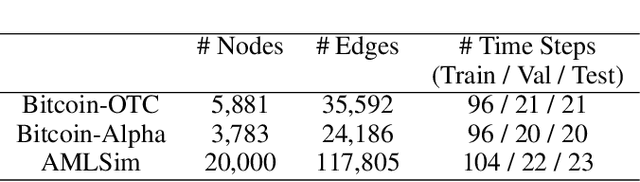

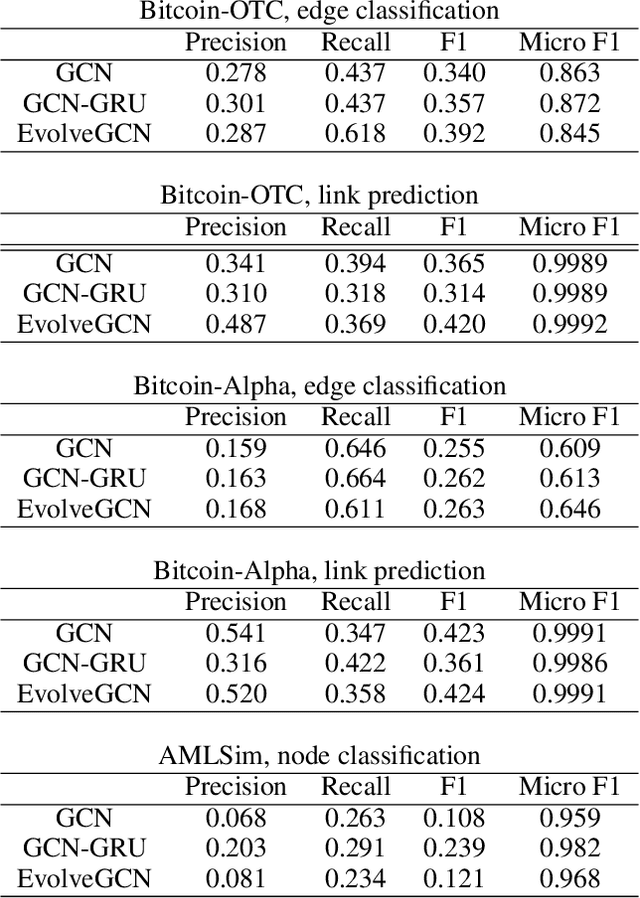

Abstract: Graph representation learning has resurged as a trending research subject owing to the widespread use of deep learning for Euclidean data, which has inspired various creative designs of neural networks in the non-Euclidean domain, particularly graphs. With the success of these graph neural networks (GNNs) in the static setting, we turn to more practical scenarios where the graph evolves dynamically. For this case, combining the GNN with a recurrent neural network (RNN, broadly speaking) is a natural idea. Existing approaches typically learn a single graph model for all the graphs, using the RNN to capture the dynamism of the output node embeddings and to implicitly regulate the graph model. In this work, we propose a different approach, coined EvolveGCN, that uses the RNN to evolve the graph model itself over time. This model adaptation approach is model oriented rather than node oriented, and hence offers greater flexibility in the input. For example, in the extreme case, the model can handle, at a new time step, a completely new set of nodes whose historical information is unknown, because the dynamism has been carried over to the GNN parameters. We evaluate the proposed approach on tasks including node classification, edge classification, and link prediction. The experimental results indicate generally higher performance of EvolveGCN compared with related approaches.
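To make the "evolve the model, not the node embeddings" idea concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the abstract only says an RNN ("broadly speaking") updates the GNN parameters, so the GRU-style gate below, the class name `WeightCell`, the helpers `run_over_snapshots` and `random_snapshot`, and all dimensions are assumptions chosen for illustration. The sketch has no training loop and only shows the forward pass, in which one weight matrix is carried through time and applied to snapshots whose node sets differ in size.

```python
import numpy as np


def normalize_adjacency(adj):
    """Symmetric GCN normalization with self-loops: D^-1/2 (A + I) D^-1/2."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class WeightCell:
    """Hypothetical GRU-style cell whose hidden state is the GCN weight matrix.

    Assumption: the previous weights act as both input and hidden state.
    """

    def __init__(self, in_dim, rng):
        scale = 1.0 / np.sqrt(in_dim)
        self.a_z = rng.normal(0.0, scale, (in_dim, in_dim))  # update gate
        self.a_r = rng.normal(0.0, scale, (in_dim, in_dim))  # reset gate
        self.a_h = rng.normal(0.0, scale, (in_dim, in_dim))  # candidate

    def step(self, w):
        z = sigmoid(self.a_z @ w)
        r = sigmoid(self.a_r @ w)
        candidate = np.tanh(self.a_h @ (r * w))
        return (1.0 - z) * w + z * candidate


def run_over_snapshots(snapshots, w0, cell):
    """Evolve the weights once per time step, then apply one GCN layer."""
    w = w0
    outputs = []
    for adj, feats in snapshots:
        w = cell.step(w)  # the recurrence acts on parameters, not on nodes
        outputs.append(np.maximum(normalize_adjacency(adj) @ feats @ w, 0.0))
    return outputs


def random_snapshot(num_nodes, in_dim, rng):
    """Random undirected graph plus random node features (toy data)."""
    upper = np.triu(rng.random((num_nodes, num_nodes)) < 0.3, k=1)
    adj = (upper | upper.T).astype(float)
    return adj, rng.normal(size=(num_nodes, in_dim))


rng = np.random.default_rng(0)
in_dim, out_dim = 4, 3
cell = WeightCell(in_dim, rng)
w0 = rng.normal(0.0, 0.5, (in_dim, out_dim))
# Each time step has a different number of nodes, with no node history kept.
snapshots = [random_snapshot(n, in_dim, rng) for n in (5, 7, 6)]
embeddings = run_over_snapshots(snapshots, w0, cell)
print([e.shape for e in embeddings])  # [(5, 3), (7, 3), (6, 3)]
```

Because the recurrence runs over the weight matrix rather than over per-node states, nothing in the loop depends on node identity, which is why snapshots of 5, 7, and 6 nodes can be processed in sequence.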
