Charilaos Kanatsoulis

RelBench v2: A Large-Scale Benchmark and Repository for Relational Data

Feb 13, 2026

Abstract:Relational deep learning (RDL) has emerged as a powerful paradigm for learning directly on relational databases by modeling entities and their relationships across multiple interconnected tables. As this paradigm evolves toward larger models and relational foundation models, scalable and realistic benchmarks are essential for enabling systematic evaluation and progress. In this paper, we introduce RelBench v2, a major expansion of the RelBench benchmark for RDL. RelBench v2 adds four large-scale relational datasets spanning scholarly publications, enterprise resource planning, consumer platforms, and clinical records, increasing the benchmark to 11 datasets comprising over 22 million rows across 29 tables. We further introduce autocomplete tasks, a new class of predictive objectives that require models to infer missing attribute values directly within relational tables while respecting temporal constraints, expanding beyond traditional forecasting tasks constructed via SQL queries. In addition, RelBench v2 expands beyond its native datasets by integrating external benchmarks and evaluation frameworks: we translate event streams from the Temporal Graph Benchmark into relational schemas for unified relational-temporal evaluation, interface with ReDeLEx to provide uniform access to 70+ real-world databases suitable for pretraining, and incorporate 4DBInfer datasets and tasks to broaden multi-table prediction coverage. Experimental results demonstrate that RDL models consistently outperform single-table baselines across autocomplete, forecasting, and recommendation tasks, highlighting the importance of modeling relational structure explicitly.

RelGNN: Composite Message Passing for Relational Deep Learning

Feb 10, 2025

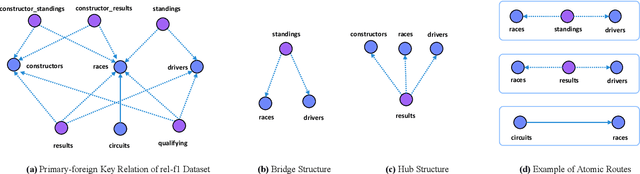

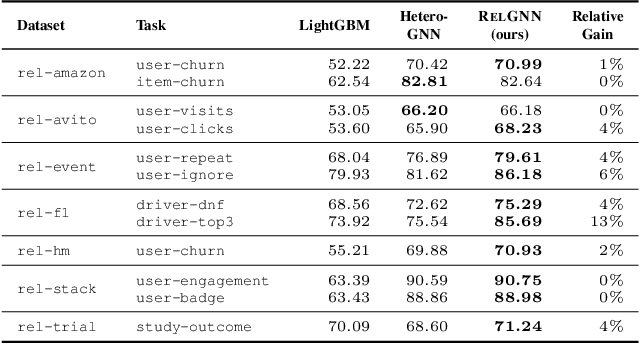

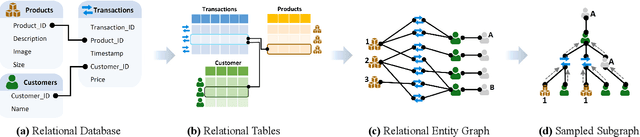

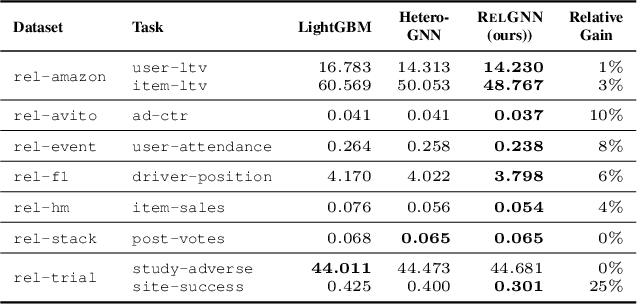

Abstract:Predictive tasks on relational databases are critical in real-world applications spanning e-commerce, healthcare, and social media. To address these tasks effectively, Relational Deep Learning (RDL) encodes relational data as graphs, enabling Graph Neural Networks (GNNs) to exploit relational structures for improved predictions. However, existing heterogeneous GNNs often overlook the intrinsic structural properties of relational databases, leading to modeling inefficiencies. Here we introduce RelGNN, a novel GNN framework specifically designed to capture the unique characteristics of relational databases. At the core of our approach is the introduction of atomic routes, which are sequences of nodes forming high-order tripartite structures. Building upon these atomic routes, RelGNN designs new composite message passing mechanisms between heterogeneous nodes, allowing direct single-hop interactions between them. This approach avoids redundant aggregations and mitigates information entanglement, ultimately leading to more efficient and accurate predictive modeling. RelGNN is evaluated on 30 diverse real-world tasks from RelBench (Fey et al., 2024), and consistently achieves state-of-the-art accuracy with up to 25% improvement.

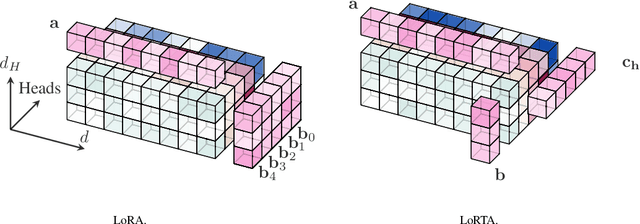

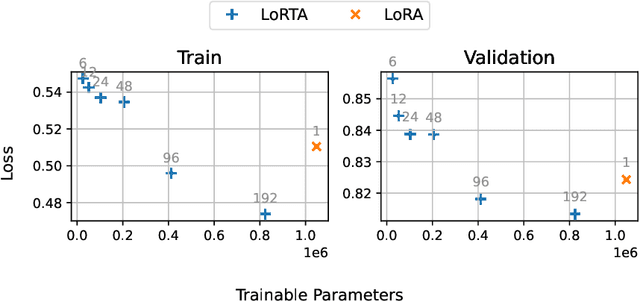

LoRTA: Low Rank Tensor Adaptation of Large Language Models

Oct 15, 2024

Abstract:Low Rank Adaptation (LoRA) is a popular Parameter Efficient Fine Tuning (PEFT) method that effectively adapts large pre-trained models for downstream tasks. LoRA parameterizes model updates using low-rank matrices at each layer, significantly reducing the number of trainable parameters and, consequently, resource requirements during fine-tuning. However, the lower bound on the number of trainable parameters remains high due to the use of the low-rank matrix model. In this paper, we address this limitation by proposing a novel approach that employs a low rank tensor parametrization for model updates. The proposed low rank tensor model can significantly reduce the number of trainable parameters, while also allowing for finer-grained control over adapter size. Our experiments on Natural Language Understanding, Instruction Tuning, Preference Optimization and Protein Folding benchmarks demonstrate that our method is both efficient and effective for fine-tuning large language models, achieving a substantial reduction in the number of parameters while maintaining comparable performance.
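
The parameter-count argument in this abstract can be illustrated with a minimal sketch of the standard LoRA matrix model. The layer sizes and rank below are hypothetical values chosen only for illustration, and the sketch does not implement the tensor parametrization proposed in the paper; it only shows why the matrix model has a floor on adapter size.

```python
import numpy as np

# Hypothetical layer sizes and rank, chosen only for illustration.
d_out, d_in, rank = 64, 48, 4

rng = np.random.default_rng(0)
B = np.zeros((d_out, rank))          # LoRA commonly initializes B to zero,
A = rng.normal(size=(rank, d_in))    # so the update starts at exactly zero

delta_W = B @ A                      # low-rank update added to the frozen weight

full_params = d_out * d_in           # trainable parameters for full fine-tuning
lora_params = rank * (d_out + d_in)  # trainable parameters for the LoRA adapter
min_lora_params = d_out + d_in       # rank 1: the smallest possible matrix adapter

print(full_params, lora_params, min_lora_params)
```

Even at rank 1, the matrix model needs `d_out + d_in` trainable parameters for every adapted weight matrix, which is the lower bound the abstract refers to.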

Space-Time Graph Neural Networks with Stochastic Graph Perturbations

Oct 28, 2022

Abstract:Space-time graph neural networks (ST-GNNs) are recently developed architectures that learn efficient graph representations of time-varying data. ST-GNNs are particularly useful in multi-agent systems, due to their stability properties and their ability to respect communication delays between the agents. In this paper we revisit the stability properties of ST-GNNs and prove that they are stable to stochastic graph perturbations. Our analysis suggests that ST-GNNs are suitable for transfer learning on time-varying graphs and enables the design of generalized convolutional architectures that jointly process time-varying graphs and time-varying signals. Numerical experiments on decentralized control systems validate our theoretical results and showcase the benefits of traditional and generalized ST-GNN architectures.

Deep Convolutional Neural Networks for Multi-Target Tracking: A Transfer Learning Approach

Oct 28, 2022

Abstract:Multi-target tracking (MTT) is a traditional signal processing task, where the goal is to estimate the states of an unknown number of moving targets from noisy sensor measurements. In this paper, we revisit MTT from a deep learning perspective and propose convolutional neural network (CNN) architectures to tackle it. We represent the target states and sensor measurements as images, thereby recasting the problem as an image-to-image prediction task for which we train a fully convolutional model. This architecture is motivated by a novel theoretical bound on the transferability error of CNNs. The proposed CNN architecture outperforms a GM-PHD filter on the MTT task with 10 targets. The CNN performance transfers without re-training to a larger MTT task with 250 targets with only a $13\%$ increase in average OSPA.
