Chaojun Ouyang

Physics-embedded Fourier Neural Network for Partial Differential Equations

Jul 15, 2024

Abstract: We consider solving complex spatiotemporal dynamical systems governed by partial differential equations (PDEs) using frequency-domain discrete learning approaches, such as Fourier neural operators. Despite their widespread use for approximating nonlinear PDEs, most of these methods neglect fundamental physical laws and lack interpretability. We address these shortcomings by introducing Physics-embedded Fourier Neural Networks (PeFNN) with flexible and explainable error control. PeFNN is designed to enforce momentum conservation and yields interpretable nonlinear expressions by using unique multi-scale momentum-conserving Fourier (MC-Fourier) layers and an element-wise product operation. The MC-Fourier layer is by design translation- and rotation-invariant in the frequency domain, serving as a plug-and-play module that adheres to the law of momentum conservation. PeFNN establishes a new state of the art in solving widely employed spatiotemporal PDEs and generalizes well across input resolutions. Further, we demonstrate its outstanding performance on challenging real-world applications such as large-scale flood simulations.
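The abstract does not spell out how the MC-Fourier layer is built. For orientation only, here is a minimal sketch of a generic Fourier-neural-operator-style spectral layer on a periodic 1D grid; it is not PeFNN's layer. It illustrates two properties mentioned in this context: a pointwise multiplication in the frequency domain commutes with circular shifts (translation equivariance), and leaving the zero-frequency weight at 1 preserves the domain sum of the field. The naive DFT, the helper names and the weight values are illustrative assumptions, not trained parameters.

```python
import cmath
from typing import List


def dft(x: List[complex]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[j] * cmath.exp(-2j * cmath.pi * k * j / n) for j in range(n))
            for k in range(n)]


def idft(spec: List[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(spec)
    return [sum(spec[k] * cmath.exp(2j * cmath.pi * k * j / n) for k in range(n)) / n
            for j in range(n)]


def spectral_layer(u: List[float], weights: List[complex]) -> List[float]:
    """Generic FNO-style spectral convolution on a periodic 1D grid.

    Keeps the lowest len(weights) Fourier modes, multiplies each by a complex
    weight, zeroes all higher modes, and transforms back. Mirror modes n-k get
    the conjugate weight so that a real input yields a real output.
    """
    n = len(u)
    assert 1 <= len(weights) <= n // 2, "need 1 <= modes <= n // 2"
    spec = dft([complex(v) for v in u])
    out = [0j] * n
    for k, w in enumerate(weights):
        out[k] = spec[k] * w
        if k != 0:
            out[n - k] = spec[n - k] * w.conjugate()
    return [z.real for z in idft(out)]


def roll(u: List[float], s: int) -> List[float]:
    """Circular shift to the right by s positions (a discrete translation)."""
    s %= len(u)
    return u[-s:] + u[:-s] if s else list(u)


# Hypothetical hand-picked weights standing in for learned parameters.
# weights[0] = 1 leaves the zero-frequency mode, i.e. the domain sum, unchanged.
u = [0.0, 1.0, 3.0, 2.0, -1.0, 0.5, 0.0, 4.0]
w = [1.0 + 0j, 0.5 - 0.2j, 0.1j]
v = spectral_layer(u, w)
print(round(sum(u), 6), round(sum(v), 6))
```

Because the layer only rescales Fourier coefficients, shifting the input by any number of cells shifts the output by the same amount, and the domain sum is controlled entirely by the zero-frequency weight.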

Large-scale flood modeling and forecasting with FloodCast

Mar 18, 2024

Abstract: Large-scale hydrodynamic models generally rely on fixed-resolution spatial grids and model parameters and incur a high computational cost. This limits their ability to accurately forecast flood crests and issue time-critical hazard warnings. In this work, we build FloodCast, a fast, stable, accurate, resolution-invariant, and geometry-adaptive flood modeling and forecasting framework that operates at large scales. The framework comprises two main modules: multi-satellite observation and hydrodynamic modeling. In the multi-satellite observation module, a real-time unsupervised change detection method and a rainfall processing and analysis tool are proposed to harness the full potential of multi-satellite observations in large-scale flood prediction. In the hydrodynamic modeling module, we introduce a geometry-adaptive physics-informed neural solver (GeoPINS) that, like other physics-informed neural networks, requires no training data, and that uses a fast, accurate, and resolution-invariant architecture based on Fourier neural operators. GeoPINS demonstrates impressive performance on popular PDEs across regular and irregular domains. Building upon GeoPINS, we propose a sequence-to-sequence GeoPINS model to handle long-term temporal series and extensive spatial domains in large-scale flood modeling. Next, we establish a benchmark dataset based on the 2022 Pakistan flood to assess various flood prediction methods. Finally, we validate the model in three dimensions: flood inundation range, depth, and transferability of spatiotemporal downscaling. Traditional hydrodynamic modeling and sequence-to-sequence GeoPINS show exceptional agreement at high water levels, while comparisons with SAR-based flood depth data show that sequence-to-sequence GeoPINS outperforms traditional hydrodynamic modeling, with smaller prediction errors.
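In a physics-informed solver such as GeoPINS, the training signal comes from the residual of the governing equations rather than from labeled data. The abstract does not state which equations or discretization GeoPINS uses, so the sketch below assumes the 1D mass-conservation (continuity) equation of the shallow-water system, ∂h/∂t + ∂(hu)/∂x = 0, evaluated with finite differences on a grid. The function name, the grid layout and the difference scheme are illustrative assumptions; a neural solver would predict h and q = hu and be trained to drive such a residual (plus initial and boundary terms) toward zero.

```python
from typing import List

Field = List[List[float]]  # field[t][i]: time step t, grid cell i


def continuity_residual(h: Field, q: Field, dt: float, dx: float) -> float:
    """Mean squared residual of the 1D continuity equation dh/dt + dq/dx = 0.

    h is water depth and q = h * u is unit discharge (an assumed formulation).
    Uses a forward difference in time and a central difference in space on
    interior cells.
    """
    total, count = 0.0, 0
    for t in range(len(h) - 1):
        for i in range(1, len(h[t]) - 1):
            dh_dt = (h[t + 1][i] - h[t][i]) / dt
            dq_dx = (q[t][i + 1] - q[t][i - 1]) / (2.0 * dx)
            r = dh_dt + dq_dx
            total += r * r
            count += 1
    return total / count


# Uniform steady flow satisfies mass conservation, so the residual is zero.
steady_h = [[1.0] * 5 for _ in range(3)]
steady_q = [[0.5] * 5 for _ in range(3)]
print(continuity_residual(steady_h, steady_q, dt=1.0, dx=1.0))

# Depth rising everywhere with no discharge gradient violates it.
rising_h = [[1.0 + 0.1 * t] * 5 for t in range(3)]
zero_q = [[0.0] * 5 for _ in range(3)]
print(continuity_residual(rising_h, zero_q, dt=1.0, dx=1.0))
```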

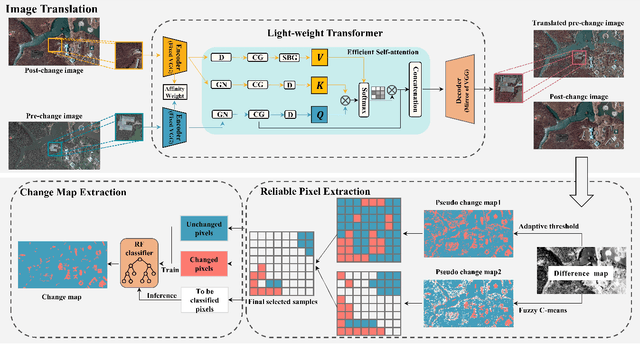

UCDFormer: Unsupervised Change Detection Using a Transformer-driven Image Translation

Aug 02, 2023

Abstract: Change detection (CD), which compares two bi-temporal images, is a crucial task in remote sensing. Because it requires no cumbersome labeled change information, unsupervised CD has attracted extensive attention in the community. However, existing unsupervised CD approaches rarely consider the seasonal and style differences caused by illumination and atmospheric conditions in multi-temporal images. To this end, we propose a change detection setting with domain shift for remote sensing images. Furthermore, we present a novel unsupervised CD method using a lightweight transformer, called UCDFormer. Specifically, a transformer-driven image translation, composed of a lightweight transformer and a domain-specific affinity weight, is first proposed to mitigate the domain shift between two images with real-time efficiency. After image translation, we generate the difference map between the translated before-event image and the original after-event image. Then, a novel reliable pixel extraction module selects significantly changed and unchanged pixel positions by fusing the pseudo change maps of fuzzy c-means clustering and adaptive thresholding. Finally, a binary change map is obtained from these selected pixel pairs and a binary classifier. Experimental results on different unsupervised CD tasks with seasonal and style changes demonstrate the effectiveness of the proposed UCDFormer. For example, compared with several related methods, UCDFormer improves the Kappa coefficient by more than 12%. In addition, UCDFormer achieves excellent performance for earthquake-induced landslide detection in large-scale applications. The code is available at https://github.com/zhu-xlab/UCDFormer
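The abstract names the ingredients of the reliable pixel extraction step but not how the two pseudo change maps are fused. The sketch below assumes the simplest fusion, keeping only pixels on which a two-cluster fuzzy c-means labeling and a threshold labeling of the difference map agree, and it uses Otsu's method as a stand-in for the unspecified adaptive threshold. Images are flattened to 1D lists, the difference map is a plain absolute difference, and the image-translation step is omitted. It also includes Cohen's kappa for binary change maps, the agreement measure behind a Kappa coefficient. All function names are hypothetical.

```python
from typing import List, Tuple


def fcm_two_clusters(x: List[float], m: float = 2.0, iters: int = 50) -> List[int]:
    """1D fuzzy c-means with two clusters.

    Returns 1 where membership in the higher-valued cluster exceeds 0.5.
    """
    c = [min(x), max(x)]  # initial centroids: low, high
    u_hi: List[float] = []
    for _ in range(iters):
        u_hi = []
        for v in x:
            d = [abs(v - ck) + 1e-12 for ck in c]
            # FCM membership of the high cluster: 1 / sum_j (d_hi / d_j)^(2/(m-1))
            u_hi.append(1.0 / sum((d[1] / dj) ** (2.0 / (m - 1.0)) for dj in d))
        w_lo = [(1.0 - uh) ** m for uh in u_hi]
        w_hi = [uh ** m for uh in u_hi]
        c = [sum(wt * v for wt, v in zip(w_lo, x)) / sum(w_lo),
             sum(wt * v for wt, v in zip(w_hi, x)) / sum(w_hi)]
    return [1 if uh > 0.5 else 0 for uh in u_hi]


def otsu_threshold(x: List[float]) -> float:
    """Threshold that maximizes between-class variance (Otsu's method)."""
    xs = sorted(x)
    n, total = len(xs), sum(xs)
    best_t, best_var, cum = xs[0], -1.0, 0.0
    for k in range(1, n):
        cum += xs[k - 1]  # sum of the k smallest values
        mu0, mu1 = cum / k, (total - cum) / (n - k)
        var = (k / n) * ((n - k) / n) * (mu0 - mu1) ** 2
        if var > best_var:
            best_var, best_t = var, (xs[k - 1] + xs[k]) / 2.0
    return best_t


def reliable_pixels(before: List[float], after: List[float]) -> List[Tuple[int, int]]:
    """Pixels where both pseudo change maps agree, as (index, label) pairs."""
    diff = [abs(a - b) for a, b in zip(before, after)]
    by_fcm = fcm_two_clusters(diff)
    t = otsu_threshold(diff)
    by_threshold = [1 if d > t else 0 for d in diff]
    return [(i, a) for i, (a, b) in enumerate(zip(by_fcm, by_threshold)) if a == b]


def cohen_kappa(pred: List[int], ref: List[int]) -> float:
    """Cohen's kappa for binary change maps (1 = changed, 0 = unchanged)."""
    n = len(ref)
    p_obs = sum(p == r for p, r in zip(pred, ref)) / n
    p_pred, p_ref = sum(pred) / n, sum(ref) / n
    p_exp = p_pred * p_ref + (1 - p_pred) * (1 - p_ref)  # chance agreement
    return (p_obs - p_exp) / (1 - p_exp)


before = [0.10, 0.20, 0.10, 0.90, 0.15, 0.20]
after = [0.12, 0.25, 0.80, 0.10, 0.50, 0.21]
print(reliable_pixels(before, after))
print(cohen_kappa([1, 1, 0, 0], [1, 0, 0, 0]))
```

Requiring agreement between two independent labelings trades coverage for precision: ambiguous pixels are simply left out of the pseudo-labeled set that would train the downstream binary classifier.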

Spatial-spectral FFPNet: Attention-Based Pyramid Network for Segmentation and Classification of Remote Sensing Images

Aug 20, 2020

Abstract: We consider the problem of segmentation and classification of high-resolution and hyperspectral remote sensing images. Unlike conventional natural (RGB) images, remote sensing images have an inherently large scale and complex structures, which pose major challenges, such as diverse spatial object distributions and spectral information extraction, when existing models are directly applied to image classification. In this study, we develop an attention-based pyramid network for segmentation and classification of remote sensing datasets. Attention mechanisms are used to develop the following modules: i) a novel and robust attention-based multi-scale fusion method effectively fuses useful spatial or spectral information at the same and different scales; ii) a region pyramid attention mechanism using region-based attention addresses the diversity of target geometric sizes in large-scale remote sensing images; and iii) cross-scale attention in our adaptive atrous spatial pyramid pooling network adapts to varied contents in a feature-embedded space. Different feature fusion pyramid frameworks are established by combining these attention-based modules. First, a novel segmentation framework, called the heavy-weight spatial feature fusion pyramid network (FFPNet), is proposed to address the spatial problem of high-resolution remote sensing images. Second, an end-to-end spatial-spectral FFPNet is presented for classifying hyperspectral images. Experiments conducted on the ISPRS Vaihingen and ISPRS Potsdam high-resolution datasets demonstrate the competitive segmentation accuracy achieved by the proposed heavy-weight spatial FFPNet. Furthermore, experiments on the Indian Pines and the University of Pavia hyperspectral datasets indicate that the proposed spatial-spectral FFPNet outperforms the current state-of-the-art methods in hyperspectral image classification.
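The abstract does not give the form of the attention-based multi-scale fusion. As an illustration only, the sketch below assumes that features from each scale have already been resized to a common length and fuses them with scaled dot-product scores against a query vector followed by a softmax. The query is a hypothetical stand-in for whatever learned context the actual network uses, and the feature values are made up.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features: List[List[float]], query: List[float]) -> List[float]:
    """Fuse per-scale feature vectors (already resized to a common length).

    Each scale is scored by a scaled dot product with the query; the softmax of
    the scores weights a sum of the feature vectors.
    """
    dim = len(query)
    scores = [sum(f * q for f, q in zip(feat, query)) / math.sqrt(dim) for feat in features]
    weights = softmax(scores)
    return [sum(wt * feat[j] for wt, feat in zip(weights, features)) for j in range(dim)]


# Three hypothetical scales (e.g. fine, medium, coarse) pooled to 2-D vectors.
scales = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(attention_fuse(scales, query=[4.0, 0.0]))  # weighted toward the first scale
print(attention_fuse(scales, query=[0.0, 0.0]))  # equal weights: plain average
```

Because the softmax weights sum to one, the fused vector stays a convex combination of the per-scale features; the attention only decides how much each scale contributes.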

DFPENet-geology: A Deep Learning Framework for High Precision Recognition and Segmentation of Co-seismic Landslides

Aug 28, 2019

Abstract: This paper develops a robust model, Dense Feature Pyramid with Encoder-decoder Network (DFPENet), to understand and fuse the multi-scale features of objects in remote sensing images. The proposed method achieves competitive segmentation accuracy on the public ISPRS 2D Semantic dataset. Furthermore, a comprehensive and widely applicable scheme is proposed for co-seismic landslide recognition, which integrates image features extracted from the DFPENet model, geologic features, temporal resolution, landslide spatial analysis, and transfer learning, using only RGB images. To corroborate its feasibility and applicability, the proposed scheme is applied to two earthquake-triggered landslide events in Jiuzhaigou (China) and Hokkaido (Japan), using available pre- and post-earthquake remote sensing images. The experiments show that the proposed scheme achieves new state-of-the-art performance in regional landslide identification and performs well in different seismic landslide recognition tasks, although landslide boundary error is not considered. The proposed scheme demonstrates competitive performance for high-precision, high-efficiency, and cross-scene recognition of earthquake disasters, and may serve as a starting point for applying deep learning methods to co-seismic landslide recognition.
