Changhee Won

TSDF-Sampling: Efficient Sampling for Neural Surface Field using Truncated Signed Distance Field

Nov 29, 2023

Abstract: Multi-view neural surface reconstruction has exhibited impressive results. However, a notable limitation is its prohibitively slow inference compared with traditional techniques, primarily attributed to the dense sampling required to maintain rendering quality. This paper introduces a novel approach that substantially reduces the number of samples by incorporating the Truncated Signed Distance Field (TSDF) of the scene. While prior works have proposed importance sampling, their dependence on initial uniform samples over the entire space makes them unable to avoid performance degradation when using fewer samples. In contrast, our method leverages the TSDF volume generated only from the training views, and it proves to provide a reasonable bound on the sampling for upcoming novel views. As a result, we achieve high rendering quality by fully exploiting the continuous neural SDF estimation within the bounds given by the TSDF volume. Notably, our method is the first approach that can be robustly plugged into a diverse array of neural surface field models, as long as they use the volume rendering technique. Our empirical results show an 11-fold increase in inference speed without compromising performance. The result videos are available at our project page: https://tsdf-sampling.github.io/
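The idea of bounding ray samples with a TSDF can be illustrated with a toy example. The sketch below is not the paper's implementation: the function names, the coarse marching grid, and the planar toy surface are all assumptions made for illustration. It marches coarsely along one ray, finds the first contiguous band where the TSDF magnitude is below the truncation distance, and places a small number of fine samples only inside that band.

```python
import numpy as np

def tsdf_ray_bounds(tsdf_along_ray, t_values, trunc):
    """Return (t_near, t_far) of the first contiguous band with |TSDF| < trunc.

    Hypothetical helper: returns None when the ray never enters the band.
    """
    inside = np.abs(tsdf_along_ray) < trunc
    if not inside.any():
        return None
    first = int(np.argmax(inside))            # index of first in-band sample
    rest = inside[first:]
    # Length of the first contiguous run of in-band samples.
    run_len = len(rest) if rest.all() else int(np.argmin(rest))
    last = first + run_len - 1
    return float(t_values[first]), float(t_values[last])

def sample_within_bounds(bounds, n_samples):
    """Place n_samples uniformly inside the TSDF-derived interval."""
    t_near, t_far = bounds
    return np.linspace(t_near, t_far, n_samples)

# Toy ray: a planar surface at t = 2.0, truncation distance 0.5 (assumed values).
t_coarse = np.linspace(0.0, 4.0, 17)                  # coarse step of 0.25
tsdf_values = np.clip(2.0 - t_coarse, -0.5, 0.5)      # truncated signed distance
bounds = tsdf_ray_bounds(tsdf_values, t_coarse, trunc=0.5)
samples = sample_within_bounds(bounds, 8)
```

In a real renderer the neural SDF would then be queried only at `samples`, rather than at dense uniform positions over the whole ray.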

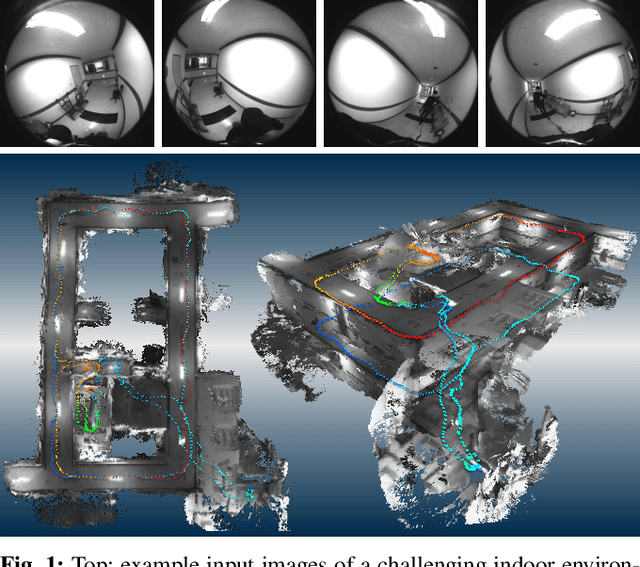

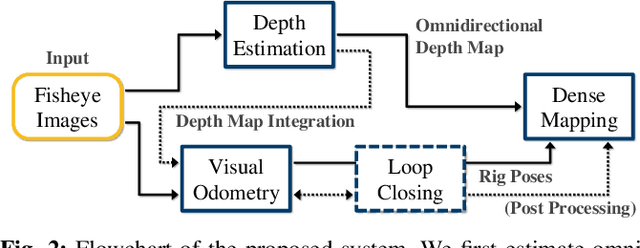

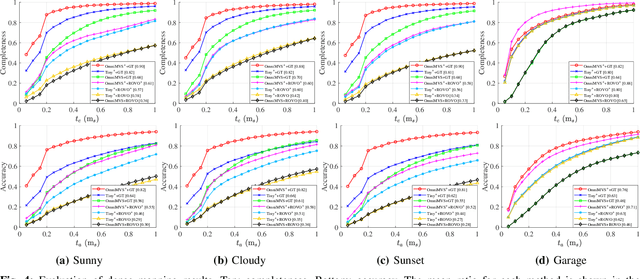

OmniSLAM: Omnidirectional Localization and Dense Mapping for Wide-baseline Multi-camera Systems

Mar 18, 2020

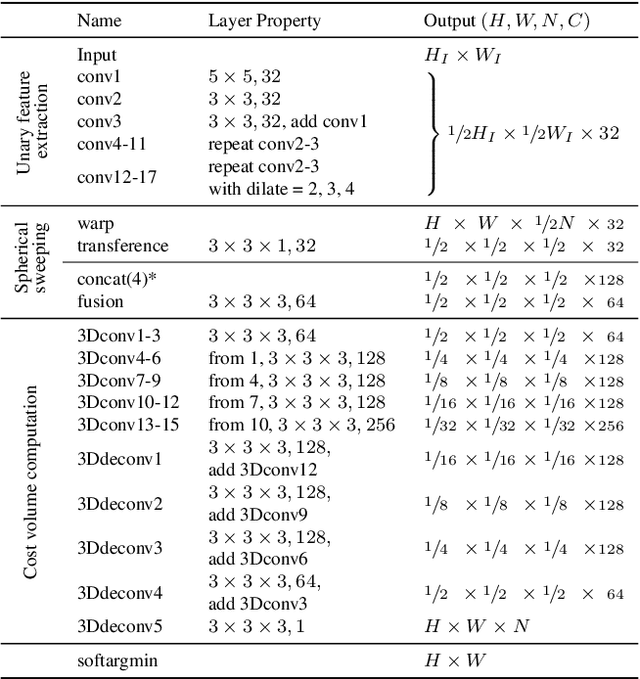

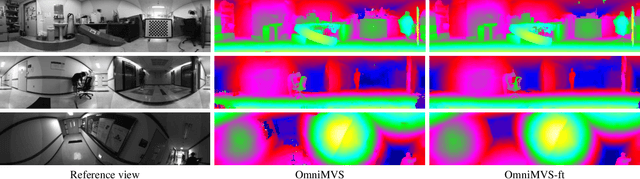

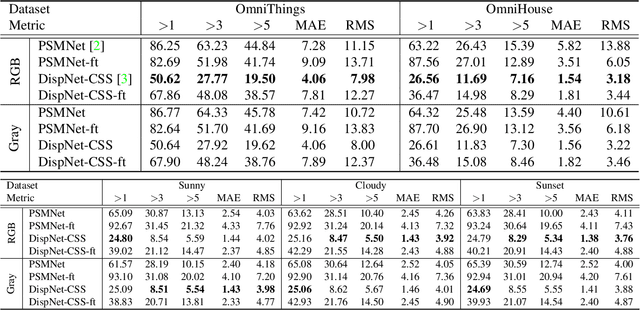

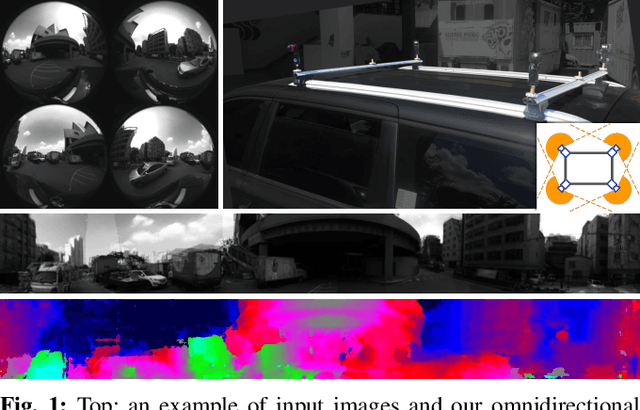

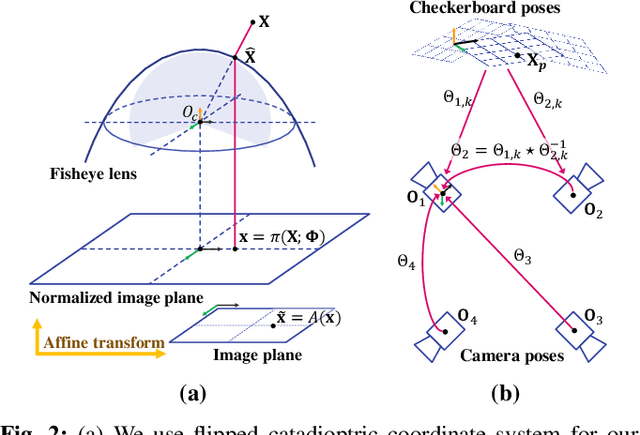

Abstract: In this paper, we present an omnidirectional localization and dense mapping system for a wide-baseline multiview stereo setup with ultra-wide field-of-view (FOV) fisheye cameras, which provides 360-degree coverage of stereo observations of the environment. For more practical and accurate reconstruction, we first introduce improved, lightweight deep neural networks for omnidirectional depth estimation, which are faster and more accurate than the existing networks. Second, we integrate our omnidirectional depth estimates into the visual odometry (VO) and add a loop closing module for global consistency. Using the estimated depth map, we reproject keypoints onto each other view, which leads to a better and more efficient feature matching process. Finally, we fuse the omnidirectional depth maps and the estimated rig poses into the truncated signed distance function (TSDF) volume to acquire a 3D map. We evaluate our method on synthetic datasets with ground truth and on real-world sequences of challenging environments, and the extensive experiments show that the proposed system generates excellent reconstruction results in both synthetic and real-world environments.
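The final fusion step can be sketched with the standard weighted running-average TSDF update. This is a minimal illustration under stated assumptions, not the system's actual code: it handles voxels along a single ray, gives every observation a weight of 1, and the name `integrate_depth` is hypothetical.

```python
import numpy as np

def integrate_depth(tsdf, weight, voxel_depth, measured_depth, trunc):
    """Fuse one depth measurement into voxels lying along a single ray."""
    sdf = measured_depth - voxel_depth        # positive in front of the surface
    visible = sdf > -trunc                    # skip voxels far behind the surface
    d = np.clip(sdf / trunc, -1.0, 1.0)       # normalized, truncated distance
    new_weight = weight + visible             # each observation has weight 1
    fused = (tsdf * weight + d) / np.maximum(new_weight, 1.0)
    return np.where(visible, fused, tsdf), new_weight

# Toy example: five voxels along a ray, two noisy depth measurements (assumed values).
voxel_depth = np.array([1.0, 1.9, 2.0, 2.1, 3.0])
tsdf = np.zeros(5)
weight = np.zeros(5)
tsdf, weight = integrate_depth(tsdf, weight, voxel_depth, 2.0, trunc=0.5)
tsdf, weight = integrate_depth(tsdf, weight, voxel_depth, 2.2, trunc=0.5)
```

In this toy run the fused zero crossing lands at the voxel at depth 2.1, the mean of the two measurements, which is the averaging behavior that makes TSDF fusion robust to per-frame depth noise.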

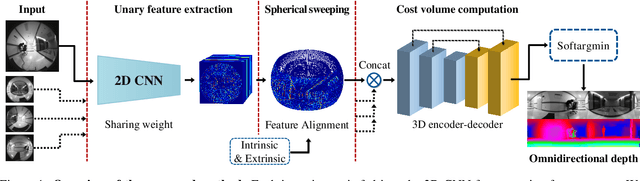

OmniMVS: End-to-End Learning for Omnidirectional Stereo Matching

Aug 17, 2019

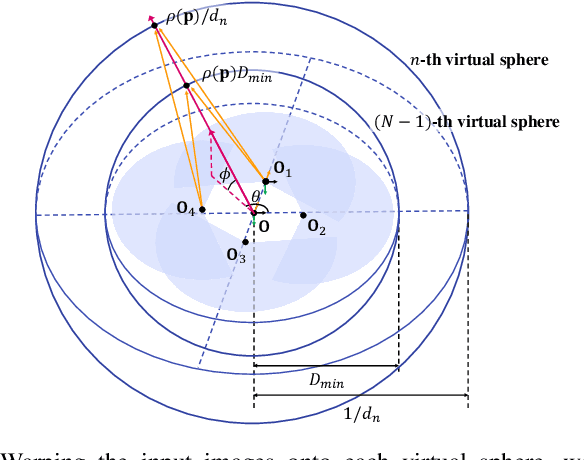

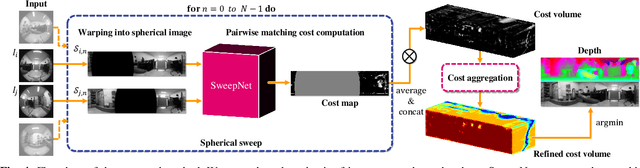

Abstract: In this paper, we propose a novel end-to-end deep neural network model for omnidirectional depth estimation from a wide-baseline multi-view stereo setup. The images captured with ultra-wide field-of-view (FOV) cameras on an omnidirectional rig are processed by the feature extraction module, and then the deep feature maps are warped onto the concentric spheres swept through all candidate depths using the calibrated camera parameters. The 3D encoder-decoder block takes the aligned feature volume to produce the omnidirectional depth estimate with regularization on uncertain regions utilizing the global context information. In addition, we present large-scale synthetic datasets for training and testing omnidirectional multi-view stereo algorithms. Our datasets consist of 11K ground-truth depth maps and 45K fisheye images in four orthogonal directions with various objects and environments. Experimental results show that the proposed method generates excellent results in both synthetic and real-world environments, and it outperforms the prior art and the omnidirectional versions of the state-of-the-art conventional stereo algorithms.
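The warping onto concentric spheres can be sketched as a coordinate lookup. The code below is an illustrative assumption, not the paper's implementation: it uses an ideal equidistant fisheye model (image radius equals focal length times the angle from the optical axis), a rig-to-camera transform `X_cam = R @ X_rig + t`, and hypothetical function names. For each candidate inverse depth it places points on a sphere around the rig center and projects them into one camera, giving the pixel coordinates at which features would be sampled.

```python
import numpy as np

def project_equidistant(points_cam, f, cx, cy):
    """Project 3D camera-frame points with an ideal equidistant fisheye model."""
    x, y, z = points_cam[..., 0], points_cam[..., 1], points_cam[..., 2]
    rho = np.hypot(x, y)                      # distance from the optical axis
    theta = np.arctan2(rho, z)                # angle from the optical axis
    scale = np.where(rho > 1e-12, f * theta / np.maximum(rho, 1e-12), 0.0)
    return np.stack([cx + scale * x, cy + scale * y], axis=-1)

def sweep_coordinates(dirs_rig, inv_depths, R, t, f, cx, cy):
    """Pixel coordinates for each sphere (D) and each ray direction (N)."""
    radii = 1.0 / np.asarray(inv_depths, dtype=float)
    pts_rig = radii[:, None, None] * dirs_rig[None]    # (D, N, 3) sphere points
    pts_cam = pts_rig @ R.T + t                        # rig frame -> camera frame
    return project_equidistant(pts_cam, f, cx, cy)     # (D, N, 2)

# Toy setup (assumed values): one forward-looking ray, camera offset 0.5 from the rig center.
dirs = np.array([[0.0, 0.0, 1.0]])
uv = sweep_coordinates(dirs, [1.0, 0.5], np.eye(3),
                       np.array([-0.5, 0.0, 0.0]), f=100.0, cx=200.0, cy=200.0)
```

Because the camera is offset from the rig center, the same ray direction projects to different pixels on different spheres; the sphere whose warped features agree across cameras indicates the depth.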

SweepNet: Wide-baseline Omnidirectional Depth Estimation

Feb 28, 2019

Abstract: Omnidirectional depth sensing has an advantage over conventional stereo systems since it enables us to recognize objects of interest in all directions without any blind regions. In this paper, we propose a novel wide-baseline omnidirectional stereo algorithm which computes a dense depth estimate from fisheye images using a deep convolutional neural network. The capture system consists of multiple cameras mounted on a wide-baseline rig with ultra-wide field of view (FOV) lenses, and we present a calibration algorithm for the extrinsic parameters based on bundle adjustment. Instead of estimating depth maps from multiple sets of rectified images and stitching them, our approach directly generates one dense omnidirectional depth map with full 360-degree coverage in the rig global coordinate system. To this end, the proposed neural network is designed to output the cost volume from the warped images in the sphere sweeping method, and the final depth map is estimated by taking the minimum-cost indices of the cost volume aggregated by SGM. For training the deep neural network and testing the entire system, realistic synthetic urban datasets are rendered using Blender. The experiments using the synthetic and real-world datasets show that our algorithm outperforms conventional depth estimation methods and generates highly accurate depth maps.
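The last step, picking the minimum-cost index per pixel, can be sketched as follows. This is a toy illustration with assumed names and values; the SGM aggregation itself is not implemented here, and the cost volume is taken as already aggregated.

```python
import numpy as np

def depth_from_cost_volume(cost, inv_depths):
    """Winner-takes-all depth: pick the lowest-cost sphere index per pixel.

    cost has shape (D, H, W); inv_depths has shape (D,).
    """
    idx = np.argmin(cost, axis=0)                     # (H, W) best sphere index
    return 1.0 / np.asarray(inv_depths)[idx], idx

# Toy aggregated cost volume: 3 candidate spheres, 2x2 pixels (assumed values).
inv_depths = np.array([1.0, 0.5, 0.25])
cost = np.array([
    [[0.1, 0.9], [0.8, 0.7]],
    [[0.5, 0.2], [0.9, 0.6]],
    [[0.9, 0.8], [0.3, 0.1]],
])
depth, idx = depth_from_cost_volume(cost, inv_depths)
```

Sampling spheres uniformly in inverse depth, as assumed here, puts more candidates near the rig, where a fixed pixel shift corresponds to a smaller depth change.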
