Hochang Seok

OmniSLAM: Omnidirectional Localization and Dense Mapping for Wide-baseline Multi-camera Systems

Mar 18, 2020

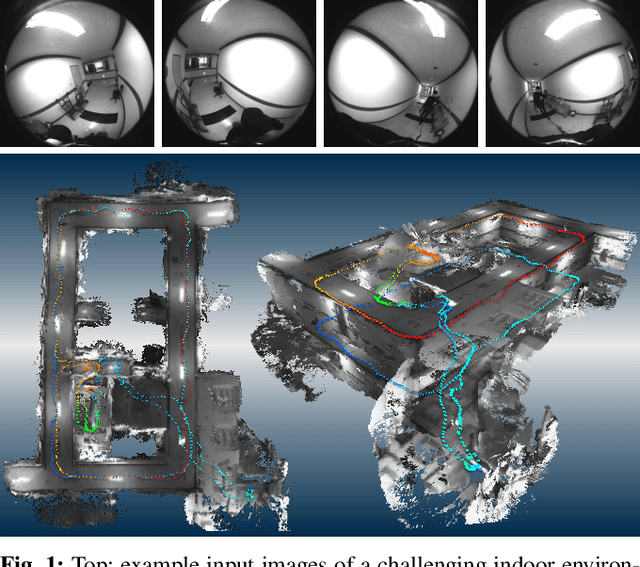

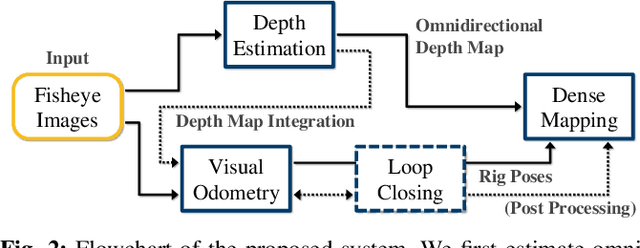

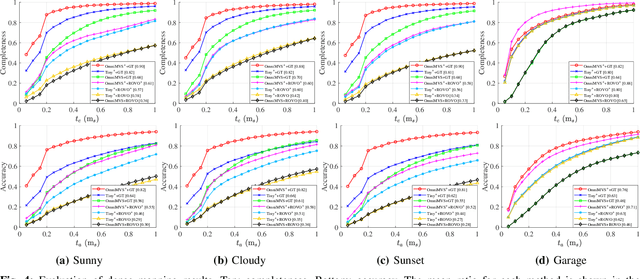

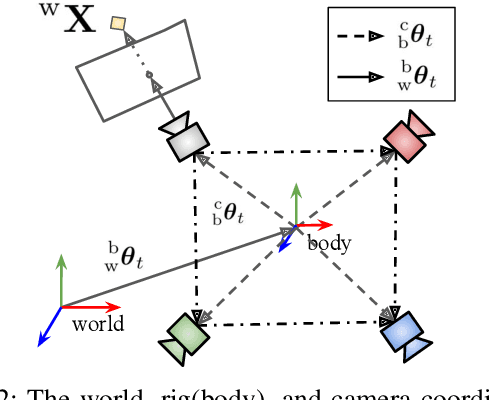

Abstract: In this paper, we present an omnidirectional localization and dense mapping system for a wide-baseline multiview stereo setup with ultra-wide field-of-view (FOV) fisheye cameras, which provides 360-degree coverage of stereo observations of the environment. For more practical and accurate reconstruction, we first introduce improved, lightweight deep neural networks for omnidirectional depth estimation, which are faster and more accurate than existing networks. Second, we integrate our omnidirectional depth estimates into the visual odometry (VO) pipeline and add a loop closing module for global consistency. Using the estimated depth map, we reproject keypoints onto each other view, which leads to a better and more efficient feature matching process. Finally, we fuse the omnidirectional depth maps and the estimated rig poses into a truncated signed distance function (TSDF) volume to acquire a 3D map. We evaluate our method on synthetic datasets with ground truth and on real-world sequences of challenging environments, and extensive experiments show that the proposed system generates excellent reconstruction results in both synthetic and real-world environments.
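The abstract does not spell out how depth maps are fused into the TSDF volume. As a rough illustration only, the sketch below shows the standard weighted running-average TSDF update for a single voxel along one camera ray. The function name `tsdf_update`, its parameters, and the unit observation weight are assumptions made for this example and are not taken from the paper.

```python
def tsdf_update(tsdf, weight, voxel_depth, observed_depth, trunc, obs_weight=1.0):
    """Fuse one depth observation into one voxel (hypothetical helper).

    tsdf, weight    -- current normalized TSDF value and accumulated weight
    voxel_depth     -- distance from the camera to the voxel along the ray
    observed_depth  -- depth measured along the same ray
    trunc           -- truncation distance (same units as the depths)
    """
    # Signed distance: positive in front of the surface, negative behind it.
    sdf = observed_depth - voxel_depth
    if sdf < -trunc:
        # Voxel lies far behind the observed surface: it is occluded, so skip.
        return tsdf, weight
    # Normalize and truncate to [-1, 1].
    d = max(-1.0, min(1.0, sdf / trunc))
    # Weighted running average of all observations so far.
    new_weight = weight + obs_weight
    new_tsdf = (tsdf * weight + d * obs_weight) / new_weight
    return new_tsdf, new_weight
```

In a full system this update would run over every voxel that projects into each depth map, using the estimated rig pose to place the camera in the volume; the surface is then extracted at the zero crossing of the fused values.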

ROVO: Robust Omnidirectional Visual Odometry for Wide-baseline Wide-FOV Camera Systems

Mar 01, 2019

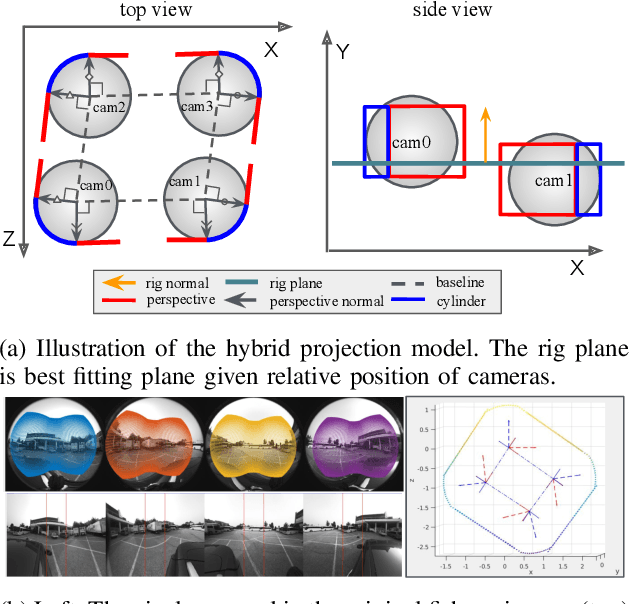

Abstract: In this paper, we propose a robust visual odometry system for a wide-baseline camera rig with wide field-of-view (FOV) fisheye lenses, which provides full omnidirectional stereo observations of the environment. For more robust and accurate ego-motion estimation, we add three components to the standard VO pipeline: 1) a hybrid projection model for improved feature matching, 2) a multi-view P3P RANSAC algorithm for pose estimation, and 3) online update of the rig extrinsic parameters. The hybrid projection model combines the perspective and cylindrical projections to maximize the overlap between views and minimize the image distortion that degrades feature matching performance. The multi-view P3P RANSAC algorithm extends the conventional P3P RANSAC to multi-view images so that all feature matches in all views are considered in the inlier counting for robust pose estimation. Finally, the online extrinsic calibration is seamlessly integrated into the backend optimization framework so that changes in camera poses due to shocks or vibrations can be corrected automatically. The proposed system is extensively evaluated on synthetic datasets with ground truth and on real sequences of highly dynamic environments, and its superior performance is demonstrated.
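The multi-view inlier counting idea can be sketched as follows. This is a minimal illustration, not the paper's implementation: it assumes each observation is a unit bearing vector (a natural choice for fisheye lenses), that each camera's extrinsics map rig coordinates to camera coordinates, and that inliers are decided by an angular threshold. The function names and the 1-degree default threshold are hypothetical.

```python
import math

def mat_vec(m, v):
    """Multiply a 3x3 matrix (nested lists) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def transform(rotation, translation, point):
    """Apply the rigid transform x -> R x + t."""
    rotated = mat_vec(rotation, point)
    return [rotated[i] + translation[i] for i in range(3)]

def count_multiview_inliers(rig_pose, cameras, observations, max_angle_deg=1.0):
    """Count matches in ALL cameras that agree with one candidate rig pose.

    rig_pose     -- (R, t) mapping world coordinates to rig coordinates
    cameras      -- list of (R, t) mapping rig coordinates to each camera
    observations -- list of (camera_index, world_point, unit_bearing)
    """
    rig_r, rig_t = rig_pose
    cos_thresh = math.cos(math.radians(max_angle_deg))
    inliers = 0
    for cam_index, point_world, bearing in observations:
        cam_r, cam_t = cameras[cam_index]
        point_rig = transform(rig_r, rig_t, point_world)
        point_cam = transform(cam_r, cam_t, point_rig)
        norm = math.sqrt(sum(c * c for c in point_cam))
        if norm == 0.0:
            continue  # point at the camera center: direction undefined
        # Cosine of the angle between predicted and observed rays.
        cos_angle = sum(point_cam[i] * bearing[i] for i in range(3)) / norm
        if cos_angle >= cos_thresh:
            inliers += 1
    return inliers
```

Inside a RANSAC loop, each candidate pose hypothesized from a minimal sample in one view would be scored with a function like this, so that a pose supported by matches across the whole rig beats one that only fits a single camera.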
