Carlos A. Reyes-García

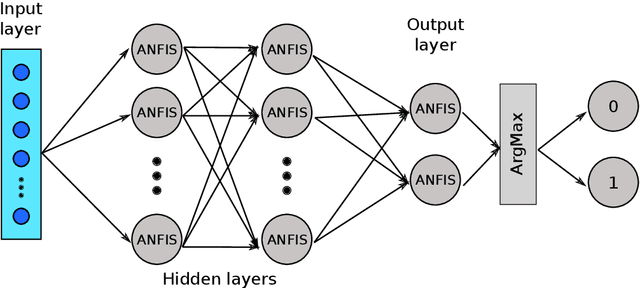

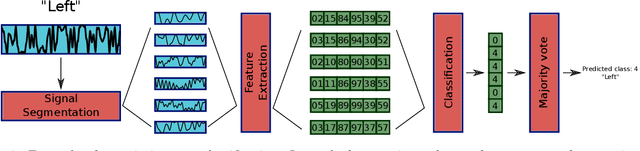

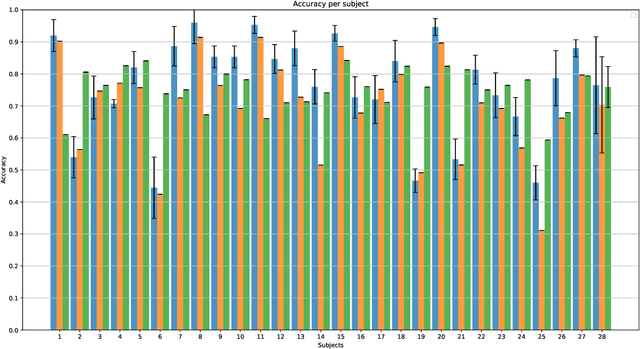

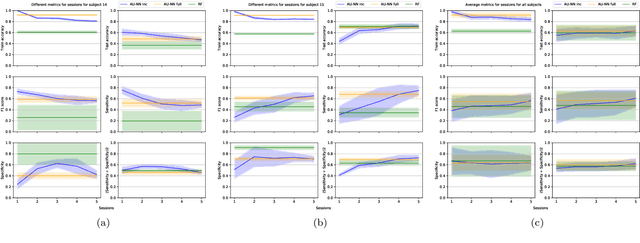

AU-NN: ANFIS Unit Neural Network

Apr 21, 2022

Abstract: This paper describes the ANFIS Unit Neural Network (AU-NN), a deep neural network in which each neuron is an independent ANFIS. Two use cases test the capability of the network: (i) classification of five imagined words, and (ii) incremental learning in the task of detecting imagined word segments vs. idle state segments. In both cases, the proposed network outperforms the conventional methods. The paper also describes a classification process in which, instead of taking the whole instance as one example, each instance is decomposed into a set of smaller instances, and the classification is done by a majority vote over all the predictions of the set. The code used to build the AU-NN in this paper is available in the GitHub repository https://github.com/tonahdztoro/AU_NN.
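The majority-vote classification described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the fixed-length sliding window, and the toy per-window classifier are all assumptions made for illustration; in the paper the per-window predictions would come from the AU-NN.

```python
from collections import Counter
from typing import Callable, Hashable, List, Sequence


def split_into_subinstances(
    signal: Sequence[float], window: int, step: int
) -> List[Sequence[float]]:
    """Cut one instance into fixed-length windows (hypothetical decomposition)."""
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, step)]


def classify_by_majority_vote(
    signal: Sequence[float],
    classify_window: Callable[[Sequence[float]], Hashable],
    window: int,
    step: int,
) -> Hashable:
    """Predict a label for every sub-instance and return the most frequent one."""
    subinstances = split_into_subinstances(signal, window, step)
    if not subinstances:
        raise ValueError("signal is shorter than one window")
    votes = Counter(classify_window(sub) for sub in subinstances)
    # On a tie, Counter.most_common keeps the label that was seen first.
    return votes.most_common(1)[0][0]


def toy_classifier(sub: Sequence[float]) -> str:
    """Stand-in for a trained model: labels a window by the sign of its mean."""
    return "pos" if sum(sub) / len(sub) >= 0 else "neg"


if __name__ == "__main__":
    instance = [1, 1, 1, 1, -1, -1, 1, 1]
    print(classify_by_majority_vote(instance, toy_classifier, window=2, step=2))
```

With this toy input, three of the four windows vote "pos", so the whole instance is labeled "pos" even though one window disagrees.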

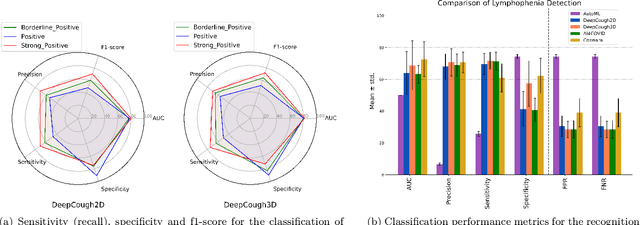

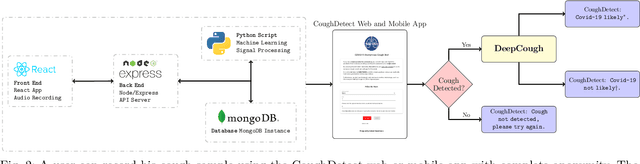

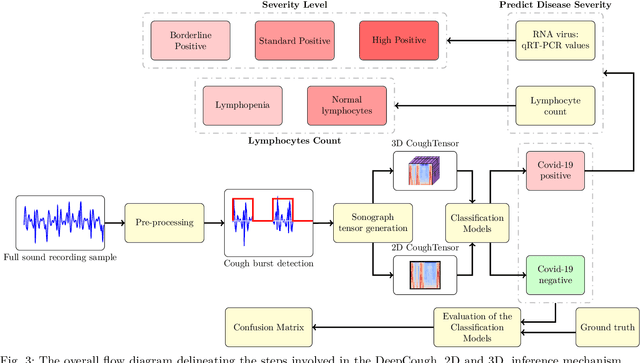

A Generic Deep Learning Based Cough Analysis System from Clinically Validated Samples for Point-of-Need Covid-19 Test and Severity Levels

Nov 10, 2021

Abstract: We seek to evaluate the detection performance of a rapid primary screening tool for Covid-19 based solely on the cough sound, using 8,380 clinically validated samples with laboratory molecular tests (2,339 Covid-19 positives and 6,041 Covid-19 negatives). Samples were clinically labeled according to the results and severity based on quantitative RT-PCR (qRT-PCR) analysis, cycle threshold, and lymphocyte count from the patients. Our proposed generic method is an algorithm based on Empirical Mode Decomposition (EMD) with subsequent classification based on a tensor of audio features and a deep artificial neural network classifier with convolutional layers called DeepCough. Two different versions of DeepCough based on the number of tensor dimensions, i.e. DeepCough2D and DeepCough3D, have been investigated. These methods have been deployed in a multi-platform proof-of-concept Web App, CoughDetect, to administer this test anonymously. Covid-19 recognition achieved a promising AUC (Area Under Curve) of 98.80±0.83%, sensitivity of 96.43±1.85%, and specificity of 96.20±1.74%, and an AUC of 81.08±5.05% for the recognition of three severity levels. Our proposed web tool and underpinning algorithm for the robust, fast, point-of-need identification of Covid-19 facilitates the rapid detection of the infection. We believe that it has the potential to significantly hamper the Covid-19 pandemic across the world.
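For readers unfamiliar with the reported metrics, the following sketch shows how sensitivity, specificity, and AUC are defined for a binary screening test. The labels and scores are made-up toy values, not data from the paper, and the function names are hypothetical; the AUC is computed with the standard pairwise-ranking definition rather than any particular library routine.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary labels, 1 = positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive scores higher than a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    # Each positive/negative pair counts 1 for a win and 0.5 for a tie.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Toy example: 3 positives and 4 negatives with classifier scores.
    y_true = [1, 1, 1, 0, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2, 0.1]
    y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5
    print(sensitivity_specificity(y_true, y_pred))
    print(auc(y_true, scores))
```

Sensitivity and specificity depend on the chosen decision threshold, while AUC summarizes ranking quality across all thresholds, which is why the abstract reports all three.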

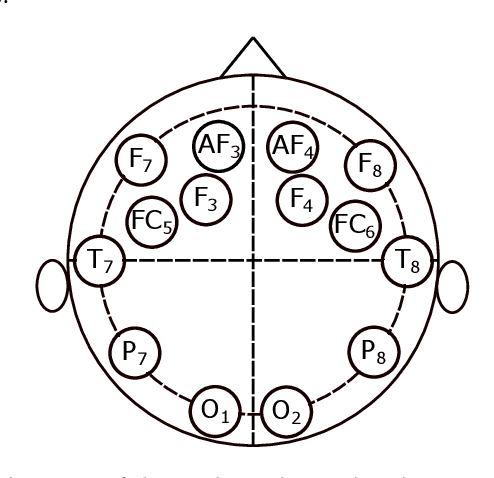

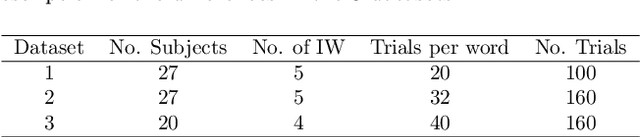

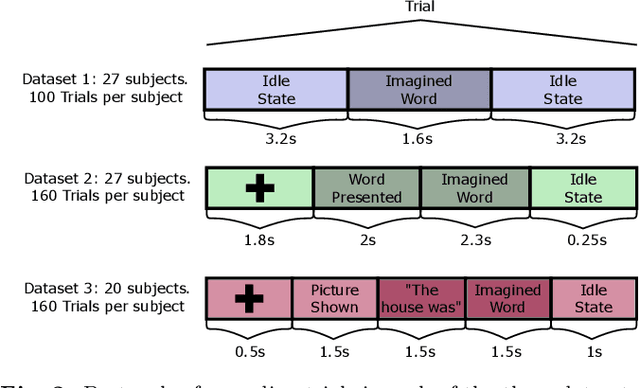

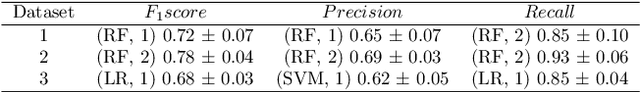

Toward asynchronous EEG-based BCI: Detecting imagined words segments in continuous EEG signals

Apr 13, 2021

Abstract: An asynchronous Brain-Computer Interface (BCI) based on imagined speech is a tool that allows the user to control an external device or to emit a message at the moment the user desires, by decoding EEG signals of imagined speech. In order to correctly implement this type of BCI, we must be able to detect, from a continuous signal, when the subject starts to imagine words. In this work, five methods of feature extraction based on wavelet decomposition, empirical mode decomposition, frequency energies, fractal dimension, and chaos theory features are presented to solve the task of detecting imagined word segments in continuous EEG signals, as a preliminary study for a later implementation of an asynchronous BCI based on imagined speech. These methods are tested on three datasets using four different classifiers, and the highest F1 scores obtained are 0.73, 0.79, and 0.68 for each dataset, respectively. These results are promising for building a system that automates the segmentation of imagined word segments for later classification.
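One way to frame the detection task as a classification problem that can be scored with F1 is sketched below. This is an assumed setup, not the paper's protocol: the continuous recording is cut into sliding windows, a window is labeled as an imagined-word segment when more than half of it falls inside an annotated interval, and window-level predictions are then scored. The function names, the overlap rule, and the toy values are all hypothetical.

```python
from typing import List, Sequence, Tuple


def label_windows(
    n_samples: int,
    window: int,
    step: int,
    word_intervals: Sequence[Tuple[int, int]],
) -> List[int]:
    """Label each sliding window 1 (imagined word) or 0 (idle).

    Intervals are half-open [start, end) sample ranges and are assumed
    not to overlap each other.
    """
    labels = []
    for start in range(0, n_samples - window + 1, step):
        end = start + window
        overlap = sum(max(0, min(end, b) - max(start, a)) for a, b in word_intervals)
        labels.append(1 if 2 * overlap > window else 0)
    return labels


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    truth = label_windows(n_samples=12, window=4, step=2, word_intervals=[(4, 9)])
    predicted = [0, 1, 1, 1, 0]  # made-up classifier output for the five windows
    print(truth, f1_score(truth, predicted))
```

In a real pipeline, the per-window features (wavelet, EMD, frequency energy, and so on) and the classifier would replace the made-up `predicted` list.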

* 10 pages, 14 figures

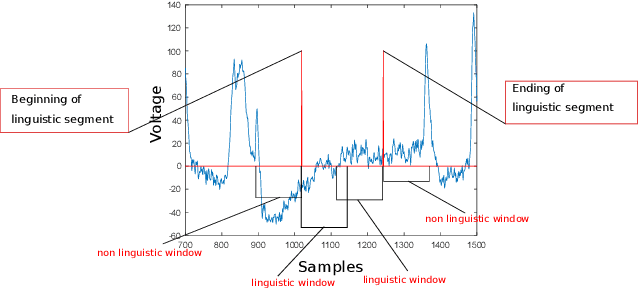

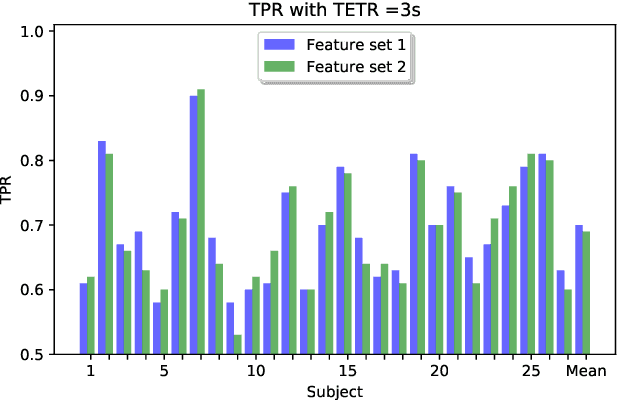

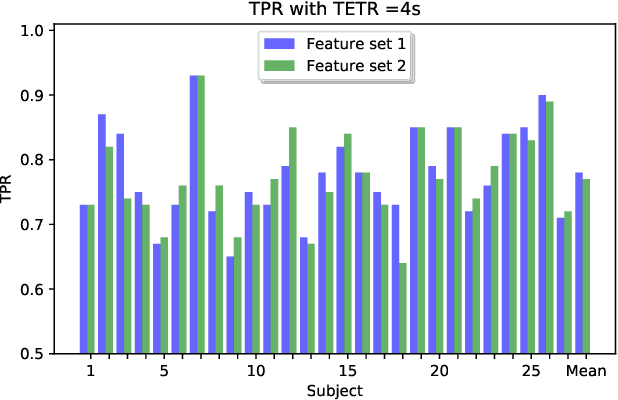

An algorithm for onset detection of linguistic segments in continuous electroencephalogram signals

Dec 11, 2020

Abstract: A Brain-Computer Interface based on imagined words can decode the word a subject is thinking of from brain signals in order to control an external device. To build a fully asynchronous Brain-Computer Interface based on imagined words, with electroencephalogram signals as the source, we need to solve the problem of detecting the onset of the imagined words. Although there has been some research in this field, the problem has not been fully solved. In this paper we present an approach to this problem that uses values from statistics, information theory, and chaos theory as features to correctly identify the onset of imagined words in a continuous signal. In detecting the onsets of imagined words, the highest True Positive Rate achieved by our approach was obtained using features based on the generalized Hurst exponent: 0.69 and 0.77 with a timing error tolerance region of 3 and 4 seconds, respectively.
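A True Positive Rate with a timing tolerance can be computed as in the sketch below. The abstract does not specify how the tolerance region is applied, so this example assumes that a true onset counts as detected when an unused detection lies within plus or minus `tolerance` seconds of it, and that each detection can match at most one true onset. The function name, the greedy matching, and the toy onset times are hypothetical.

```python
from typing import List, Sequence


def onset_true_positive_rate(
    true_onsets: Sequence[float],
    detected_onsets: Sequence[float],
    tolerance: float,
) -> float:
    """Fraction of true onsets matched by a detection within +/- tolerance.

    Matching is greedy in time order and one-to-one; this is a simple
    sketch, not an optimal assignment.
    """
    if not true_onsets:
        raise ValueError("at least one true onset is required")
    remaining: List[float] = sorted(detected_onsets)
    hits = 0
    for onset in sorted(true_onsets):
        match = next((d for d in remaining if abs(d - onset) <= tolerance), None)
        if match is not None:
            remaining.remove(match)  # a detection cannot be reused
            hits += 1
    return hits / len(true_onsets)


if __name__ == "__main__":
    true_onsets = [10.0, 30.0, 50.0]      # seconds, made-up annotations
    detected = [12.0, 34.5, 80.0]         # seconds, made-up detector output
    for tol in (3.0, 5.0):
        print(tol, onset_true_positive_rate(true_onsets, detected, tol))
```

Widening the tolerance can only keep or raise the rate, which is consistent with the abstract reporting a higher value for the larger tolerance region.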
