Toward asynchronous EEG-based BCI: Detecting imagined words segments in continuous EEG signals

Paper and Code

Apr 13, 2021

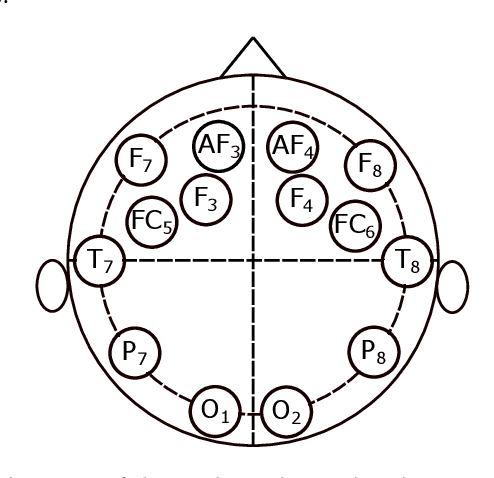

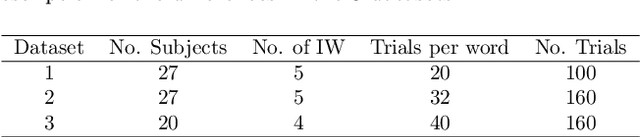

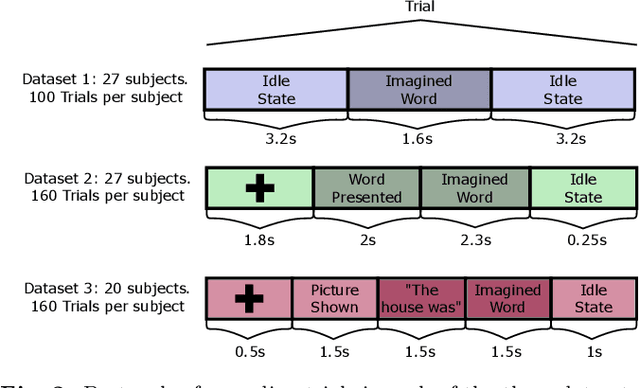

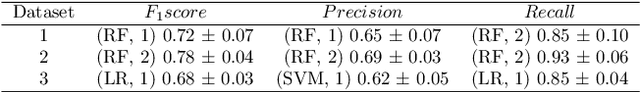

An asynchronous Brain-Computer Interface (BCI) based on imagined speech is a tool that lets a user control an external device or emit a message at the moment of their choosing by decoding EEG signals of imagined speech. To implement this type of BCI correctly, we must be able to detect, from a continuous signal, when the subject starts to imagine words. In this work, five feature extraction methods based on wavelet decomposition, empirical mode decomposition, frequency energies, fractal dimension, and chaos theory features are presented to solve the task of detecting imagined-word segments in continuous EEG signals, as a preliminary study for a later implementation of an asynchronous BCI based on imagined speech. These methods are tested on three datasets using four different classifiers, and the highest F1 scores obtained are 0.73, 0.79, and 0.68 for the three datasets, respectively. These results are promising for building a system that automates the segmentation of imagined-word segments for later classification.
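To make the detection task concrete, the following is a minimal sketch of window-level segment detection scored with F1. It is not the authors' pipeline: the mean-energy feature, the fixed threshold, the window size, and the toy signal are all assumptions chosen for illustration, standing in for the five feature extraction methods and four classifiers named in the abstract.

```python
from typing import List, Sequence


def sliding_windows(signal: Sequence[float], size: int, step: int) -> List[Sequence[float]]:
    """Split a 1-D signal into fixed-size windows taken every `step` samples."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, step)]


def mean_energy(window: Sequence[float]) -> float:
    """Mean squared amplitude of one window (a simple stand-in feature)."""
    return sum(x * x for x in window) / len(window)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """F1 for the positive class (1 = imagined-word window, 0 = idle)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy single-channel signal (assumed data): idle, a higher-amplitude burst, idle.
signal = [0.1, -0.1] * 4 + [1.0, -1.0] * 4 + [0.1, -0.1] * 4
windows = sliding_windows(signal, size=4, step=4)
features = [mean_energy(w) for w in windows]

threshold = 0.5  # hypothetical rule; a trained classifier would replace it
y_pred = [1 if e > threshold else 0 for e in features]
y_true = [0, 0, 1, 1, 0, 0]  # assumed ground-truth labels, one per window
score = f1_score(y_true, y_pred)
```

In a real setting each window would span multiple EEG channels and the per-window features would feed a trained classifier; the F1 computation over window labels stays the same.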
