Caixia Zhou

Generative Edge Detection with Stable Diffusion

Oct 04, 2024

Abstract: Edge detection is typically viewed as a pixel-level classification problem, mainly addressed by discriminative methods. Recently, generative edge detection methods, especially diffusion-model-based solutions, have been introduced to the edge detection task. Despite their great potential, the retraining of task-specific modules and multi-step denoising inference limit their broader application. Upon closer investigation, we speculate that part of the reason is the under-exploration of the rich discriminative information encoded in extensively pre-trained large models (e.g., stable diffusion models). Thus motivated, we propose a novel approach, named Generative Edge Detector (GED), that fully utilizes the potential of the pre-trained stable diffusion model. Our model can be trained and run at inference efficiently without a task-specific network design, owing to the rich high-level and low-level prior knowledge provided by the pre-trained stable diffusion model. Specifically, we propose to finetune the denoising U-Net and predict latent edge maps directly, taking the latent image feature maps as input. Additionally, because edges are subjective and ambiguous, we also incorporate the granularity of the edges into the denoising U-Net as one of its conditions to achieve controllable and diverse predictions. Furthermore, we devise a granularity regularization to ensure the relative granularity relationship among the multiple predictions. We conduct extensive experiments on multiple datasets and achieve competitive performance (e.g., 0.870 and 0.880 in terms of ODS and OIS on the BSDS test dataset).
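The abstract does not give the form of the granularity regularization. As a minimal sketch of one way an ordering constraint between predictions could be expressed, the hypothetical function below penalizes a coarser prediction whose mean edge response exceeds that of a finer one. The function name, the use of mean edge density as the measure of granularity, and the hinge form are all assumptions made for illustration, not the GED formulation.

```python
def granularity_order_penalty(edge_maps, margin=0.0):
    """Hinge penalty on the ordering of edge densities (illustrative assumption).

    edge_maps: list of flat lists of edge probabilities in [0, 1], ordered from
    the coarsest granularity to the finest. Assumes a finer granularity should
    produce at least as much edge response as a coarser one.
    """
    if any(len(m) == 0 for m in edge_maps):
        raise ValueError("every edge map must contain at least one pixel")

    # Mean edge probability per map, used here as a stand-in for "granularity".
    densities = [sum(m) / len(m) for m in edge_maps]

    penalty = 0.0
    # Compare each map with the next finer one; penalize order violations only.
    for coarse, fine in zip(densities, densities[1:]):
        penalty += max(0.0, coarse - fine + margin)
    return penalty
```

When the predictions are already ordered from sparse to dense the penalty is zero, so a term of this kind would only act on pairs that violate the intended ordering.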

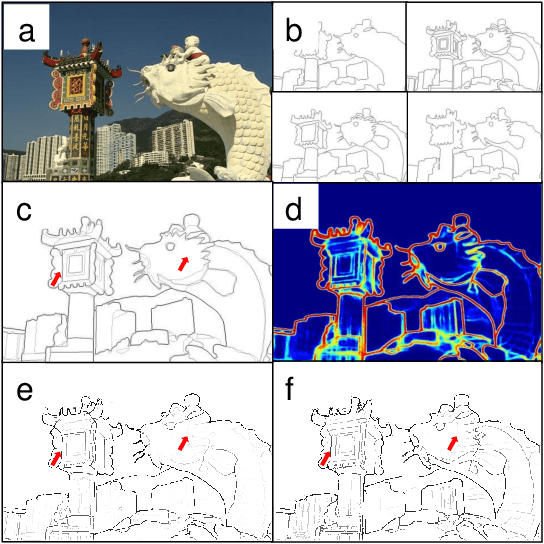

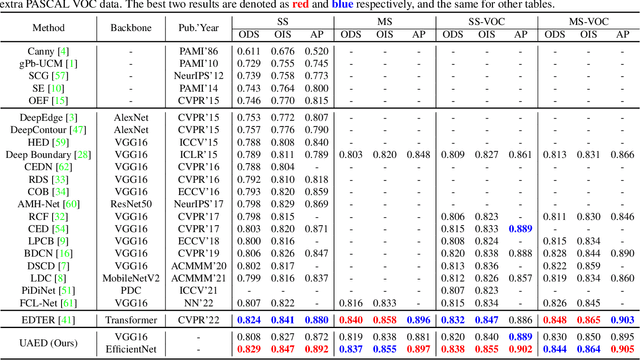

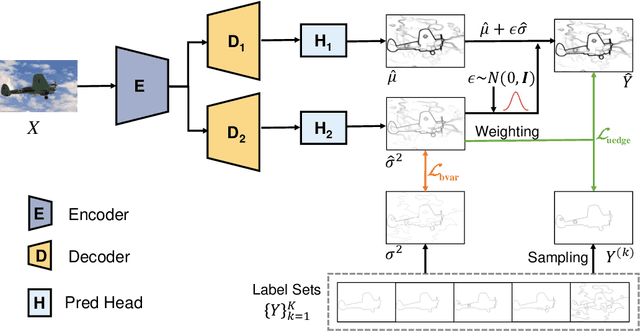

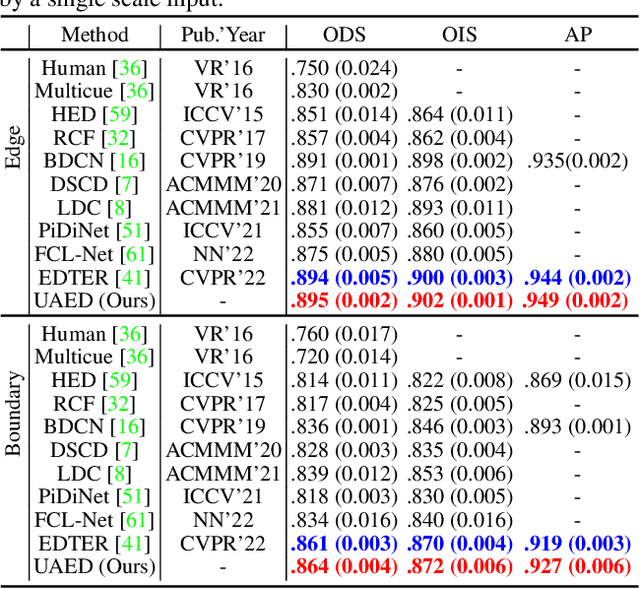

The Treasure Beneath Multiple Annotations: An Uncertainty-aware Edge Detector

Mar 21, 2023

Abstract: Deep learning-based edge detectors rely heavily on pixel-wise labels, which are often provided by multiple annotators. Existing methods fuse multiple annotations using a simple voting process, ignoring the inherent ambiguity of edges and the labeling bias of annotators. In this paper, we propose a novel uncertainty-aware edge detector (UAED), which employs uncertainty to investigate the subjectivity and ambiguity of diverse annotations. Specifically, we first convert the deterministic label space into a learnable Gaussian distribution, whose variance measures the degree of ambiguity among different annotations. We then regard the learned variance as the estimated uncertainty of the predicted edge maps; pixels with higher uncertainty are likely to be hard samples for edge detection. We therefore design an adaptive weighting loss to emphasize learning from pixels with high uncertainty, which helps the network gradually concentrate on the important pixels. UAED can be combined with various encoder-decoder backbones, and extensive experiments demonstrate that UAED consistently achieves superior performance across multiple edge detection benchmarks. The source code is available at https://github.com/ZhouCX117/UAED.
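To make the idea of emphasizing high-uncertainty pixels concrete, here is a minimal sketch of a binary cross-entropy in which each pixel's term is scaled up by its predicted variance. The weighting form `1 + alpha * variance`, the function name, and the `alpha` parameter are assumptions chosen for illustration; the abstract does not specify the actual UAED loss, so the linked repository remains the reference for that.

```python
import math


def uncertainty_weighted_bce(probs, labels, variances, alpha=1.0, eps=1e-7):
    """Mean binary cross-entropy with per-pixel weights 1 + alpha * variance.

    probs, labels, variances: flat lists of equal length. The weighting form is
    an illustrative assumption, not the loss used by UAED.
    """
    if not (len(probs) == len(labels) == len(variances)):
        raise ValueError("inputs must have the same length")
    if not probs:
        raise ValueError("inputs must not be empty")

    total = 0.0
    for p, y, var in zip(probs, labels, variances):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        bce = -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
        total += (1.0 + alpha * var) * bce  # uncertain pixels count for more
    return total / len(probs)
```

With all variances at zero this reduces to the ordinary mean cross-entropy, which makes the effect of the weighting easy to check in isolation.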

Uncertainty-Driven Action Quality Assessment

Jul 29, 2022

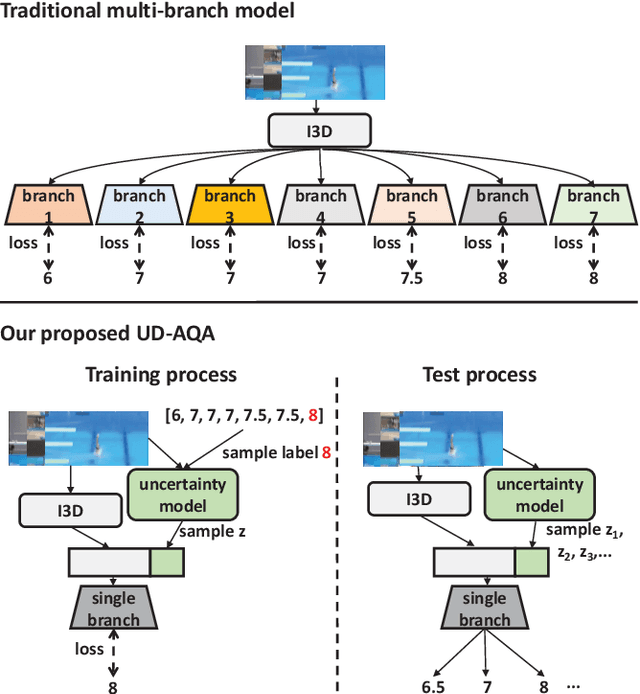

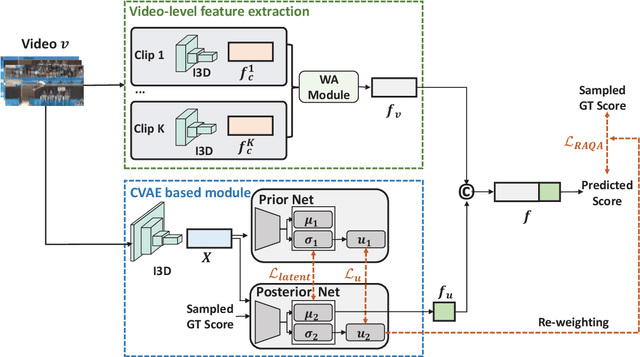

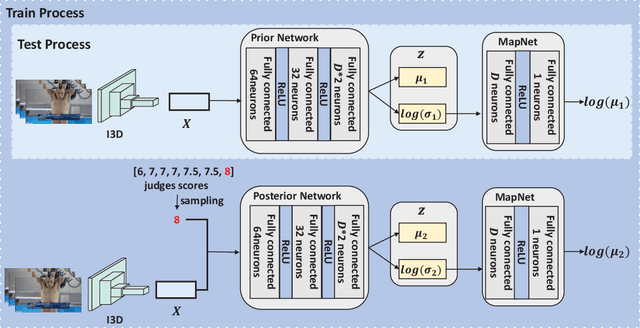

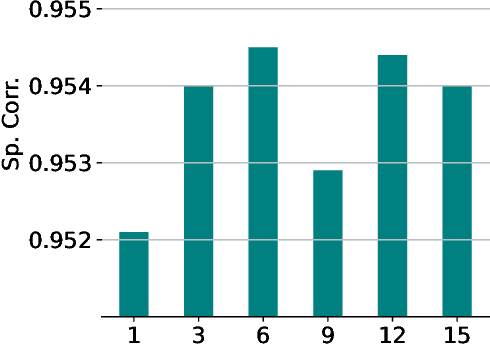

Abstract: Automatic action quality assessment (AQA) has attracted growing interest due to its wide range of applications. However, existing AQA methods usually employ multi-branch models to generate multiple scores, which is inflexible when dealing with a variable number of judges. In this paper, we propose a novel Uncertainty-Driven AQA (UD-AQA) model that generates multiple predictions using only a single branch. Specifically, we design a CVAE (Conditional Variational Auto-Encoder) based module to encode the uncertainty, so that multiple scores can be produced by sampling from the learned latent space multiple times. Moreover, we output an estimate of the uncertainty and use the predicted uncertainty to re-weight the AQA regression loss, which reduces the contribution of uncertain samples during training. We further design an uncertainty-guided training strategy that dynamically adjusts the learning order of the samples from low uncertainty to high uncertainty. The experiments show that our proposed method achieves new state-of-the-art results on the Olympic events MTL-AQA and surgical skill JIGSAWS datasets.
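The two uncertainty mechanisms described above can be sketched as follows. The first function uses a commonly used heteroscedastic regression loss, in which the squared error is divided by the predicted variance so that uncertain samples contribute less; the second orders samples from low to high predicted uncertainty. Both function names and the exact loss form are assumptions for illustration, since the abstract does not state the formulas used by UD-AQA.

```python
import math


def attenuated_regression_loss(preds, targets, log_vars):
    """Mean of 0.5 * exp(-s) * (y - mu)^2 + 0.5 * s, with s = log variance.

    A larger predicted variance shrinks the squared-error term, while the
    0.5 * s term keeps the model from inflating the variance everywhere.
    This common form is an assumption, not the loss stated in the abstract.
    """
    if not (len(preds) == len(targets) == len(log_vars)) or not preds:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for mu, y, s in zip(preds, targets, log_vars):
        total += 0.5 * math.exp(-s) * (y - mu) ** 2 + 0.5 * s
    return total / len(preds)


def order_by_uncertainty(sample_ids, log_vars):
    """Return sample ids sorted from lowest to highest predicted uncertainty."""
    ranked = sorted(zip(sample_ids, log_vars), key=lambda pair: pair[1])
    return [sample_id for sample_id, _ in ranked]
```

A curriculum of this kind would typically recompute the ordering as the uncertainty estimates change during training; how often to do so is a design choice the abstract leaves open.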
