Bryan Min

Structured Generation and Exploration of Design Space with Large Language Models for Human-AI Co-Creation

Oct 23, 2023

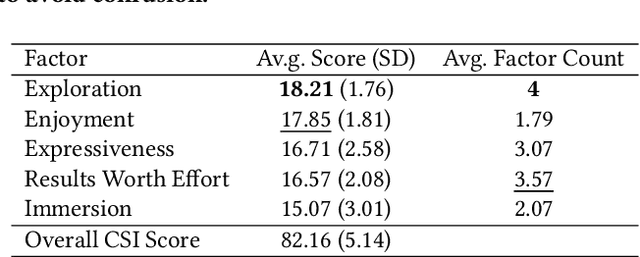

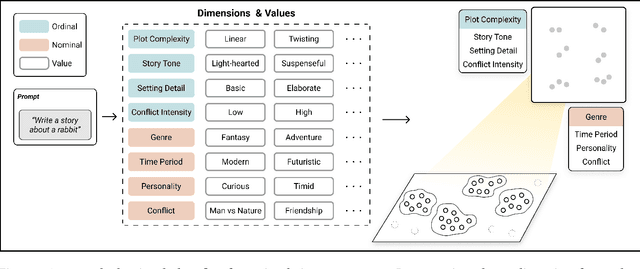

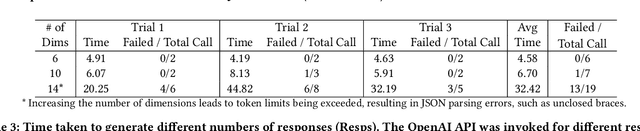

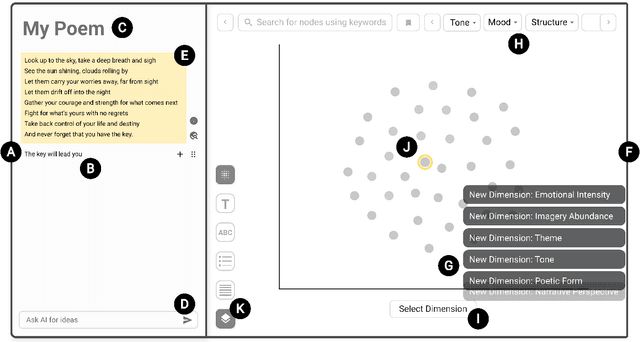

Abstract: Thanks to their generative capabilities, large language models (LLMs) have become an invaluable tool for creative processes. These models have the capacity to produce hundreds and thousands of visual and textual outputs, offering abundant inspiration for creative endeavors. But are we harnessing their full potential? We argue that current interaction paradigms fall short, guiding users towards rapid convergence on a limited set of ideas, rather than empowering them to explore the vast latent design space in generative models. To address this limitation, we propose a framework that facilitates the structured generation of design space in which users can seamlessly explore, evaluate, and synthesize a multitude of responses. We demonstrate the feasibility and usefulness of this framework through the design and development of an interactive system, Luminate, and a user study with 8 professional writers. Our work advances how we interact with LLMs for creative tasks, introducing a way to harness the creative potential of LLMs.

ChoiceMates: Supporting Unfamiliar Online Decision-Making with Multi-Agent Conversational Interactions

Oct 02, 2023

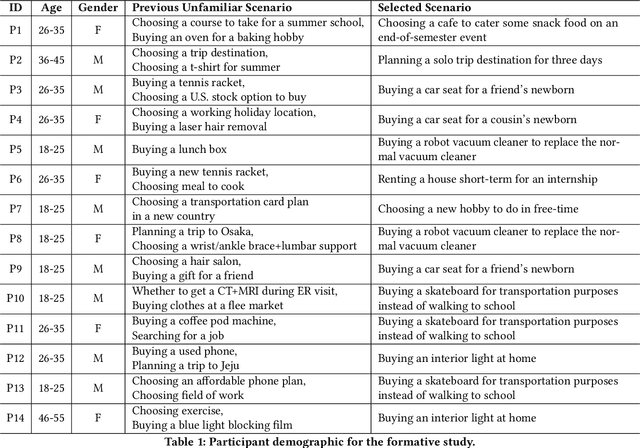

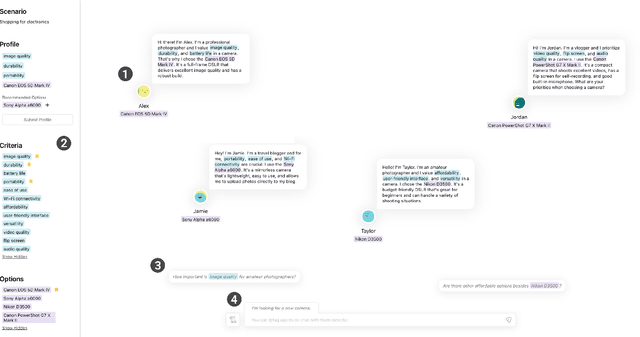

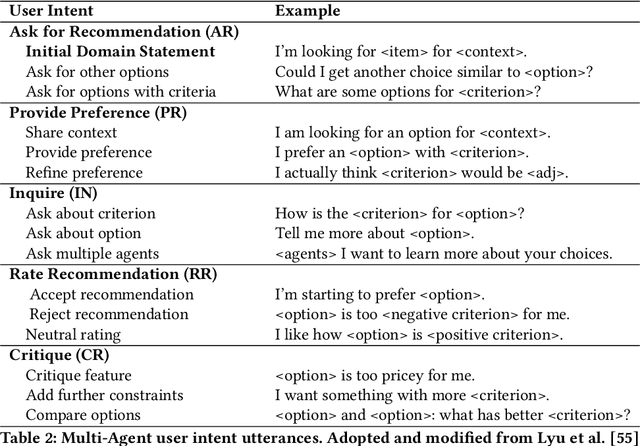

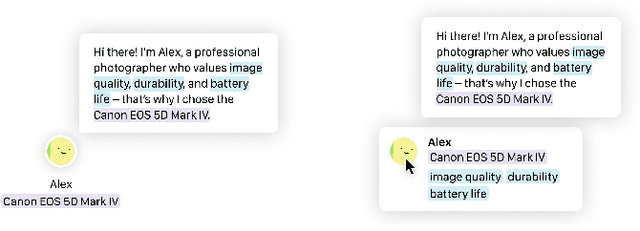

Abstract: Unfamiliar decisions (decisions where people lack adequate domain knowledge or expertise) increase the complexity and uncertainty of searching for, understanding, and making decisions with online information. Through our formative study (n=14), we observed users' challenges in accessing diverse perspectives, identifying relevant information, and deciding the right moment to make the final decision. We present ChoiceMates, a system that enables conversations with a dynamic set of LLM-powered agents for a holistic domain understanding and efficient discovery and management of information to make decisions. Agents, as opinionated personas, flexibly join the conversation, not only providing responses but also conversing among themselves to elicit each agent's preferences. Our between-subjects study (n=36) comparing ChoiceMates to conventional web search and a single-agent condition showed that, compared to web search, ChoiceMates was more helpful in discovering, diving deeper into, and managing information, and that participants reported higher confidence. We also describe how participants utilized multi-agent conversations in their decision-making process.

Sensecape: Enabling Multilevel Exploration and Sensemaking with Large Language Models

May 19, 2023

Abstract: People are increasingly turning to large language models (LLMs) for complex information tasks like academic research or planning a move to another city. However, while such tasks often require working in a nonlinear manner (e.g., arranging information spatially to organize and make sense of it), current interfaces for interacting with LLMs are generally linear, designed to support conversational interaction. To address this limitation and explore how we can support LLM-powered exploration and sensemaking, we developed Sensecape, an interactive system designed to support complex information tasks with an LLM by enabling users to (1) manage the complexity of information through multilevel abstraction and (2) seamlessly switch between foraging and sensemaking. Our within-subject user study reveals that Sensecape empowers users to explore more topics and structure their knowledge hierarchically. We contribute implications for LLM-based workflows and interfaces for information tasks.
