Bruno Gašperov

A New Angle: On Evolving Rotation Symmetric Boolean Functions

Nov 20, 2023

Abstract: Rotation symmetric Boolean functions represent an interesting class of Boolean functions as they are relatively rare compared to general Boolean functions. At the same time, the functions in this class can have excellent properties, making them interesting for various practical applications. The usage of metaheuristics to construct rotation symmetric Boolean functions is a direction that has been explored for almost twenty years. Despite that, there are very few results considering evolutionary computation methods. This paper uses several evolutionary algorithms to evolve rotation symmetric Boolean functions with different properties. Despite using generic metaheuristics, we obtain results that are competitive with prior work relying on customized heuristics. Surprisingly, we find that bitstring and floating point encodings work better than the tree encoding. Moreover, evolving highly nonlinear general Boolean functions is easier than rotation symmetric ones.
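To illustrate why rotation symmetric functions are rare, here is a minimal Python sketch (not taken from the paper). It assumes a function of `n` variables is stored as a truth table indexed by the integer encoding of its input. The helper names `rotate_left`, `orbits`, `is_rotation_symmetric`, and `from_orbit_bits` are hypothetical. A function is rotation symmetric when its output is unchanged by any cyclic rotation of the input bits, so it is fully determined by one output bit per rotation orbit.

```python
def rotate_left(x, n):
    """Cyclically rotate the n-bit integer x left by one position."""
    return ((x << 1) | (x >> (n - 1))) & ((1 << n) - 1)


def orbits(n):
    """Partition the inputs {0, ..., 2^n - 1} into orbits under cyclic rotation."""
    seen = set()
    result = []
    for x in range(1 << n):
        if x in seen:
            continue
        orbit = []
        y = x
        while y not in seen:
            seen.add(y)
            orbit.append(y)
            y = rotate_left(y, n)
        result.append(orbit)
    return result


def is_rotation_symmetric(truth_table, n):
    """Check f(x) == f(rot(x)) for every input; one rotation step suffices."""
    return all(
        truth_table[x] == truth_table[rotate_left(x, n)]
        for x in range(1 << n)
    )


def from_orbit_bits(orbit_bits, n):
    """Build a rotation symmetric truth table from one output bit per orbit."""
    table = [0] * (1 << n)
    for bit, orbit in zip(orbit_bits, orbits(n)):
        for x in orbit:
            table[x] = bit
    return table


# For n = 3 there are 4 orbits, hence 2^4 = 16 rotation symmetric functions
# out of 2^8 = 256 Boolean functions of three variables.
majority3 = [1 if bin(x).count("1") >= 2 else 0 for x in range(8)]
print(len(orbits(3)), is_rotation_symmetric(majority3, 3))
```

Encoding a candidate as one bit per orbit, as `from_orbit_bits` does, is one simple way to keep a search inside the rotation symmetric class. The encodings used in the paper may differ.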

Deep Reinforcement Learning for Robust Goal-Based Wealth Management

Jul 25, 2023

Abstract: Goal-based investing is an approach to wealth management that prioritizes achieving specific financial goals. It is naturally formulated as a sequential decision-making problem as it requires choosing the appropriate investment until a goal is achieved. Consequently, reinforcement learning, a machine learning technique appropriate for sequential decision-making, offers a promising path for optimizing these investment strategies. In this paper, a novel approach for robust goal-based wealth management based on deep reinforcement learning is proposed. The experimental results indicate its superiority over several goal-based wealth management benchmarks on both simulated and historical market data.

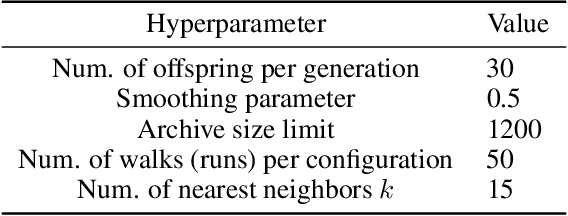

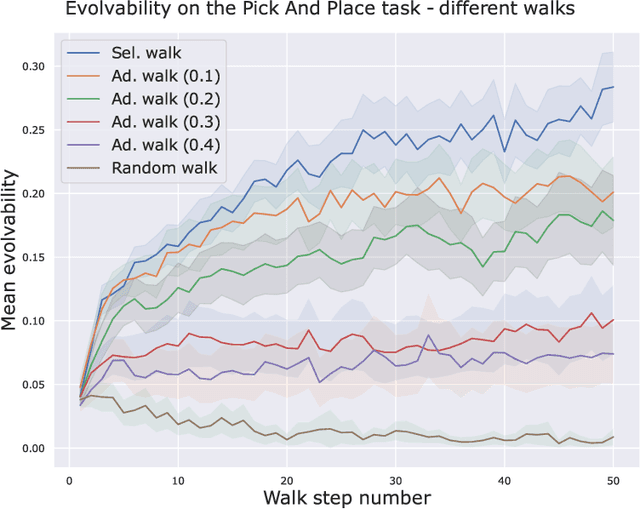

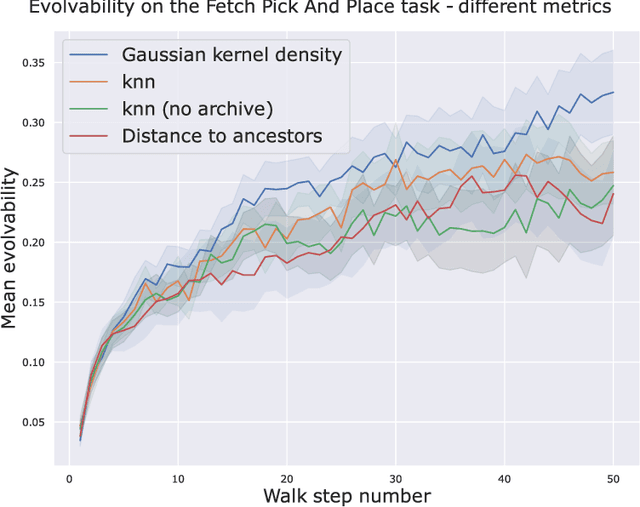

On Evolvability and Behavior Landscapes in Neuroevolutionary Divergent Search

Jun 16, 2023

Abstract: Evolvability refers to the ability of an individual genotype (solution) to produce offspring with mutually diverse phenotypes. Recent research has demonstrated that divergent search methods, particularly novelty search, promote evolvability by implicitly creating selective pressure for it. The main objective of this paper is to provide a novel perspective on the relationship between neuroevolutionary divergent search and evolvability. In order to achieve this, several types of walks from the literature on fitness landscape analysis are first adapted to this context. Subsequently, the interplay between neuroevolutionary divergent search and evolvability under varying amounts of evolutionary pressure and under different diversity metrics is investigated. To this end, experiments are performed on Fetch Pick and Place, a robotic arm task. Moreover, the performed study in particular sheds light on the structure of the genotype-phenotype mapping (the behavior landscape). Finally, a novel definition of evolvability that takes into account the evolvability of offspring and is appropriate for use with discretized behavior spaces is proposed, together with a Markov-chain-based estimation method for it.

A Search for Nonlinear Balanced Boolean Functions by Leveraging Phenotypic Properties

Jun 15, 2023

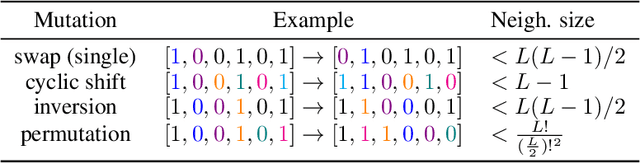

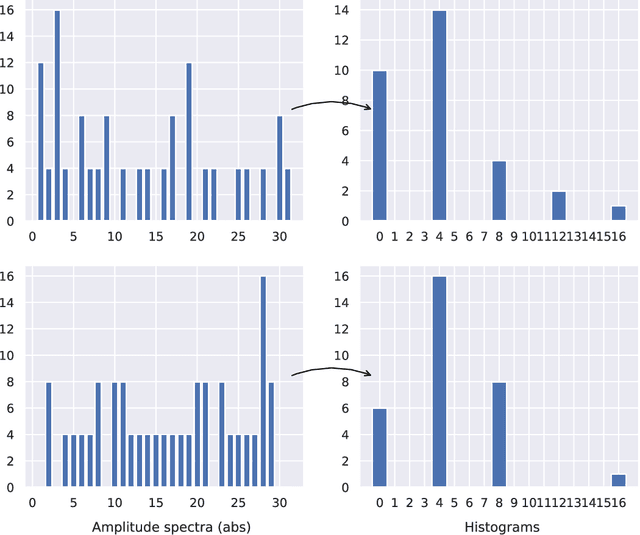

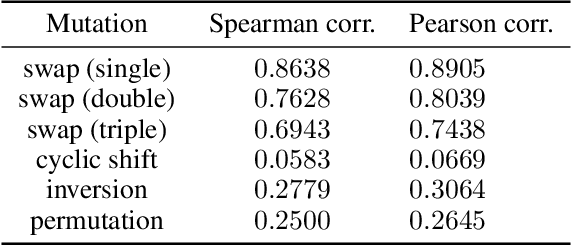

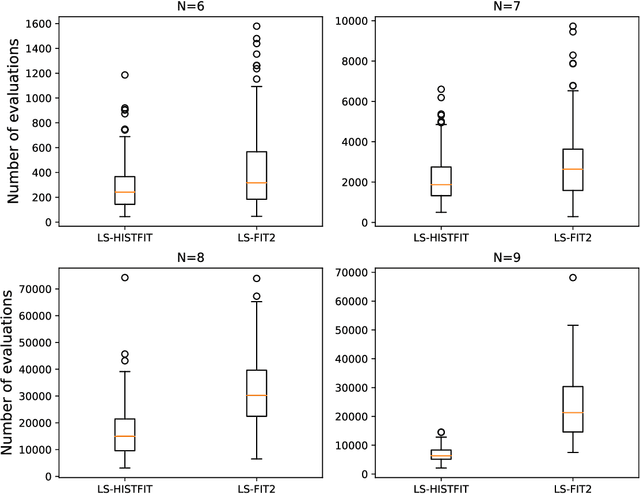

Abstract: In this paper, we consider the problem of finding perfectly balanced Boolean functions with high non-linearity values. Such functions have extensive applications in domains such as cryptography and error-correcting coding theory. We provide an approach for finding such functions by a local search method that exploits the structure of the underlying problem. Previous attempts in this vein typically focused on using the properties of the fitness landscape to guide the search. We opt for a different path in which we leverage the phenotype landscape (the mapping from genotypes to phenotypes) instead. In the context of the underlying problem, the phenotypes are represented by Walsh-Hadamard spectra of the candidate solutions (Boolean functions). We propose a novel selection criterion, under which the phenotypes are compared directly, and test whether its use increases the convergence speed (measured by the number of required spectra calculations) when compared to a competitive fitness function used in the literature. The results reveal promising convergence speed improvements for Boolean functions of sizes $N=6$ to $N=9$.
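For readers unfamiliar with the Walsh-Hadamard spectrum mentioned above, the following Python sketch shows how balancedness and nonlinearity can be read off it. It uses the standard textbook definitions and is not the paper's selection criterion or fitness function. The function names are hypothetical, and the truth table is assumed to be a list of 0/1 values of length $2^N$.

```python
def walsh_hadamard(truth_table):
    """Return the Walsh-Hadamard spectrum of a Boolean function.

    The truth table is mapped to signs (-1)^f(x), and the fast
    in-place butterfly transform is applied.
    """
    w = [1 - 2 * bit for bit in truth_table]
    h = 1
    while h < len(w):
        for i in range(0, len(w), 2 * h):
            for j in range(i, i + h):
                a, b = w[j], w[j + h]
                w[j], w[j + h] = a + b, a - b
        h *= 2
    return w


def is_balanced(truth_table):
    """A function is balanced exactly when the spectrum is zero at index 0."""
    return walsh_hadamard(truth_table)[0] == 0


def nonlinearity(truth_table):
    """Nonlinearity = 2^(N-1) - max|W| / 2, the distance to the nearest affine function."""
    spectrum = walsh_hadamard(truth_table)
    return len(truth_table) // 2 - max(abs(v) for v in spectrum) // 2


# Two-variable examples: XOR is balanced but linear; AND is nonlinear but unbalanced.
xor2 = [0, 1, 1, 0]
and2 = [0, 0, 0, 1]
print(walsh_hadamard(and2), nonlinearity(xor2), nonlinearity(and2))
```

Each call to `walsh_hadamard` corresponds to one spectrum calculation, the unit in which the abstract measures convergence speed.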

Deep Reinforcement Learning for Market Making Under a Hawkes Process-Based Limit Order Book Model

Jul 20, 2022

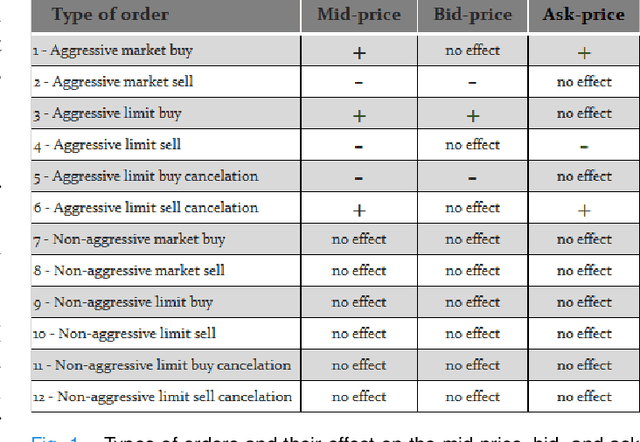

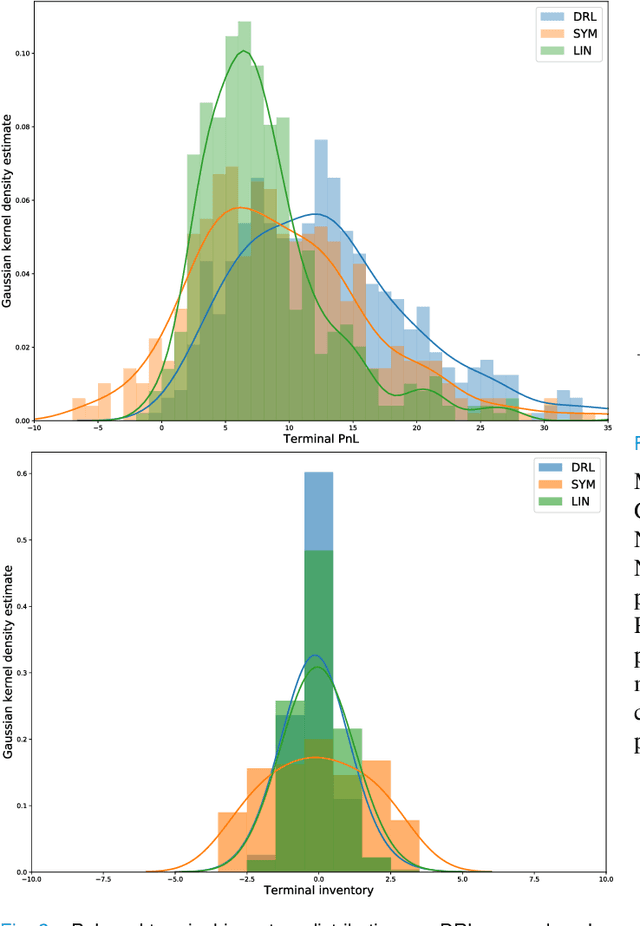

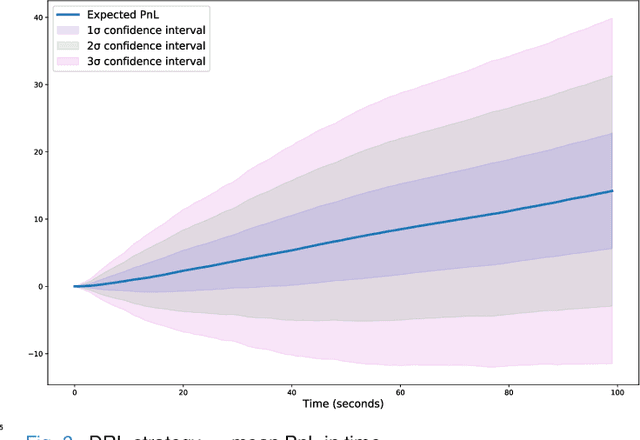

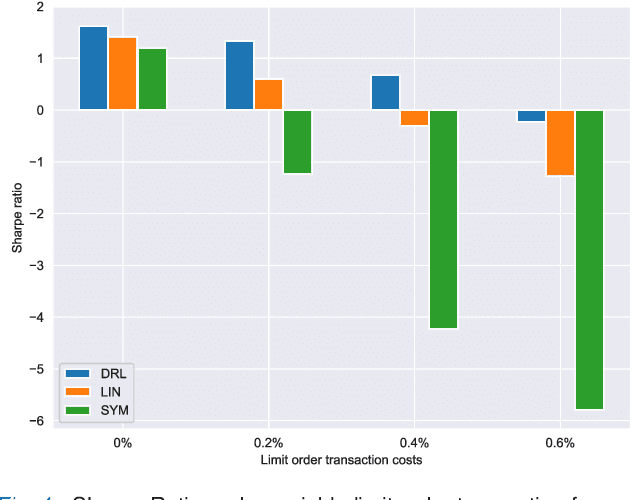

Abstract: The stochastic control problem of optimal market making is among the central problems in quantitative finance. In this paper, a deep reinforcement learning-based controller is trained on a weakly consistent, multivariate Hawkes process-based limit order book simulator to obtain market making controls. The proposed approach leverages the advantages of Monte Carlo backtesting and contributes to the line of research on market making under weakly consistent limit order book models. The ensuing deep reinforcement learning controller is compared to multiple market making benchmarks, with the results indicating its superior performance with respect to various risk-reward metrics, even under significant transaction costs.

* 6 pages, 4 figures
