Bruno De Man

Photon-counting CT using a Conditional Diffusion Model for Super-resolution and Texture-preservation

Feb 25, 2024

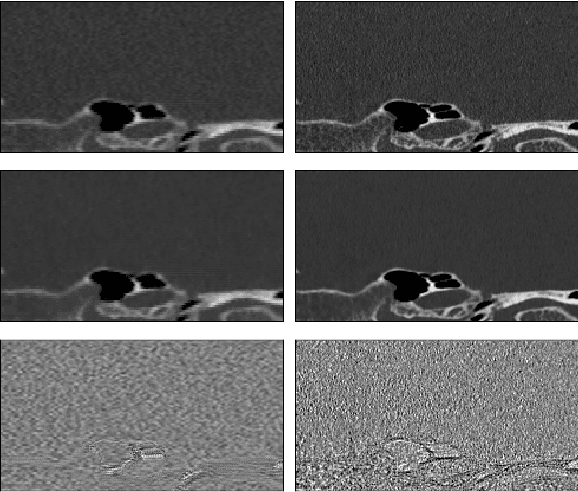

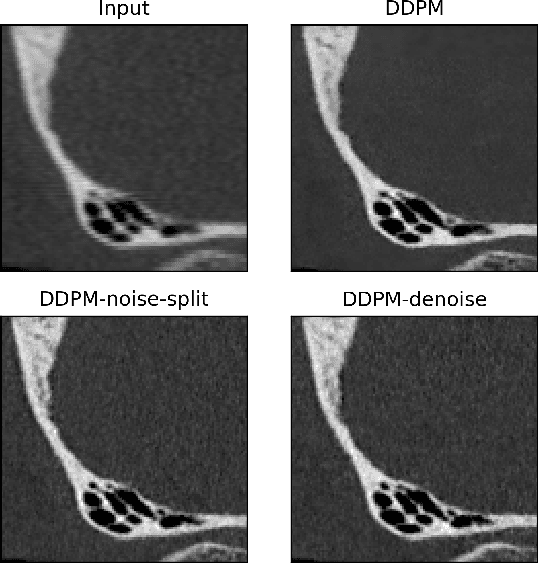

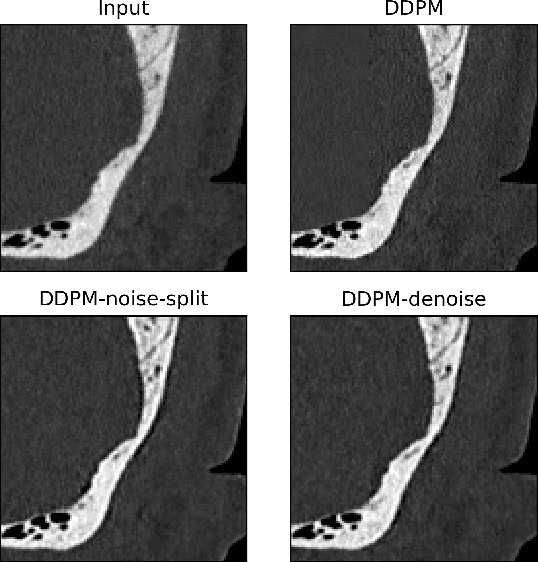

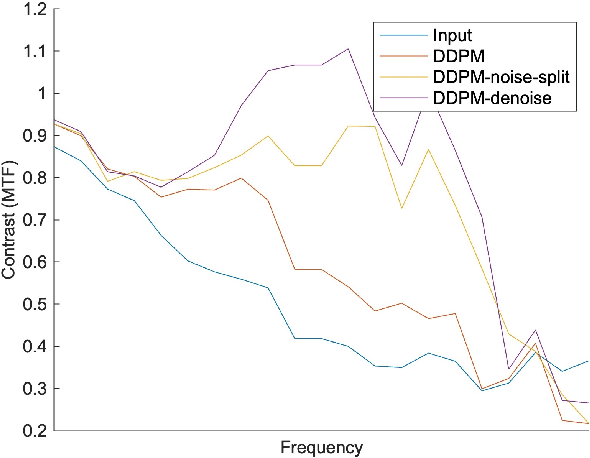

Abstract: Ultra-high-resolution images are desirable in photon-counting CT (PCCT), but resolution is physically limited by interactions such as charge sharing. Deep learning is a possible approach to super-resolution (SR), but sourcing paired training data that adequately models the target task is difficult. Additionally, SR algorithms can distort noise texture, which is important in many clinical diagnostic scenarios. Here, we train conditional denoising diffusion probabilistic models (DDPMs) for PCCT super-resolution, with the objective of retaining the textural characteristics of local noise. PCCT simulation methods are used to synthesize realistic resolution degradation. To preserve noise texture, we explore decoupling the noise and signal image inputs and outputs via deep denoisers, explicitly mapping to each during the SR process. Our experimental results indicate that our DDPM trained on simulated data can improve sharpness in real PCCT images. Additionally, disentangling noise from the original image allows our model to preserve noise texture more faithfully.
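The training setup described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' code: a separable Gaussian blur stands in for the PCCT resolution-degradation simulation, a linear beta schedule (the common DDPM default) defines the forward noising, conditioning is done by channel concatenation, and `split_signal_noise` is a hypothetical helper in which any denoiser callable splits an image into a signal estimate and a noise residual. All function names are illustrative.

```python
import numpy as np

def gaussian_kernel_1d(sigma: float, radius: int) -> np.ndarray:
    """Normalized 1-D Gaussian kernel."""
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur. ASSUMPTION: a crude stand-in for PCCT
    resolution loss (e.g. charge sharing), not a physical simulation."""
    radius = max(1, int(3 * sigma))
    k = gaussian_kernel_1d(sigma, radius)
    padded = np.pad(img, radius, mode="reflect")
    # Convolve along rows, then columns; "valid" mode restores the original shape.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def linear_alpha_bar(T: int = 1000, beta_start: float = 1e-4,
                     beta_end: float = 0.02) -> np.ndarray:
    """Cumulative products alpha_bar_t of a linear DDPM beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def split_signal_noise(img: np.ndarray, denoiser):
    """HYPOTHETICAL decoupling: signal = denoiser(img), noise = residual."""
    signal = denoiser(img)
    return signal, img - signal

def make_training_example(hr, alpha_bar, rng, sigma=1.5, denoiser=None):
    """Build one (network input, timestep, target noise) triple.

    The network would see the noised target x_t stacked with the degraded
    conditioning image (or its signal/noise parts) and learn to predict eps.
    """
    lr = blur(hr, sigma)                             # simulated degraded input
    cond = [lr] if denoiser is None else list(split_signal_noise(lr, denoiser))
    t = int(rng.integers(0, len(alpha_bar)))         # random diffusion step
    eps = rng.standard_normal(hr.shape)
    a = alpha_bar[t]
    x_t = np.sqrt(a) * hr + np.sqrt(1.0 - a) * eps   # sample from q(x_t | x_0)
    net_input = np.stack([x_t, *cond], axis=0)       # channel concatenation
    return net_input, t, eps

rng = np.random.default_rng(0)
hr = rng.standard_normal((32, 32))                   # placeholder target image
x, t, eps = make_training_example(hr, linear_alpha_bar(), rng,
                                  denoiser=lambda im: blur(im, 1.0))  # toy denoiser
print(x.shape)  # (3, 32, 32): x_t, signal, noise
```

Feeding signal and noise as separate channels is one plausible way to make the model "map to each" explicitly; a real pipeline would replace the toy blur denoiser with a trained deep denoiser and the Gaussian PSF with a proper PCCT simulation.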

Coronary Atherosclerotic Plaque Characterization with Photon-counting CT: a Simulation-based Feasibility Study

Dec 04, 2023

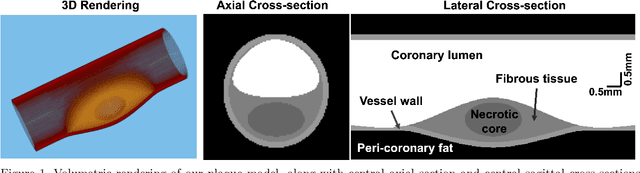

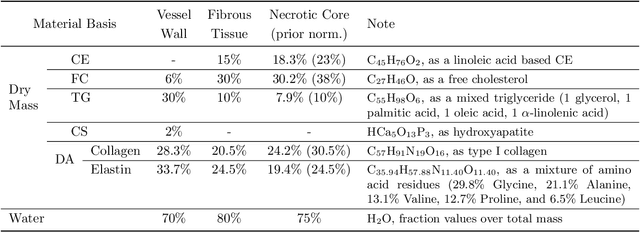

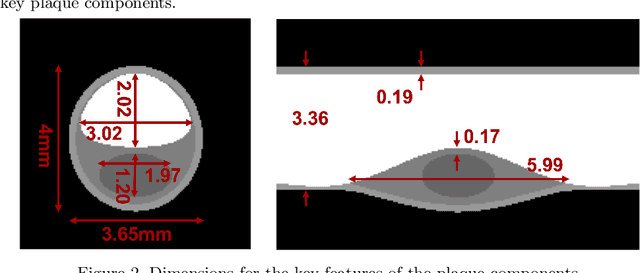

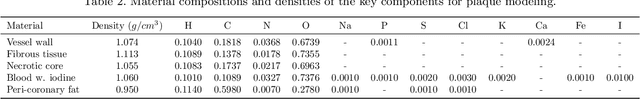

Abstract: The recent development of photon-counting CT (PCCT) brings great opportunities for plaque characterization, with much-improved spatial resolution and spectral imaging capability. While existing coronary plaque PCCT imaging results are based on detectors made of CZT or CdTe, deep-silicon photon-counting detectors have unique performance characteristics and promise distinct imaging capabilities. In this work, we report a systematic simulation study of a deep-silicon PCCT scanner using a new clinically relevant digital plaque phantom with realistic geometrical parameters and chemical compositions. This work investigates the effects of spatial resolution, noise, motion artifacts, radiation dose, and spectral characterization. Our simulation results suggest that the deep-silicon PCCT design provides adequate spatial resolution for visualizing a necrotic core and quantifying key plaque features. Advanced denoising techniques and aggressive bowtie-filter designs can keep image noise at acceptable levels at this resolution while keeping radiation dose comparable to that of a conventional CT scan. The ultrahigh resolution of PCCT also means elevated sensitivity to motion artifacts. We find that the tolerable residual movement range is less than 0.4 mm, which requires accurate motion-correction methods for the best plaque imaging quality with PCCT.
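Why denoising matters at this resolution can be made concrete with a classical rule of thumb for quantum-noise-limited filtered-backprojection CT: noise variance scales as $\sigma^2 \propto 1/(D\,w^3 h)$, where $D$ is dose, $w$ the in-plane resolution element, and $h$ the slice thickness. This relation is not taken from the study above and ignores detector effects such as those of deep-silicon PCCT; the helper names are hypothetical.

```python
import math

def dose_factor(w_scale: float, h_scale: float, noise_scale: float = 1.0) -> float:
    """Dose multiplier D'/D when the in-plane resolution element w is scaled
    by w_scale, slice thickness h by h_scale, and the tolerated noise std by
    noise_scale. ASSUMES sigma^2 proportional to 1 / (D * w^3 * h)."""
    return 1.0 / (noise_scale ** 2 * w_scale ** 3 * h_scale)

def noise_factor_at_fixed_dose(w_scale: float, h_scale: float) -> float:
    """Ratio sigma'/sigma at unchanged dose, under the same assumption."""
    return 1.0 / math.sqrt(w_scale ** 3 * h_scale)

# Halve both the in-plane voxel size and the slice thickness.
print(dose_factor(0.5, 0.5))                   # 16.0
print(noise_factor_at_fixed_dose(0.5, 0.5))    # 4.0
print(dose_factor(0.5, 0.5, noise_scale=2.0))  # 4.0
```

Under this rule, halving both voxel dimensions at fixed dose quadruples the noise standard deviation, or equivalently needs about 16 times the dose to hold noise constant. That gap is what denoising and bowtie-filter design must help close if dose is to stay comparable to conventional CT.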

A hierarchical approach to deep learning and its application to tomographic reconstruction

Dec 16, 2019

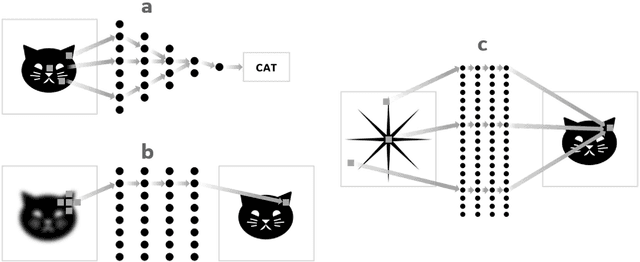

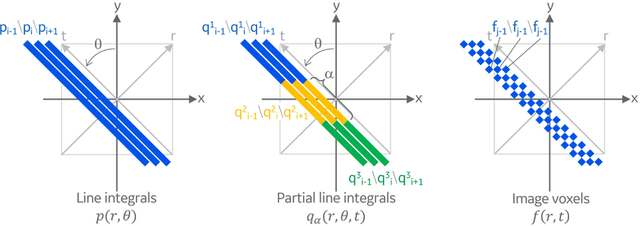

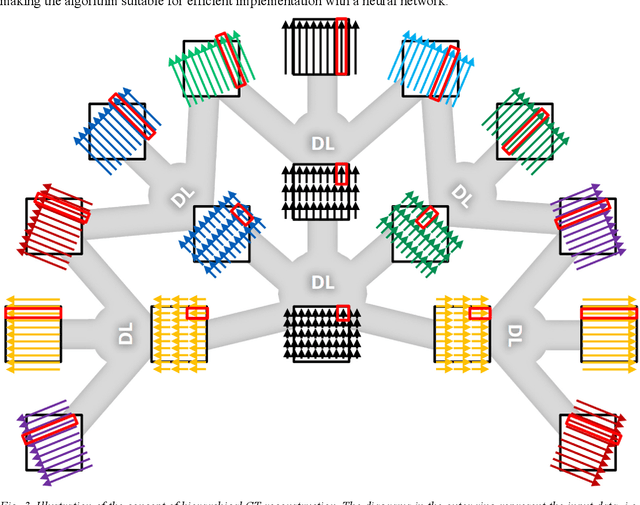

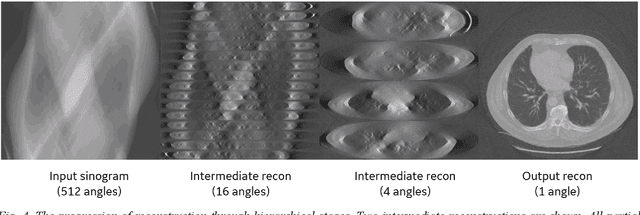

Abstract: Deep learning (DL) has shown unprecedented performance for many image analysis and image enhancement tasks. Yet, solving large-scale inverse problems like tomographic reconstruction remains challenging for DL. These problems involve non-local and space-variant integral transforms between the input and output domains, for which no efficient neural network models have been found. A prior attempt to solve such problems with supervised learning relied on a brute-force fully connected network and applied it to reconstruction for a $128^4$ system matrix size. This cannot practically scale to realistic data sizes such as $512^4$, or $512^6$ for three-dimensional data sets. Here we present a novel framework to solve such problems with deep learning by casting the original problem as a continuum of intermediate representations between the input and output data. The original problem is broken down into a sequence of simpler transformations that can be well mapped onto an efficient hierarchical network architecture, with exponentially fewer parameters than a generic network would need. We applied the approach to computed tomography (CT) image reconstruction for a $512^4$ system matrix size. To our knowledge, this enabled the first data-driven DL solver for full-size CT reconstruction without relying on the structure of direct (analytical) or iterative (numerical) inversion techniques. The proposed approach is applicable to other imaging problems such as emission and magnetic resonance reconstruction. More broadly, hierarchical DL opens the door to a new class of solvers for general inverse problems, which could potentially lead to improved signal-to-noise ratio, spatial resolution, and computational efficiency in various areas.
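The scaling problem with a brute-force fully connected network is easy to quantify. The sketch below counts the weights of a single dense layer mapping a sinogram to an image, assuming both have `n` samples along each of `dims` dimensions (so a 2-D 128 × 128 to 128 × 128 mapping has $128^4$ weights), and converts the count to float32 storage. The helper names are illustrative, and real sinogram dimensions generally differ from the image grid.

```python
def dense_weights(n: int, dims: int) -> int:
    """Weights of one fully connected layer from an n^dims input to an
    n^dims output (ASSUMES equal sinogram and image sizes per dimension)."""
    return n ** (2 * dims)

def fp32_gib(n_weights: int) -> float:
    """Storage for the weights alone in float32 (4 bytes each), in GiB."""
    return n_weights * 4 / 2 ** 30

for n, dims in [(128, 2), (512, 2), (512, 3)]:
    w = dense_weights(n, dims)
    print(f"{n}^{2 * dims}: {float(w):.3e} weights, {fp32_gib(w):,.0f} GiB")
```

This gives 1 GiB for $128^4$, 256 GiB for $512^4$, and about 64 PiB for $512^6$, for the weights alone and before activations, gradients, or optimizer state, which is why a factorized, hierarchical architecture is needed at realistic sizes.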
