Brian Caulfield

Machine Vision-Enabled Sports Performance Analysis

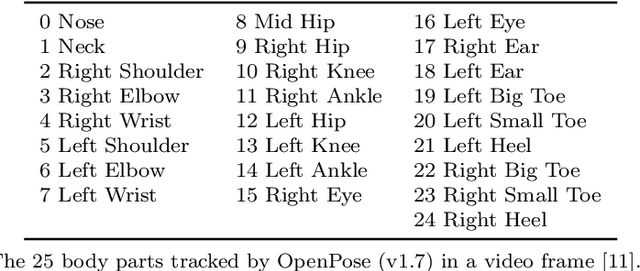

Dec 18, 2023

Abstract:Goal: This study investigates the feasibility of monocular 2D markerless motion capture (MMC) using a single smartphone to measure jump height, velocity, flight time, contact time, and range of motion (ROM) during motor tasks. Methods: Sixteen healthy adults performed three repetitions of selected tests while their body movements were recorded using force plates, optical motion capture (OMC), and a smartphone camera. MMC was then performed on the smartphone videos using OpenPose v1.7.0. Results: MMC demonstrated excellent agreement with ground truth for jump height and velocity measurements. However, MMC's performance varied from poor to moderate for flight time, contact time, ROM, and angular velocity measurements. Conclusions: These findings suggest that monocular 2D MMC may be a viable alternative to OMC or force plates for assessing sports performance during jumps and velocity-based tests. Additionally, MMC could provide valuable visual feedback for flight time, contact time, ROM, and angular velocity measurements.

An Examination of Wearable Sensors and Video Data Capture for Human Exercise Classification

Jul 10, 2023

Abstract:Wearable sensors such as Inertial Measurement Units (IMUs) are often used to assess the performance of human exercise. Common approaches use handcrafted features based on domain expertise or automatically extracted features using time series analysis. Multiple sensors are required to achieve high classification accuracy, which is not very practical. These sensors require calibration and synchronization and may lead to discomfort over longer time periods. Recent work utilizing computer vision techniques has shown similar performance using video, without the need for manual feature engineering, and avoiding some pitfalls such as sensor calibration and placement on the body. In this paper, we compare the performance of IMUs to a video-based approach for human exercise classification on two real-world datasets consisting of Military Press and Rowing exercises. We compare the performance using a single camera that captures video in the frontal view versus using 5 IMUs placed on different parts of the body. We observe that an approach based on a single camera can outperform a single IMU by 10 percentage points on average. Additionally, a minimum of 3 IMUs are required to outperform a single camera. We observe that working with the raw data using multivariate time series classifiers outperforms traditional approaches based on handcrafted or automatically extracted features. Finally, we show that an ensemble model combining the data from a single camera with a single IMU outperforms either data modality. Our work opens up new and more realistic avenues for this application, where a video captured using a readily available smartphone camera, combined with a single sensor, can be used for effective human exercise classification.

Quantifying Jump Height Using Markerless Motion Capture with a Single Smartphone

Feb 21, 2023

Abstract:Goal: The countermovement jump (CMJ) is commonly used to measure the explosive power of the lower body. This study evaluates how accurately markerless motion capture (MMC) with a single smartphone can measure bilateral and unilateral CMJ jump height. Methods: First, three repetitions each of bilateral and unilateral CMJ were performed by sixteen healthy adults (mean age: 30.87 $\pm$ 7.24 years; mean BMI: 23.14 $\pm$ 2.55 $kg/m^2$) on force plates and simultaneously captured using optical motion capture (OMC) and one smartphone camera. Next, MMC was performed on the smartphone videos using OpenPose. Then, we evaluated MMC in quantifying jump height using the force plate and OMC as ground truths. Results: MMC quantifies jump heights with MAE between 1.47 and 2.82 cm, and ICC between 0.84 and 0.99 without manual segmentation and camera calibration. Conclusions: Our results suggest that using a single smartphone for markerless motion capture is feasible. Index Terms - Countermovement jump, Markerless motion capture, Optical motion capture, Jump height. Impact Statement - Countermovement jump height can be accurately quantified using markerless motion capture with a single smartphone, with a simple setup that requires neither camera calibration nor manual segmentation.
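
The abstract above reports agreement as mean absolute error (MAE) between MMC estimates and a ground truth. As a rough illustration of that metric only, the following minimal sketch computes MAE for paired jump heights. The function name and all numeric values are hypothetical and are not data from the study.

```python
def mean_absolute_error(estimates, references):
    """Average absolute difference between paired measurements."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(e - r) for e, r in zip(estimates, references))
    return total / len(estimates)


# Hypothetical jump heights in cm (illustrative values, not study data).
mmc_heights_cm = [25.0, 31.5, 28.0, 22.5]
force_plate_heights_cm = [24.0, 30.0, 30.0, 23.0]

mae_cm = mean_absolute_error(mmc_heights_cm, force_plate_heights_cm)
print(f"MAE: {mae_cm:.2f} cm")
```

The intraclass correlation coefficient (ICC) also reported in the abstract has several variants and is not sketched here.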

Fast and Robust Video-Based Exercise Classification via Body Pose Tracking and Scalable Multivariate Time Series Classifiers

Oct 02, 2022

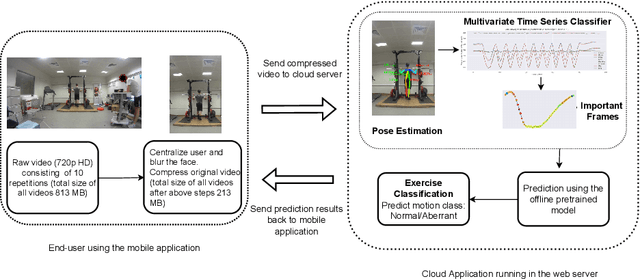

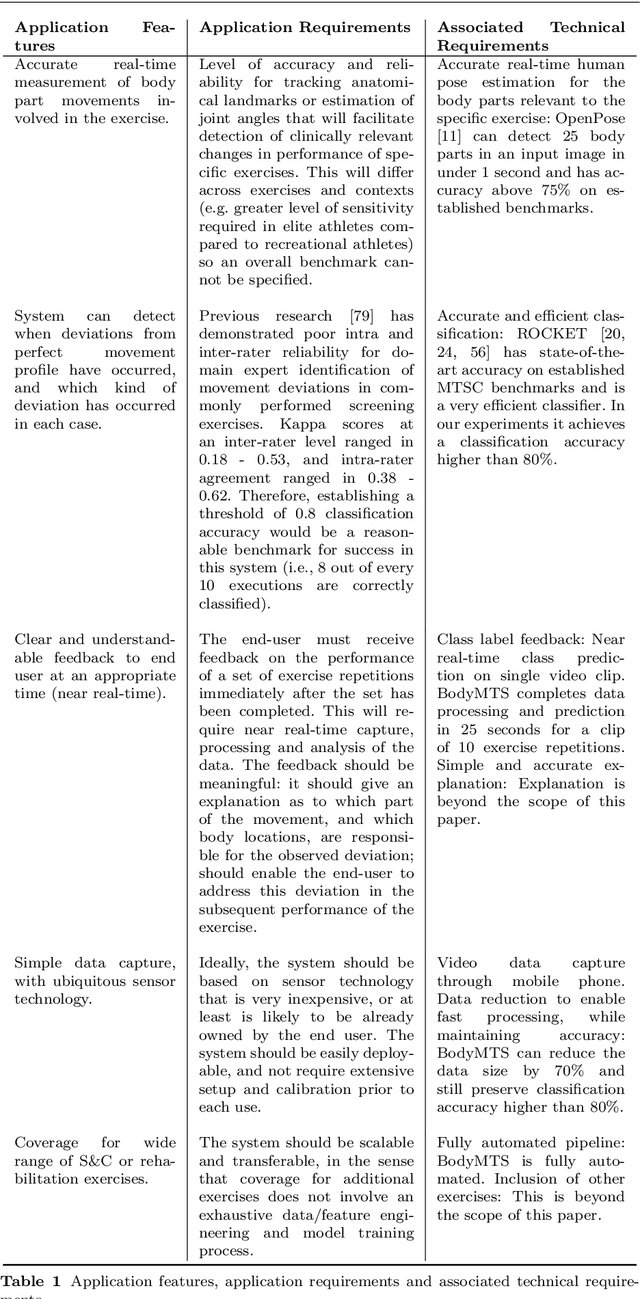

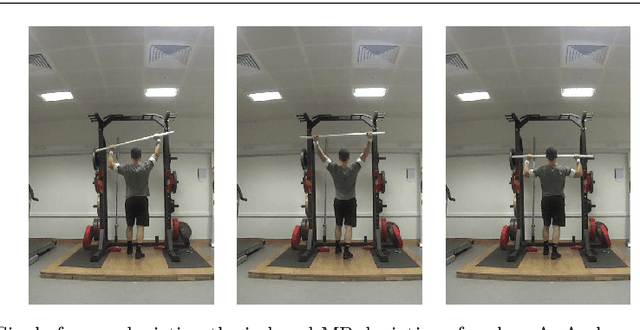

Abstract:Technological advancements have spurred the usage of machine learning based applications in sports science. Physiotherapists, sports coaches and athletes actively look to incorporate the latest technologies in order to further improve performance and avoid injuries. While wearable sensors are very popular, their use is hindered by constraints on battery power and sensor calibration, especially for use cases which require multiple sensors to be placed on the body. Hence, there is renewed interest in video-based data capture and analysis for sports science. In this paper, we present the application of classifying S&C exercises using video. We focus on the popular Military Press exercise, where the execution is captured with a video-camera using a mobile device, such as a mobile phone, and the goal is to classify the execution into different types. Since video recordings need a lot of storage and computation, this use case requires data reduction, while preserving the classification accuracy and enabling fast prediction. To this end, we propose an approach named BodyMTS to turn video into time series by employing body pose tracking, followed by training and prediction using multivariate time series classifiers. We analyze the accuracy and robustness of BodyMTS and show that it is robust to different types of noise caused by either video quality or pose estimation factors. We compare BodyMTS to state-of-the-art deep learning methods which classify human activity directly from videos and show that BodyMTS achieves similar accuracy, but with reduced running time and model engineering effort. Finally, we discuss some of the practical aspects of employing BodyMTS in this application in terms of accuracy and robustness under reduced data quality and size. We show that BodyMTS achieves an average accuracy of 87%, which is significantly higher than the accuracy of human domain experts.
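
The idea of turning video into a multivariate time series can be sketched as follows. This is a minimal illustration under stated assumptions, not the BodyMTS implementation: the per-frame keypoint dictionaries stand in for pose-tracking output, the keypoint names and class labels are hypothetical, and a 1-nearest-neighbour rule with Euclidean distance is swapped in for the multivariate time series classifiers the abstract refers to.

```python
import math


def frames_to_series(frames, keypoints):
    """Convert per-frame keypoints into one channel per coordinate.

    frames: list of dicts mapping keypoint name -> (x, y).
    Returns a dict mapping channel name -> list of values over time.
    """
    series = {}
    for name in keypoints:
        series[name + "_x"] = [frame[name][0] for frame in frames]
        series[name + "_y"] = [frame[name][1] for frame in frames]
    return series


def distance(a, b):
    """Euclidean distance over all channels; assumes equal-length series."""
    return math.sqrt(
        sum((x - y) ** 2 for ch in a for x, y in zip(a[ch], b[ch]))
    )


def predict_1nn(train, query):
    """Return the label of the training series closest to the query."""
    return min(train, key=lambda item: distance(item[0], query))[1]


# Hypothetical keypoints and labels, two frames per clip for brevity.
KEYPOINTS = ["l_wrist", "r_wrist"]
clip_a = [{"l_wrist": (0.4, 0.6), "r_wrist": (0.6, 0.6)},
          {"l_wrist": (0.4, 0.3), "r_wrist": (0.6, 0.3)}]
clip_b = [{"l_wrist": (0.4, 0.6), "r_wrist": (0.6, 0.6)},
          {"l_wrist": (0.4, 0.3), "r_wrist": (0.6, 0.5)}]
query_clip = [{"l_wrist": (0.41, 0.6), "r_wrist": (0.6, 0.61)},
              {"l_wrist": (0.4, 0.31), "r_wrist": (0.6, 0.48)}]

train = [(frames_to_series(clip_a, KEYPOINTS), "normal"),
         (frames_to_series(clip_b, KEYPOINTS), "asymmetric")]
predicted = predict_1nn(train, frames_to_series(query_clip, KEYPOINTS))
print(predicted)
```

The data reduction comes from the first step: each frame is reduced to a handful of coordinates, so the classifier works on short numeric series rather than on pixels.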

Automated Mobility Context Detection with Inertial Signals

May 16, 2022

Abstract:Remote monitoring of motor functions is a powerful approach for health assessment, especially among the elderly population or among subjects affected by pathologies that negatively impact their walking capabilities. This is further supported by the continuous development of wearable sensor devices, which are getting progressively smaller, cheaper, and more energy efficient. The external environment and mobility context have an impact on walking performance, hence one of the biggest challenges when remotely analysing gait episodes is the ability to detect the context within which those episodes occurred. The primary goal of this paper is the investigation of context detection for remote monitoring of daily motor functions. We aim to understand whether inertial signals sampled with wearable accelerometers provide reliable information to classify gait-related activities as either indoor or outdoor. We explore two different approaches to this task: (1) using gait descriptors and features extracted from the input inertial signals sampled during walking episodes, together with classic machine learning algorithms, and (2) treating the input inertial signals as time series data and leveraging end-to-end state-of-the-art time series classifiers. We directly compare the two approaches through a set of experiments based on data collected from 9 healthy individuals. Our results indicate that the indoor/outdoor context can be successfully derived from inertial data streams. We also observe that time series classification models achieve better accuracy than any of the feature-based models, while preserving efficiency and ease of use.

Human Activity Recognition with Convolutional Neural Networks

Jun 05, 2019

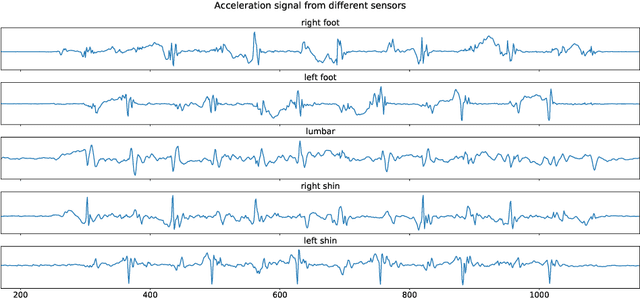

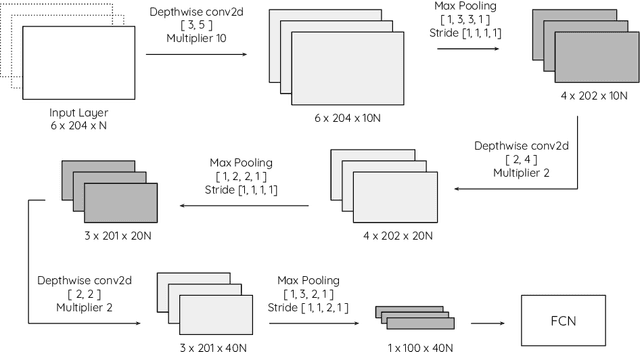

Abstract:The problem of automatic identification of physical activities performed by human subjects is referred to as Human Activity Recognition (HAR). There exist several techniques to measure motion characteristics during these physical activities, such as Inertial Measurement Units (IMUs). IMUs have a cornerstone position in this context, and are characterized by usage flexibility, low cost, and reduced privacy impact. With the use of inertial sensors, it is possible to sample some measures such as acceleration and angular velocity of a body, and use them to learn models that are capable of correctly classifying activities to their corresponding classes. In this paper, we propose to use Convolutional Neural Networks (CNNs) to classify human activities. Our models use raw data obtained from a set of inertial sensors. We explore several combinations of activities and sensors, showing how motion signals can be adapted to be fed into CNNs by using different network architectures. We also compare the performance of different groups of sensors, investigating the classification potential of single, double and triple sensor systems. The experimental results obtained on a dataset of 16 lower-limb activities, collected from a group of participants with the use of five different sensors, are very promising.

Automatic Classification of Knee Rehabilitation Exercises Using a Single Inertial Sensor: a Case Study

Dec 10, 2018

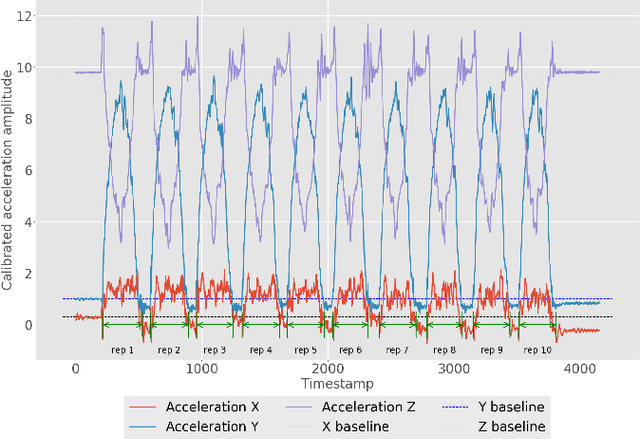

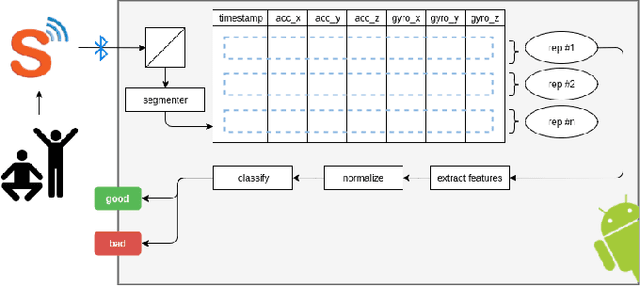

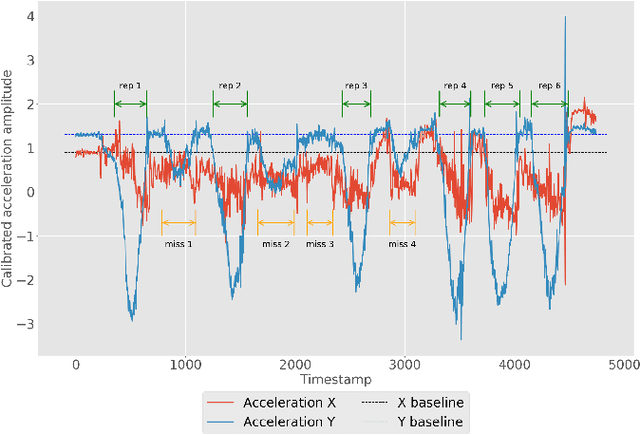

Abstract:Inertial measurement units have the ability to accurately record the acceleration and angular velocity of human limb segments during discrete joint movements. These movements are commonly used in exercise rehabilitation programmes following orthopaedic surgery such as total knee replacement. This provides the potential for a biofeedback system with data mining techniques for patients undertaking exercises at home without physician supervision. We propose to use machine learning techniques to automatically analyse inertial measurement unit data collected during these exercises, and then assess whether each repetition of the exercise was executed correctly or not. Our approach consists of two main phases: signal segmentation, and segment classification. Accurate pre-processing and feature extraction are essential for the technique to work. In this paper, we present a classification method for unsupervised rehabilitation exercises, based on a segmentation process that extracts repetitions from a longer signal activity. The results obtained from experimental datasets of both clinical and healthy subjects, for a set of 4 knee exercises commonly used in rehabilitation, are very promising.
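
The first of the two phases named above, extracting repetitions from a longer signal, can be illustrated with a simple threshold rule. This is a sketch under stated assumptions rather than the paper's segmentation method: the function name, the threshold, the minimum length, and the signal values are all hypothetical.

```python
def segment_repetitions(signal, threshold, min_length=3):
    """Return (start, end) index pairs of runs above the threshold.

    end is exclusive. Runs shorter than min_length samples are
    discarded as likely noise spikes.
    """
    segments = []
    start = None
    for i, value in enumerate(signal):
        if value > threshold and start is None:
            start = i  # a candidate repetition begins
        elif value <= threshold and start is not None:
            if i - start >= min_length:
                segments.append((start, i))
            start = None
    # Close a run that is still open at the end of the signal.
    if start is not None and len(signal) - start >= min_length:
        segments.append((start, len(signal)))
    return segments


# Hypothetical 1-D signal: two repetitions and one short spike.
signal = [0, 0, 1, 2, 3, 2, 1, 0, 0, 5, 0, 0, 1, 3, 3, 1, 0]
segments = segment_repetitions(signal, threshold=0.5)
print(segments)
```

Each extracted segment would then be passed to the second phase, a classifier that judges whether the repetition was executed correctly.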
