Brendan Whelan

Image Reconstruction with B0 Inhomogeneity using an Interpretable Deep Unrolled Network on an Open-bore MRI-Linac

Apr 15, 2024

Abstract: MRI-Linac systems require fast image reconstruction with high geometric fidelity to localize and track tumours for radiotherapy treatments. However, B0 field inhomogeneity distortions and slow MR acquisition potentially limit the quality of the image guidance and tumour treatments. In this study, we develop an interpretable unrolled network, referred to as RebinNet, to reconstruct distortion-free images from B0 inhomogeneity-corrupted k-space for fast MRI-guided radiotherapy applications. RebinNet includes convolutional neural network (CNN) blocks to perform image regularization and nonuniform fast Fourier transform (NUFFT) modules to incorporate B0 inhomogeneity information. RebinNet was trained on a publicly available MR dataset from eleven healthy volunteers for both fully sampled and subsampled acquisitions. Grid phantom and human brain images acquired on an open-bore 1T MRI-Linac scanner were used to evaluate the performance of the proposed network. RebinNet was compared with a conventional regularization algorithm and our recently developed UnUNet method in terms of root mean squared error (RMSE), structural similarity (SSIM), residual distortions, and computation time. Imaging results demonstrated that RebinNet reconstructed images with the lowest RMSE (<0.05) and highest SSIM (>0.92) at four-fold acceleration for simulated brain images. RebinNet better preserved structural details and was about ten times faster than the conventional regularization methods, and it generalized better than the UnUNet method. The proposed RebinNet can achieve rapid image reconstruction and overcome B0 inhomogeneity distortions simultaneously, which would facilitate accurate and fast image guidance in radiotherapy treatments.
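
To make the phrase "B0 inhomogeneity-corrupted k-space" concrete, the following is a minimal 1D sketch, not the RebinNet method itself. It assumes a toy signal model in which each pixel accrues extra phase from its off-resonance frequency during the readout, builds that model as a dense encoding matrix (standing in for the NUFFT modules), and compares a naive inverse Fourier reconstruction with a direct solve that knows the field map. The dwell time `dt`, the sinusoidal field map `delta_f`, the object, and all function names are illustrative assumptions, and no CNN regularization or subsampling is involved.

```python
import numpy as np

n = 64
x = np.arange(n) - n // 2                      # pixel positions
k = np.arange(n) - n // 2                      # k-space sample indices
dt = 5e-5                                      # assumed dwell time in seconds
t = np.arange(n) * dt                          # acquisition time of each sample
delta_f = 200.0 * np.sin(2 * np.pi * x / n)    # assumed off-resonance map in Hz


def encoding_matrix(off_resonance_hz):
    """Dense encoding matrix: Fourier term plus off-resonance phase accrual."""
    spatial_phase = np.outer(k, x) / n
    b0_phase = np.outer(t, off_resonance_hz)
    return np.exp(-2j * np.pi * (spatial_phase + b0_phase))


def rmse(a, b):
    """Root mean squared error between two (possibly complex) arrays."""
    return float(np.sqrt(np.mean(np.abs(a - b) ** 2)))


# Simple piecewise-constant test object (hypothetical, for illustration only).
truth = np.zeros(n)
truth[20:44] = 1.0
truth[28:36] = 2.0

E_b0 = encoding_matrix(delta_f)                # model that includes B0 effects
E_ideal = encoding_matrix(np.zeros(n))         # plain discrete Fourier model
kspace = E_b0 @ truth                          # simulated corrupted k-space

# Naive reconstruction ignores B0 and applies the inverse of the ideal model.
naive = E_ideal.conj().T @ kspace / n
# Model-based reconstruction inverts the B0-aware encoding matrix.
corrected = np.linalg.solve(E_b0, kspace)

rmse_naive = rmse(naive, truth)
rmse_corrected = rmse(corrected, truth)
print(f"RMSE naive: {rmse_naive:.3e}, RMSE corrected: {rmse_corrected:.3e}")
```

In this noise-free, fully sampled toy case the direct solve is enough. With subsampling and noise the inversion becomes ill-posed, which is where a regularizer (learned CNN blocks in the abstract above) would come in.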

Distortion-Corrected Image Reconstruction with Deep Learning on an MRI-Linac

May 23, 2022

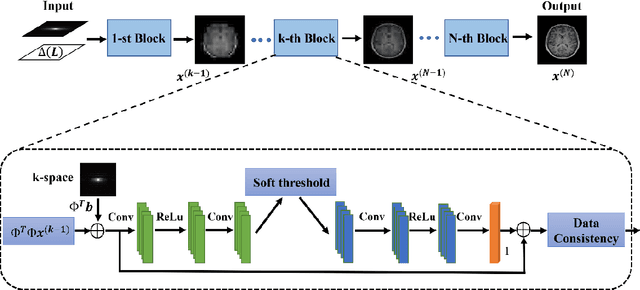

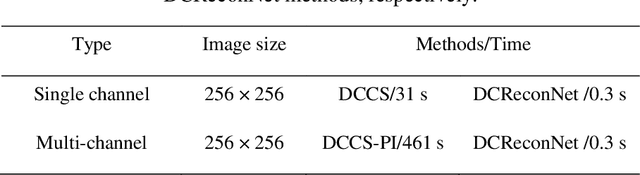

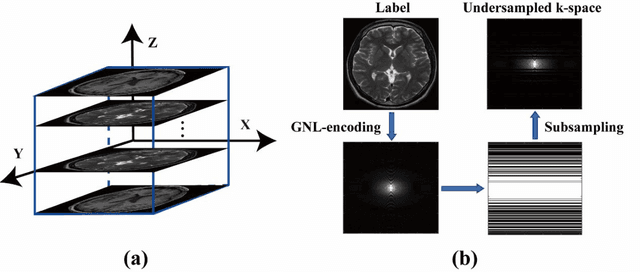

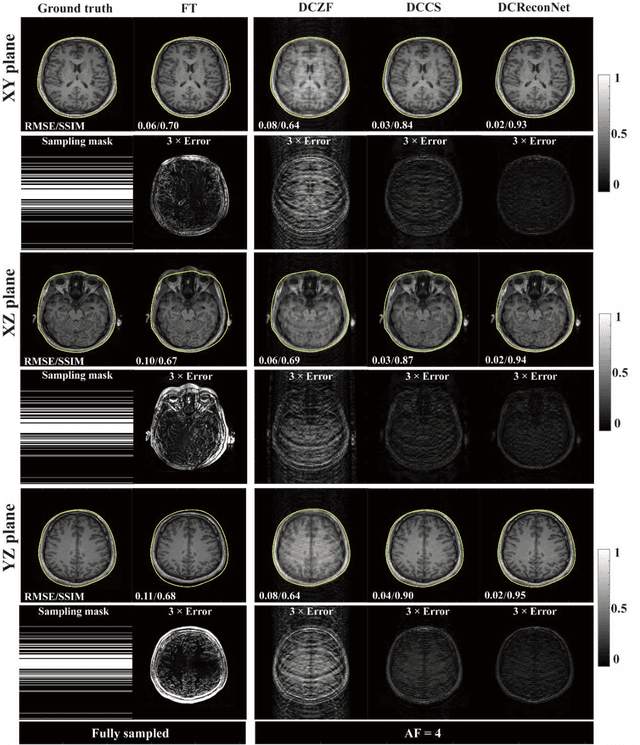

Abstract: Magnetic resonance imaging (MRI) is increasingly utilized for image-guided radiotherapy due to its outstanding soft-tissue contrast and lack of ionizing radiation. However, geometric distortions caused by gradient nonlinearity (GNL) limit anatomical accuracy, potentially compromising the quality of tumour treatments. In addition, slow MR acquisition and reconstruction limit the potential for real-time image guidance. Here, we demonstrate a deep learning-based method that rapidly reconstructs distortion-corrected images from raw k-space data for real-time MR-guided radiotherapy applications. We leverage recent advances in interpretable unrolling networks to develop a Distortion-Corrected Reconstruction Network (DCReconNet) that applies convolutional neural networks (CNNs) to learn effective regularizations and nonuniform fast Fourier transforms for GNL-encoding. DCReconNet was trained on a public MR brain dataset from eleven healthy volunteers for fully sampled and accelerated techniques including parallel imaging (PI) and compressed sensing (CS). The performance of DCReconNet was tested on phantom and volunteer brain data acquired on a 1.0T MRI-Linac. The image quality of DCReconNet, CS-based, and PI-based reconstructions was measured by structural similarity (SSIM) and root-mean-squared error (RMSE) for numerical comparison. The computation time for each method was also reported. Phantom and volunteer results demonstrated that DCReconNet better preserves image structure than CS- and PI-based reconstruction methods. DCReconNet achieved the highest SSIM (0.95 median value) and lowest RMSE (<0.04) on simulated brain images with four-fold acceleration. DCReconNet is over 100 times faster than iterative, regularized reconstruction methods. DCReconNet provides fast and geometrically accurate image reconstruction and has potential for real-time MRI-guided radiotherapy applications.
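
The idea of an unrolled reconstruction can be sketched as a fixed number of iterations, each made of a data-consistency gradient step followed by a regularization step. The example below is an assumption-laden toy, not DCReconNet: it uses a plain subsampled FFT in place of the GNL-encoding NUFFT, a hand-set soft-threshold (the classical ISTA update from compressed sensing) in place of the learned CNN regularizer, and a synthetic sparse 1D signal. The names `forward`, `adjoint`, `unrolled_recon`, and the values of `lam` and `n_unrolls` are hypothetical choices for illustration.

```python
import numpy as np


def forward(x, mask):
    """Subsampled orthonormal FFT: image to measured k-space samples."""
    return mask * np.fft.fft(x, norm="ortho")


def adjoint(y, mask):
    """Adjoint of `forward`: zero-filled inverse FFT."""
    return np.fft.ifft(mask * y, norm="ortho")


def soft_threshold(x, lam):
    """Complex soft-thresholding; stands in for a learned regularizer."""
    mag = np.abs(x)
    return x * np.maximum(1.0 - lam / np.maximum(mag, 1e-12), 0.0)


def objective(x, y, mask, lam):
    """Least-squares data term plus L1 penalty."""
    residual = forward(x, mask) - y
    return 0.5 * np.sum(np.abs(residual) ** 2) + lam * np.sum(np.abs(x))


def unrolled_recon(y, mask, lam=0.01, n_unrolls=50):
    """Run a fixed number of gradient-plus-regularizer iterations."""
    x = np.zeros_like(y)
    for _ in range(n_unrolls):
        # Data-consistency step; step size 1 is valid because the
        # masked orthonormal FFT has operator norm at most 1.
        grad = adjoint(forward(x, mask) - y, mask)
        # Regularization step (a CNN block in a learned unrolled network).
        x = soft_threshold(x - grad, lam)
    return x


rng = np.random.default_rng(0)
n = 128
truth = np.zeros(n, dtype=complex)
truth[rng.choice(n, size=5, replace=False)] = 1.0     # sparse test signal

mask = np.zeros(n)
mask[rng.choice(n, size=n // 4, replace=False)] = 1.0  # four-fold subsampling

y = forward(truth, mask)
lam = 0.01
x_hat = unrolled_recon(y, mask, lam=lam)

obj_start = objective(np.zeros(n, dtype=complex), y, mask, lam)
obj_end = objective(x_hat, y, mask, lam)
print(f"objective: start {obj_start:.4f}, after unrolling {obj_end:.4f}")
```

In a learned unrolled network the threshold step would be replaced by trainable blocks and the number of unrolls would typically be much smaller than the 50 used here, with the parameters fitted end to end on training data.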
