Boheng Zhang

Multi-level Domain Adaptation for Lane Detection

Jun 21, 2022

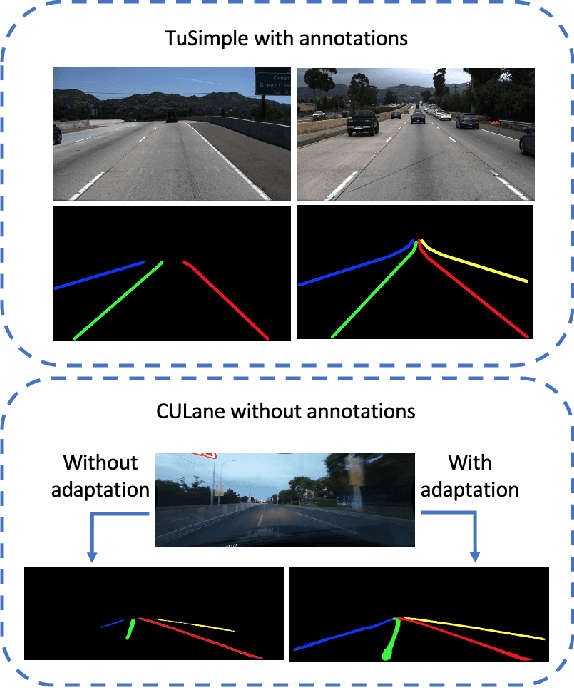

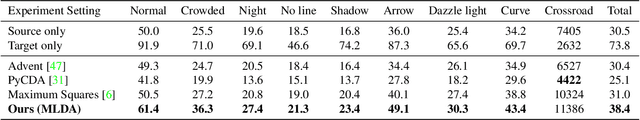

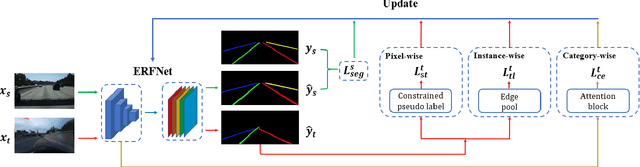

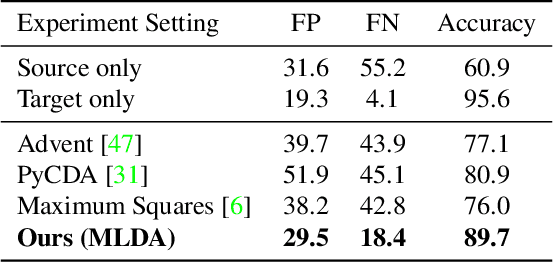

Abstract: We focus on bridging the domain discrepancy in lane detection across different scenarios, which greatly reduces the extra annotation and re-training costs for autonomous driving. A critical factor hindering cross-domain lane detection is that conventional methods focus only on pixel-wise loss while ignoring the shape and position priors of lanes. To address this issue, we propose the Multi-level Domain Adaptation (MLDA) framework, a new perspective that handles cross-domain lane detection at three complementary semantic levels: pixel, instance, and category. Specifically, at the pixel level, we apply cross-class confidence constraints in self-training to tackle the imbalanced confidence distribution of lane and background. At the instance level, we go beyond pixels to treat segmented lanes as instances and encourage discriminative features in the target domain with triplet learning, which effectively rebuilds the semantic context of lanes and helps alleviate feature confusion. At the category level, we propose an adaptive inter-domain embedding module to exploit the position prior of lanes during adaptation. On two challenging datasets, i.e., TuSimple and CULane, our approach improves lane detection performance by a large margin, with gains of 8.8% in accuracy and 7.4% in F1-score, respectively, over state-of-the-art domain adaptation algorithms.
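The instance-level step relies on triplet learning. The abstract does not give the loss, so the following is only a minimal sketch of the generic triplet margin loss, assuming Euclidean distance and a hypothetical margin of 1.0. The function names and the toy 2-D embeddings are illustrative and are not taken from the paper.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_margin_loss(anchor, positive, negative, margin=1.0):
    """Generic triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The loss is zero once the negative is at least `margin` farther
    from the anchor than the positive is. The margin value is an
    assumption chosen for illustration.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Toy embeddings (hypothetical): anchor and positive stand for features
# of the same lane instance, negative for a different instance.
anchor = [0.0, 0.0]
positive = [0.0, 1.0]
far_negative = [3.0, 4.0]    # distance 5.0 from the anchor
near_negative = [0.0, 1.5]   # distance 1.5 from the anchor

easy = triplet_margin_loss(anchor, positive, far_negative)
hard = triplet_margin_loss(anchor, positive, near_negative)
```

In this toy setup the far negative already satisfies the margin and contributes no loss, while the near negative is penalized, which is the behavior that pushes features of different instances apart.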

Multi-scale Neural Networks for Retinal Blood Vessels Segmentation

Apr 11, 2018

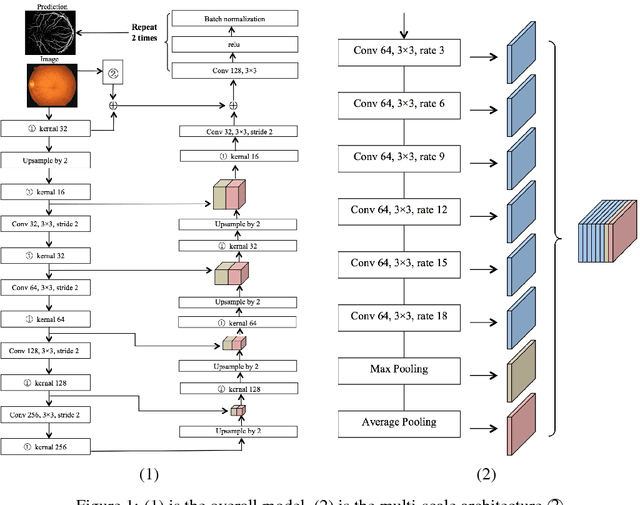

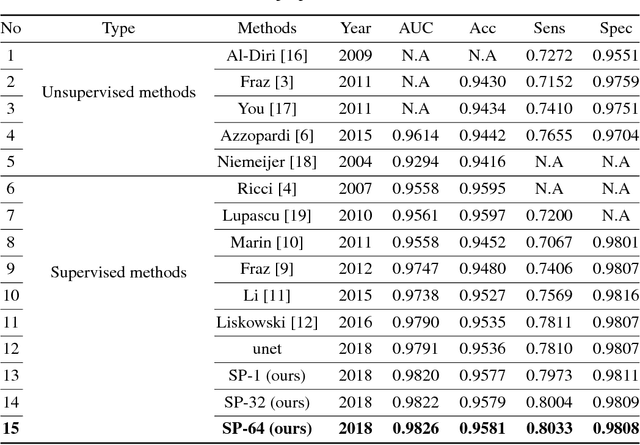

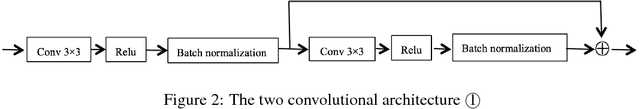

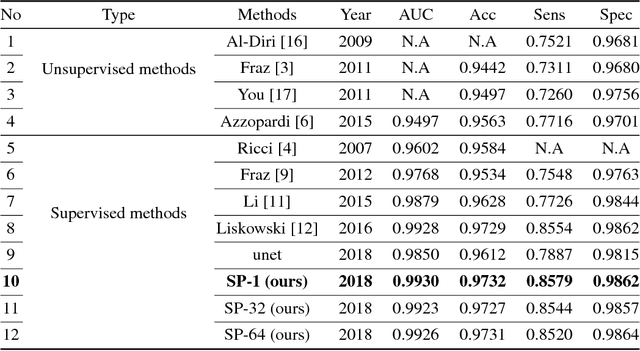

Abstract: Existing supervised approaches do not make use of low-level features, which are actually effective for this task. Another deficiency is that they do not consider the relations between pixels, which means effective features are not extracted. In this paper, we propose a novel convolutional neural network that makes sufficient use of low-level features together with high-level features and uses atrous convolution to obtain multi-scale features, which should be considered effective features. Our model is tested on three standard benchmarks: the DRIVE, STARE, and CHASE databases. The results show that our model significantly outperforms existing approaches in terms of accuracy, sensitivity, specificity, and area under the ROC curve, and achieves the highest prediction speed. Our work provides evidence of the power of wide and deep neural networks in the retinal blood vessel segmentation task, and the approach could be applied to other medical imaging tasks.
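Atrous (dilated) convolution widens the receptive field without adding weights by spacing the kernel taps apart. The sketch below shows the idea in one dimension with plain Python lists. It assumes "valid" boundaries (no padding), and the function name, kernel, and input are hypothetical. It does not reproduce the network described in the abstract.

```python
def atrous_conv1d(signal, kernel, dilation=1):
    """1-D atrous convolution (cross-correlation form, no padding).

    out[i] = sum_k kernel[k] * signal[i + k * dilation]

    With K taps and dilation d, each output sees a window of
    (K - 1) * d + 1 input samples.
    """
    span = (len(kernel) - 1) * dilation + 1  # receptive field size
    n_out = len(signal) - span + 1
    if n_out <= 0:
        return []
    return [
        sum(w * signal[i + k * dilation] for k, w in enumerate(kernel))
        for i in range(n_out)
    ]


signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 0, -1]  # simple difference filter, chosen for illustration

dense = atrous_conv1d(signal, kernel, dilation=1)   # window of 3 samples
atrous = atrous_conv1d(signal, kernel, dilation=2)  # window of 5 samples
```

The same three weights cover three samples at dilation 1 and five samples at dilation 2, so running several dilation rates side by side is one way to gather multi-scale features.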
