Bo Göransson

Federated Learning Using Three-Operator ADMM

Nov 10, 2022

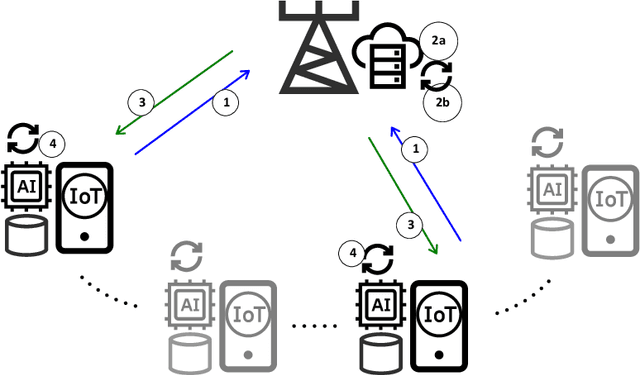

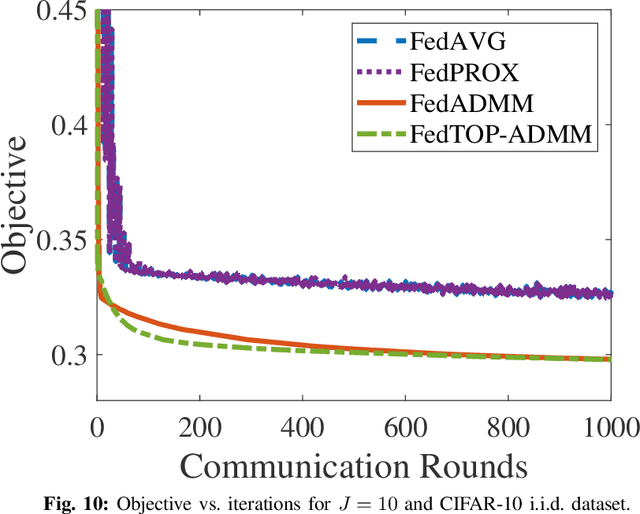

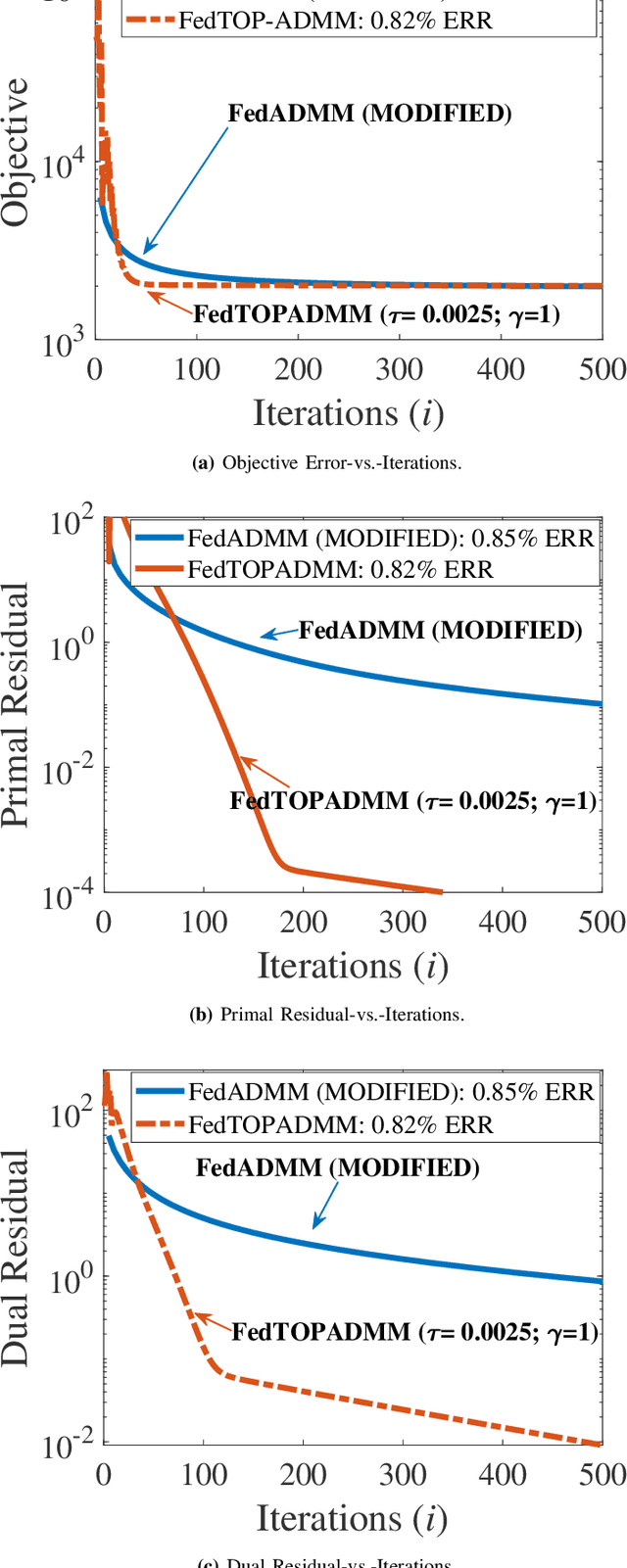

Abstract: Federated learning (FL) has emerged as a distributed machine learning paradigm that avoids the transmission of data generated on the users' side. Although data are not transmitted, edge devices have to deal with limited communication bandwidths, data heterogeneity, and straggler effects due to the limited computational resources of users' devices. A prominent approach to overcome such difficulties is FedADMM, which is based on the classical two-operator consensus alternating direction method of multipliers (ADMM). The common assumption of FL algorithms, including FedADMM, is that they learn a global model using data only on the users' side and not on the edge server. However, in edge learning, the server is expected to be near the base station and have direct access to rich datasets. In this paper, we argue that leveraging the rich data on the edge server is much more beneficial than utilizing only user datasets. Specifically, we show that the mere application of FL with an additional virtual user node representing the data on the edge server is inefficient. We propose FedTOP-ADMM, which generalizes FedADMM and is based on a three-operator ADMM-type technique that exploits a smooth cost function on the edge server to learn a global model in parallel with the edge devices. Our numerical experiments indicate that FedTOP-ADMM achieves a substantial gain of up to 33% in communication efficiency to reach a desired test accuracy relative to FedADMM with a virtual user on the edge server.
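For readers unfamiliar with the classical two-operator consensus ADMM that the abstract cites as the basis of FedADMM, the following is a minimal sketch on a toy problem. It is not the FedADMM or FedTOP-ADMM algorithm from the paper: the local least-squares losses, the function name `consensus_admm_least_squares`, the penalty `rho`, and the iteration count are all assumptions chosen for illustration. Each user holds private data `(A_i, b_i)`, and only the local iterates are averaged by the server.

```python
import numpy as np

def consensus_admm_least_squares(A_list, b_list, rho=10.0, num_iters=200):
    """Generic scaled-form consensus ADMM (illustrative, not the paper's method).

    Minimizes sum_i 0.5 * ||A_i x_i - b_i||^2 subject to x_i = z for all users i.
    """
    num_users = len(A_list)
    n = A_list[0].shape[1]
    x = np.zeros((num_users, n))  # local models, one row per user
    u = np.zeros((num_users, n))  # scaled dual variables, one row per user
    z = np.zeros(n)               # global (consensus) model held by the server

    for _ in range(num_iters):
        # Local step: each user solves a regularized least-squares problem
        # using only its own data and the current global model.
        for i, (A, b) in enumerate(zip(A_list, b_list)):
            lhs = A.T @ A + rho * np.eye(n)
            rhs = A.T @ b + rho * (z - u[i])
            x[i] = np.linalg.solve(lhs, rhs)
        # Server step: average the local models plus scaled duals.
        z = np.mean(x + u, axis=0)
        # Dual step: accumulate each user's disagreement with the global model.
        u += x - z
    return z

# Synthetic data for four hypothetical users (assumed, not from the paper).
rng = np.random.default_rng(0)
A_list = [rng.standard_normal((20, 3)) for _ in range(4)]
b_list = [rng.standard_normal(20) for _ in range(4)]

z_admm = consensus_admm_least_squares(A_list, b_list)
# Reference: centralized least squares on the pooled data.
z_ref = np.linalg.lstsq(np.vstack(A_list), np.concatenate(b_list), rcond=None)[0]
```

On this toy problem the consensus iterate `z_admm` should agree with the pooled solution `z_ref`, even though no user's raw data leaves its local update. The three-operator variant described in the abstract additionally handles a smooth cost on the server; that step is not sketched here.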

EVM Mitigation with PAPR and ACLR Constraints in Large-Scale MIMO-OFDM Using TOP-ADMM

May 25, 2022

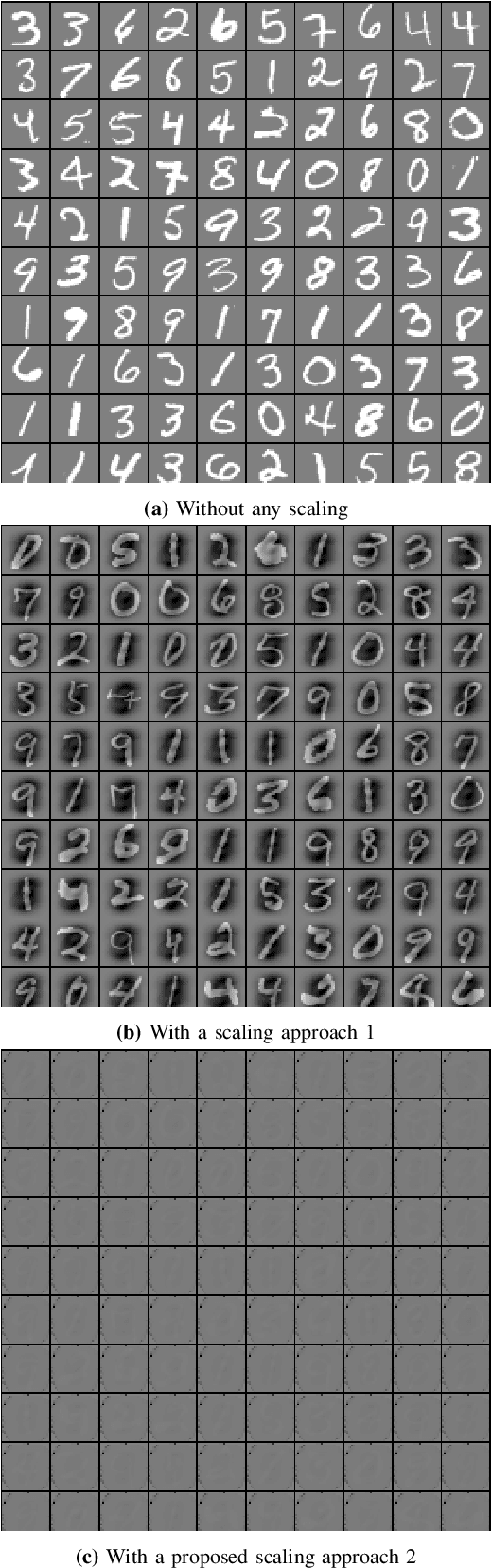

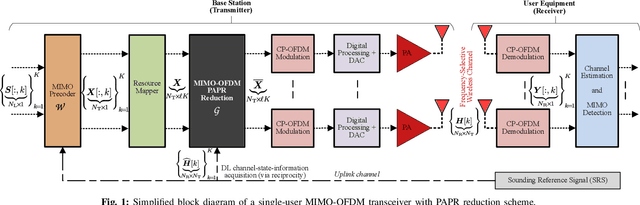

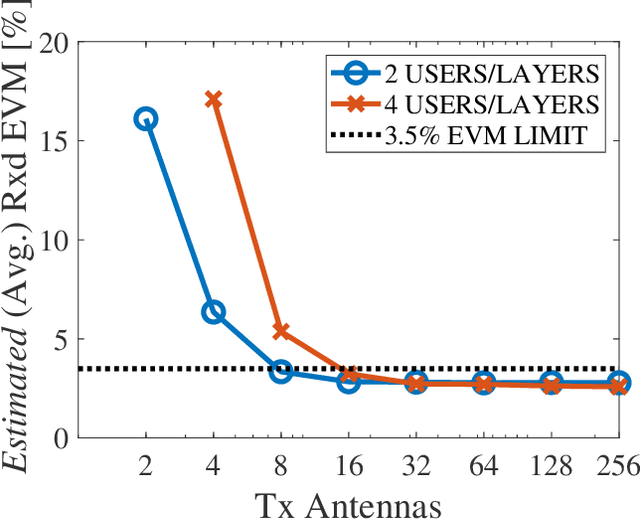

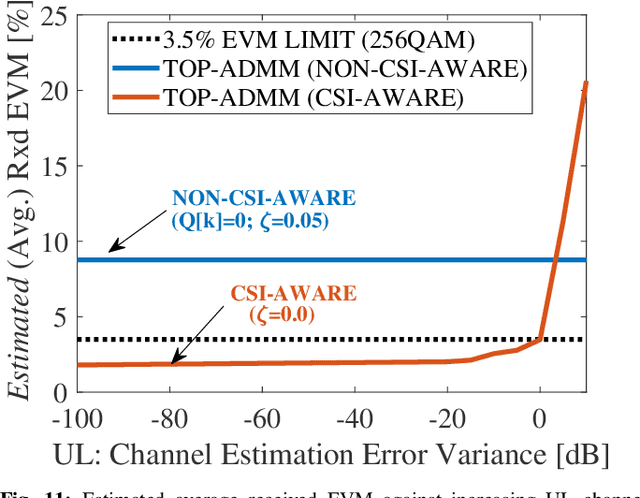

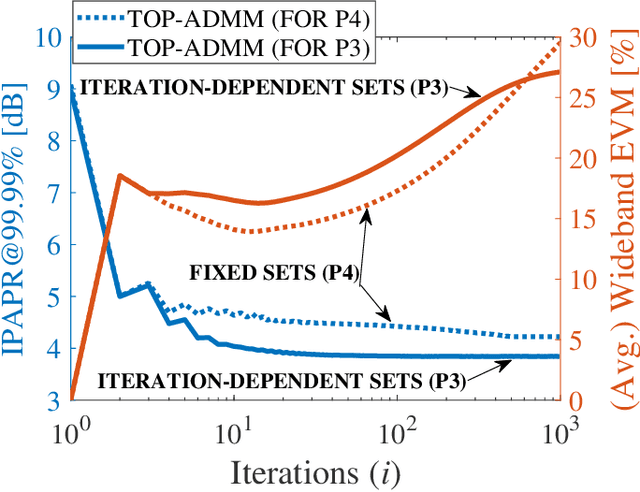

Abstract: Although signal distortion-based peak-to-average power ratio (PAPR) reduction is a feasible candidate for orthogonal frequency division multiplexing (OFDM) to meet standard/regulatory requirements, the error vector magnitude (EVM) stemming from the PAPR reduction has a deleterious impact on the performance of high-data-rate multiple-input multiple-output (MIMO) systems. Moreover, these systems must constrain the adjacent channel leakage ratio (ACLR) to comply with regulatory requirements. Several recent works have investigated the mitigation of the EVM seen at the receivers by capitalizing on the excess spatial dimensions inherent in large-scale MIMO, but they assume the availability of perfect channel state information (CSI) with spatially uncorrelated wireless channels. Unfortunately, practical systems operate with erroneous CSI and spatially correlated channels. Additionally, most standards support user-specific/CSI-aware beamformed and cell-specific/non-CSI-aware broadcasting channels. Hence, we formulate a robust EVM mitigation problem under channel uncertainty with nonconvex PAPR and ACLR constraints catering to beamforming/broadcasting. To solve this formidable problem, we develop an efficient scheme using our recently proposed three-operator alternating direction method of multipliers (TOP-ADMM) algorithm and benchmark it against two three-operator algorithms previously presented for machine learning purposes. Numerical results show the efficacy of the proposed algorithm under imperfect CSI and spatially correlated channels.
