Blake Camp

Deep Active Learning Using Barlow Twins

Dec 30, 2022

Abstract: The generalisation performance of a convolutional neural network (CNN) is strongly influenced by the quantity, quality, and diversity of its training images. All training data must be annotated beforehand, yet in many real-world applications data is easy to acquire but expensive and time-consuming to label. The goal of active learning is to draw the most informative samples from the unlabeled pool, which can then be used for training after annotation. With an entirely different objective, self-supervised learning (SSL) has been rapidly gaining popularity by closing the performance gap with supervised methods on large computer vision benchmarks. Recent SSL methods have been shown to produce low-level representations that are invariant to artificially created distortions of the input sample, e.g. rotation, solarization, and cropping. SSL approaches also rely on simpler and more scalable learning frameworks. In this paper, we unify these two families of approaches from the angle of active learning on a self-supervised manifold and propose Deep Active Learning using Barlow Twins (DALBT), an active learning method for all datasets that combines a classifier with the self-supervised loss framework of Barlow Twins, so that the model can encode invariance to artificially created distortions, e.g. rotation, solarization, and cropping.
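For readers unfamiliar with the self-supervised objective named in this abstract, the following is a minimal sketch of the standard Barlow Twins loss in plain Python. It is not the DALBT method itself: how the paper combines this loss with the classifier is not stated in the abstract. The function names and the off-diagonal weight `lambd=5e-3` are assumptions chosen for illustration.

```python
import math


def standardize(batch):
    """Standardize each embedding dimension to zero mean and unit std over the batch."""
    n, dim = len(batch), len(batch[0])
    out = [[0.0] * dim for _ in range(n)]
    for j in range(dim):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in col) / n)
        std = std if std > 0 else 1.0  # guard against constant dimensions
        for i in range(n):
            out[i][j] = (batch[i][j] - mean) / std
    return out


def barlow_twins_loss(z_a, z_b, lambd=5e-3):
    """Barlow Twins loss for two batches of embeddings of distorted views.

    z_a[k] and z_b[k] are the embeddings of two distortions of sample k.
    lambd (an assumed value) weights the redundancy-reduction term.
    """
    n, dim = len(z_a), len(z_a[0])
    za, zb = standardize(z_a), standardize(z_b)
    on_diag, off_diag = 0.0, 0.0
    for i in range(dim):
        for j in range(dim):
            # Entry (i, j) of the cross-correlation matrix between the two views.
            c_ij = sum(za[k][i] * zb[k][j] for k in range(n)) / n
            if i == j:
                on_diag += (1.0 - c_ij) ** 2  # invariance term: push C_ii toward 1
            else:
                off_diag += c_ij ** 2  # redundancy term: push C_ij toward 0
    return on_diag + lambd * off_diag


if __name__ == "__main__":
    views = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
    print(barlow_twins_loss(views, views))
```

The loss is zero when both views yield identical, mutually decorrelated embedding dimensions, which is the invariance-to-distortion property the abstract refers to.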

Continual Learning with Deep Artificial Neurons

Nov 13, 2020

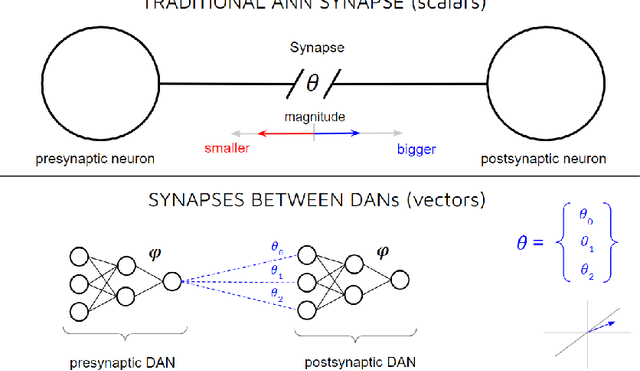

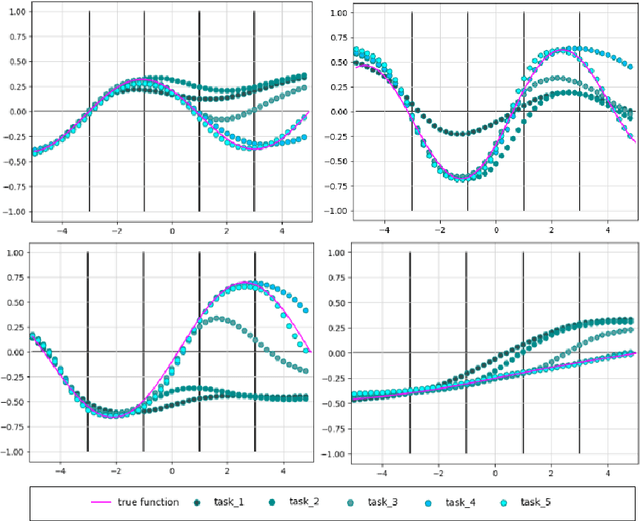

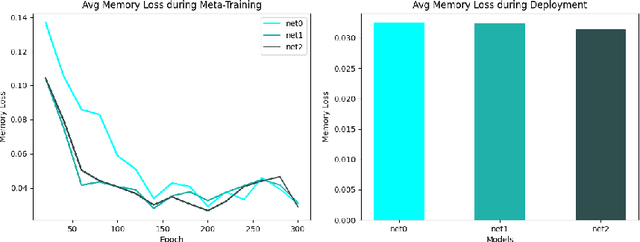

Abstract: Neurons in real brains are enormously complex computational units. Among other things, they are responsible for transforming inbound electro-chemical vectors into outbound action potentials, updating the strengths of intermediate synapses, regulating their own internal states, and modulating the behavior of other nearby neurons. One could argue that these cells are the only things exhibiting any semblance of real intelligence. It is odd, therefore, that the machine learning community has, for so long, relied upon the assumption that this complexity can be reduced to a simple sum-and-fire operation. We ask: might there be some benefit to substantially increasing the computational power of individual neurons in artificial systems? To answer this question, we introduce Deep Artificial Neurons (DANs), which are themselves realized as deep neural networks. Conceptually, we embed DANs inside each node of a traditional neural network, and we connect these neurons at multiple synaptic sites, thereby vectorizing the connections between pairs of cells. We demonstrate that it is possible to meta-learn a single parameter vector, which we dub a neuronal phenotype, shared by all DANs in the network, which facilitates a meta-objective during deployment. Here, we isolate continual learning as our meta-objective, and we show that a suitable neuronal phenotype can endow a single network with an innate ability to update its synapses with minimal forgetting, using standard backpropagation, without experience replay or separate wake/sleep phases. We demonstrate this ability on sequential non-linear regression tasks.
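The structural idea in this abstract (every node is a small network, and all nodes share one parameter vector) can be sketched roughly as follows. This is an illustrative guess at the wiring, not the paper's architecture: the two-layer `tanh` form, the dictionary layout of the phenotype, and all function names are assumptions, and the meta-learning of the phenotype is omitted entirely.

```python
import math
import random


def make_phenotype(in_dim, hidden_dim, out_dim, seed=0):
    """Create one shared parameter set (hypothetical layout) used by every neuron."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

    return {"w1": matrix(hidden_dim, in_dim), "w2": matrix(out_dim, hidden_dim)}


def dan_forward(phenotype, x):
    """Run one deep artificial neuron: a small MLP mapping a vector to a vector."""
    hidden = [math.tanh(sum(w * v for w, v in zip(row, x))) for row in phenotype["w1"]]
    return [math.tanh(sum(w * h for w, h in zip(row, hidden))) for row in phenotype["w2"]]


def layer_forward(phenotype, synapses, inputs):
    """Apply the same phenotype in every node of a layer.

    synapses[i][j] is the per-neuron weight on input channel j of neuron i.
    Only these synapses differ between neurons; the phenotype is shared.
    """
    return [
        dan_forward(phenotype, [s * v for s, v in zip(syn, inputs)])
        for syn in synapses
    ]


if __name__ == "__main__":
    phenotype = make_phenotype(in_dim=4, hidden_dim=3, out_dim=2)
    synapses = [[1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0], [0.5, -0.5, 0.2, 0.1]]
    print(layer_forward(phenotype, synapses, [0.1, -0.2, 0.3, 0.4]))
```

Each neuron emits a vector rather than a scalar, which is one way to read "vectorizing the connections between pairs of cells". In this sketch, two neurons with identical synapses produce identical outputs because they share the phenotype.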

Deep Active Learning via Open Set Recognition

Jul 14, 2020

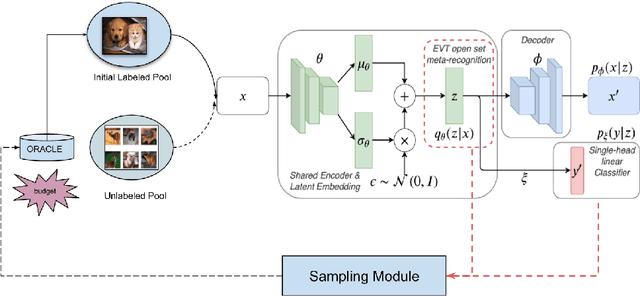

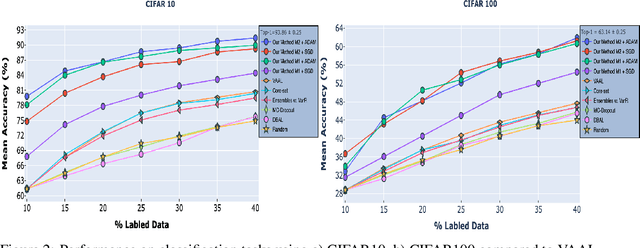

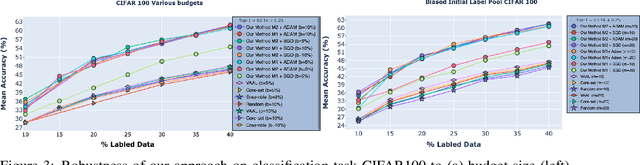

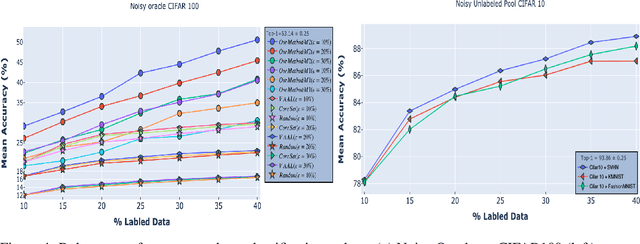

Abstract: In many applications, data is easy to acquire but expensive and time-consuming to label; prominent examples include medical imaging and NLP. This disparity has only grown in recent years as our ability to collect data improves. Under these constraints, it makes sense to select only the most informative instances from the unlabeled pool and request an oracle (e.g., a human expert) to provide labels for those samples. The goal of active learning is to infer the informativeness of unlabeled samples so as to minimize the number of requests to the oracle. Here, we formulate active learning as an open-set recognition problem. In this latter paradigm, only some of the inputs belong to known classes; the classifier must identify the rest as unknown. More specifically, we leverage variational neural networks (VNNs), which produce high-confidence (i.e., low-entropy) predictions only for inputs that closely resemble the training data. We use the inverse of this confidence measure to select the samples that the oracle should label. Intuitively, unlabeled samples that the VNN is uncertain about are more informative for future training. We carried out an extensive evaluation of our novel, probabilistic formulation of active learning, achieving state-of-the-art results on CIFAR-10 and CIFAR-100. In addition, unlike current active learning methods, our algorithm can learn tasks with non-i.i.d. distributions, without the need for task labels. As our experiments show, when the unlabeled pool consists of a mixture of samples from multiple tasks, our approach can automatically distinguish between samples from seen vs. unseen tasks.
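The selection rule described in this abstract (query the samples the model is least confident about) can be illustrated with a minimal sketch. It assumes the model's predictions are already available as class-probability lists and uses predictive entropy as the uncertainty score. The function names are hypothetical, and the VNN that produces the probabilities in the paper is not modeled here.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(pool_probs, budget):
    """Return indices of the `budget` most uncertain unlabeled samples.

    pool_probs[i] is the predicted class distribution for unlabeled sample i.
    High entropy means low confidence, so those samples go to the oracle first.
    """
    ranked = sorted(
        range(len(pool_probs)),
        key=lambda i: entropy(pool_probs[i]),
        reverse=True,
    )
    return ranked[:budget]


if __name__ == "__main__":
    pool = [
        [0.98, 0.01, 0.01],  # confident prediction
        [0.34, 0.33, 0.33],  # nearly uniform, most uncertain
        [0.70, 0.20, 0.10],
        [0.50, 0.50, 0.00],
    ]
    print(select_for_labeling(pool, budget=2))  # → [1, 2]
```

In a full active learning loop, the selected indices would be sent to the oracle, the newly labeled samples added to the training set, and the model retrained before the next round of selection.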

A Scalable Approach to Multi-Context Continual Learning via Lifelong Skill Encoding

May 25, 2018

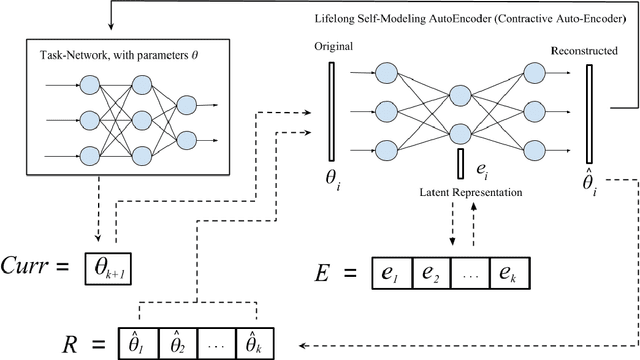

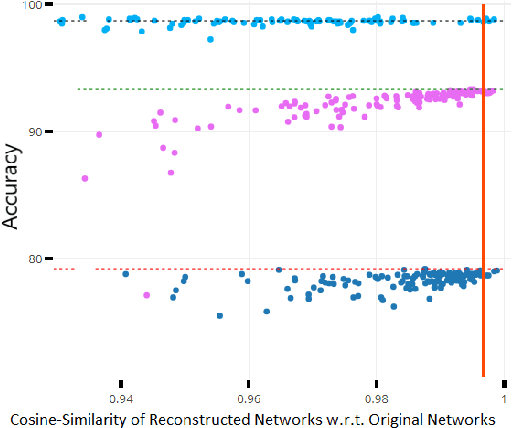

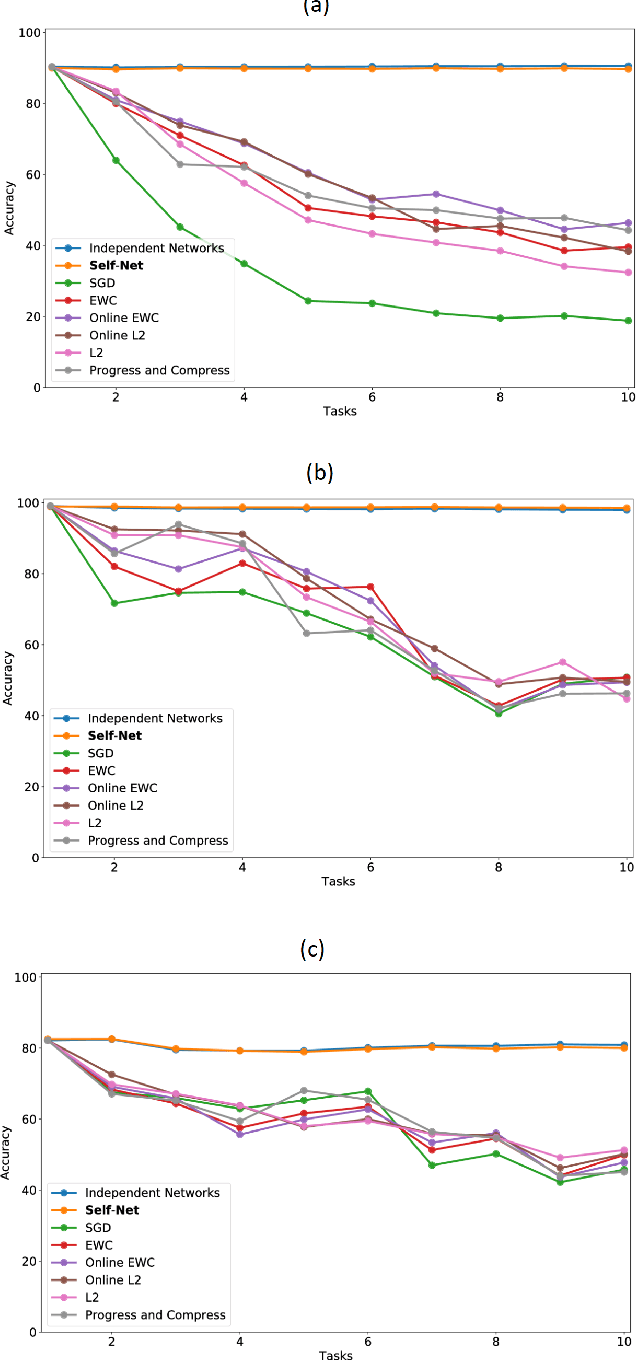

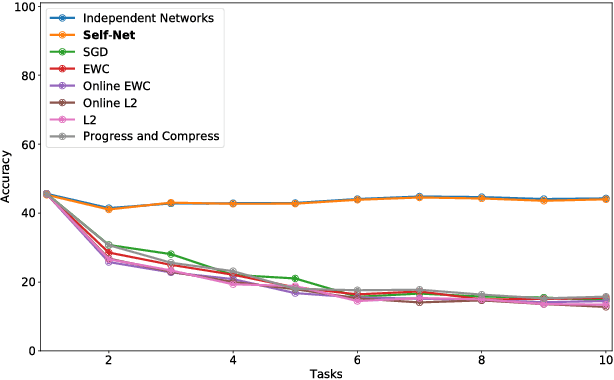

Abstract: Continual or lifelong learning (CL) is one of the most challenging problems in machine learning. In this paradigm, a system must learn new tasks, contexts, or data without forgetting previously learned information. We present a scalable approach to multi-context continual learning (MCCL) in which we decouple how a system learns to solve new tasks (i.e., acquires skills) from how it stores them. Our approach leverages two types of artificial networks: (1) a set of reusable, task-specific networks (TN) that can be trained as needed to learn new skills, and (2) a lifelong, autoencoder network (EN) that stores all learned skills in a compact, latent space. To learn a new skill, we first train a TN using conventional backpropagation, thus placing no restrictions on the system's ability to encode the new task. We then incorporate the newly learned skill into the latent space by first recalling previously learned skills using our EN and then retraining it on both the new and recalled skills. Our approach can efficiently store an arbitrary number of skills without compromising previously learned information because each skill is stored as a separate latent vector. Whenever a particular skill is needed, we recall the necessary weights using our EN and then load them into the corresponding TN. Experiments on the MNIST and CIFAR datasets show that we can continually learn new skills without compromising the performance of existing skills. To the best of our knowledge, we are the first to demonstrate the feasibility of encoding entire networks in order to facilitate efficient continual learning.
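The store-and-recall workflow in this abstract can be sketched as bookkeeping code. This is only an outline of the control flow under loud assumptions: `IdentityCodec` is a placeholder that stands in for the trained autoencoder network (EN) and performs no compression, and `SkillStore`, `flatten`, and `unflatten` are hypothetical names introduced for illustration.

```python
def flatten(layers):
    """Flatten a list of weight matrices (lists of rows) into one vector."""
    return [w for layer in layers for row in layer for w in row]


def unflatten(vector, shapes):
    """Rebuild weight matrices with the given (rows, cols) shapes from a flat vector."""
    layers, pos = [], 0
    for rows, cols in shapes:
        layers.append([vector[pos + r * cols: pos + (r + 1) * cols] for r in range(rows)])
        pos += rows * cols
    return layers


class IdentityCodec:
    """Placeholder for the EN; a real one would be a trained neural autoencoder."""

    def fit(self, weight_vectors):
        pass  # a real EN would be retrained on these vectors here

    def encode(self, vector):
        return list(vector)

    def decode(self, latent):
        return list(latent)


class SkillStore:
    """Keeps one latent vector per skill and rebuilds TN weights on demand."""

    def __init__(self, codec, shapes):
        self.codec = codec
        self.shapes = shapes  # layer shapes of the task-specific network (TN)
        self.latents = {}

    def add_skill(self, name, layers):
        # Recall every stored skill first, then refit on recalled + new weights.
        names = list(self.latents)
        recalled = [self.codec.decode(self.latents[n]) for n in names]
        new_vector = flatten(layers)
        self.codec.fit(recalled + [new_vector])
        for skill, vector in zip(names + [name], recalled + [new_vector]):
            self.latents[skill] = self.codec.encode(vector)

    def recall(self, name):
        """Decode a skill's latent vector into weights ready to load into a TN."""
        return unflatten(self.codec.decode(self.latents[name]), self.shapes)


if __name__ == "__main__":
    store = SkillStore(IdentityCodec(), shapes=[(2, 2), (1, 2)])
    store.add_skill("digits", [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0]]])
    print(store.recall("digits"))
```

With a lossy learned codec in place of the placeholder, the recalled weights would only approximate the originals, so the refit step on both new and recalled skills is what would keep earlier skills from degrading.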
