Bingqing Yu

"Are you sure?": Preliminary Insights from Scaling Product Comparisons to Multiple Shops

Jul 08, 2021

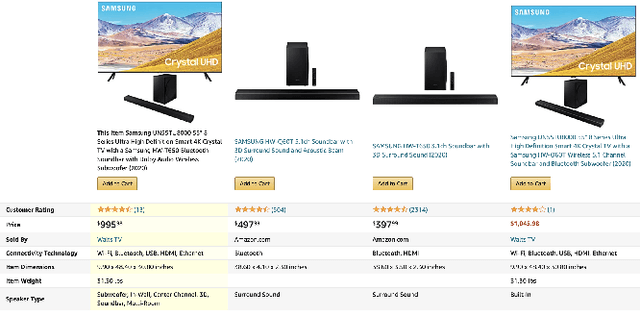

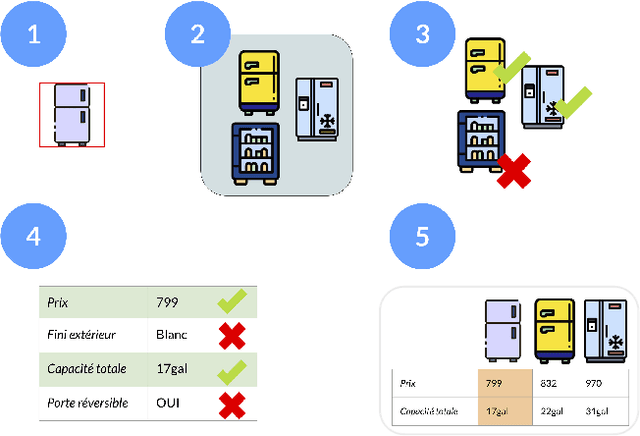

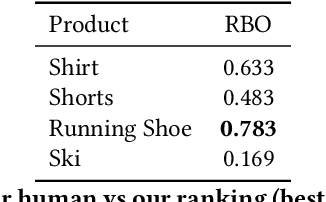

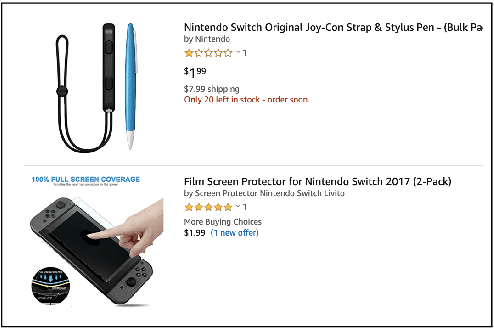

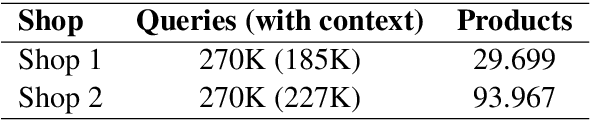

Abstract: Large eCommerce players introduced comparison tables as a new type of recommendation. However, building comparisons at scale without pre-existing training/taxonomy data remains an open challenge, especially within the operational constraints of shops in the long tail. We present preliminary results from building a comparison pipeline designed to scale in a multi-shop scenario: we describe our design choices and run extensive benchmarks on multiple shops to stress-test it. Finally, we run a small user study on property selection and conclude by discussing potential improvements and highlighting the questions that remain to be addressed.

SIGIR 2021 E-Commerce Workshop Data Challenge

Apr 27, 2021

Abstract: The 2021 SIGIR workshop on eCommerce is hosting the Coveo Data Challenge for "In-session prediction for purchase intent and recommendations". The challenge addresses the growing need for reliable predictions within the boundaries of a shopping session, as customer intentions can be different depending on the occasion. The need for efficient procedures for personalization is even clearer if we consider the e-commerce landscape more broadly: outside of giant digital retailers, the constraints of the problem are stricter, due to smaller user bases and the realization that most users are not frequently returning customers. We release a new session-based dataset including more than 30M fine-grained browsing events (product detail, add, purchase), enriched by linguistic behavior (queries made by shoppers, with items clicked and items not clicked after the query) and catalog meta-data (images, text, pricing information). On this dataset, we ask participants to showcase innovative solutions for two open problems: a recommendation task, where a model is shown some events at the start of a session and is asked to predict future product interactions; and an intent prediction task, where a model is shown a session containing an add-to-cart event and is asked to predict whether the item will be bought before the end of the session.

Query2Prod2Vec: Grounded Word Embeddings for eCommerce

Apr 02, 2021

Abstract: We present Query2Prod2Vec, a model that grounds lexical representations for product search in product embeddings: in our model, meaning is a mapping between words and a latent space of products in a digital shop. We leverage shopping sessions to learn the underlying space and use merchandising annotations to build lexical analogies for evaluation: our experiments show that our model is more accurate than known techniques from the NLP and IR literature. Finally, we stress the importance of data efficiency for product search outside of retail giants, and highlight how Query2Prod2Vec fits with practical constraints faced by most practitioners.

BERT Goes Shopping: Comparing Distributional Models for Product Representations

Dec 17, 2020

Abstract: Word embeddings (e.g., word2vec) have been applied successfully to eCommerce products through prod2vec. Inspired by the recent performance improvements on several NLP tasks brought by contextualized embeddings, we propose to transfer BERT-like architectures to eCommerce: our model, ProdBERT, is trained to generate representations of products through masked session modeling. Through extensive experiments over multiple shops, different tasks, and a range of design choices, we systematically compare the accuracy of ProdBERT and prod2vec embeddings: while ProdBERT is found to be superior to traditional methods in several scenarios, we highlight the importance of resources and hyperparameters in the best performing models. Finally, we conclude by providing guidelines for training embeddings under a variety of computational and data constraints.

Blending Search and Discovery: Tag-Based Query Refinement with Contextual Reinforcement Learning

Oct 15, 2020

Abstract: We tackle tag-based query refinement as a mobile-friendly alternative to standard facet search. We approach the inference challenge with reinforcement learning, and propose a deep contextual bandit that can be efficiently scaled in a multi-tenant SaaS scenario.

Fantastic Embeddings and How to Align Them: Zero-Shot Inference in a Multi-Shop Scenario

Jul 20, 2020

Abstract: This paper addresses the challenge of leveraging multiple embedding spaces for multi-shop personalization, proving that zero-shot inference is possible by transferring shopping intent from one website to another without manual intervention. We detail a machine learning pipeline to train and optimize embeddings within shops first, and support the quantitative findings with additional qualitative insights. We then turn to the harder task of using learned embeddings across shops: if products from different shops live in the same vector space, user intent, as represented by regions in this space, can then be transferred in a zero-shot fashion across websites. We propose and benchmark unsupervised and supervised methods to "travel" between embedding spaces, each with its own assumptions on data quantity and quality. We show that zero-shot personalization is indeed possible at scale by testing the shared embedding space with two downstream tasks, event prediction and type-ahead suggestions. Finally, we curate a cross-shop anonymized embeddings dataset to foster an inclusive discussion of this important business scenario.

Shopping in the Multiverse: A Counterfactual Approach to In-Session Attribution

Jul 20, 2020

Abstract: We tackle the challenge of in-session attribution for on-site search engines in eCommerce. We phrase the problem as a causal counterfactual inference, and contrast the approach with rule-based systems from industry settings and prediction models from the multi-touch attribution literature. We approach counterfactuals in analogy with treatments in formal semantics, explicitly modeling possible outcomes through alternative shopper timelines; in particular, we propose to learn a generative browsing model over a target shop, leveraging the latent space induced by prod2vec embeddings; we show how natural language queries can be effectively represented in the same space and how "search intervention" can be performed to assess causal contribution. Finally, we validate the methodology on a synthetic dataset that mimics important patterns which emerged in customer interviews and qualitative analysis, and we present preliminary findings on an industry dataset from a partnering shop.

How to Grow a Tree: Personalized Category Suggestions for eCommerce Type-Ahead

May 26, 2020

Abstract: In an attempt to balance precision and recall in the search page, leading digital shops have been effectively nudging users into selecting category facets as early as the type-ahead suggestions. In this work, we present SessionPath, a novel neural network model that improves facet suggestions on two counts: first, the model is able to leverage session embeddings to provide scalable personalization; second, SessionPath predicts facets by explicitly producing a probability distribution at each node in the taxonomy path. We benchmark SessionPath on two partnering shops against count-based and neural models, and show how business requirements and model behavior can be combined in a principled way.

"An Image is Worth a Thousand Features": Scalable Product Representations for In-Session Type-Ahead Personalization

Mar 11, 2020

Abstract: We address the problem of personalizing query completion in a digital commerce setting, in which the bounce rate is typically high and recurring users are rare. We focus on in-session personalization and improve a standard noisy channel model by injecting dense vectors computed from product images at query time. We argue that image-based personalization displays several advantages over alternative proposals (from data availability to business scalability), and provide quantitative evidence and qualitative support on the effectiveness of the proposed methods. Finally, we show how a shared vector space between similar shops can be used to improve the experience of users browsing across sites, opening up the possibility of applying zero-shot unsupervised personalization to increase conversions. This will prove to be particularly relevant to retail groups that manage multiple brands and/or websites and to multi-tenant SaaS providers that serve multiple clients in the same space.

Personalization of Saliency Estimation

Nov 21, 2017

Abstract: Most existing saliency models use low-level features or task descriptions when generating attention predictions. However, the link between observer characteristics and gaze patterns is rarely investigated. We present a novel saliency prediction technique which takes viewers' identities and personal traits into consideration when modeling human attention. Instead of only computing image salience for average observers, we consider the interpersonal variation in the viewing behaviors of observers with different personal traits and backgrounds. We present an enriched derivative of the GAN architecture, which is able to generate personalized saliency predictions when fed with image stimuli and specific information about the observer. Our model contains a generator which generates grayscale saliency heat maps based on the image and an observer label. The generator is paired with an adversarial discriminator which learns to distinguish generated salience from ground truth salience. The discriminator also has the observer label as an input, which contributes to the personalization ability of our approach. We evaluate the performance of our personalized salience model by comparison with a benchmark model along with other un-personalized predictions, and illustrate improvements in prediction accuracy for all tested observer groups.
