Bing Wu

TactileAR: Active Tactile Pattern Reconstruction

Oct 11, 2024

Abstract: High-resolution (HR) contact surface information is essential for robotic grasping and precise manipulation tasks. However, current taxel-based sensors still struggle to obtain HR tactile information. In this paper, we use low-resolution (LR) tactile sensors to reconstruct localized, dense, HR representations of contact surfaces. In particular, we build a Gaussian triaxial tactile sensor degradation model and propose a tactile pattern reconstruction framework based on the Kalman filter. This framework reconstructs 2-D HR contact surface shapes from collected LR tactile sequences. In addition, we present an active exploration strategy that improves reconstruction efficiency. We evaluate the proposed method in real-world scenarios in comparison with existing prior-information-based approaches. Experimental results confirm the efficiency of the proposed approach and demonstrate satisfactory reconstructions of complex contact surface shapes. Code: https://github.com/wmtlab/tactileAR
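The core idea of the abstract (a Gaussian degradation model linking HR contact pixels to LR taxel readings, fused over a sequence of readings with a Kalman filter) can be illustrated with a minimal sketch. This is not the authors' implementation (see the linked repository for that). Everything below is an assumption made for illustration: a single normal-force channel instead of a triaxial one, a hypothetical normalized Gaussian kernel `gaussian_row` with width `sigma`, a square LR taxel grid with spacing `pitch` in HR pixels, fixed (not actively chosen) sensor poses, and noiseless simulated readings. Because the contact pattern is static, the Kalman predict step is the identity and is omitted; each taxel reading is fused with a scalar measurement update.

```python
import math


def gaussian_row(hr, cy, cx, sigma):
    """Hypothetical degradation model: one LR taxel reads a normalized
    Gaussian-weighted sum of the HR pixels around (cy, cx)."""
    w = [math.exp(-((i - cy) ** 2 + (j - cx) ** 2) / (2.0 * sigma ** 2))
         for i in range(hr) for j in range(hr)]
    total = sum(w)
    return [v / total for v in w]


def kalman_update(x, P, h, z, r):
    """Scalar-measurement Kalman update of a static state x with covariance P."""
    n = len(x)
    Ph = [sum(P[i][k] * h[k] for k in range(n)) for i in range(n)]  # P h^T
    s = sum(h[i] * Ph[i] for i in range(n)) + r                     # innovation variance
    K = [v / s for v in Ph]                                         # Kalman gain
    innov = z - sum(h[i] * x[i] for i in range(n))                  # measurement residual
    x_new = [x[i] + K[i] * innov for i in range(n)]
    # P - K h P, using h P == (P h^T)^T because P is symmetric.
    P_new = [[P[i][j] - K[i] * Ph[j] for j in range(n)] for i in range(n)]
    return x_new, P_new


def reconstruct(truth, hr, taxels, pitch, sigma, poses, p0=1.0, r=1e-3):
    """Fuse LR readings taken at several sensor poses into an HR estimate."""
    n = hr * hr
    x = [0.0] * n                                                   # prior mean: no contact
    P = [[p0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # isotropic prior
    for oy, ox in poses:                                            # sensor offset in HR pixels
        for ty in range(taxels):
            for tx in range(taxels):
                h = gaussian_row(hr, oy + ty * pitch, ox + tx * pitch, sigma)
                z = sum(a * b for a, b in zip(h, truth))            # simulated noiseless reading
                x, P = kalman_update(x, P, h, z, r)
    return x, P


# Usage: a 6x6 HR contact pattern (a horizontal bar on rows 2-3) sensed by a 2x2 taxel array.
HR = 6
truth = [1.0 if 2 <= i <= 3 else 0.0 for i in range(HR) for j in range(HR)]
poses = [(0, 0), (1, 1), (2, 2), (1, 0), (0, 1)]
est, cov = reconstruct(truth, HR, taxels=2, pitch=3, sigma=1.0, poses=poses)
```

Processing one taxel reading at a time keeps the innovation variance a scalar, so no matrix inversion is needed; each update can only shrink the posterior covariance, which is what makes the choice of the next sensor pose (the active exploration mentioned above) a meaningful optimization target.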

Adversarial Code Learning for Image Generation

Jan 30, 2020

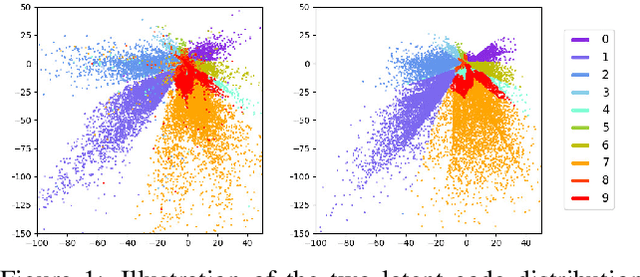

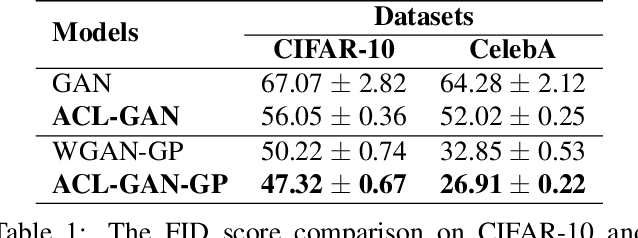

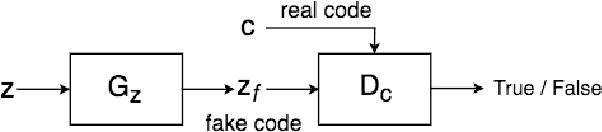

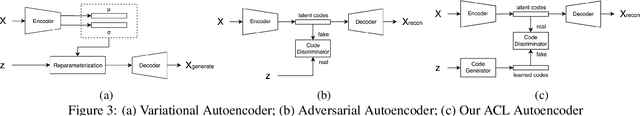

Abstract: We introduce the "adversarial code learning" (ACL) module, which improves overall image generation performance for several types of deep models. Instead of modeling the posterior distribution in the pixel space of generators, ACL jointly learns a latent code with an additional image encoder/inference net, taking prior noise as its input. The learning is conducted through an adversarial process that closely resembles the original GAN but shifts learning from image space to the prior and latent code spaces. ACL is a portable module that brings considerably more flexibility and possibilities to generative model design. First, it can convert non-generative models, such as autoencoders and standard classification models, into decent generative models. Second, it enhances the performance of existing GANs by generating meaningful codes and images from any part of the prior. We have incorporated the ACL module into the aforementioned frameworks and performed experiments on synthetic, MNIST, CIFAR-10, and CelebA datasets. Our models achieve significant improvements, demonstrating the generality of ACL for image generation tasks.

Fashion-AttGAN: Attribute-Aware Fashion Editing with Multi-Objective GAN

Apr 20, 2019

Abstract: In this paper, we introduce a novel task to the fashion domain: attribute-aware fashion editing. We redefine the overall objectives of AttGAN and propose the Fashion-AttGAN model for this new task. A dataset with 14,221 and 22 attributes is constructed for this task and has been made publicly available. Experimental results show the effectiveness of Fashion-AttGAN for fashion editing compared with the original AttGAN.
