Bilal Abbasi

Improved robustness to adversarial examples using Lipschitz regularization of the loss

Oct 23, 2018

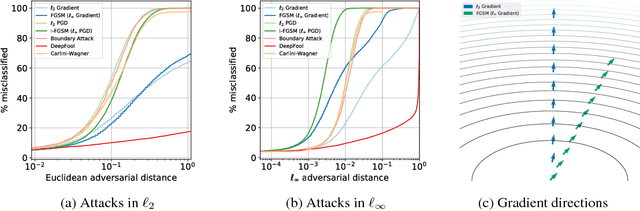

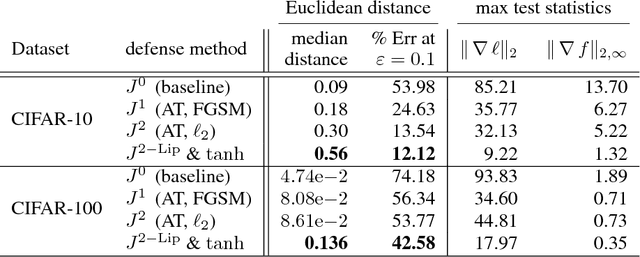

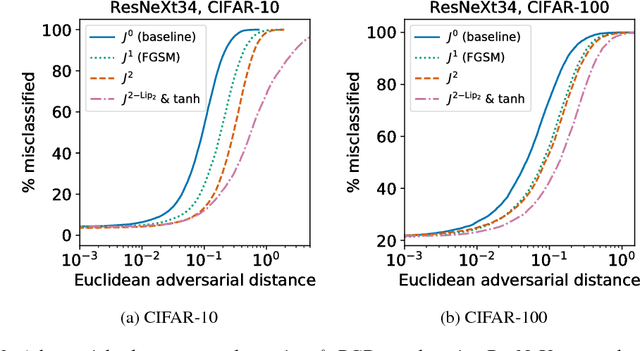

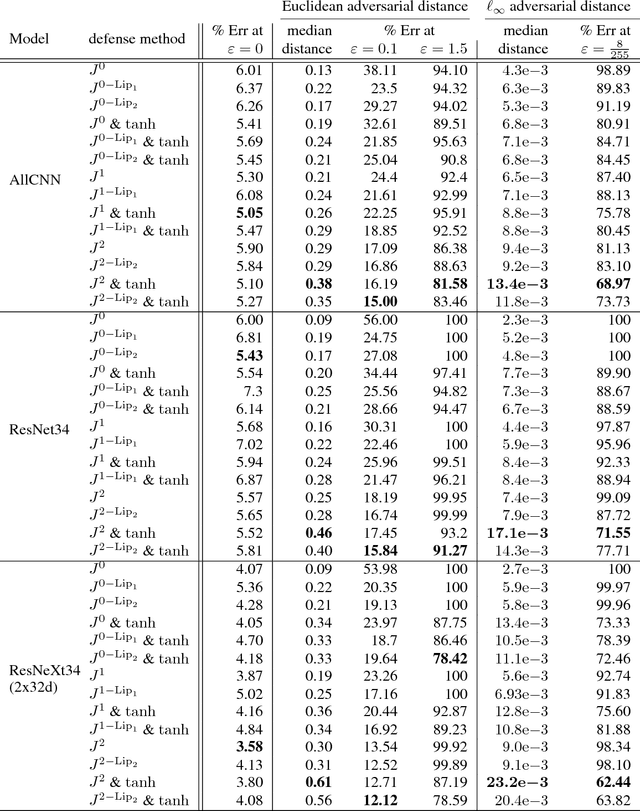

Abstract: Adversarial training is an effective method for improving robustness to adversarial attacks. We show that adversarial training using the Fast Signed Gradient Method can be interpreted as a form of regularization. We implemented a more effective form of adversarial training, which in turn can be interpreted as regularization of the loss in the 2-norm, $\|\nabla_x \ell(x)\|_2$. We obtained further improvements to adversarial robustness, as well as provable robustness guarantees, by augmenting adversarial training with Lipschitz regularization.
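To make the regularization reading concrete, here is a minimal sketch, not the paper's implementation. It assumes a toy linear classifier with logistic loss (the names `w`, `lam`, and every function below are hypothetical), for which the input gradient $\nabla_x \ell(x)$ has a closed form. To first order, the worst-case loss over perturbations of 2-norm at most $\varepsilon$ is $\ell(x) + \varepsilon \|\nabla_x \ell(x)\|_2$, so penalizing the gradient norm plays the role of training against such perturbations.

```python
import math

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def logistic_loss(w, x, y):
    """Loss l(x) = log(1 + exp(-y * <w, x>)) for a label y in {-1, +1}."""
    return math.log1p(math.exp(-y * dot(w, x)))

def input_gradient(w, x, y):
    """Closed-form gradient of the logistic loss with respect to the input x."""
    s = 1.0 / (1.0 + math.exp(y * dot(w, x)))  # sigmoid(-y * <w, x>)
    return [-y * s * wi for wi in w]

def norm2(g):
    return math.sqrt(dot(g, g))

def regularized_loss(w, x, y, lam):
    """Loss plus lam times the 2-norm of its input gradient (lam is an assumed weight)."""
    return logistic_loss(w, x, y) + lam * norm2(input_gradient(w, x, y))

def steepest_ascent_step(w, x, y, eps):
    """Move x by eps along the normalized input gradient (a 2-norm perturbation)."""
    g = input_gradient(w, x, y)
    n = norm2(g)
    return [xi + eps * gi / n for xi, gi in zip(x, g)]
```

For a small `eps`, `logistic_loss(w, steepest_ascent_step(w, x, y, eps), y)` should agree with `regularized_loss(w, x, y, eps)` up to a term of order `eps**2`, which is the first-order link between the perturbed loss and the gradient penalty.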

Anomaly detection and classification for streaming data using PDEs

Mar 15, 2017

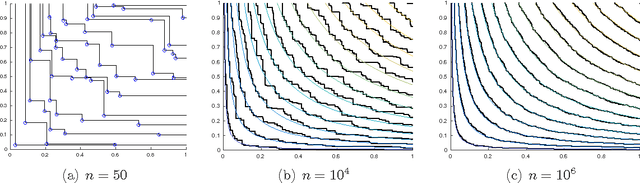

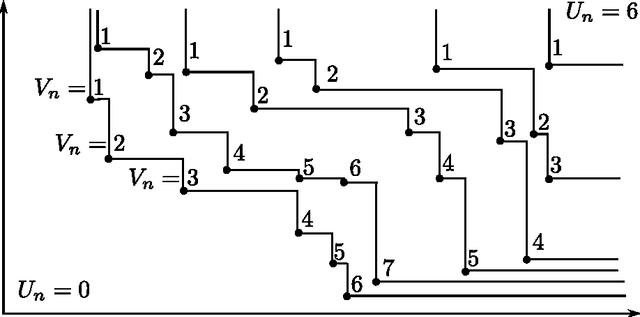

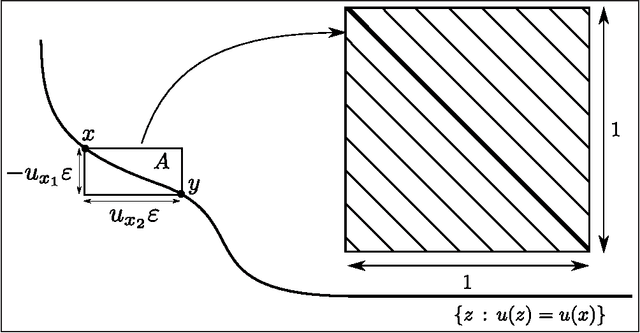

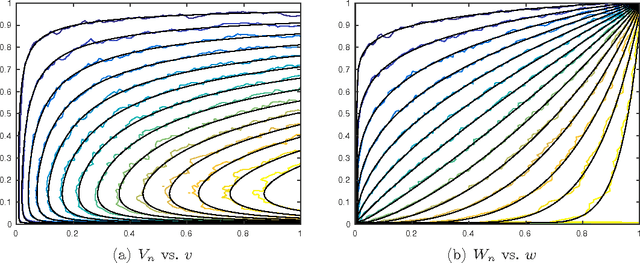

Abstract: Nondominated sorting, also called Pareto Depth Analysis (PDA), is widely used in multi-objective optimization and has recently found important applications in multi-criteria anomaly detection. Recently, a partial differential equation (PDE) continuum limit was discovered for nondominated sorting, leading to a very fast approximate sorting algorithm called PDE-based ranking. In this paper, we propose a fast real-time streaming version of the PDA algorithm for anomaly detection that exploits the computational advantages of PDE continuum limits. Furthermore, we derive new PDE continuum limits for sorting points within their nondominated layers and show how the new PDEs can be used to classify anomalies based on which criterion was more significantly violated. We also prove statistical convergence rates for PDE-based ranking, and present the results of numerical experiments with both synthetic and real data.
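For readers unfamiliar with nondominated sorting, the sketch below shows the plain layer-peeling procedure that the PDE-based ranking approximates. It is not the paper's algorithm: it is the straightforward quadratic-time version, and it assumes a minimization convention in which a point dominates another when it is no larger in every coordinate and strictly smaller in at least one. The function names are hypothetical.

```python
def dominates(p, q):
    """True if p is <= q in every coordinate and strictly < in at least one."""
    return (all(a <= b for a, b in zip(p, q))
            and any(a < b for a, b in zip(p, q)))

def nondominated_sort(points):
    """Peel off Pareto layers; returns a list of layers, each a list of point indices."""
    remaining = list(range(len(points)))
    layers = []
    while remaining:
        # The current layer holds every remaining point that no other remaining point dominates.
        front = [i for i in remaining
                 if not any(dominates(points[j], points[i])
                            for j in remaining if j != i)]
        layers.append(front)
        front_set = set(front)
        remaining = [i for i in remaining if i not in front_set]
    return layers

points = [(1, 1), (2, 2), (1, 3), (3, 1), (3, 3)]
layers = nondominated_sort(points)  # [[0], [1, 2, 3], [4]]
```

The position of a point's layer in the returned list is its Pareto depth, the quantity that PDA-style methods use as a ranking.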
