Improved robustness to adversarial examples using Lipschitz regularization of the loss


Oct 23, 2018

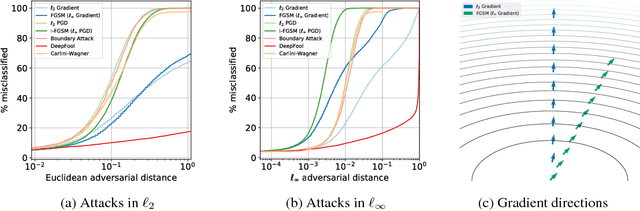

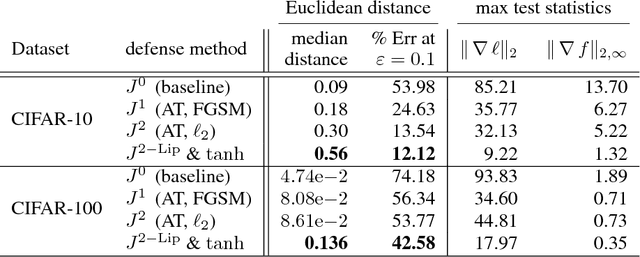

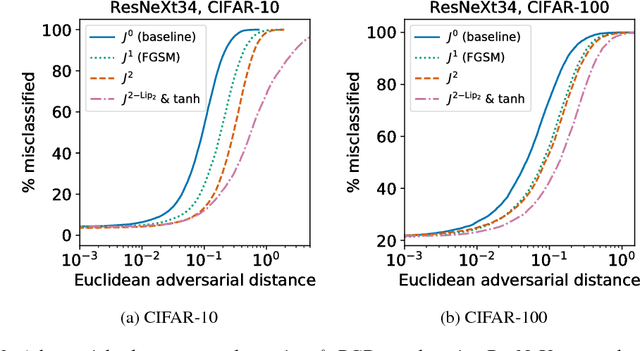

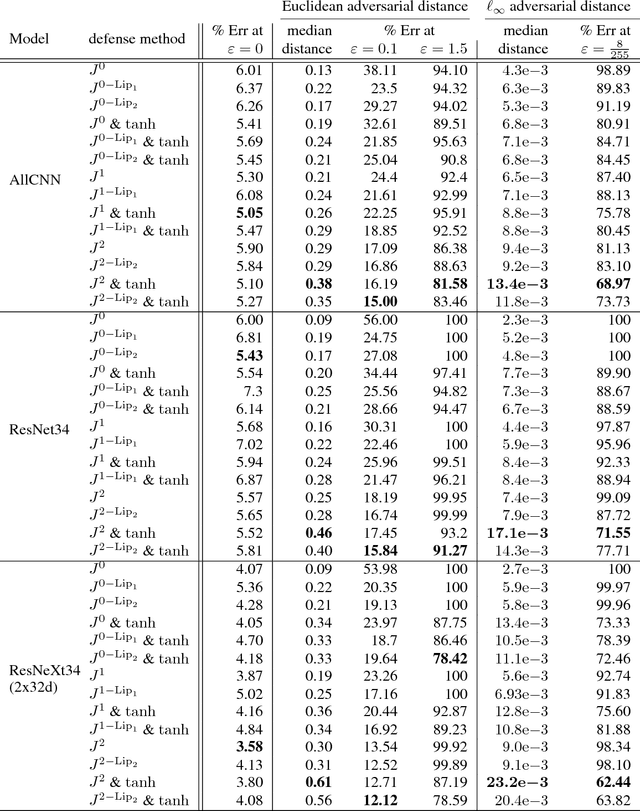

Adversarial training is an effective method for improving robustness to adversarial attacks. We show that adversarial training using the Fast Gradient Sign Method (FGSM) can be interpreted as a form of regularization. We implemented a more effective form of adversarial training, which in turn can be interpreted as regularization of the loss in the 2-norm, $\|\nabla_x \ell(x)\|_2$. We obtained further improvements to adversarial robustness, as well as provable robustness guarantees, by augmenting adversarial training with Lipschitz regularization.
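The regularization view in the abstract can be illustrated with a first-order expansion: for a small perturbation budget $\varepsilon$, the worst-case loss over a 2-norm ball is approximately $\ell(x) + \varepsilon \|\nabla_x \ell(x)\|_2$, and over an L-infinity ball (the signed-gradient case) approximately $\ell(x) + \varepsilon \|\nabla_x \ell(x)\|_1$. The following is a minimal sketch of that idea, assuming a linear logistic model in place of the paper's networks. All function names and the toy numbers are hypothetical and are not taken from the authors' code.

```python
import math

def loss_and_input_grad(w, b, x, y):
    """Logistic loss log(1 + exp(-y (w.x + b))) and its gradient w.r.t. the input x."""
    margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = math.log1p(math.exp(-margin))
    # d loss / d x = -y * sigmoid(-margin) * w
    coeff = -y / (1.0 + math.exp(margin))
    return loss, [coeff * wi for wi in w]

def fgsm_step(x, grad, eps):
    """Signed-gradient perturbation: first-order worst case in the L-infinity ball."""
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

def l2_step(x, grad, eps):
    """Normalized-gradient perturbation: first-order worst case in the 2-norm ball."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return list(x)
    return [xi + eps * gi / norm for xi, gi in zip(x, grad)]

def regularized_loss(w, b, x, y, eps, norm="l2"):
    """Clean loss plus eps times the dual norm of the input gradient."""
    loss, grad = loss_and_input_grad(w, b, x, y)
    if norm == "l2":
        penalty = math.sqrt(sum(g * g for g in grad))   # 2-norm is self-dual
    else:
        penalty = sum(abs(g) for g in grad)             # 1-norm is dual to L-infinity
    return loss + eps * penalty

if __name__ == "__main__":
    # Toy values chosen only for illustration.
    w, b, x, y, eps = [1.0, -2.0, 0.5], 0.1, [0.3, 0.2, -0.4], 1, 1e-3
    _, g = loss_and_input_grad(w, b, x, y)
    print("adversarial loss (2-norm step):", loss_and_input_grad(w, b, l2_step(x, g, eps), y)[0])
    print("gradient-regularized loss     :", regularized_loss(w, b, x, y, eps, "l2"))
```

For small `eps` the two printed values should agree up to second-order terms, which is the sense in which training on perturbed inputs behaves like penalizing the gradient norm of the loss. This sketch does not cover the Lipschitz regularization term or the robustness guarantees mentioned in the abstract.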

* Updated version with additional results measured in the L-infinity norm; typos corrected

View paper on OpenReview
