Bikram Koirala

A Two-step Linear Mixing Model for Unmixing under Hyperspectral Variability

Feb 24, 2025

Abstract:Spectral unmixing is an important task in the research field of hyperspectral image processing. It can be thought of as a regression problem, where the observed variable (i.e., an image pixel) is to be found as a function of the response variables (i.e., the pure materials in a scene, called endmembers). The Linear Mixing Model (LMM) has received a great deal of attention, due to its simplicity and ease of use in, e.g., optimization problems. Its biggest flaw is that it assumes that any pure material can be characterized by one unique spectrum throughout the entire scene. In many cases this is incorrect: the endmembers face a significant amount of spectral variability caused by, e.g., illumination conditions, atmospheric effects, or intrinsic variability. Researchers have suggested several generalizations of the LMM to mitigate this effect. However, most models lead to ill-posed and highly non-convex optimization problems, which are hard to solve and have hyperparameters that are difficult to tune. In this paper, we propose a two-step LMM that bridges the gap between model complexity and computational tractability. We show that this model leads to only a mildly non-convex optimization problem, which we solve with an interior-point solver. This method requires virtually no hyperparameter tuning, and can therefore be used easily and quickly in a wide range of unmixing tasks. We show that the model is competitive with, and in some cases superior to, existing and well-established unmixing methods and algorithms. We do this through several experiments on synthetic data, real-life satellite data, and hybrid synthetic-real data.
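For readers unfamiliar with the baseline that the abstract criticizes, the following is a minimal sketch of the classical LMM, in which a pixel is modeled as a non-negative, sum-to-one combination of fixed endmember spectra. It is not the two-step model proposed in the paper, and it does not use an interior-point solver. The function names `project_to_simplex` and `unmix_lmm`, the projected-gradient solver, and the random endmember matrix are assumptions made purely for illustration.

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of v onto {a : a >= 0, sum(a) = 1}."""
    n = v.size
    u = np.sort(v)[::-1]                      # sort descending
    css = np.cumsum(u) - 1.0
    idx = np.arange(1, n + 1)
    rho = np.nonzero(u - css / idx > 0)[0][-1]
    theta = css[rho] / (rho + 1)
    return np.maximum(v - theta, 0.0)

def unmix_lmm(pixel, endmembers, n_iter=2000):
    """Classical LMM: minimize ||pixel - E a||^2 over the simplex.

    endmembers has shape (bands, p); each column is one endmember spectrum.
    Solved here with plain projected gradient descent (illustrative choice).
    """
    E = endmembers
    p = E.shape[1]
    a = np.full(p, 1.0 / p)                   # start from uniform abundances
    step = 1.0 / np.linalg.norm(E, 2) ** 2    # 1 / largest eigenvalue of E^T E
    for _ in range(n_iter):
        grad = E.T @ (E @ a - pixel)
        a = project_to_simplex(a - step * grad)
    return a

# Hypothetical toy data: 3 random endmembers over 50 bands, noise-free pixel.
rng = np.random.default_rng(0)
E = rng.uniform(0.0, 1.0, size=(50, 3))
true_a = np.array([0.6, 0.3, 0.1])
pixel = E @ true_a
est_a = unmix_lmm(pixel, E)
```

Because each endmember is a single fixed column of `E`, this formulation cannot represent the spectral variability discussed in the abstract, which is the limitation the generalized models aim to address.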

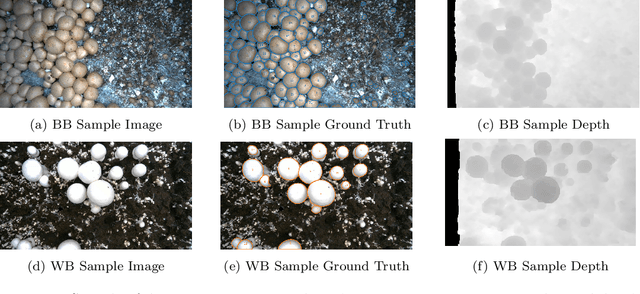

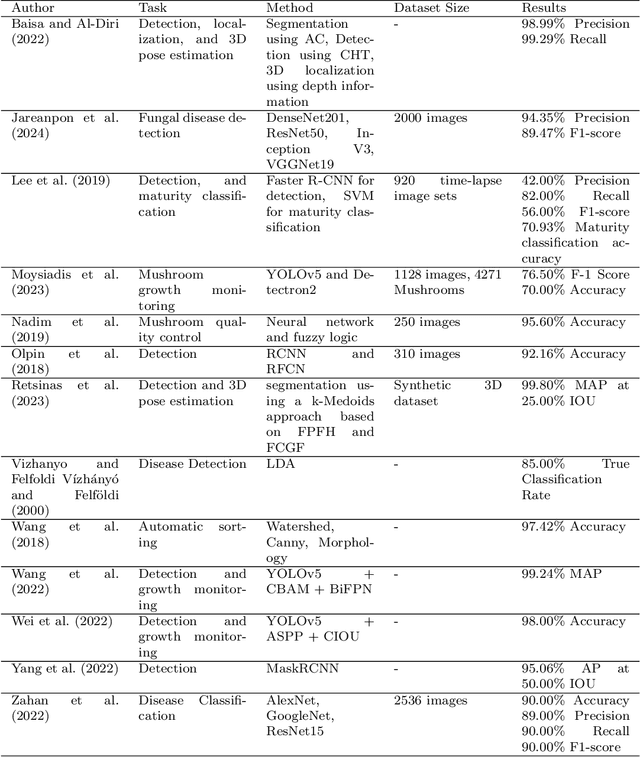

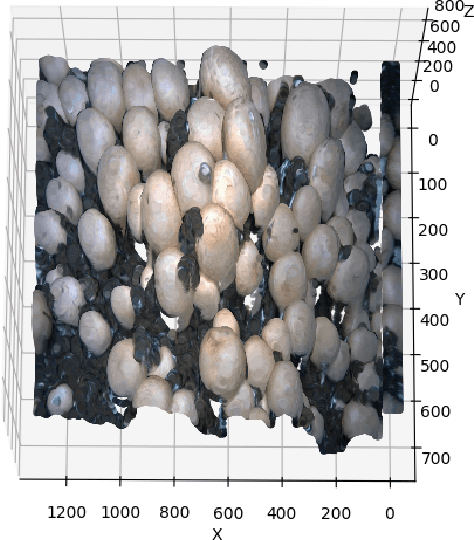

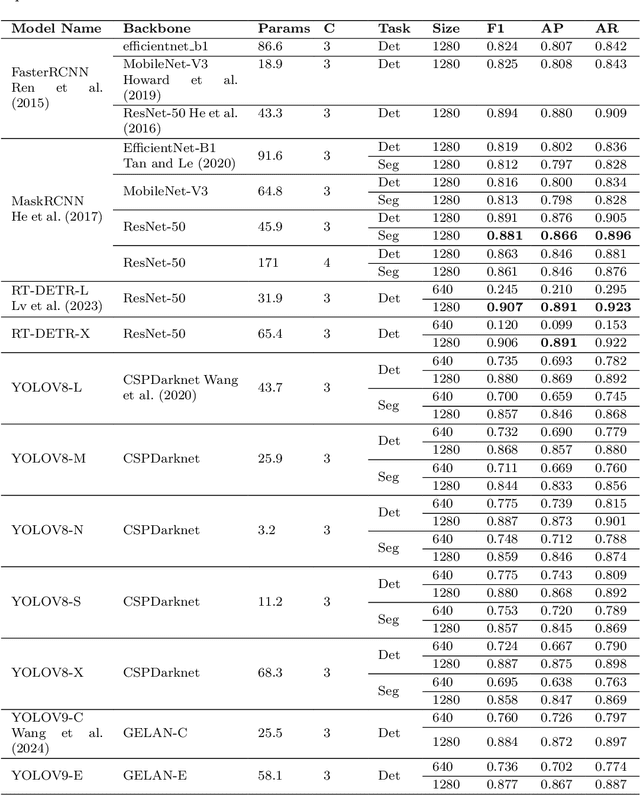

M18K: A Comprehensive RGB-D Dataset and Benchmark for Mushroom Detection and Instance Segmentation

Jul 15, 2024

Abstract:Automating agricultural processes holds significant promise for enhancing efficiency and sustainability in various farming practices. This paper contributes to the automation of agricultural processes by providing a dedicated mushroom detection dataset related to automated harvesting, growth monitoring, and quality control of the button mushroom produced using the Agaricus bisporus fungus. With over 18,000 mushroom instances in 423 RGB-D image pairs taken with an Intel RealSense D405 camera, it fills the gap in mushroom-specific datasets and serves as a benchmark for detection and instance segmentation algorithms in smart mushroom agriculture. The dataset, featuring realistic growth environment scenarios with comprehensive annotations, is assessed using advanced detection and instance segmentation algorithms. The paper details the dataset's characteristics and evaluates algorithmic performance. For broader applicability, we have made all resources publicly available, including images, code, and trained models, via our GitHub repository: https://github.com/abdollahzakeri/m18k

A Multisensor Hyperspectral Benchmark Dataset For Unmixing of Intimate Mixtures

Aug 30, 2023

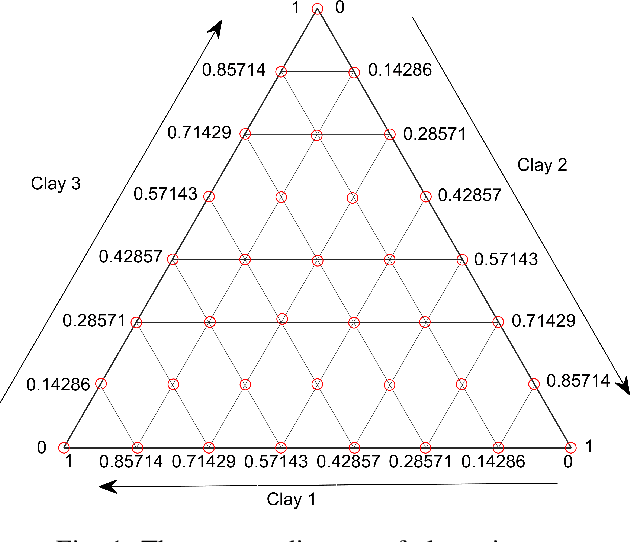

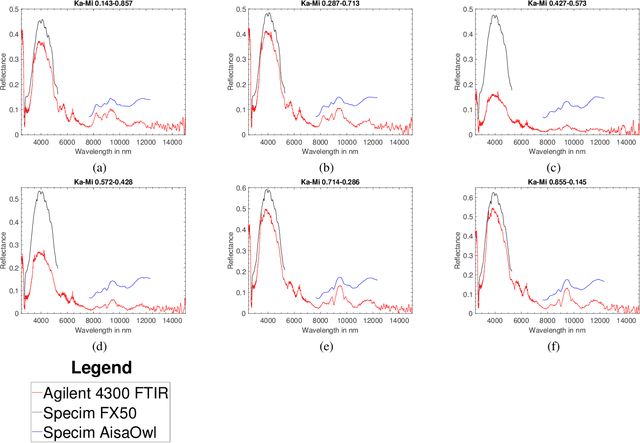

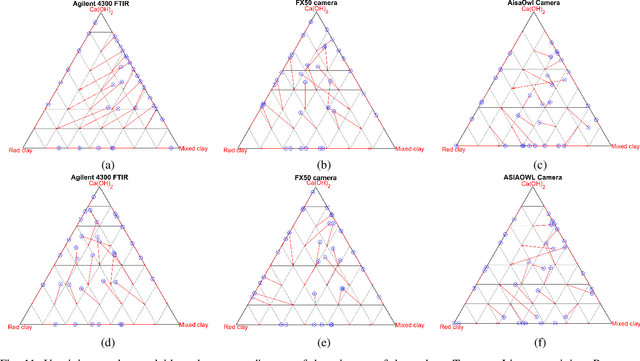

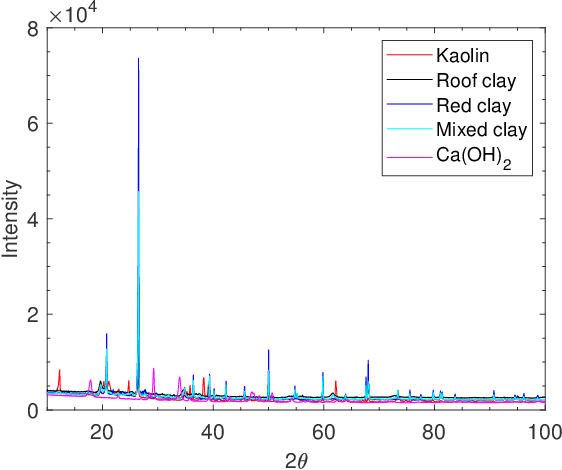

Abstract:Optical hyperspectral cameras capture the spectral reflectance of materials. Since many materials behave as heterogeneous intimate mixtures with which each photon interacts differently, the relationship between spectral reflectance and material composition is very complex. Quantitative validation of spectral unmixing algorithms requires high-quality ground truth fractional abundance data, which are very difficult to obtain. In this work, we generated a comprehensive laboratory ground truth dataset of intimately mixed mineral powders. For this, five clay powders (Kaolin, Roof clay, Red clay, mixed clay, and Calcium hydroxide) were mixed homogeneously to prepare 325 samples of 60 binary, 150 ternary, 100 quaternary, and 15 quinary mixtures. Thirteen different hyperspectral sensors have been used to acquire the reflectance spectra of these mixtures in the visible, near, short, mid, and long-wavelength infrared regions (350-15385 nm). Overlaps in wavelength regions due to the operational ranges of each sensor and variations in acquisition conditions resulted in a large amount of spectral variability. Ground truth composition is given by construction, but to verify that the generated samples are sufficiently homogeneous, XRD and XRF elemental analysis is performed. We believe these data will be beneficial for validating advanced methods for nonlinear unmixing and material composition estimation, including studying spectral variability and training supervised unmixing approaches. The datasets can be downloaded from the following link: https://github.com/VisionlabUA/Multisensor_datasets.

Deep Hyperspectral Unmixing using Transformer Network

Mar 31, 2022

Abstract:Currently, this paper is under review in IEEE. Transformers have intrigued the vision research community with their state-of-the-art performance in natural language processing. With their superior performance, transformers have found their way into the field of hyperspectral image classification and achieved promising results. In this article, we harness the power of transformers to conquer the task of hyperspectral unmixing and propose a novel deep unmixing model with transformers. We aim to utilize the ability of transformers to better capture the global feature dependencies in order to enhance the quality of the endmember spectra and the abundance maps. The proposed model is a combination of a convolutional autoencoder and a transformer. The hyperspectral data are encoded by the convolutional encoder. The transformer captures long-range dependencies between the representations derived from the encoder. The data are reconstructed using a convolutional decoder. We applied the proposed unmixing model to three widely used unmixing datasets, i.e., Samson, Apex, and Washington DC Mall, and compared it with the state of the art in terms of root mean squared error and spectral angle distance. The source code for the proposed model will be made publicly available at https://github.com/preetam22n/DeepTrans-HSU.
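The two evaluation metrics named in the abstract can be sketched as follows. This is a minimal illustration under common definitions: root mean squared error between true and estimated abundances, and spectral angle distance between a true and an estimated endmember spectrum. The exact averaging conventions used in the paper are not stated in the abstract, so the function names and the per-vector formulation here are assumptions.

```python
import numpy as np

def rmse(a_true, a_est):
    """Root mean squared error between two abundance arrays of equal shape."""
    a_true = np.asarray(a_true, dtype=float)
    a_est = np.asarray(a_est, dtype=float)
    return float(np.sqrt(np.mean((a_true - a_est) ** 2)))

def spectral_angle_distance(e_true, e_est):
    """Angle in radians between two spectra; invariant to positive scaling."""
    e_true = np.asarray(e_true, dtype=float)
    e_est = np.asarray(e_est, dtype=float)
    cos = np.dot(e_true, e_est) / (np.linalg.norm(e_true) * np.linalg.norm(e_est))
    # Clip guards against rounding pushing the cosine slightly outside [-1, 1].
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

# Hypothetical toy values, not taken from any dataset in the paper.
abundance_error = rmse([0.5, 0.5], [0.6, 0.4])
angle_scaled = spectral_angle_distance([0.2, 0.4, 0.6], [0.4, 0.8, 1.2])
angle_orthogonal = spectral_angle_distance([1.0, 0.0], [0.0, 1.0])
```

Because the spectral angle ignores overall scale, it compares the shape of two spectra rather than their brightness, which is why it is typically reported for endmembers while RMSE is reported for abundance maps.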
