Bicheng Yan

SURGIN: SURrogate-guided Generative INversion for subsurface multiphase flow with quantified uncertainty

Sep 16, 2025

Abstract: We present a direct inverse modeling method named SURGIN, a SURrogate-guided Generative INversion framework tailored for subsurface multiphase flow data assimilation. Unlike existing inversion methods that require adaptation for each new observational configuration, SURGIN features a zero-shot conditional generation capability, enabling real-time assimilation of unseen monitoring data without task-specific retraining. Specifically, SURGIN synergistically integrates a U-Net enhanced Fourier Neural Operator (U-FNO) surrogate with a score-based generative model (SGM), framing conditional generation as a surrogate prediction-guidance process from a Bayesian perspective. Instead of directly learning the conditional generation of geological parameters, an unconditional SGM is first pretrained in a self-supervised manner to capture the geological prior; posterior sampling is then performed by leveraging a differentiable U-FNO surrogate for efficient forward evaluations conditioned on unseen observations. Extensive numerical experiments demonstrate SURGIN's capability to infer heterogeneous geological fields with reasonable accuracy and to predict spatiotemporal flow dynamics with quantified uncertainty across diverse measurement settings. By unifying generative learning with surrogate-guided Bayesian inference, SURGIN establishes a new paradigm for inverse modeling and uncertainty quantification in parametric functional spaces.
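The core idea of surrogate guidance can be illustrated with a toy posterior sampler. In the sketch below, which is an assumption-laden illustration rather than SURGIN itself, an analytic Gaussian prior score stands in for the pretrained SGM, a linear map `A` stands in for the differentiable U-FNO surrogate, and plain unadjusted Langevin dynamics replaces the SGM's reverse-time sampler. The posterior score is the prior score plus the gradient of a Gaussian log-likelihood taken through the surrogate, so a new observation `y` is assimilated without retraining the prior. All function names are hypothetical.

```python
import numpy as np


def prior_score(x):
    # Score of a standard Gaussian prior: grad log p(x) = -x.
    # Hypothetical stand-in for a pretrained score network.
    return -x


def surrogate(x, A):
    # Hypothetical differentiable surrogate: a linear forward map x -> A x,
    # standing in for the U-FNO. Rows of x are independent chains.
    return x @ A.T


def likelihood_grad(x, y, A, sigma):
    # grad_x log p(y | x) for Gaussian observation noise of std sigma:
    # A^T (y - A x) / sigma^2, evaluated for every chain at once.
    residual = y - surrogate(x, A)        # (n_chains, n_obs)
    return residual @ A / sigma**2        # (n_chains, dim)


def guided_langevin(y, A, sigma, n_chains=4000, n_steps=2000, eps=0.01, seed=0):
    """Unadjusted Langevin sampling of p(x | y) using prior score + surrogate guidance."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_chains, A.shape[1]))   # initialise chains from the prior
    for _ in range(n_steps):
        score = prior_score(x) + likelihood_grad(x, y, A, sigma)  # posterior score
        x = x + eps * score + np.sqrt(2.0 * eps) * rng.standard_normal(x.shape)
    return x


A = np.array([[1.0, 0.5]])   # one observation of a 2-parameter field
y = np.array([1.2])          # observed value
sigma = 0.5                  # observation noise std

samples = guided_langevin(y, A, sigma)
# Closed-form Gaussian posterior mean for comparison: (I + A^T A / s^2)^-1 A^T y / s^2
exact_mean = np.linalg.solve(np.eye(2) + A.T @ A / sigma**2, A.T @ y / sigma**2)
print(samples.mean(axis=0), exact_mean)
```

Because this linear-Gaussian toy has a closed-form posterior, the sample mean should land close to `exact_mean`, and the spread of `samples` is the quantified uncertainty. With a neural surrogate, the likelihood gradient would come from automatic differentiation rather than the closed form used here.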

Zero-Shot Digital Rock Image Segmentation with a Fine-Tuned Segment Anything Model

Nov 17, 2023

Abstract: Accurate image segmentation is crucial in reservoir modelling and material characterization, enhancing oil and gas extraction efficiency through detailed reservoir models. This precision offers insights into rock properties and advances the understanding of digital rock physics. However, creating pixel-level annotations for complex CT and SEM rock images is challenging because of their size and low contrast, which lengthens analysis time. This has spurred interest in advanced semi-supervised and unsupervised segmentation techniques for digital rock image analysis, promising more efficient, accurate, and less labour-intensive methods. Meta AI's Segment Anything Model (SAM) revolutionized image segmentation in 2023, offering interactive and automated segmentation with zero-shot capabilities, which are essential for digital rock physics given limited training data and complex image features. Despite its advanced features, SAM struggles with rock CT/SEM images because such images are absent from its training set and are low-contrast grayscale images. Our research fine-tunes SAM for rock CT/SEM image segmentation, optimizing parameters and handling large-scale images to improve accuracy. Experiments on rock CT and SEM images show that fine-tuning significantly enhances SAM's performance, enabling high-quality mask generation in digital rock image analysis. Our results demonstrate the feasibility and effectiveness of the fine-tuned SAM model (RockSAM) for rock images, offering segmentation without extensive training or complex labelling.
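One common way to handle large-scale images with a model that expects a fixed input size (SAM's image encoder takes 1024 × 1024 inputs) is to split the image into overlapping tiles, segment each tile, and stitch the tile masks back together. The abstract does not say how RockSAM handles large images, so the sketch below is only an assumed approach: `tile_starts`, `tile_image`, and `stitch_masks` are hypothetical helpers, and a simple threshold stands in for SAM's mask prediction.

```python
import numpy as np


def tile_starts(length, tile, overlap):
    # Start offsets so that tiles of size `tile` overlap by `overlap` pixels
    # and the last tile ends exactly at the image border.
    step = tile - overlap
    starts = list(range(0, length - tile + 1, step))
    if starts[-1] + tile < length:
        starts.append(length - tile)
    return starts


def tile_image(img, tile=1024, overlap=128):
    """Split a 2D grayscale image into overlapping tile x tile patches."""
    h, w = img.shape
    # Zero-pad images smaller than one tile so every patch has the full size.
    padded = np.pad(img, ((0, max(tile - h, 0)), (0, max(tile - w, 0))))
    H, W = padded.shape
    tiles = []
    for y in tile_starts(H, tile, overlap):
        for x in tile_starts(W, tile, overlap):
            tiles.append(((y, x), padded[y:y + tile, x:x + tile]))
    return tiles, (h, w)


def stitch_masks(tile_masks, orig_shape, tile=1024):
    """Merge per-tile binary masks; overlapping pixels are combined with logical OR."""
    h, w = orig_shape
    full = np.zeros((max(h, tile), max(w, tile)), dtype=bool)
    for (y, x), mask in tile_masks:
        full[y:y + tile, x:x + tile] |= mask.astype(bool)
    return full[:h, :w]


rng = np.random.default_rng(0)
img = rng.random((1500, 1100))                          # synthetic "large" image
tiles, shape = tile_image(img, tile=512, overlap=64)
masks = [(pos, patch > 0.5) for pos, patch in tiles]    # stand-in for SAM predictions
stitched = stitch_masks(masks, shape, tile=512)
```

OR-merging is the simplest rule for overlaps; averaging soft mask scores across overlapping tiles before thresholding is a common alternative that reduces seams at tile borders.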

Enhancing Rock Image Segmentation in Digital Rock Physics: A Fusion of Generative AI and State-of-the-Art Neural Networks

Nov 10, 2023

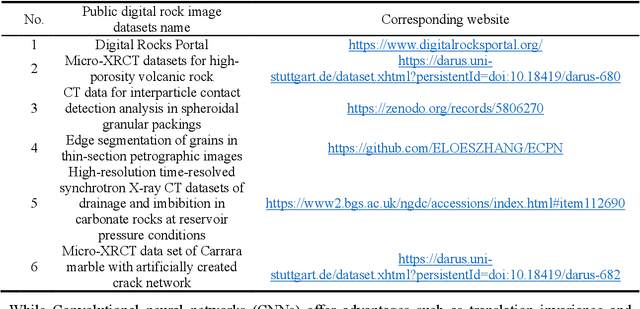

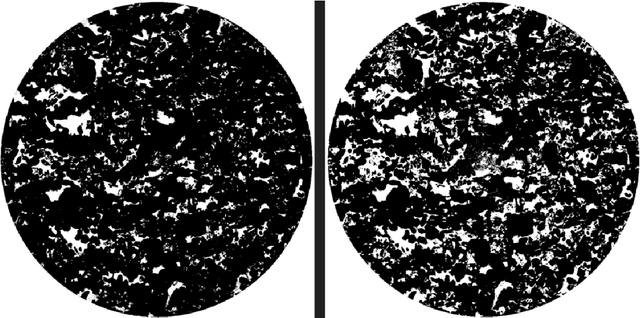

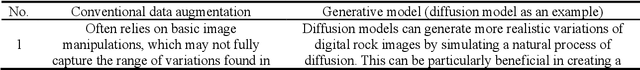

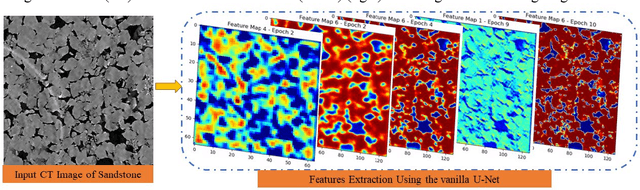

Abstract: In digital rock physics, analysing microstructures from CT and SEM scans is crucial for estimating properties such as porosity and pore connectivity. Traditional segmentation methods such as thresholding and CNNs often fall short in accurately resolving rock microstructures and are prone to noise. U-Net improved segmentation accuracy but required many expert-annotated samples, whose preparation is laborious and error-prone because of complex pore shapes. Our study employed an advanced generative AI model, the diffusion model, to overcome these limitations. This model generated a large dataset of paired CT/SEM images and binary segmentations from a small initial dataset. We assessed the efficacy of three neural networks, U-Net, Attention U-Net, and TransUNet, for segmenting these augmented images. The diffusion model proved to be an effective data augmentation technique, improving the generalization and robustness of deep learning models. TransUNet, which incorporates Transformer structures, demonstrated superior segmentation accuracy and IoU, outperforming both U-Net and Attention U-Net. Our research advances rock image segmentation by combining the diffusion model with state-of-the-art neural networks, reducing dependency on extensive expert data and boosting segmentation accuracy and robustness. TransUNet sets a new standard in digital rock physics, paving the way for future geoscience and engineering breakthroughs.
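IoU, one of the metrics used to compare the three networks, is the overlap between a predicted mask and the reference mask divided by their union. A minimal NumPy sketch for a single binary pore/grain mask follows; the empty-mask convention and the function name `binary_iou` are assumptions, and the abstract does not state how the study averages IoU over classes or images.

```python
import numpy as np


def binary_iou(pred, target):
    """Intersection over Union for binary masks (e.g., pore = 1, grain = 0)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect agreement (a convention)
    return float(np.logical_and(pred, target).sum() / union)


pred = np.array([[1, 1, 0, 0],
                 [0, 1, 1, 0]])
target = np.array([[1, 0, 0, 0],
                   [0, 1, 1, 1]])
print(binary_iou(pred, target))  # 3 overlapping pixels / 5 pixels in the union -> 0.6
```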

A Robust Deep Learning Workflow to Predict Multiphase Flow Behavior during Geological CO2 Sequestration Injection and Post-Injection Periods

Jul 15, 2021

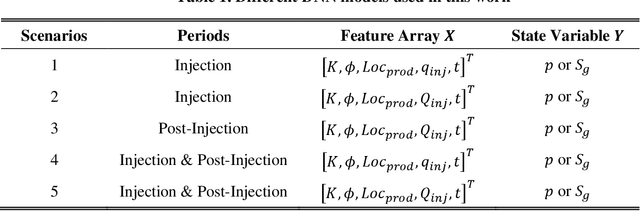

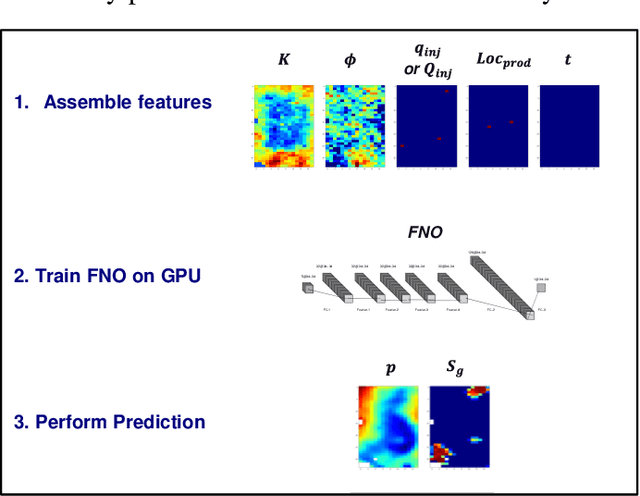

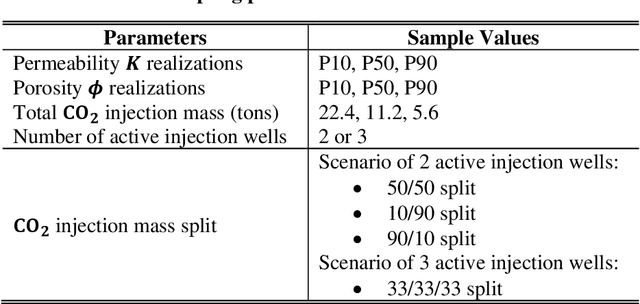

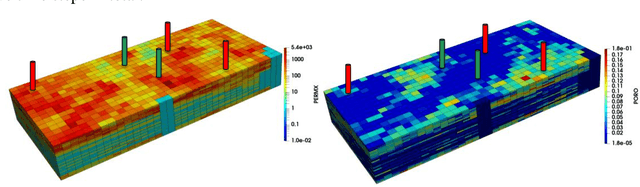

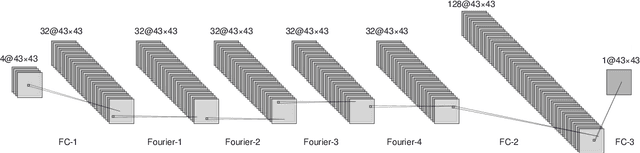

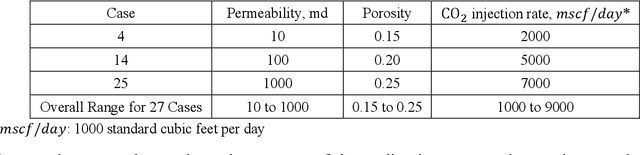

Abstract: This paper contributes to the development and evaluation of a deep learning workflow that accurately and efficiently predicts the temporal-spatial evolution of pressure and CO2 plumes during the injection and post-injection periods of geologic CO2 sequestration (GCS) operations. Based on a Fourier Neural Operator, the deep learning workflow takes input variables or features including rock properties, well operational controls, and time steps, and predicts the state variables of pressure and CO2 saturation. To further improve predictive fidelity, separate deep learning models are trained for the CO2 injection and post-injection periods because the primary driving force of fluid flow and transport differs between these two phases. We also explore different combinations of features to predict the state variables. We use a realistic example of CO2 injection and storage in a 3D heterogeneous saline aquifer, and apply the deep learning workflow, trained on physics-based simulation data, to emulate the physical process. Through this numerical experiment, we demonstrate that using two separate deep learning models to distinguish the post-injection from the injection period generates the most accurate prediction of pressure, whereas a single deep learning model of the whole GCS process that includes the cumulative CO2 injection volume as a feature leads to the most accurate prediction of CO2 saturation. For the post-injection period, it is key to use the cumulative CO2 injection volume to inform the deep learning models about the total carbon storage when predicting either pressure or saturation. The deep learning workflow not only provides high predictive fidelity across temporal and spatial scales but also offers a 250-fold speedup compared to full-physics reservoir simulation, and thus will be a significant predictive tool for engineers managing the long-term process of GCS.
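A Fourier Neural Operator learns its kernel in frequency space: each layer applies an FFT to its input, keeps only the lowest Fourier modes, mixes channels with learned complex weights, transforms back, and adds a pointwise linear term before the activation. The 1D NumPy sketch below shows one forward pass with untrained weights. The workflow in the paper operates on 3D fields with time, and its architecture details (mode count, width, activation) are not given in the abstract, so the ReLU, the sizes, and the function names here are assumptions.

```python
import numpy as np


def spectral_conv1d(u, weights, n_modes):
    """Fourier layer kernel: FFT -> keep the lowest n_modes -> complex mixing -> inverse FFT.

    u: (c_in, n) real signal; weights: (c_in, c_out, n_modes) complex.
    """
    u_hat = np.fft.rfft(u, axis=-1)                                  # (c_in, n//2 + 1)
    out_hat = np.zeros((weights.shape[1], u_hat.shape[-1]), dtype=complex)
    # Mix channels mode by mode; all higher modes are truncated (left at zero).
    out_hat[:, :n_modes] = np.einsum("im,iom->om", u_hat[:, :n_modes], weights)
    return np.fft.irfft(out_hat, n=u.shape[-1], axis=-1)


def fourier_layer(u, weights, W, n_modes):
    """One FNO layer: ReLU(W u + K u), with K the truncated spectral convolution."""
    return np.maximum(0.0, W @ u + spectral_conv1d(u, weights, n_modes))


n = 64
x = np.arange(n) / n
u = (np.sin(2 * np.pi * 3 * x) + np.sin(2 * np.pi * 20 * x))[None, :]  # one channel

# With unit weights, keeping 10 modes acts as a low-pass filter: mode 20 is dropped.
low_pass = spectral_conv1d(u, np.ones((1, 1, 10), dtype=complex), n_modes=10)

# A layer lifting 1 input channel to 4 output channels with random weights.
rng = np.random.default_rng(0)
w = rng.standard_normal((1, 4, 10)) + 1j * rng.standard_normal((1, 4, 10))
W = rng.standard_normal((4, 1))
v = fourier_layer(u, w, W, n_modes=10)
```

Truncating to a fixed number of modes is what makes the learned kernel independent of grid resolution, which is one reason FNO-type surrogates are attractive for gridded reservoir fields.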

Improving Deep Learning Performance for Predicting Large-Scale Porous-Media Flow through Feature Coarsening

May 08, 2021

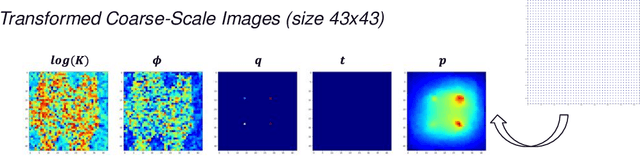

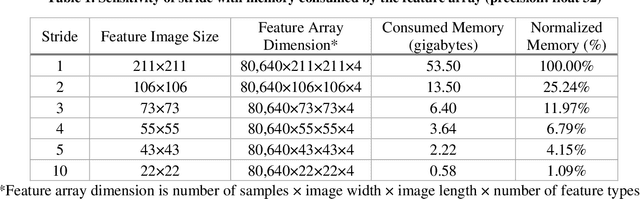

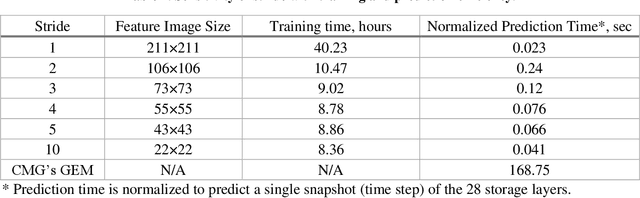

Abstract: Physics-based simulation of fluid flow in porous media is a computational technology for predicting the temporal-spatial evolution of state variables (e.g., pressure) in porous media, and it usually requires high computational expense due to its nonlinearity and the scale of the study domain. This letter describes a deep learning (DL) workflow to predict the pressure evolution as fluid flows in large-scale 3D heterogeneous porous media. In particular, we apply a feature coarsening technique to extract the most representative information, perform DL training and prediction at the coarse scale, and then recover the fine-scale resolution by 2D piecewise cubic interpolation. We validate the DL approach, trained on physics-based simulation data, by predicting the pressure field in a field-scale 3D geologic CO2 storage reservoir. We evaluate the impact of feature coarsening on DL performance and observe that feature coarsening not only decreases training time by >74% and reduces memory consumption by >75%, but also keeps the temporal error below 1.5%. In addition, the DL workflow achieves a ~1400 times speedup compared to physics-based simulation.
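The coarsen-then-refine idea can be sketched in a few lines. Below, block averaging stands in for the feature coarsening step and a bicubic spline (SciPy's `RectBivariateSpline` with `kx=ky=3`) performs the 2D piecewise cubic interpolation back to the fine grid; the DL model that would operate on the coarse field is omitted. The coarsening factor, the block-averaging rule, the constant edge extrapolation, and the function names are assumptions, not the letter's exact procedure.

```python
import numpy as np
from scipy.interpolate import RectBivariateSpline


def coarsen(field, factor):
    """Block-average a 2D field by an integer factor (shape must be divisible)."""
    nx, ny = field.shape
    return field.reshape(nx // factor, factor, ny // factor, factor).mean(axis=(1, 3))


def refine(coarse, factor):
    """Bicubic spline through coarse cell centres, evaluated at every fine cell."""
    cx, cy = coarse.shape
    # Coarse cell centres expressed in fine-grid index coordinates.
    xc = (np.arange(cx) + 0.5) * factor - 0.5
    yc = (np.arange(cy) + 0.5) * factor - 0.5
    spline = RectBivariateSpline(xc, yc, coarse, kx=3, ky=3)
    # Fine cells beyond the outermost coarse centres take the boundary value
    # (constant extrapolation via clipping).
    xf = np.clip(np.arange(cx * factor), xc[0], xc[-1])
    yf = np.clip(np.arange(cy * factor), yc[0], yc[-1])
    X, Y = np.meshgrid(xf, yf, indexing="ij")
    return spline.ev(X.ravel(), Y.ravel()).reshape(X.shape)


i, j = np.meshgrid(np.arange(64), np.arange(64), indexing="ij")
pressure = np.sin(i / 20.0) + np.cos(j / 25.0)   # smooth synthetic field
coarse = coarsen(pressure, 4)                    # 64x64 -> 16x16 (16x fewer values)
recovered = refine(coarse, 4)                    # back to 64x64
print(coarse.shape, np.abs(recovered - pressure).mean())
```

The memory saving comes from the model only ever seeing `coarse`; the interpolation error stays small when the state variable varies smoothly relative to the coarse cell size, and grows near sharp features.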

A Physics-Constrained Deep Learning Model for Simulating Multiphase Flow in 3D Heterogeneous Porous Media

Apr 30, 2021

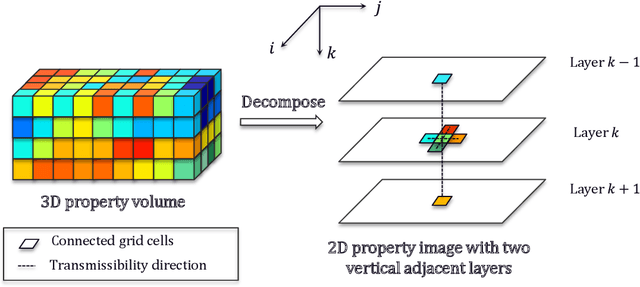

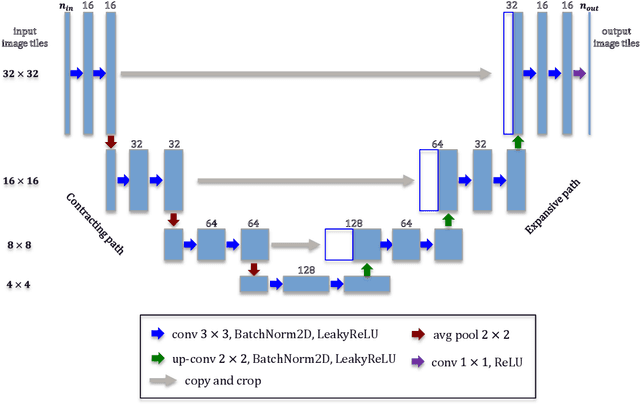

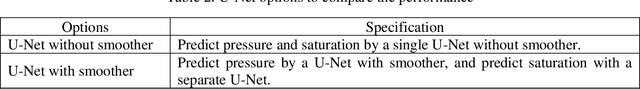

Abstract: In this work, an efficient physics-constrained deep learning model is developed for solving multiphase flow in 3D heterogeneous porous media. The model fully leverages the spatial-topology predictive capability of convolutional neural networks and is coupled with an efficient continuity-based smoother to predict flow responses that require spatial continuity. Furthermore, the transient regions are penalized to steer the training process so that the model accurately captures flow in these regions. The model takes inputs including properties of porous media, fluid properties, and well controls, and predicts the temporal-spatial evolution of the state variables (pressure and saturation). While maintaining the continuity of fluid flow, the 3D spatial domain is decomposed into 2D images to reduce training cost; the decomposition also increases the number of training samples and improves training efficiency. Additionally, a surrogate model is separately constructed as a postprocessor to calculate the well flow rate from the state variables predicted by the deep learning model. We use the example of CO2 injection into saline aquifers, and apply the physics-constrained deep learning model, trained on physics-based simulation data, to emulate the physical process. The model performs prediction with a ~1400 times speedup compared to physics-based simulations, and the average temporal errors of the predicted pressure and saturation plumes are 0.27% and 0.099%, respectively. Furthermore, the water production rate is efficiently predicted by the well-flow-rate surrogate model, with a mean error of less than 5%. Therefore, with its unique scheme for preserving fidelity in porous-media flow, the physics-constrained deep learning model can become an efficient predictive model for computationally demanding inverse problems or other coupled processes.
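Penalizing transient regions amounts to weighting the training loss more heavily where the flow changes sharply, such as at a saturation front. The sketch below is a hypothetical version of that idea: it marks front cells with a gradient-magnitude threshold and multiplies their squared error by a penalty factor. The detection rule, the `threshold` and `penalty` values, and the function names are assumptions; the abstract does not specify how the paper identifies or weights transient regions.

```python
import numpy as np


def transient_mask(saturation, threshold=0.05):
    """Flag cells near a moving front, where the spatial gradient of saturation is large."""
    gx, gy = np.gradient(saturation)
    return np.hypot(gx, gy) > threshold


def front_weighted_mse(pred, target, penalty=10.0, threshold=0.05):
    """MSE with an extra weight on transient (front) cells of the target field."""
    weights = np.ones_like(target)
    weights[transient_mask(target, threshold)] = penalty
    return float(np.mean(weights * (pred - target) ** 2))


target = np.zeros((20, 20))
target[:, :10] = 0.8                 # sharp CO2 front between columns 9 and 10

err_at_front = target.copy()
err_at_front[:, 9] += 0.1            # error located on the front
err_far = target.copy()
err_far[:, 2] += 0.1                 # same error magnitude, far behind the front

print(front_weighted_mse(err_at_front, target), front_weighted_mse(err_far, target))
```

Both predictions have the same plain MSE, but the weighted loss is ten times larger when the error sits on the front, which pushes training toward resolving the plume boundary.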

A Gradient-based Deep Neural Network Model for Simulating Multiphase Flow in Porous Media

Apr 30, 2021

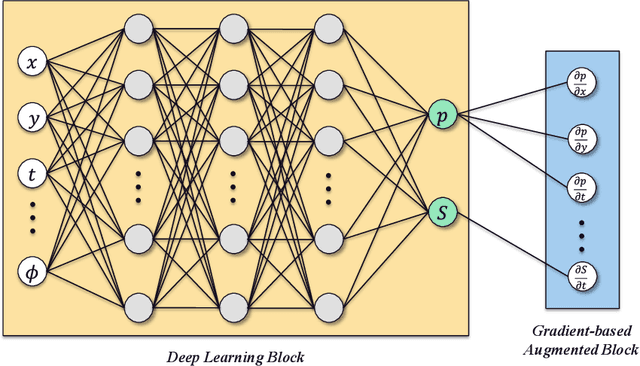

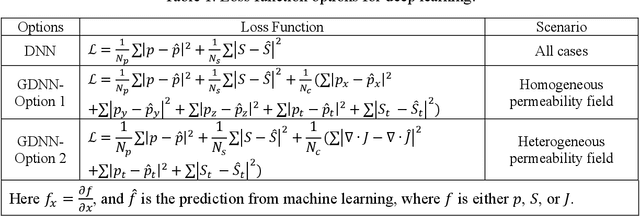

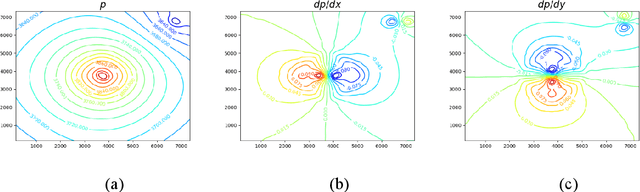

Abstract: Simulation of multiphase flow in porous media is crucial for the effective management of subsurface energy and environment-related activities. The numerical simulators used for modeling such processes rely on spatial and temporal discretization of the governing partial differential equations (PDEs) into algebraic systems via numerical methods. These simulators usually require dedicated software development and maintenance, and suffer from low efficiency in terms of runtime and memory. Therefore, developing cost-effective, data-driven models can become a practical choice, since deep learning approaches are considered to be universal approximators. In this paper, we describe a gradient-based deep neural network (GDNN) constrained by the physics of multiphase flow in porous media. We tackle the nonlinearity of flow in porous media induced by rock heterogeneity, fluid properties, and fluid-rock interactions by decomposing the nonlinear PDEs into a dictionary of elementary differential operators. We use a combination of operators to handle rock spatial heterogeneity and fluid flow by advection. Since the augmented differential operators are inherently related to the physics of fluid flow, we treat them as first-principles prior knowledge to regularize GDNN training. We use the example of pressure management at geologic CO2 storage sites, where CO2 is injected into saline aquifers and brine is produced, and apply GDNN to construct a predictive model that is trained on physics-based simulation data and emulates the physical process. We demonstrate that GDNN can effectively predict the nonlinear patterns of subsurface responses, including the temporal-spatial evolution of the pressure and saturation plumes. GDNN has great potential to tackle challenging problems governed by highly nonlinear physics and enables the development of data-driven models with higher fidelity.
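To make the idea of a dictionary of elementary differential operators concrete, the sketch below evaluates a few candidate terms with finite differences in NumPy: first derivatives, a heterogeneity-aware diffusion term ∇·(k∇p), and an advection term v·∇s with a Darcy-like velocity v = -k∇p. This operator set, the unit-mobility velocity, and the function name `operator_dictionary` are assumptions for illustration; the abstract does not list GDNN's exact operators or how they enter the training loss.

```python
import numpy as np


def operator_dictionary(p, s, k, h):
    """Evaluate elementary differential operators on 2D fields with grid spacing h.

    p: pressure, s: saturation, k: permeability (all the same shape).
    """
    dp_dx, dp_dy = np.gradient(p, h)
    ds_dx, ds_dy = np.gradient(s, h)
    # Heterogeneity-aware diffusion term: div(k grad p).
    flux_x, flux_y = k * dp_dx, k * dp_dy
    div_k_grad_p = np.gradient(flux_x, h, axis=0) + np.gradient(flux_y, h, axis=1)
    # Advection of saturation by a Darcy-like velocity v = -k grad p: v . grad s.
    advection = -(flux_x * ds_dx + flux_y * ds_dy)
    return {
        "dp_dx": dp_dx, "dp_dy": dp_dy,
        "ds_dx": ds_dx, "ds_dy": ds_dy,
        "div_k_grad_p": div_k_grad_p,
        "advection": advection,
    }


h = 0.1
x, y = np.meshgrid(np.arange(30) * h, np.arange(30) * h, indexing="ij")
# Analytic check: for p = x^2 + y^2 and k = 1, div(k grad p) = 4 everywhere,
# and with s = x the advection term equals -2x.
ops = operator_dictionary(p=x**2 + y**2, s=x, k=np.ones_like(x), h=h)
```

Such operator outputs could be fed to the network as extra input channels or compared against its predictions in a regularization term; both are plausible readings of the abstract rather than confirmed details of GDNN.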
