Berk Cicek

Interpretable Responsibility Sharing as a Heuristic for Task and Motion Planning

Sep 09, 2024

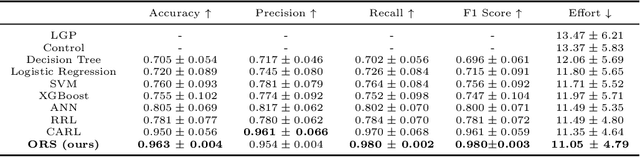

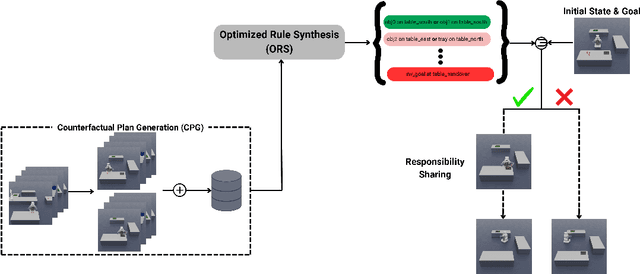

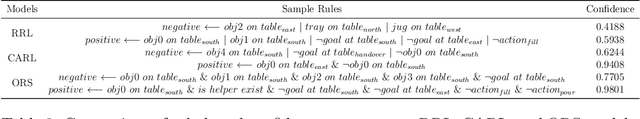

Abstract: This article introduces a novel heuristic for Task and Motion Planning (TAMP) named Interpretable Responsibility Sharing (IRS), which enhances planning efficiency in domestic robots by leveraging human-constructed environments and inherent biases. Utilizing auxiliary objects (e.g., trays and pitchers), which are commonly found in household settings, IRS systematically incorporates these elements to simplify and optimize task execution. The heuristic is rooted in the novel concept of Responsibility Sharing (RS), where auxiliary objects share the task's responsibility with the embodied agent, dividing complex tasks into manageable sub-problems. This division not only reflects human usage patterns but also aids robots in navigating and manipulating within human spaces more effectively. By integrating Optimized Rule Synthesis (ORS) for decision-making, IRS ensures that the use of auxiliary objects is both strategic and context-aware, thereby improving the interpretability and effectiveness of robotic planning. Experiments conducted across various household tasks demonstrate that IRS significantly outperforms traditional methods by reducing the effort required in task execution and enhancing the overall decision-making process. This approach not only aligns with human intuitive methods but also offers a scalable solution adaptable to diverse domestic environments. Code is available at https://github.com/asyncs/IRS.

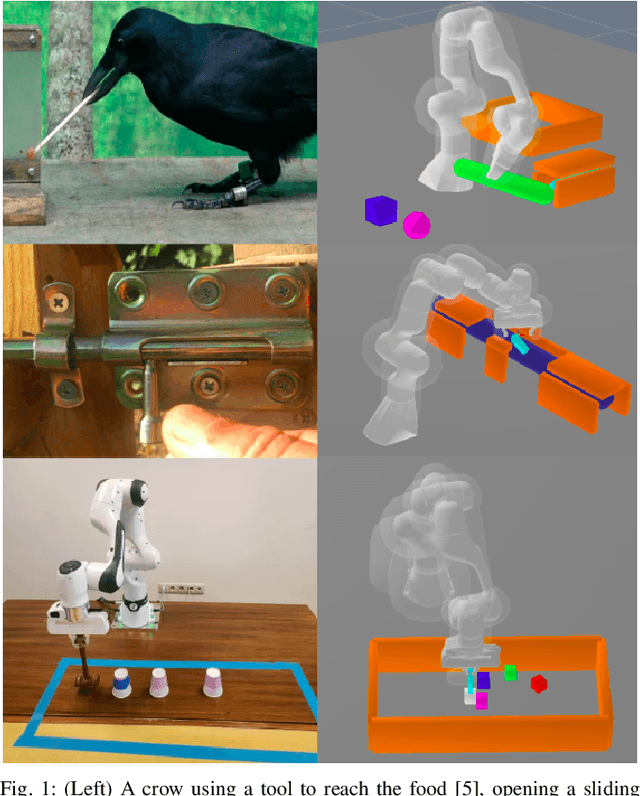

H-MaP: An Iterative and Hybrid Sequential Manipulation Planner

Mar 15, 2024

Abstract: This study introduces the Hybrid Sequential Manipulation Planner (H-MaP), a novel approach that iteratively performs motion planning using contact points and waypoints for complex sequential manipulation tasks in robotics. Combining optimization-based methods for generalizability and sampling-based methods for robustness, H-MaP enhances manipulation planning through active contact mode switches and enables interactions with auxiliary objects and tools. This framework, validated by a series of diverse physical manipulation tasks and real-robot experiments, offers a scalable and adaptable solution for complex real-world applications in robotic manipulation.

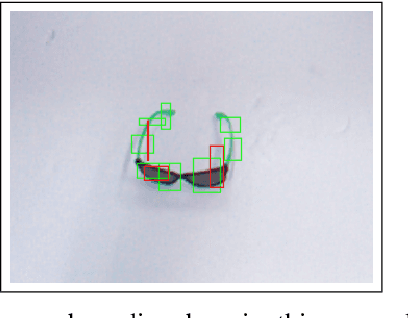

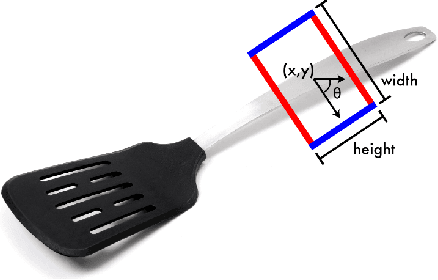

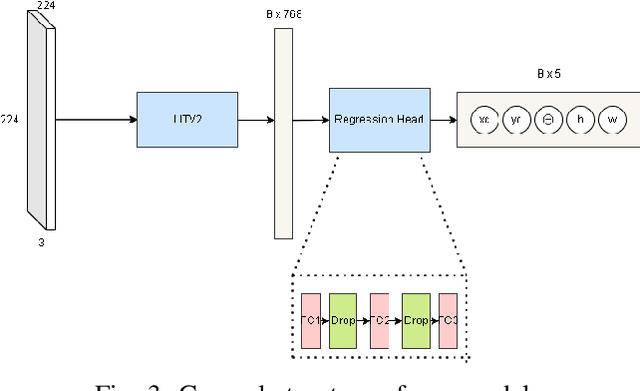

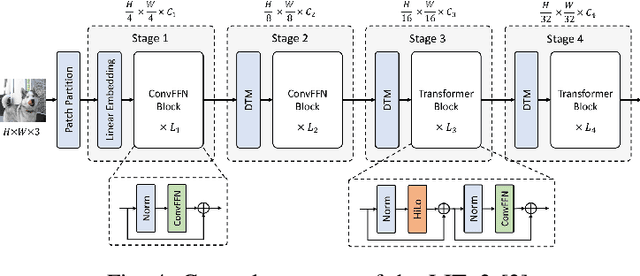

FViT-Grasp: Grasping Objects With Using Fast Vision Transformers

Nov 23, 2023

Abstract: This study addresses the challenge of manipulation, a prominent issue in robotics. We have devised a novel methodology for swiftly and precisely identifying the optimal grasp point for a robot to manipulate an object. Our approach leverages a Fast Vision Transformer (FViT), a type of neural network designed for processing visual data and predicting the most suitable grasp location. Demonstrating state-of-the-art performance in terms of speed while maintaining a high level of accuracy, our method holds promise for potential deployment in real-time robotic grasping applications. We believe that this study provides a baseline for future research in vision-based robotic grasp applications. Its high speed and accuracy bring researchers closer to real-life applications.
