Benyamin Jamialahmadi

Balcony: A Lightweight Approach to Dynamic Inference of Generative Language Models

Mar 06, 2025

Abstract: Deploying large language models (LLMs) in real-world applications is often hindered by strict computational and latency constraints. While dynamic inference offers the flexibility to adjust model behavior based on varying resource budgets, existing methods are frequently limited by hardware inefficiencies or performance degradation. In this paper, we introduce Balcony, a simple yet highly effective framework for depth-based dynamic inference. By freezing the pretrained LLM and inserting additional transformer layers at selected exit points, Balcony maintains the full model's performance while enabling real-time adaptation to different computational budgets. These additional layers are trained using a straightforward self-distillation loss, aligning the sub-model outputs with those of the full model. This approach requires significantly fewer training tokens and tunable parameters, drastically reducing computational costs compared to prior methods. When applied to the LLaMA3-8B model, using only 0.2% of the original pretraining data, Balcony achieves minimal performance degradation while enabling significant speedups. Remarkably, we show that Balcony outperforms state-of-the-art methods such as Flextron and Layerskip as well as other leading compression techniques on multiple models and at various scales, across a variety of benchmarks.
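The self-distillation idea mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the exact form of the loss, so this example assumes a KL divergence between the full model's output distribution and that of an early-exit sub-model, averaged over token positions. The function names `log_softmax` and `self_distillation_loss` are hypothetical and not taken from the paper.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over one list of logits."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]

def self_distillation_loss(full_logits, exit_logits):
    """Assumed loss: KL(full || exit), averaged over token positions.

    full_logits: per-token logits from the frozen full model (teacher).
    exit_logits: per-token logits from the sub-model at an exit point (student).
    """
    total = 0.0
    for teacher_row, student_row in zip(full_logits, exit_logits):
        log_p = log_softmax(teacher_row)
        log_q = log_softmax(student_row)
        total += sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))
    return total / len(full_logits)

# Toy usage: two token positions, vocabulary of three.
teacher = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
student = [[1.5, 0.7, -0.5], [0.0, 0.5, 2.0]]
loss = self_distillation_loss(teacher, student)
```

In this sketch the loss is zero when the sub-model reproduces the full model's distribution exactly and grows as the two diverge. Only the parameters that produce `exit_logits` would receive gradients, since the abstract describes the pretrained model as frozen.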

A Variational Auto-Encoder Approach for Image Transmission in Wireless Channel

Oct 08, 2020

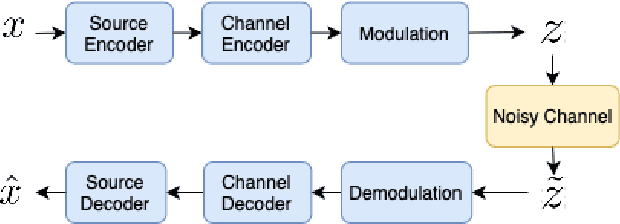

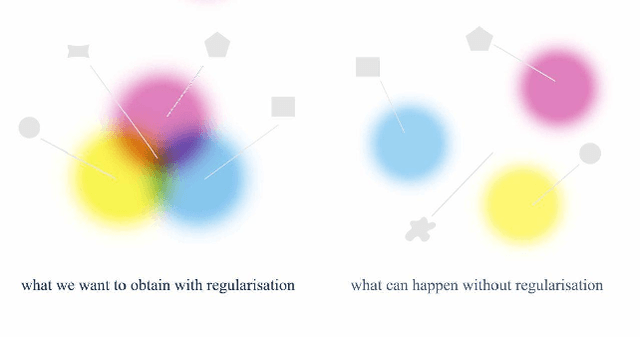

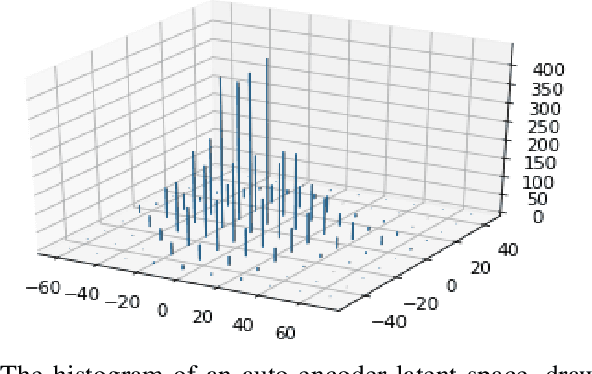

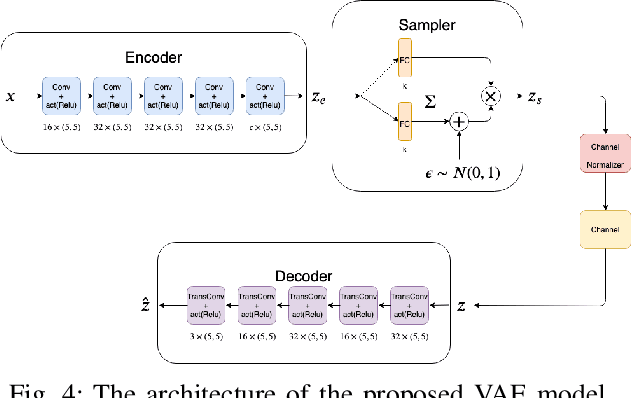

Abstract: Recent advancements in information technology and the widespread use of the Internet have led to easier access to data worldwide. As a result, transmitting data through noisy channels is inevitable. Reducing the size of data and protecting it from corruption by channel noise during transmission are two classical problems in communication and information theory. Recently, inspired by the success of deep neural networks in a range of tasks, many studies have addressed these two problems using deep learning techniques. In this paper, we investigate the performance of variational auto-encoders and compare the results with standard auto-encoders. Our findings suggest that variational auto-encoders are more robust to channel degradation than auto-encoders. Furthermore, we aim to improve the perceptual quality of reconstructed images by using perception-based error metrics as the network's loss function. To this end, we use the structural similarity index (SSIM) as a perception-based metric to optimize the proposed neural network. Our experiments demonstrate that the SSIM metric visually improves the quality of the reconstructed images at the receiver.
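As a rough illustration of using SSIM as a training objective, the sketch below computes a simplified, single-window (global) SSIM between two images given as flat lists of pixel values in [0, 1], and turns it into a loss as 1 - SSIM. This is an assumption for illustration only: standard SSIM is computed over local sliding windows, and the abstract does not specify the exact loss formulation. The function names `global_ssim` and `ssim_loss` are hypothetical; the constants 0.01 and 0.03 are the commonly used SSIM stabilizers.

```python
def global_ssim(x, y, dynamic_range=1.0):
    """Simplified SSIM over the whole image (one window, population statistics)."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

def ssim_loss(original, reconstructed):
    """Assumed loss form: lower is better, zero for a perfect reconstruction."""
    return 1.0 - global_ssim(original, reconstructed)

# Toy usage: a 2x2 image flattened to four pixels, and a degraded copy.
sent = [0.1, 0.5, 0.9, 0.3]
received = [0.2, 0.4, 0.7, 0.5]
loss = ssim_loss(sent, received)
```

In a real training setup this quantity would be computed with a differentiable, windowed implementation so that gradients can flow back through the decoder and encoder.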
