Benedikt Schesch

Facial Image Feature Analysis and its Specialization for Fréchet Distance and Neighborhoods

Jun 26, 2024

Abstract: Assessing distances between images and image datasets is a fundamental task in vision-based research. It remains a challenging open problem in the literature, and despite the criticism it receives, the most ubiquitous method is still the Fréchet Inception Distance. The Inception network is trained on a specific labeled dataset, ImageNet, and this dependence has been the core of the criticism in the most recent research. Improvements were shown by moving to self-supervised learning over ImageNet, leaving the training data domain as an open question. We make that last leap and provide the first analysis of domain-specific feature training and its effects on feature distance, on the widely researched facial image domain. We provide our findings and insights on this domain specialization for Fréchet distance and image neighborhoods, supported by extensive experiments and in-depth user studies.
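As a point of reference for the distance discussed in this abstract, the sketch below computes the standard Fréchet distance between two Gaussians fitted to feature sets, $d^2 = \lVert \mu_a - \mu_b \rVert^2 + \mathrm{Tr}(\Sigma_a + \Sigma_b - 2(\Sigma_a \Sigma_b)^{1/2})$. It is a minimal illustration, not the authors' code: the function name `frechet_distance` is hypothetical, the inputs are assumed to be already-extracted feature arrays of shape `(n_samples, n_features)` with at least two features, and the choice of feature extractor (Inception or a domain-specific network) is left outside the sketch.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Squared Frechet distance between Gaussians fitted to two feature sets.

    Hypothetical helper: feats_a and feats_b are (n_samples, n_features)
    arrays produced by some feature extractor (assumed, not shown here).
    """
    feats_a = np.asarray(feats_a, dtype=float)
    feats_b = np.asarray(feats_b, dtype=float)
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((cov_a cov_b)^{1/2}) is the sum of the square roots of the
    # eigenvalues of cov_a @ cov_b, which are real and non-negative in
    # exact arithmetic; clip small negative values caused by round-off.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)
```

Using the eigenvalues of the covariance product avoids an explicit matrix square root; a production implementation would typically add regularization for near-singular covariances.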

Large Scale Constrained Clustering With Reinforcement Learning

Feb 15, 2024

Abstract: Given a network, allocating resources at the cluster level, rather than at each node, enhances efficiency in resource allocation and usage. In this paper, we study the problem of finding fully connected disjoint clusters that minimize the intra-cluster distances and maximize the number of nodes assigned to the clusters, while also ensuring that no two nodes within a cluster exceed a threshold distance. While the problem can easily be formulated using a binary linear model, traditional combinatorial optimization solvers struggle when dealing with large-scale instances. We propose an approach to solve this constrained clustering problem via reinforcement learning. Our method involves training an agent to generate both feasible and (near) optimal solutions. The agent learns problem-specific heuristics, tailored to the instances encountered in this task. In the results section, we show that our algorithm finds near-optimal solutions, even for large-scale instances.
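To make the constraint and the two objectives in this abstract concrete, the sketch below checks a candidate cluster assignment. It is only an illustration under stated assumptions: nodes are treated as points with Euclidean distances (the paper works on a network, where the distance may be defined differently), the function name `evaluate_clustering` and the `-1` label for unassigned nodes are hypothetical, and nothing here reflects the reinforcement learning method itself.

```python
import numpy as np

def evaluate_clustering(points, labels, max_dist):
    """Return (feasible, n_assigned, intra_cost) for a candidate assignment.

    Hypothetical helper. labels[i] is a cluster id >= 0, or -1 if node i
    is left unassigned. Euclidean distance is an assumption of this sketch.
    """
    points = np.asarray(points, dtype=float)
    labels = np.asarray(labels)
    feasible, intra_cost = True, 0.0
    for c in np.unique(labels[labels >= 0]):
        members = points[labels == c]
        # All pairwise distances inside cluster c.
        d = np.linalg.norm(members[:, None, :] - members[None, :, :], axis=-1)
        # Feasibility: no pair within a cluster may exceed the threshold.
        if d.max() > max_dist:
            feasible = False
        # Intra-cluster cost: sum over unordered pairs (upper triangle).
        intra_cost += d[np.triu_indices(len(members), k=1)].sum()
    return feasible, int((labels >= 0).sum()), float(intra_cost)
```

A solver or learned agent would search over `labels` to keep `feasible` true while trading off a large `n_assigned` against a small `intra_cost`.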

Agent-based Graph Neural Networks

Jun 22, 2022

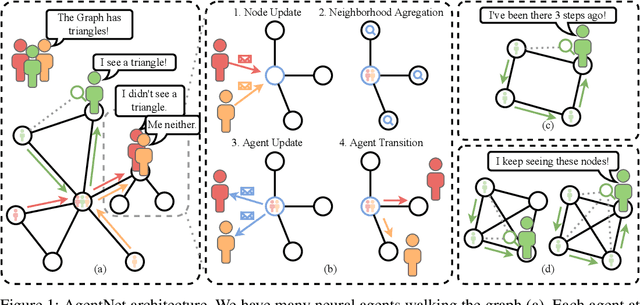

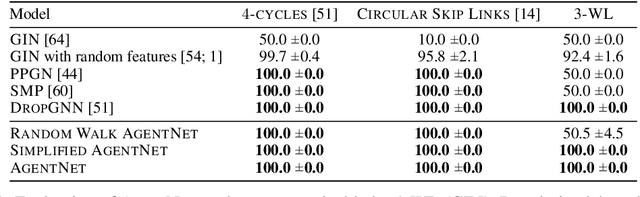

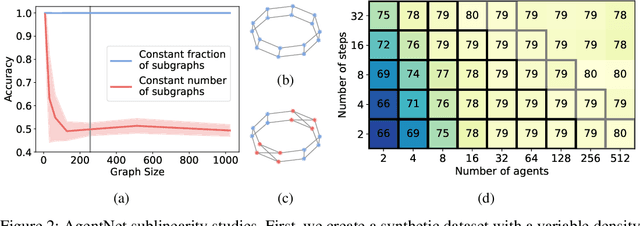

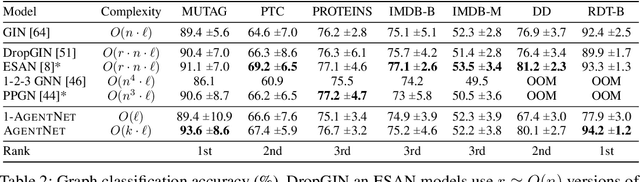

Abstract: We present a novel graph neural network we call AgentNet, which is designed specifically for graph-level tasks. AgentNet is inspired by sublinear algorithms, featuring a computational complexity that is independent of the graph size. The architecture of AgentNet differs fundamentally from the architectures of known graph neural networks. In AgentNet, some trained neural agents intelligently walk the graph, and then collectively decide on the output. We provide an extensive theoretical analysis of AgentNet: We show that the agents can learn to systematically explore their neighborhood and that AgentNet can distinguish some structures that are even indistinguishable by 3-WL. Moreover, AgentNet is able to separate any two graphs which are sufficiently different in terms of subgraphs. We confirm these theoretical results with synthetic experiments on hard-to-distinguish graphs and real-world graph classification tasks. In both cases, we compare favorably not only to standard GNNs but also to computationally more expensive GNN extensions.
