Ben Haines

Quantity over Quality: Training an AV Motion Planner with Large Scale Commodity Vision Data

Mar 03, 2022

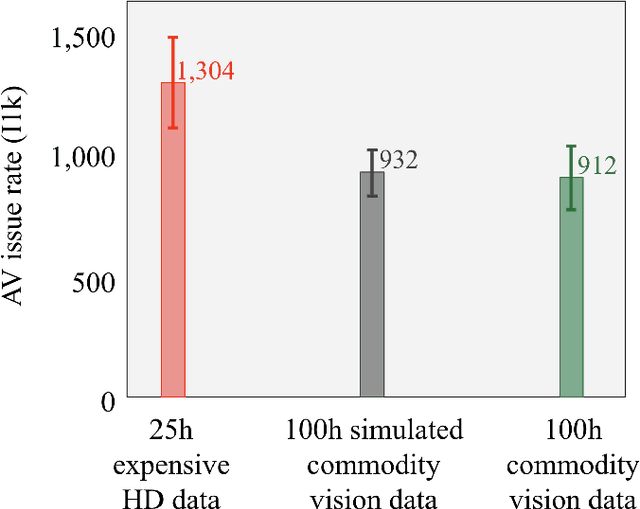

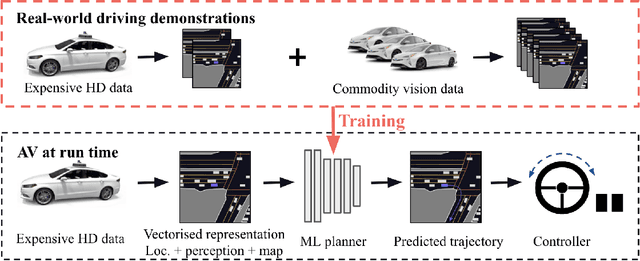

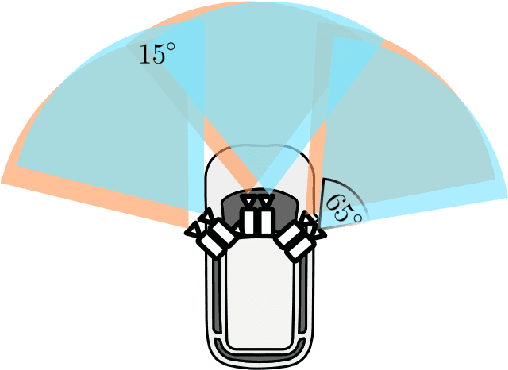

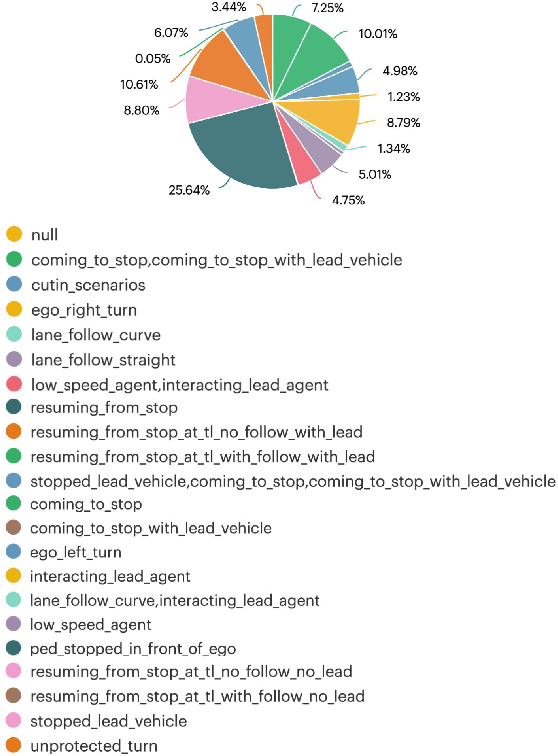

Abstract: As the Autonomous Vehicle (AV) industry shifts towards Autonomy 2.0, the performance of self-driving systems increasingly depends on large quantities of expert driving demonstrations. However, collecting this demonstration data typically requires expensive HD sensor suites (LiDAR + RADAR + cameras), which quickly becomes financially infeasible at the scales required. This motivates collecting data with commodity vision sensors, which are an order of magnitude cheaper than HD sensor suites but offer lower fidelity. If such data could be used to train an AV motion planner, observing the 'long tail' of driving events would become a financially viable strategy. As our main contribution, we show that it is possible to train a high-performance motion planner on commodity vision data that outperforms planners trained on HD-sensor data, at a fraction of the cost. We do this by comparing autonomy system performance when training on these two sensor configurations, and by showing that increased quantity can compensate for lower sensor fidelity: a planner trained on 100h of commodity vision data outperforms one trained on 25h of expensive HD data. We also share the technical challenges we had to tackle to make this work. To the best of our knowledge, we are the first to demonstrate that this is possible using real-world data.
