Behzad Sadrfaridpour

Detecting and Counting Oysters

May 20, 2021

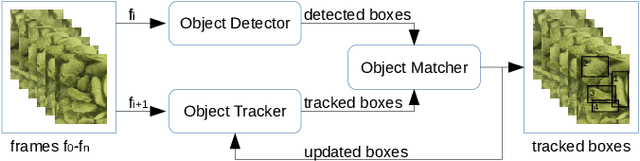

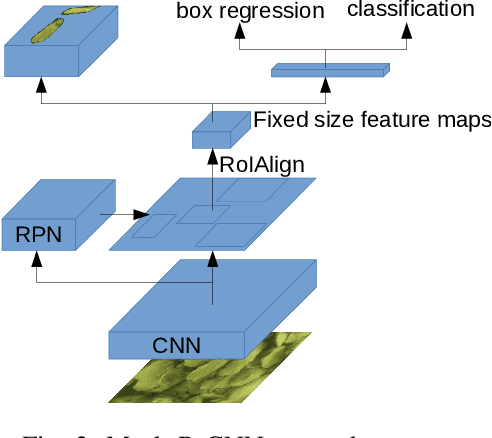

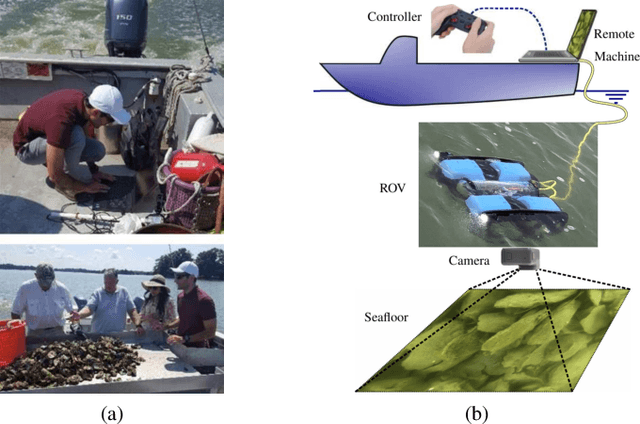

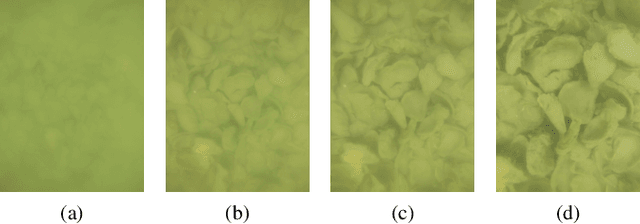

Abstract: Oysters are an essential species in the Chesapeake Bay ecosystem. As filter feeders, they are considered the vacuum cleaners of the Chesapeake Bay and can considerably improve the Bay's water quality. Many oyster restoration programs have been initiated in the past decades and continue to date. Advancements in robotics and artificial intelligence have opened new opportunities for aquaculture. Drone-like ROVs with high maneuverability are becoming more affordable and, if equipped with proper sensors, can monitor the oysters. This work presents our efforts in videography of the Chesapeake Bay bottom using an ROV, constructing a database of oysters, implementing Mask R-CNN to detect oysters, and counting them in a video by tracking them.
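The abstract does not say which tracker is used for counting, so the following is only a minimal sketch of the general idea of counting by tracking. It assumes per-frame detections are already available as bounding boxes `(x1, y1, x2, y2)` and links them across consecutive frames with a greedy intersection-over-union (IoU) match. The function names and the `iou_threshold` value are hypothetical and are not taken from the paper.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def count_by_tracking(frames, iou_threshold=0.3):
    """Count distinct objects in a sequence of per-frame detections.

    frames: list of frames, each a list of boxes (x1, y1, x2, y2).
    A detection that overlaps a box from the previous frame by at least
    iou_threshold continues that track; otherwise it starts a new track
    and increments the count. The threshold is an illustrative assumption.
    """
    previous = []  # boxes of the tracks seen in the previous frame
    total = 0
    for boxes in frames:
        unmatched = list(previous)
        for box in boxes:
            best = max(unmatched, key=lambda t: iou(t, box), default=None)
            if best is not None and iou(best, box) >= iou_threshold:
                unmatched.remove(best)  # track continues, no new count
            else:
                total += 1  # no overlapping track: a new object
        previous = list(boxes)
    return total


# Hypothetical detections: one object drifting right, a second appearing later.
frames = [
    [(0, 0, 10, 10)],
    [(1, 0, 11, 10), (50, 50, 60, 60)],
    [(2, 0, 12, 10), (51, 50, 61, 60)],
]
count = count_by_tracking(frames)
```

This sketch drops a track as soon as its object is missed in a single frame, so a flickering detection would be counted twice. A practical tracker would typically keep lost tracks alive for a few frames before retiring them.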

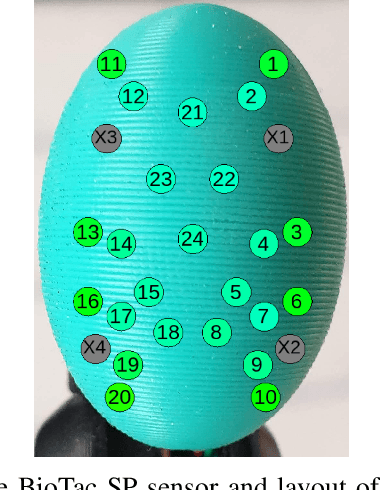

Grasping in the Dark: Compliant Grasping using Shadow Dexterous Hand and BioTac Tactile Sensor

Nov 02, 2020

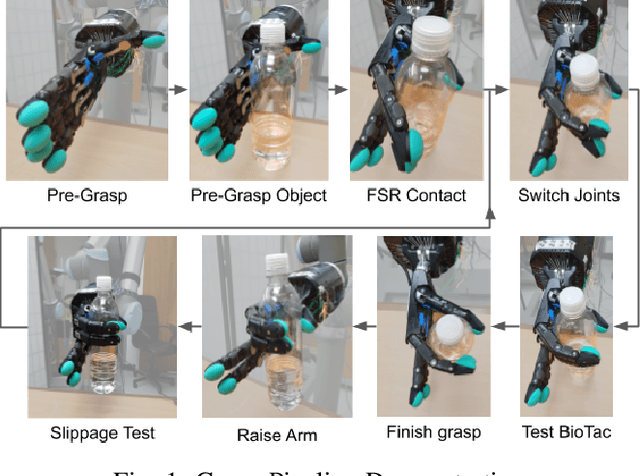

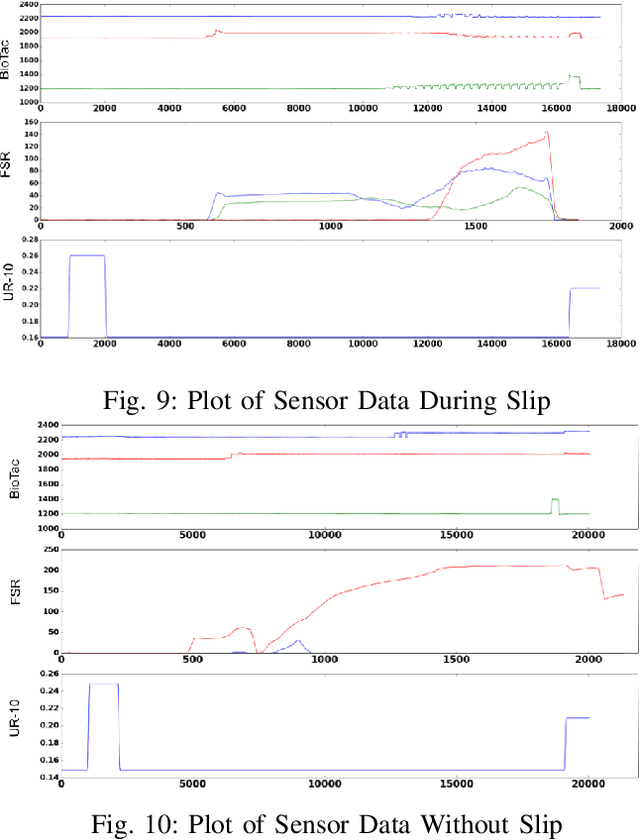

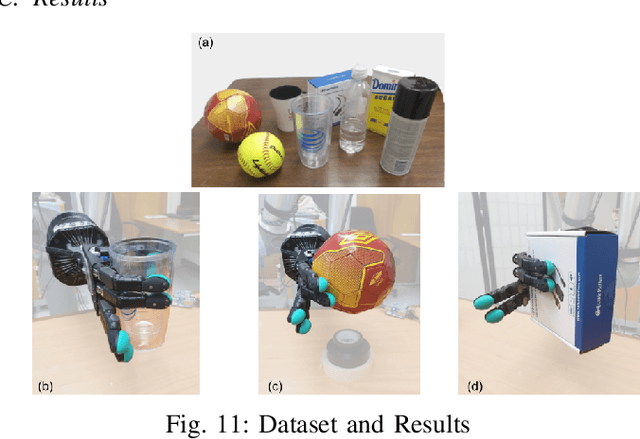

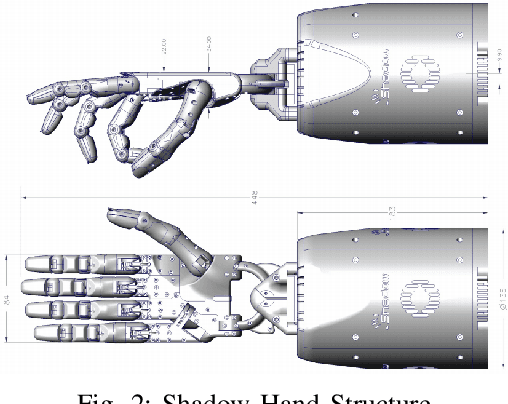

Abstract: When it comes to grasping and manipulating objects, the human hand is the benchmark on which we base the design and modeling of grasping strategies and algorithms. Imitating the human hand in robotic end-effectors, especially in scenarios where visual input is limited or absent, is extremely challenging. In this paper we present an adaptive, compliant grasping strategy that uses only tactile feedback. The proposed algorithm can grasp objects of varying shapes, sizes, and weights without a priori knowledge of the objects. The proof-of-concept algorithm presented here uses classical control formulations for closed-loop grasping. The algorithm has been experimentally validated using a Shadow Dexterous Hand equipped with BioTac tactile sensors. We demonstrate the success of our grasping policies on a variety of objects, such as bottles, boxes, and balls.

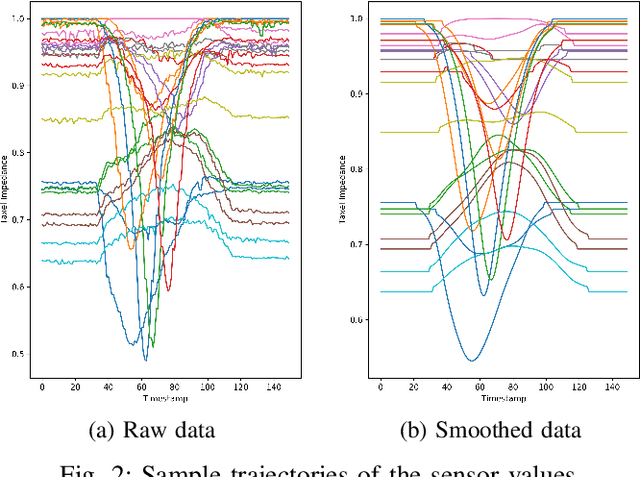

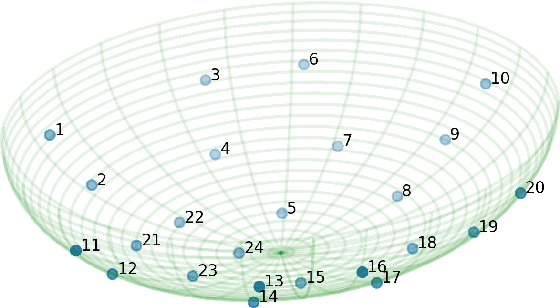

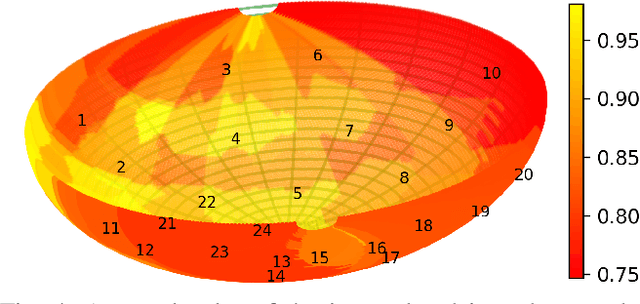

Computational Tactile Flow for Anthropomorphic Grippers

Mar 19, 2019

Abstract: Grasping objects requires tight integration between visual and tactile feedback. However, there is an inherent difference in the scales at which these two input modalities operate. It is thus necessary to analyze tactile feedback in isolation in order to gain information about the surface the end-effector is operating on, so that more fine-grained features may be extracted from the surroundings. Inspired by the concept of tactile flow in humans, we present the computational tactile flow to improve the analysis of tactile feedback in robots, using a Shadow Dexterous Hand. In the computational tactile flow model, given a sequence of pressure values from the tactile sensors, we define a virtual surface for the pressure values and define the tactile flow as the optical flow of this surface. We provide case studies that demonstrate how the computational tactile flow maps reveal information on the direction of motion and 3D structure of the surface, as well as feedback regarding the action being performed by the robot.
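The idea of treating pressure readings as a surface and taking its optical flow can be illustrated with a small sketch. It assumes the sensor readings have already been resampled onto a regular 2D grid (the abstract does not describe how the virtual surface is built) and it estimates a single displacement for the whole grid with a Lucas-Kanade-style least-squares fit. The function name and the synthetic Gaussian contact patch are hypothetical, and this is not necessarily the flow method used in the paper.

```python
import numpy as np


def global_tactile_flow(prev, curr):
    """Estimate one (u, v) displacement between two pressure grids.

    Solves Ix*u + Iy*v + It = 0 in the least-squares sense over all
    grid cells, where Ix, Iy are spatial gradients and It is the
    temporal difference between the two grids.
    """
    prev = np.asarray(prev, dtype=float)
    curr = np.asarray(curr, dtype=float)
    avg = 0.5 * (prev + curr)
    grad_y, grad_x = np.gradient(avg)  # axis 0 is rows (y), axis 1 is columns (x)
    grad_t = curr - prev
    A = np.stack([grad_x.ravel(), grad_y.ravel()], axis=1)
    b = -grad_t.ravel()
    flow, *_ = np.linalg.lstsq(A, b, rcond=None)
    return flow  # array [u, v] in grid cells per frame


def pressure_blob(cx, cy, size=16, sigma=2.0):
    """Synthetic contact patch: a Gaussian bump centred at (cx, cy)."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))


# A contact patch sliding one cell in the +x direction between two frames.
u, v = global_tactile_flow(pressure_blob(7, 7), pressure_blob(8, 7))
```

Here `u` comes out close to one cell per frame and `v` close to zero, which matches the direction in which the synthetic patch moved. A dense flow field, one vector per grid cell, would be needed to recover the local surface structure the abstract refers to.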
