Atanas Gotchev

Wavefront Coding for Accommodation-Invariant Near-Eye Displays

Oct 14, 2025

Abstract:We present a new computational near-eye display method that addresses the vergence-accommodation conflict problem in stereoscopic displays through accommodation-invariance. Our system integrates a refractive lens eyepiece with a novel wavefront coding diffractive optical element, operating in tandem with a pre-processing convolutional neural network. We employ end-to-end learning to jointly optimize the wavefront-coding optics and the image pre-processing module. To implement this approach, we develop a differentiable retinal image formation model that accounts for limiting aperture and chromatic aberrations introduced by the eye optics. We further integrate the neural transfer function and the contrast sensitivity function into the loss model to account for related perceptual effects. To tackle off-axis distortions, we incorporate position dependency into the pre-processing module. In addition to conducting rigorous analysis based on simulations, we also fabricate the designed diffractive optical element and build a benchtop setup, demonstrating accommodation-invariance for depth ranges of up to four diopters.
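For a sense of scale, a diopter is the reciprocal of the focus distance in metres, so a four-diopter range could span, for example, optical infinity (0 D) down to 0.25 m (4 D). The short Python sketch below only illustrates this unit conversion; the function name and the sample values are illustrative assumptions and are not taken from the paper.

```python
import math


def focus_distance_m(diopters: float) -> float:
    """Convert an accommodation state in diopters to a focus distance in metres.

    Hypothetical helper for illustration only. 0 D is treated as optical infinity.
    """
    if diopters < 0:
        raise ValueError("this sketch only handles non-negative diopter values")
    return math.inf if diopters == 0 else 1.0 / diopters


if __name__ == "__main__":
    # Sample accommodation states chosen for illustration (assumed values).
    for d in (0.0, 1.0, 2.0, 4.0):
        print(f"{d:.1f} D -> {focus_distance_m(d)} m")
```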

Design and manufacturing of an optimized retro reflective marker for photogrammetric pose estimation in ITER

May 11, 2022

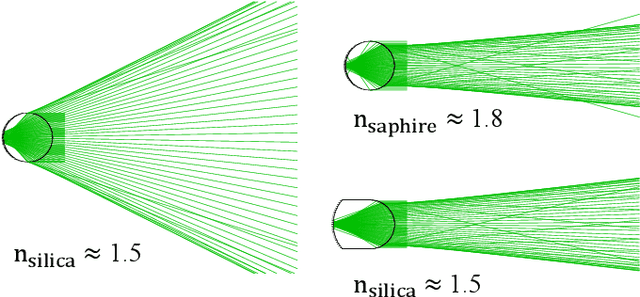

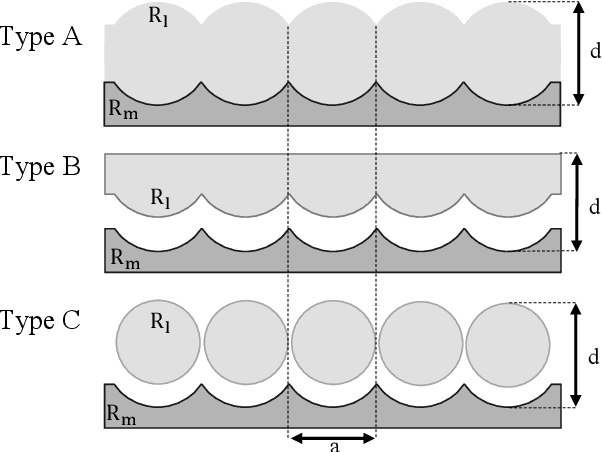

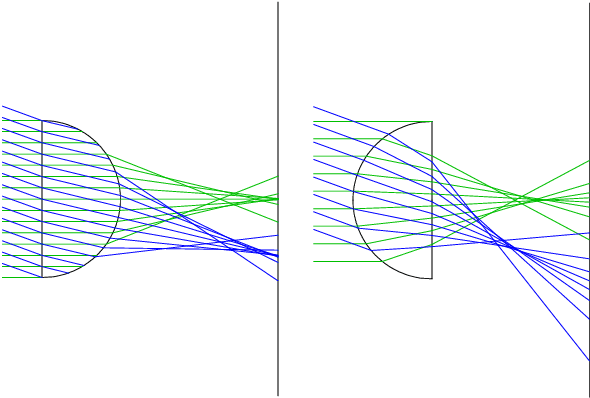

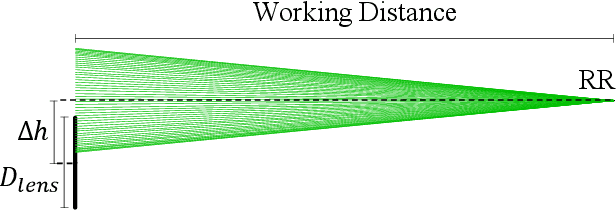

Abstract:Retro reflective markers can remarkably aid photogrammetry tasks in challenging visual environments. They have been demonstrated to be key enablers of pose estimation for remote handling in ITER. However, the strict requirements of the ITER environment have so far markedly constrained the design of such elements and limited their performance. In this work, we identify several retro reflector designs based on the cat's eye principle that are applicable to the ITER use case and propose a methodology for optimizing their performance. We circumvent some of the environmental constraints by changing the curvature radius and distance to the reflective surface. We model, manufacture and test a marker that fulfils all the application requirements while achieving a gain of around 100% in performance over the previous solution in the targeted working range.

Optical modelling of accommodative light field display system and prediction of human eye responses

Apr 02, 2022

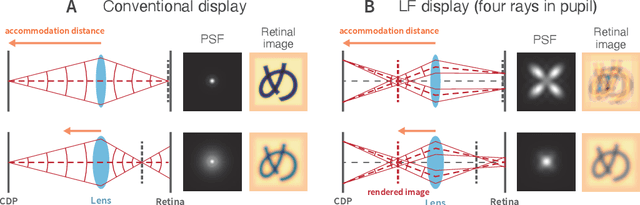

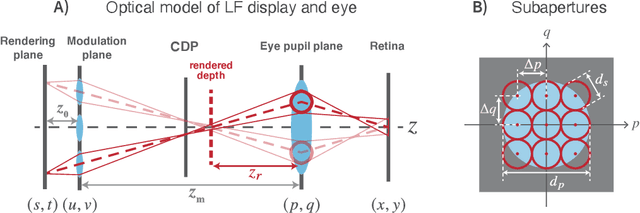

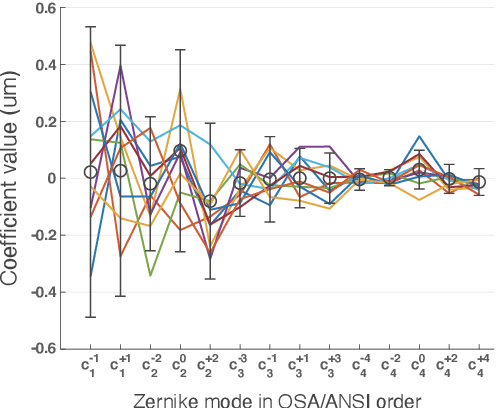

Abstract:The spatio-angular resolution of a light field (LF) display is a crucial factor for delivering adequate spatial image quality and eliciting an accommodation response. Previous studies have modelled retinal image formation with an LF display and evaluated whether accommodation would be evoked correctly. The models were mostly based on ray-tracing and a schematic eye model, which are computationally complex and represent the behaviour of the human eye population inaccurately. We propose an efficient wave-optics-based framework to model the human eye and a general LF display. With the model, we simulated the retinal point spread function (PSF) of a point rendered by an LF display at various depths to characterise the retinal image quality. Additionally, accommodation responses to rendered LF images were estimated by computing the visual Strehl ratio based on the optical transfer function (VSOTF) from the PSFs. In the simulation, we assumed an ideal LF display that had an infinite spatial resolution and was free from optical aberrations. We tested images rendered at depths of 0 to 4 dioptres with angular resolutions of up to 4x4 viewpoints within a pupil. The simulation predicted small and constant accommodation errors, which contradict the findings of previous studies. An evaluation of the optical resolution of the rendered retinal image suggested a trade-off between the maximum resolution achievable and the depth range of a rendered image where in-focus resolution is kept high. The proposed framework can be used to evaluate the upper bound of the optical performance of an LF display for realistically aberrated eyes, which may help to find an optimal spatio-angular resolution required to render a high-quality 3D scene.

Bi-directional Loop Closure for Visual SLAM

Apr 01, 2022

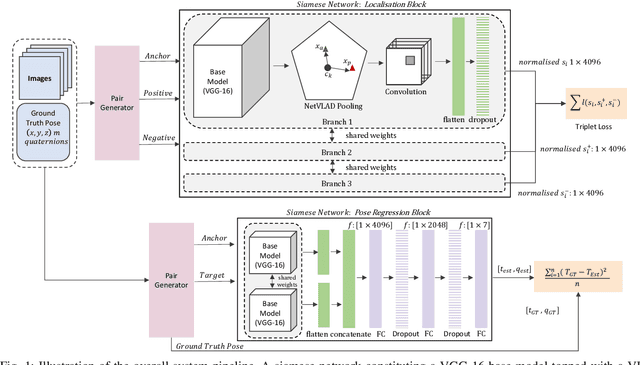

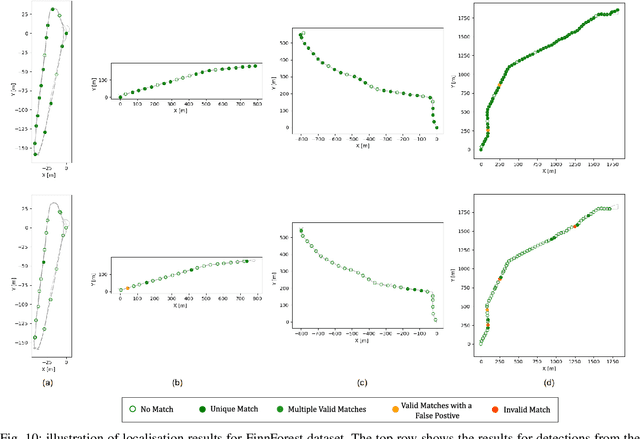

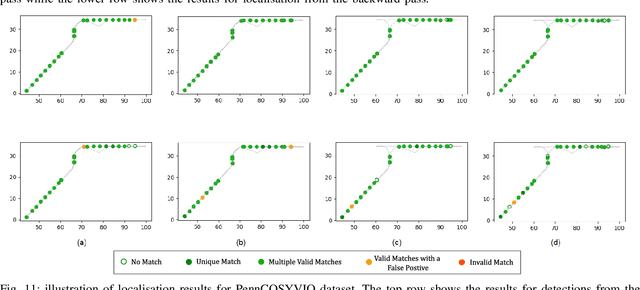

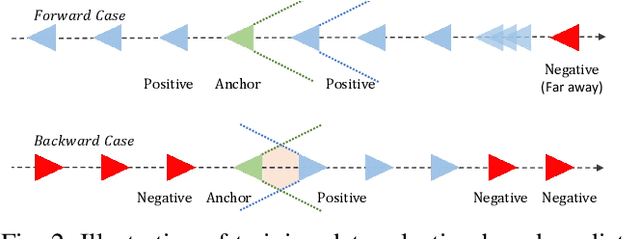

Abstract:A key functional block of a visual navigation system for intelligent autonomous vehicles is loop closure detection and subsequent relocalisation. State-of-the-art methods still approach the problem as uni-directional along the direction of the previous motion. As a result, most of the methods fail in the absence of a significantly similar overlap of perspectives. In this study, we propose an approach for bi-directional loop closure. This will, for the first time, provide us with the capability to relocalise to a location even when traveling in the opposite direction, thus significantly reducing long-term odometry drift in the absence of a direct loop. We present a technique to select training data from large datasets in order to make them usable for the bi-directional problem. The data is used to train and validate two different CNN architectures for loop closure detection and subsequent regression of 6-DOF camera pose between the views in an end-to-end manner. The outcome is of considerable benefit in real-world scenarios that do not offer direct loop closure opportunities. We provide a rigorous empirical comparison against other established approaches and evaluate our method on both outdoor and indoor data from the FinnForest dataset and PennCOSYVIO dataset.

DRST: Deep Residual Shearlet Transform for Densely Sampled Light Field Reconstruction

Mar 19, 2020

Abstract:The Image-Based Rendering (IBR) approach using Shearlet Transform (ST) is one of the most effective methods for Densely-Sampled Light Field (DSLF) reconstruction. The ST-based DSLF reconstruction typically relies on an iterative thresholding algorithm for Epipolar-Plane Image (EPI) sparse regularization in shearlet domain, involving dozens of transformations between image domain and shearlet domain, which are in general time-consuming. To overcome this limitation, a novel learning-based ST approach, referred to as Deep Residual Shearlet Transform (DRST), is proposed in this paper. Specifically, for an input sparsely-sampled EPI, DRST employs a deep fully Convolutional Neural Network (CNN) to predict the residuals of the shearlet coefficients in shearlet domain in order to reconstruct a densely-sampled EPI in image domain. The DRST network is trained on synthetic Sparsely-Sampled Light Field (SSLF) data only by leveraging elaborately-designed masks. Experimental results on three challenging real-world light field evaluation datasets with varying moderate disparity ranges (8-16 pixels) demonstrate the superiority of the proposed learning-based DRST approach over the non-learning-based ST method for DSLF reconstruction. Moreover, DRST provides at least a 2.4x speedup over ST.

Learning Wavefront Coding for Extended Depth of Field Imaging

Dec 31, 2019

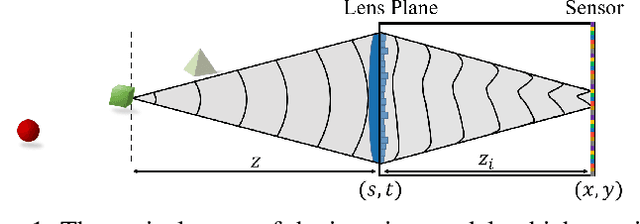

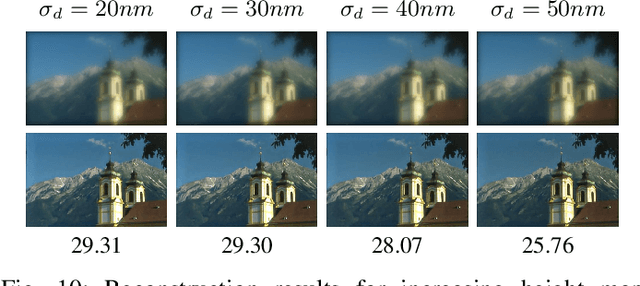

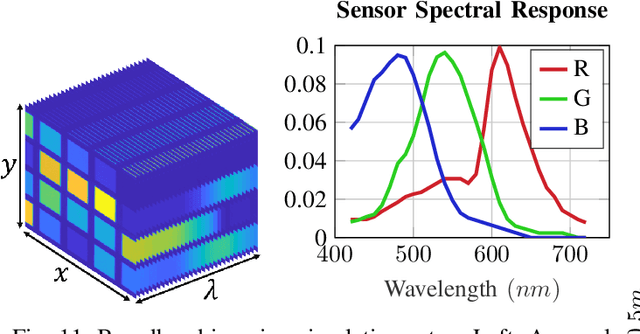

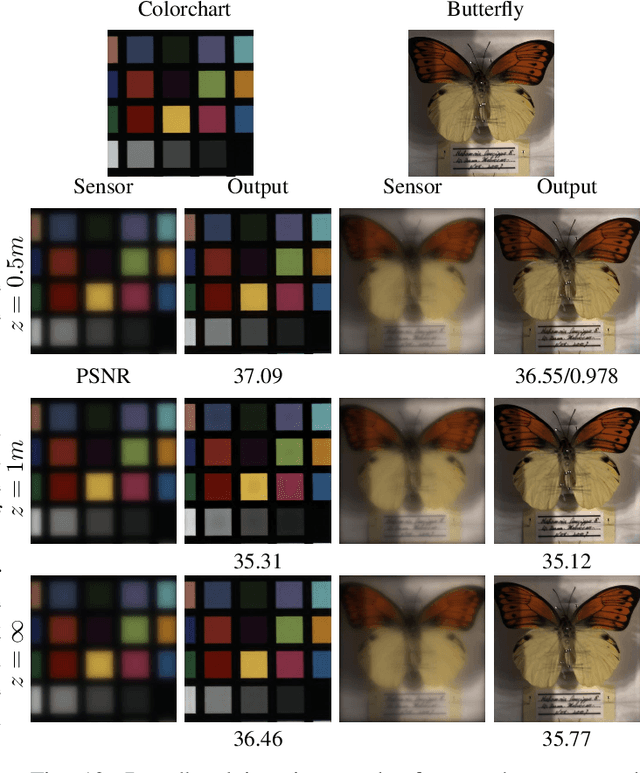

Abstract:The depth of field constitutes an important quality factor of imaging systems that highly affects the content of the acquired spatial information in the captured images. Extended depth of field (EDoF) imaging is a challenging problem due to its highly ill-posed nature, and it has therefore been extensively addressed in the literature. We propose a computational imaging approach for EDoF, where we employ wavefront coding via a diffractive optical element (DOE) and we achieve deblurring through a convolutional neural network. Thanks to the end-to-end differentiable modeling of optical image formation and computational post-processing, we jointly optimize the optical design, i.e., the DOE, and the deblurring through standard gradient descent methods. Based on the properties of the underlying refractive lens and the desired EDoF range, we provide an analytical expression for the search space of the DOE, which helps in the convergence of the end-to-end network. We achieve superior EDoF imaging performance compared to the state of the art, and we demonstrate results with minimal artifacts in various scenarios, including deep 3D scenes and broadband imaging.

Light Field Reconstruction Using Shearlet Transform

Sep 29, 2015

Abstract:In this article we develop an image-based rendering technique based on light field reconstruction from a limited set of perspective views acquired by cameras. Our approach utilizes sparse representation of epipolar-plane images in a directionally sensitive transform domain, obtained by an adapted discrete shearlet transform. The iterative thresholding algorithm used provides high-quality reconstruction results for relatively big disparities between neighboring views. The generated densely sampled light field of a given 3D scene is thus suitable for all applications which require light field reconstruction. The proposed algorithm compares favorably against state-of-the-art depth-image-based rendering techniques.
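The general idea of reconstruction by iterative thresholding in a sparsifying transform domain can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the authors' algorithm: an orthonormal Haar transform stands in for the shearlet transform, a 1D signal stands in for an epipolar-plane image, and the function names, threshold schedule and sample data are all hypothetical.

```python
import math


def haar_forward(x):
    """Orthonormal multi-level Haar transform (length must be a power of two)."""
    out = list(x)
    n = len(out)
    while n > 1:
        half = n // 2
        avg = [(out[2 * i] + out[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        dif = [(out[2 * i] - out[2 * i + 1]) / math.sqrt(2) for i in range(half)]
        out[:n] = avg + dif
        n = half
    return out


def haar_inverse(c):
    """Inverse of haar_forward."""
    out = list(c)
    n = 1
    while n < len(out):
        merged = []
        for a, d in zip(out[:n], out[n:2 * n]):
            merged += [(a + d) / math.sqrt(2), (a - d) / math.sqrt(2)]
        out[:2 * n] = merged
        n *= 2
    return out


def reconstruct(samples, known, iters=50, t_max=4.0, t_min=0.05):
    """Fill in unknown samples by iterative hard thresholding.

    Each iteration: transform, zero out coefficients below a decaying
    threshold, transform back, then re-impose the known samples.
    """
    x = [s if k else 0.0 for s, k in zip(samples, known)]
    for it in range(iters):
        # Geometrically decaying threshold from t_max to t_min (assumed schedule).
        t = t_max * (t_min / t_max) ** (it / max(iters - 1, 1))
        coeffs = [v if abs(v) >= t else 0.0 for v in haar_forward(x)]
        x = haar_inverse(coeffs)
        # Data consistency: measured samples are never altered.
        x = [s if k else v for s, k, v in zip(samples, known, x)]
    return x


if __name__ == "__main__":
    signal = [1.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0, 5.0]  # illustrative data
    mask = [True, False, True, True, True, True, False, True]
    print(reconstruct(signal, mask))
```

The data-consistency step keeps every measured sample fixed, so only the missing entries are estimated from the sparsity prior.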
