Ashutosh Dutta

Deep Reinforcement Learning to Maximize Arterial Usage during Extreme Congestion

May 16, 2023

Abstract: Collisions, crashes, and other incidents on road networks, if left unmitigated, can cause cascading failures that affect large parts of the system. Timely handling of such extreme congestion scenarios is imperative to reduce emissions, enhance productivity, and improve the quality of urban living. In this work, we propose a Deep Reinforcement Learning (DRL) approach to reduce traffic congestion on multi-lane freeways during extreme congestion. The agent is trained to learn adaptive detouring strategies for congested freeway traffic such that the freeway lanes, along with the nearby local arterial network, are utilized optimally, with rewards being congestion reduction and traffic speed improvement. The experimental setup is a 2.6-mile-long, 4-lane freeway stretch in Shoreline, Washington, USA, with two exits and associated arterial roads, simulated in SUMO (Simulation of Urban MObility), a microscopic, continuous, multi-modal traffic simulator, using parameterized traffic profiles generated from real-world traffic data. Our analysis indicates that DRL-based controllers can improve average traffic speed by 21% compared with taking no action during steep congestion. The study further discusses the trade-offs involved in the choice of reward functions, the impact of human compliance on agent performance, and the feasibility of knowledge transfer from one agent to another to address data sparsity and scaling issues.
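The abstract names two reward signals, congestion reduction and speed improvement, and mentions trade-offs in how the reward is chosen. The following is a minimal sketch of one way such a reward could be combined, not the paper's actual formulation: the function name `detour_reward`, the use of queue length as the congestion measure, the no-action baseline inputs, and the weights are all assumptions made for illustration.

```python
def detour_reward(mean_speed, baseline_speed, queue_len, baseline_queue_len,
                  w_speed=0.5, w_congestion=0.5):
    """Hypothetical reward: weighted sum of two relative improvements.

    mean_speed / baseline_speed: average speed with and without the agent acting.
    queue_len / baseline_queue_len: assumed congestion measure (vehicles queued).
    The weights are illustrative; shifting them trades speed against congestion.
    """
    # Relative speed gain over the no-action baseline (guard against divide-by-zero).
    speed_gain = (mean_speed - baseline_speed) / max(baseline_speed, 1e-6)
    # Relative drop in queued vehicles compared with the no-action baseline.
    congestion_drop = (baseline_queue_len - queue_len) / max(baseline_queue_len, 1e-6)
    return w_speed * speed_gain + w_congestion * congestion_drop


# Example call: speed up from 10.0 to 12.1, queue down from 100 to 80 vehicles.
reward = detour_reward(12.1, 10.0, 80, 100)
```

Raising `w_speed` relative to `w_congestion` would push such an agent toward faster flow even if queues stay long, which is the kind of trade-off the study says it examines.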

Deep Reinforcement Learning for Cyber System Defense under Dynamic Adversarial Uncertainties

Feb 03, 2023

Abstract: Developing autonomous cyber system defense strategies and action recommendations for the real world is challenging, and includes characterizing system state uncertainties and attack-defense dynamics. We propose a data-driven deep reinforcement learning (DRL) framework to learn proactive, context-aware defense countermeasures that dynamically adapt to evolving adversarial behaviors while minimizing loss of cyber system operations. A dynamic defense optimization problem is formulated with multiple protective postures against different types of adversaries with varying levels of skill and persistence. A custom simulation environment was developed, and experiments were devised to systematically evaluate the performance of four model-free DRL algorithms against realistic, multi-stage attack sequences. Our results suggest the efficacy of DRL algorithms for proactive cyber defense under multi-stage attack profiles and system uncertainties.

Constraints Satisfiability Driven Reinforcement Learning for Autonomous Cyber Defense

Apr 19, 2021

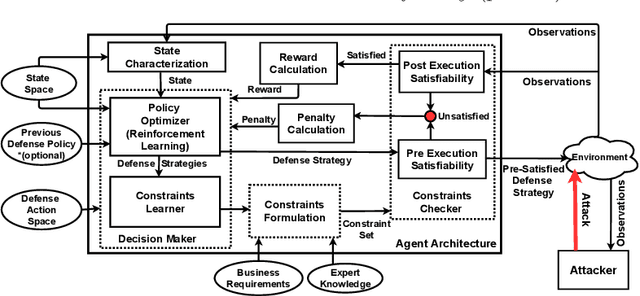

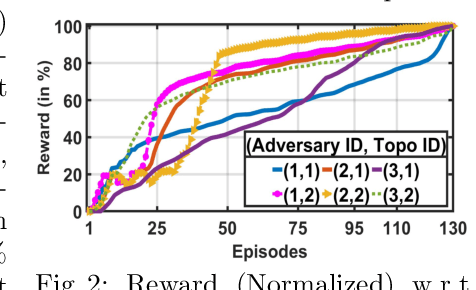

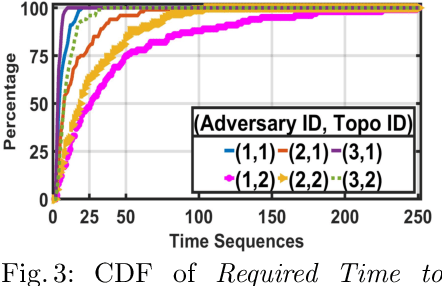

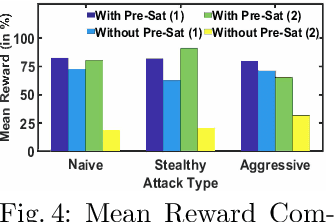

Abstract: With increasing system complexity and attack sophistication, the need for autonomous cyber defense has become evident for cyber and cyber-physical systems (CPSs). Many existing state-of-the-art frameworks either rely on static models with unrealistic assumptions or fail to satisfy system safety and security requirements. In this paper, we present a new hybrid autonomous agent architecture that aims to optimize and verify the defense policies of reinforcement learning (RL) by incorporating constraint verification (using satisfiability modulo theories (SMT)) into the agent's decision loop. The incorporation of SMT not only ensures that safety and security requirements are satisfied, but also provides constant feedback to steer RL decision-making toward safe and effective actions. This approach is critically needed for CPSs that exhibit high risk due to safety or security violations. Our evaluation of the presented approach in a simulated CPS environment shows that the agent learns the optimal policy quickly and defeats diversified attack strategies in 99% of cases.
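The idea of checking constraints inside the agent's decision loop can be illustrated with a small sketch. This is not the paper's architecture: plain Python predicates stand in for the SMT solver, and the names `select_safe_action`, `penalize_rejected`, and the example actions are hypothetical. It only shows the general pattern of filtering candidate actions through requirement checks and feeding rejections back into the value estimates.

```python
import random


def select_safe_action(q_values, state, constraints, epsilon=0.1, rng=random):
    """Epsilon-greedy choice restricted to actions that pass every constraint.

    q_values: dict mapping action name -> estimated value.
    constraints: callables (state, action) -> bool; stand-ins for SMT checks.
    Returns (action, rejected); action is None when nothing is allowed.
    """
    safe, rejected = [], []
    for action in q_values:
        if all(check(state, action) for check in constraints):
            safe.append(action)
        else:
            rejected.append(action)
    if not safe:
        return None, rejected
    if rng.random() < epsilon:
        return rng.choice(safe), rejected       # explore among safe actions only
    return max(safe, key=q_values.get), rejected  # exploit the best safe action


def penalize_rejected(q_values, rejected, penalty=1.0):
    """Feedback step: lower the value of actions that violated a requirement."""
    for action in rejected:
        q_values[action] -= penalty


# Hypothetical requirement: never isolate a host running a critical service.
def keep_service_up(state, action):
    return not (state["critical_service"] and action == "isolate_host")


q = {"isolate_host": 0.9, "patch": 0.5, "noop": 0.1}
action, rejected = select_safe_action(
    q, {"critical_service": True}, [keep_service_up], epsilon=0.0
)
penalize_rejected(q, rejected)
```

In a real system the predicate checks would presumably be replaced by queries to an SMT solver over formal safety and security requirements, which is where the verification guarantees described in the abstract would come from.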
