Asfiya Baig

Domain Adaptation for Object Detection using SE Adaptors and Center Loss

May 25, 2022

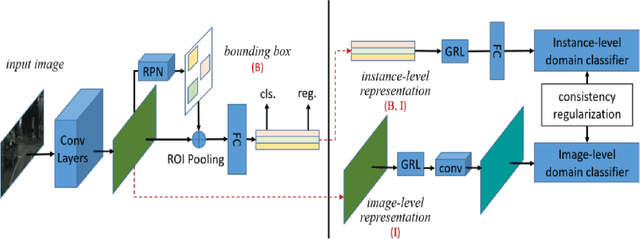

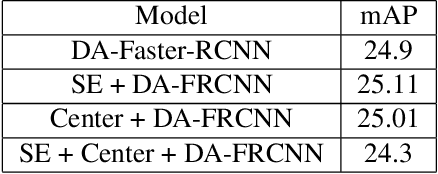

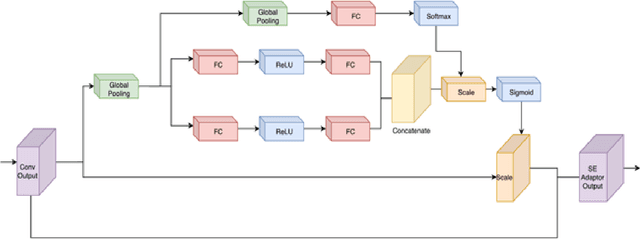

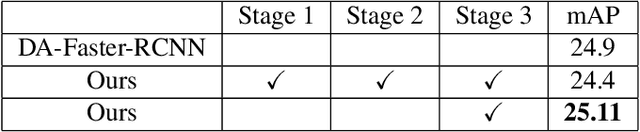

Abstract: Despite growing interest in object detection, very few works address the extremely practical problem of cross-domain robustness, especially for automotive applications. To prevent drops in performance due to domain shift, we introduce an unsupervised domain adaptation method built on Faster R-CNN, with two domain adaptation components addressing the shift at the instance and image levels, respectively, and a consistency regularization between them. We also introduce a family of adaptation layers, called SE Adaptors, that leverage the squeeze-and-excitation mechanism to improve domain attention and thus performance, without requiring any prior knowledge of the new target domain. Finally, we incorporate a center loss in the instance- and image-level representations to reduce intra-class variance. We report all results with Cityscapes as the source domain and Foggy Cityscapes as the target domain, where our method outperforms previous baselines.
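Two of the generic mechanisms named in this abstract can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. `se_block` is one standard squeeze-and-excitation block, and how the SE Adaptors combine several such blocks with domain attention is not shown. `center_loss` and `update_centers` follow the usual center-loss formulation. All function names, weights, and toy values are hypothetical.

```python
import math


def se_block(feature_map, w_reduce, w_expand):
    """Generic squeeze-and-excitation (SE) channel reweighting, biases omitted.

    feature_map: C channels, each a flat list of spatial activations.
    w_reduce: (C/r) x C weights; w_expand: C x (C/r) weights (hypothetical values).
    Returns the rescaled feature map and the per-channel gates.
    """
    # Squeeze: global average pooling yields one descriptor per channel.
    z = [sum(ch) / len(ch) for ch in feature_map]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z))) for row in w_reduce]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: multiply every activation in a channel by that channel's gate.
    scaled = [[g * v for v in ch] for g, ch in zip(gates, feature_map)]
    return scaled, gates


def center_loss(features, labels, centers):
    """Center loss 0.5 * ||x_i - c_{y_i}||^2, averaged over the mini-batch."""
    total = 0.0
    for x, y in zip(features, labels):
        total += sum((xi - ci) ** 2 for xi, ci in zip(x, centers[y]))
    return 0.5 * total / len(features)


def update_centers(features, labels, centers, alpha=0.5):
    """Move each class center toward its samples in the batch.

    delta_j = sum_{i: y_i = j} (c_j - x_i) / (1 + n_j);  c_j <- c_j - alpha * delta_j
    """
    new_centers = {k: list(v) for k, v in centers.items()}
    for cls in set(labels):
        members = [x for x, y in zip(features, labels) if y == cls]
        delta = [sum(c - x[d] for x in members) / (1 + len(members))
                 for d, c in enumerate(centers[cls])]
        new_centers[cls] = [c - alpha * dc for c, dc in zip(centers[cls], delta)]
    return new_centers


# Toy usage: two classes of 2-D features, centers initialized at the origin.
feats = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]]
labels = [0, 0, 1]
centers = {0: [0.0, 0.0], 1: [0.0, 0.0]}
loss_before = center_loss(feats, labels, centers)
centers = update_centers(feats, labels, centers)
loss_after = center_loss(feats, labels, centers)

scaled, gates = se_block([[1.0, 3.0], [0.0, 2.0]], [[0.5, 0.5]], [[1.0], [-1.0]])
```

After one center update, the loss on the same batch drops, because each center moves toward its class's features. That pull is what reduces intra-class variance.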

Structure Aware and Class Balanced 3D Object Detection on nuScenes Dataset

May 25, 2022

Abstract: 3-D object detection is pivotal for autonomous driving. Point-cloud-based methods have become increasingly popular for 3-D object detection, owing to their accurate depth information. nuTonomy's nuScenes dataset greatly extends commonly used datasets such as KITTI in size, sensor modalities, categories, and number of annotations. However, it suffers from severe class imbalance. The Class-balanced Grouping and Sampling (CBGS) paper addresses this issue and proposes an augmentation and sampling strategy. Even so, the localization precision of this model is degraded by the loss of spatial information in the downscaled feature maps. To improve localization accuracy, we propose to enhance the CBGS model with an auxiliary network that makes full use of the structural information in the 3-D point cloud. This detachable auxiliary network is jointly optimized by two point-level supervision tasks, namely foreground segmentation and center estimation. It introduces no extra computation during inference, since it can be detached at test time.
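The two point-level supervisions can be sketched as per-point target generation followed by a combined loss. The abstract names only the tasks, so the following are assumptions made for illustration: axis-aligned boxes (real nuScenes boxes also carry a yaw angle), binary cross-entropy for foreground segmentation, and smooth-L1 for center estimation. The functions `point_targets`, `smooth_l1`, and `auxiliary_loss` are hypothetical names.

```python
import math


def point_targets(points, boxes):
    """Per-point targets for the two auxiliary supervisions.

    points: list of (x, y, z); boxes: list of (cx, cy, cz, dx, dy, dz).
    Assumption: boxes are axis-aligned (yaw ignored) to keep the sketch short.
    Returns (fg_labels, center_offsets); offsets are None for background points.
    """
    fg_labels, offsets = [], []
    for p in points:
        hit = None
        for box in boxes:
            center, size = box[:3], box[3:]
            if all(abs(p[k] - center[k]) <= size[k] / 2 for k in range(3)):
                hit = center
                break
        fg_labels.append(1 if hit is not None else 0)
        # Center estimation target: vector from the point to its box center.
        offsets.append(None if hit is None else tuple(c - q for c, q in zip(hit, p)))
    return fg_labels, offsets


def smooth_l1(diff, beta=1.0):
    a = abs(diff)
    return 0.5 * a * a / beta if a < beta else a - 0.5 * beta


def auxiliary_loss(fg_probs, pred_offsets, fg_labels, target_offsets, eps=1e-7):
    """Foreground segmentation (binary cross-entropy over all points) plus
    center estimation (smooth-L1 over foreground points only)."""
    seg = -sum(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
               for p, y in zip(fg_probs, fg_labels)) / len(fg_labels)
    pairs = [(po, to) for po, to in zip(pred_offsets, target_offsets) if to is not None]
    ctr = sum(smooth_l1(a - b) for po, to in pairs for a, b in zip(po, to))
    return seg + ctr / max(1, len(pairs))


points = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (5.0, 5.0, 5.0)]
boxes = [(0.0, 0.0, 0.0, 2.0, 2.0, 2.0)]
labels, targets = point_targets(points, boxes)
# A perfect prediction should give a loss close to zero.
loss = auxiliary_loss([1.0, 1.0, 0.0],
                      [(0.0, 0.0, 0.0), (-0.5, 0.0, 0.0), (0.0, 0.0, 0.0)],
                      labels, targets)
```

These targets and losses feed only the auxiliary branch during training. Dropping that branch at test time therefore removes this computation entirely, which matches the abstract's claim of no extra inference cost.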

Constrained Motion Planning Networks X

Oct 17, 2020

Abstract: Constrained motion planning is a challenging field of research that seeks computationally efficient methods for finding a collision-free path connecting a given start and goal by traversing the zero-volume constraint manifolds of a given planning problem. Such planning problems come up surprisingly frequently, for example in robot manipulation for daily-life assistive tasks. However, few solutions to constrained motion planning are available, and those that exist suffer from high computational time complexity when finding a path solution on the manifolds. To address this challenge, we present Constrained Motion Planning Networks X (CoMPNetX), a neural planning approach comprising a conditional deep neural generator and discriminator with neural-gradient-based fast projections onto the constraint manifolds. We also introduce neural task and scene representations, conditioned on which CoMPNetX generates implicit manifold configurations that turbo-charge any underlying classical planner, such as sampling-based motion planning methods, for quickly solving complex constrained planning tasks. We show that our method, equipped with any constraint-adherence technique, achieves high success rates and finds path solutions with lower computation times than state-of-the-art traditional path-finding tools on various challenging scenarios.
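Projection onto a constraint manifold can be illustrated with the classical Newton-Raphson projection for a single scalar constraint h(q) = 0. This is a hedged sketch, not CoMPNetX itself. The abstract describes projections driven by neural gradients, whereas here `grad_h` is the analytic gradient of a hypothetical unit-circle constraint. A learned variant would substitute the gradient of a trained constraint model. The names `project_to_manifold`, `circle`, and `circle_grad` are illustrative.

```python
import math


def project_to_manifold(q, h, grad_h, tol=1e-8, max_iters=50):
    """Newton-Raphson projection of configuration q onto {q : h(q) = 0}.

    For a single scalar constraint, the Jacobian pseudo-inverse reduces to
    grad_h / ||grad_h||^2, giving the update q <- q - h(q) * grad_h / ||grad_h||^2.
    Returns (q, converged).
    """
    q = list(q)
    for _ in range(max_iters):
        err = h(q)
        if abs(err) < tol:
            return q, True
        g = grad_h(q)
        norm_sq = sum(gi * gi for gi in g)
        if norm_sq == 0.0:
            break  # degenerate point: the gradient gives no direction to move in
        q = [qi - err * gi / norm_sq for qi, gi in zip(q, g)]
    return q, abs(h(q)) < tol


# Hypothetical example constraint: keep a 2-D configuration on the unit circle.
def circle(q):
    return q[0] ** 2 + q[1] ** 2 - 1.0


def circle_grad(q):
    return [2.0 * q[0], 2.0 * q[1]]


q_proj, ok = project_to_manifold([2.0, 1.0], circle, circle_grad)
```

For this constraint, each step rescales q along its own direction, so the start point (2, 1) lands on the circle at about (0.894, 0.447) within a few iterations. In a sampling-based planner, a routine of this kind would be applied to each sampled or extended configuration before it is added to the tree.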
