Artur S. d'Avila Garcez

Grokking Explained: A Statistical Phenomenon

Feb 03, 2025

Abstract: Grokking, or delayed generalization, is an intriguing learning phenomenon where test set loss decreases sharply only after a model's training set loss has converged. This challenges conventional understanding of the training dynamics in deep learning networks. In this paper, we formalize and investigate grokking, highlighting that a key factor in its emergence is a distribution shift between training and test data. We introduce two synthetic datasets specifically designed to analyze grokking. One dataset examines the impact of limited sampling, and the other investigates transfer learning's role in grokking. By inducing distribution shifts through controlled imbalanced sampling of sub-categories, we systematically reproduce the phenomenon, demonstrating that while small-sampling is strongly associated with grokking, it is not its cause. Instead, small-sampling serves as a convenient mechanism for achieving the necessary distribution shift. We also show that when classes form an equivariant map, grokking can be explained by the model's ability to learn from similar classes or sub-categories. Unlike earlier work suggesting that grokking primarily arises from high regularization and sparse data, we demonstrate that it can also occur with dense data and minimal hyper-parameter tuning. Our findings deepen the understanding of grokking and pave the way for developing better stopping criteria in future training processes.

Continual Reasoning: Non-Monotonic Reasoning in Neurosymbolic AI using Continual Learning

May 03, 2023

Abstract: Despite the extensive investment and impressive recent progress at reasoning by similarity, deep learning continues to struggle with more complex forms of reasoning such as non-monotonic and commonsense reasoning. Non-monotonicity is a property of non-classical reasoning typically seen in commonsense reasoning, whereby a reasoning system is allowed (differently from classical logic) to jump to conclusions which may be retracted later, when new information becomes available. Neural-symbolic systems such as Logic Tensor Networks (LTN) have been shown to be effective at enabling deep neural networks to achieve reasoning capabilities. In this paper, we show that by combining a neural-symbolic system with methods from continual learning, LTN can obtain a higher level of accuracy when addressing non-monotonic reasoning tasks. Continual learning is added to LTNs by adopting a curriculum of learning from knowledge and data with recall. We call this process Continual Reasoning, a new methodology for the application of neural-symbolic systems to reasoning tasks. Continual Reasoning is applied to a prototypical non-monotonic reasoning problem as well as other reasoning examples. Experimentation is conducted to compare and analyze the effects that different curriculum choices may have on overall learning and reasoning results. Results indicate significant improvement on the prototypical non-monotonic reasoning problem and a promising outlook for the proposed approach on statistical relational learning examples.

Accountability in AI: From Principles to Industry-specific Accreditation

Oct 08, 2021

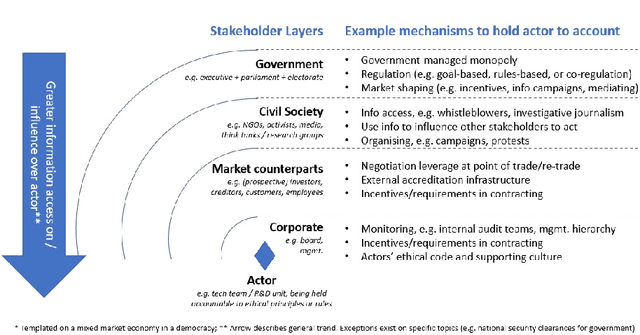

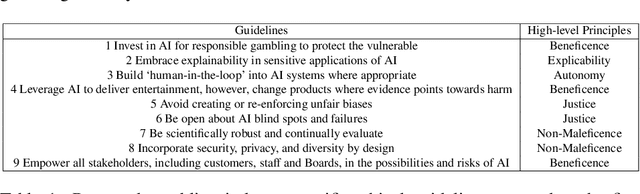

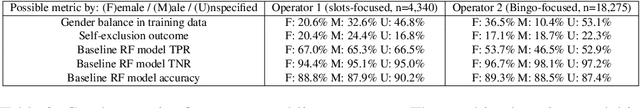

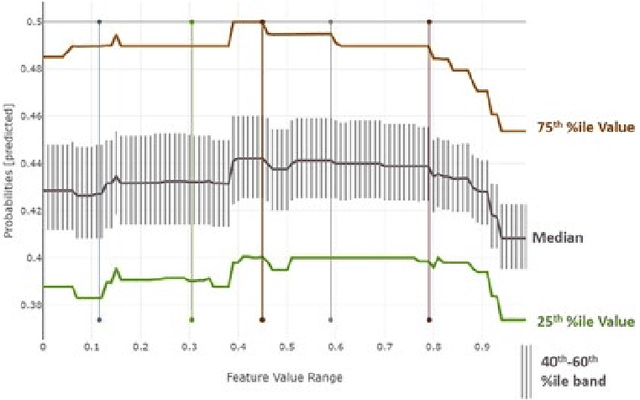

Abstract: Recent AI-related scandals have shed a spotlight on accountability in AI, with increasing public interest and concern. This paper draws on literature from public policy and governance to make two contributions. First, we propose an AI accountability ecosystem as a useful lens on the system, with different stakeholders requiring and contributing to specific accountability mechanisms. We argue that the present ecosystem is unbalanced, with a need for improved transparency via AI explainability and adequate documentation and process formalisation to support internal audit, leading up eventually to external accreditation processes. Second, we use a case study in the gambling sector to illustrate in a subset of the overall ecosystem the need for industry-specific accountability principles and processes. We define and evaluate critically the implementation of key accountability principles in the gambling industry, namely addressing algorithmic bias and model explainability, before concluding and discussing directions for future work based on our findings.

Keywords: Accountability, Explainable AI, Algorithmic Bias, Regulation.

A Hybrid Recurrent Neural Network For Music Transcription

Nov 06, 2014

Abstract: We investigate the problem of incorporating higher-level symbolic score-like information into Automatic Music Transcription (AMT) systems to improve their performance. We use recurrent neural networks (RNNs) and their variants as music language models (MLMs) and present a generative architecture for combining these models with predictions from a frame level acoustic classifier. We also compare different neural network architectures for acoustic modeling. The proposed model computes a distribution over possible output sequences given the acoustic input signal and we present an algorithm for performing a global search for good candidate transcriptions. The performance of the proposed model is evaluated on piano music from the MAPS dataset and we observe that the proposed model consistently outperforms existing transcription methods.
